I.

It is a well-known fact that the gods hate prophets.

False prophets they punish only with ridicule. It’s the true prophets who have to watch out. The gods find some way to make their words come true in the most ironic way possible, the one where knowing the future just makes things worse. The Oracle of Delphi told Croesus he would destroy a great empire, but when he rode out to battle, the empire he destroyed was his own. Zechariah predicted the Israelites would rebel against God; they did so by killing His prophet Zechariah. Jocasta heard a prediction that she would marry her infant son Oedipus, so she left him to die on a mountainside – ensuring that neither recognized the other when he came of age.

Unfortunately for him, Oxford philosopher Toby Ord is a true prophet. He spent years writing his magnum opus The Precipice, warning that humankind was unprepared for various global disasters like pandemics and economic collapses. You can guess what happened next. His book came out March 3, 2020, in the middle of a global pandemic and economic collapse. He couldn’t go on tour to promote it, on account of the pandemic. Nobody was buying books anyway, on account of the economic collapse. All the newspapers and journals and so on that would usually cover an exciting new book were busy covering the pandemic and economic collapse instead. The score is still gods one zillion, prophets zero. So Ord’s PR person asked me to help spread the word, and here we are.

Imagine you were sent back in time to inhabit the body of Adam, primordial ancestor of mankind. It turns out the Garden of Eden has motorcycles, and Eve challenges you to a race. You know motorcycles can be dangerous, but you’re an adrenaline junkie, naturally unafraid of death. And it would help take your mind off that ever-so-tempting Tree of Knowledge. Do you go?

Before you do, consider that you’re not just risking your own life. A fatal injury to either of you would snuff out the entire future of humanity. Every song ever composed, every picture ever painted, every book ever written by all the greatest authors of the millennia would die stillborn. Millions of people would never meet their true loves, or get to raise their children. All of the triumphs and tragedies of humanity, from the conquests of Alexander to the moon landings, would come to nothing if you hit a rock and cracked your skull.

So maybe you shouldn’t motorcycle race. Maybe you shouldn’t even go outside. Maybe you and Eve should hide, panicked, in the safest-looking cave you can find.

Ord argues that 21st-century humanity is in much the same situation as Adam. The potential future of the human race is vast. We have another five billion years until the sun goes out, and 10^100 years until the universe becomes uninhabitable. Even with conservative assumptions, the galaxy could support quintillions of humans. Between Eden and today, the population would have grown five-billion-fold; between today and our galactic future, it could easily grow another five-billion-fold. However awed Adam and Eve would have been when they considered the sheer size of the future that depended on them, we should be equally awed.
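The two five-billion-fold multipliers can be checked with a few lines of Python. This is only a back-of-the-envelope sketch. The starting population of two and the round figure of 10 billion for "today" are assumptions chosen to match the review's numbers, not census data.

```python
# Back-of-the-envelope check of the Eden-to-galaxy population multipliers.
# Assumptions: 2 people in Eden, a round 10 billion "today", and a future
# that grows by the same factor again.
EDEN_POPULATION = 2
TODAY_POPULATION = 10_000_000_000

growth_so_far = TODAY_POPULATION // EDEN_POPULATION    # five-billion-fold
future_population = TODAY_POPULATION * growth_so_far   # another five-billion-fold

QUINTILLION = 10**18
print(f"growth so far: {growth_so_far:,}x")
print(f"future population: {future_population:,} "
      f"(about {future_population / QUINTILLION:.0f} quintillion)")
```

Under these assumptions the future population comes to 5 × 10^19, or about 50 quintillion. That is consistent with the "quintillions of humans" figure.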

So maybe we should do the equivalent of not motorcycling. And that would mean taking existential risks (“x-risks”) – disasters that might completely destroy humanity or permanently ruin its potential – very seriously. Even more seriously than we would take them just based on the fact that we don’t want to die. Maybe we should consider all counterbalancing considerations – “sure, global warming might be bad, but we also need to keep the economy strong!” – to be overwhelmed by the crushing weight of the future.

This is my metaphor, not Ord’s. He uses a different one – the Cuban Missile Crisis. We all remember the Cuban Missile Crisis as a week where humanity teetered on the precipice of destruction, then recovered into a more stable not-immediately-going-to-destroy-itself state. Ord speculates that far-future historians will remember the entire 1900s and 2000s as a sort of centuries-long Cuban Missile Crisis, a crunch time when the world was unusually vulnerable and everyone had to take exactly the right actions to make it through. Or as the namesake precipice, a place where the road to the Glorious Future crosses a narrow rock ledge hanging over a deep abyss.

Ord has other metaphors too, and other arguments. The first sixty pages of The Precipice are a series of arguments and thought experiments intended to drive home the idea that everyone dying would be really bad. Some of them were new to me and quite interesting – for example, an argument that we should keep the Earth safe for future generations as a way of “paying it forward” to our ancestors, who kept it safe for us. At times, all these arguments against allowing the destruction of the human race felt kind of excessive – isn’t there widespread agreement on this point? Even when there is disagreement, Ord doesn’t address it here, banishing counterarguments to various appendices – one arguing against time discounting the value of the future, another arguing against ethical theories that deem future lives irrelevant. This part of the book isn’t trying to get into the intellectual weeds. It’s just saying, again and again, that it would be really bad if we all died.

It’s tempting to psychoanalyze Ord here, with help from passages like this one:

I have not always been focused on protecting our longterm future, coming to the topic only reluctantly. I am a philosopher at Oxford University, specialising in ethics. My earlier work was rooted in the more tangible concerns of global health and global poverty – in how we could best help the worst off. When coming to grips with these issues I felt the need to take my work in ethics beyond the ivory tower. I began advising the World Health Organization, World Bank, and UK government on the ethics of global health. And finding that my own money could do hundreds of times as much good for those in poverty as it could do for me, I made a lifelong pledge to donate at least a tenth of all I earn to help them. I founded a society, Giving What We Can, for those who wanted to join me, and was heartened to see thousands of people come together to pledge more than one billion pounds over our lifetimes to the most effective charities we know of, working on the most important causes. Together, we’ve already been able to transform the lives of thousands of people. And because there are many other ways beyond our donations in which we can help fashion a better world, I helped start a wider movement, known as “effective altruism”, in which people aspire to use prudence and reason to do as much good as possible.

We’re in the Garden of Eden, so we should stop worrying about motorcycling and start worrying about protecting our future. But Ord’s equivalent of “motorcycling” was advising governments and NGOs on how best to fight global poverty. I’m familiar with his past work in this area, and he was amazing at it. He stopped because he decided that protecting the long-term future was more important. What must he think of the rest of us, who aren’t stopping our ordinary, non-saving-thousands-of-people-from-poverty day jobs?

In writing about Ord’s colleagues in the effective altruist movement, I quoted Larissa MacFarquhar on Derek Parfit:

When I was interviewing him for the first time, for instance, we were in the middle of a conversation and suddenly he burst into tears. It was completely unexpected, because we were not talking about anything emotional or personal, as I would define those things. I was quite startled, and as he cried I sat there rewinding our conversation in my head, trying to figure out what had upset him. Later, I asked him about it. It turned out that what had made him cry was the idea of suffering. We had been talking about suffering in the abstract. I found that very striking.

Toby Ord was Derek Parfit’s grad student, and I get sort of the same vibe from him – someone whose reason and emotions are unusually closely aligned. Stalin’s maxim that “one death is a tragedy, a million deaths is a statistic” accurately describes how most of us think. I am not sure it describes Toby Ord. I can’t say confidently that Toby Ord feels exactly a million times more intense emotions when he considers a million deaths than when he considers one death, but the scaling factor is definitely up there. When he considers ten billion deaths, or the deaths of the trillions of people who might inhabit our galactic future, he – well, he’s reduced to writing sixty pages of arguments and metaphors trying to cram into our heads exactly how bad this would be.

II.

The second part of the book is an analysis of specific risks, how concerned we should be about each, and what we can do to prevent them. Ord stays focused on existential risks here. He is not very interested in an asteroid that will wipe out half of earth’s population; the other half of humanity will survive to realize our potential. He’s not completely uninterested – wiping out half of earth’s population could cause some chaos that makes it harder to prepare for other catastrophes. But his main focus is on things that would kill everybody – or at least leave too few survivors to effectively repopulate the planet.

I expected Ord to be alarmist here. He is writing a book about existential risks, whose thesis is that we should take them extremely seriously. Any other human being alive would use this as an opportunity to play up how dangerous these risks are. Ord is too virtuous. Again and again, he knocks down bad arguments for worrying too much, points out that killing every single human being on earth, including the ones in Antarctic research stations, is actually quite hard, and ends up convincing me to downgrade my risk estimate.

So for example, we can rule out a high risk of destruction by any natural disaster – asteroid, supervolcano, etc – simply because these things haven’t happened before in our species’ 100,000-odd-year history. Dino-killer-sized asteroids seem to strike the Earth about once every few hundred million years, bounding the risk per century around the one-in-a-million level. But also, scientists are tracking almost all the large asteroids in the solar system, and when you account for their trajectories, the chance that one slips through and hits us in the next century goes down to less than one in a hundred fifty million. Large supervolcanoes seem to go off about once every 80,000 years, so the risk per century is about 1/800. There are similar arguments around nearby supernovae, gamma ray bursts, and a bunch of other things.
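The conversion from "once every N years" to a per-century risk can be sketched as follows. This is an illustration, not Ord's calculation. It compares the simple ratio (100 / N) with a Poisson model of random, independent events. The 200-million-year asteroid interval is an assumed reading of "a few hundred million years".

```python
import math

YEARS_PER_CENTURY = 100

def per_century_probability(recurrence_years: float) -> float:
    """Chance of at least one event in a 100-year window, modelling events
    as a Poisson process with the given mean recurrence interval."""
    expected_events = YEARS_PER_CENTURY / recurrence_years
    return -math.expm1(-expected_events)  # 1 - exp(-expected_events), computed stably

# The asteroid interval is an assumption standing in for "a few hundred million years".
intervals = {
    "dino-killer asteroid (assumed 200 million years)": 200e6,
    "large supervolcano (80,000 years)": 80_000,
}

for name, years in intervals.items():
    naive = YEARS_PER_CENTURY / years
    poisson = per_century_probability(years)
    print(f"{name}: naive 1 in {1 / naive:,.0f}, Poisson 1 in {1 / poisson:,.0f}")
```

When the recurrence interval is far longer than a century, the two methods agree almost exactly. That is why the shorthand of 1/800 for an 80,000-year interval works.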

I usually give any statistics I read a large penalty for “or maybe you’re a moron”. For example, lots of smart people said in 2016 that the chance of Trump winning was only 1%, or 0.1%, or 0.00001%, or whatever. But also, they were morons. They were using models, and their models were egregiously wrong. If you hear a person say that their model’s estimate of something is 0.00001%, very likely your estimate of the thing should be much higher than that, because maybe they’re a moron. I explain this in more detail here.
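One way to make the "or maybe you're a moron" penalty concrete is the law of total probability. Weight the model's answer by the chance the model is sound, and weight a fallback guess by the chance it is broken. All the numbers below are hypothetical, chosen only to show the shape of the effect.

```python
def adjusted_estimate(model_estimate: float,
                      p_model_broken: float,
                      fallback_estimate: float) -> float:
    """Blend a model's output with a fallback guess, weighted by the chance
    that the model itself is badly wrong (law of total probability)."""
    return (1 - p_model_broken) * model_estimate + p_model_broken * fallback_estimate

# Hypothetical inputs: the model says 0.00001% (1e-7), there is a 10% chance
# the model is egregiously wrong, and if it is, 1% is a reasonable fallback.
print(adjusted_estimate(1e-7, 0.10, 0.01))
```

The fallback term dominates. A "one in ten million" model output becomes roughly one in a thousand once you admit a modest chance the model is broken.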

Ord is one of a tiny handful of people who don’t need this penalty. He explains this entire dynamic to his readers, agrees it is important, and adjusts several of his models appropriately. He is always careful to add a term for unknown unknowns – sometimes he is able to use clever methods to bound this term, other times he just takes his best guess. And he tries to use empirically based methods that don’t have this problem, list his assumptions explicitly, and justify each assumption, so that you rarely have to rely on arguments shakier than “asteroids will continue to hit our planet at the same rate they did in the past”. I am really impressed with the care he puts into every argument in the book, and happy to accept his statistics at face value. People with no interest in x-risk may enjoy reading this book purely as an example of statistical reasoning done with beautiful lucidity.

When you accept very low numbers at face value, it can have strange implications. For example, should we study how to deflect asteroids? Ord isn’t sure. The base rate of asteroid strikes is so low that almost any change we make to the overall risk would outweigh it. If we successfully learn how to deflect asteroids, that not only lets good guys deflect asteroids away from Earth, but also lets bad guys deflect asteroids towards Earth. The chance that a dino-killer asteroid approaches Earth and needs to be deflected away is 1/150 million per century, with small error bars. The chance that malicious actors deflect an asteroid towards Earth is much harder to figure out and has wide error bars, and there are a lot of numbers higher than 1/150 million. So probably most of our worry about asteroids over the next century should involve somebody using one as a weapon, and studying asteroid deflection probably makes that worse and not better.

Ord uses similar arguments again and again. Humanity has survived 100,000 years, so its chance of death by natural disaster per century is probably less than 1/1,000 (for complicated statistical reasons, he puts it at between 1/10,000 and 1/100,000). But humanity has only had world-threatening technology (eg nuclear weapons, genetically engineered bioweapons) for a few decades, so there are no such guarantees of its safety. Ord thinks the overwhelming majority of existential risk comes from this source, and singles out four particular technological risks as most concerning.
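The survival argument can be illustrated with a toy likelihood calculation. Assume each century carries an independent extinction risk p, and ask how likely our roughly 1,000-century track record would be. This is a simplified sketch, not the more careful method behind Ord's narrower range.

```python
def survival_likelihood(risk_per_century: float, centuries: int) -> float:
    """Probability of surviving `centuries` in a row if each century carries
    an independent extinction risk of `risk_per_century`."""
    return (1 - risk_per_century) ** centuries

CENTURIES_SURVIVED = 1_000  # roughly 100,000 years of Homo sapiens

for risk in (1/100, 1/1_000, 1/10_000, 1/100_000):
    likelihood = survival_likelihood(risk, CENTURIES_SURVIVED)
    print(f"risk 1 in {round(1 / risk):>7,} per century -> "
          f"P(our track record) = {likelihood:.3g}")
```

- **1 in 100 per century:** our survival would be roughly a 1-in-23,000 fluke.
- **1 in 1,000 per century:** survival would be unremarkable, at about 37%.

So the record rules out high natural risk, but on its own it cannot separate 1/10,000 from 1/100,000 very sharply.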

First, nuclear war. This is one of the places where Ord’s work is cause for optimism. You’ve probably heard that there are enough nuclear weapons to “destroy the world ten times over” or something like that. There aren’t. There are enough nuclear weapons to destroy lots of major cities, kill the majority of people, and cause a very bad nuclear winter for the rest. But there aren’t enough to literally kill every single human being. And because of the way the Earth’s climate works, the negative effects of nuclear winter would probably be concentrated in temperate and inland regions. Tropical islands and a few other distant locales (Ord suggests Australia and New Zealand) would have a good chance of making it through even a large nuclear apocalypse with enough survivors to repopulate the Earth. A lot of things would have to go wrong at once, and a lot of models be flawed in ways they don’t seem to be flawed, for a nuclear war to literally kill everyone. Ord gives the per-century risk of extinction from this cause at 1 in 1,000.

Second, global warming. The current scientific consensus is that global warming will be really bad but not literally kill every single human. Even for implausibly high amounts of global warming, survivors can always flee to a pleasantly balmy Greenland. The main concern from an x-risk point of view is “runaway global warming” based on strong feedback loops. For example, global warming causes permafrost to melt, which releases previously trapped carbon, causing more global warming, causing more permafrost to melt, etc. Or global warming causes the oceans to warm, which makes them release more methane, which causes more global warming, causing the oceans to get warmer, etc. In theory, this could get really bad – something similar seems to have happened on Venus, which now has an average temperature of 900 degrees Fahrenheit. But Ord thinks it probably won’t happen here. His worst-case scenario estimates 13 – 20 degrees C of warming by 2300. This is really bad – summer temperatures in San Francisco would occasionally pass 140F – but still well short of Venus, and compatible with the move-to-Greenland scenario. Also, global temperatures jumped 5 degrees C (to 14 degrees above current levels) fifty million years ago, and this didn’t seem to cause Venus-style runaway warming. This isn’t a perfect analogy for the current situation, since the current temperature increase is happening faster than the ancient one did, but it’s still a reason for hope. This is another one that could easily be an apocalyptic tragedy unparalleled in human history but probably not an existential risk; Ord estimates the x-risk per century as 1/1,000.

The same is true for other environmental disasters, of which Ord discusses a long list. Overpopulation? No, fertility rates have crashed and the population is barely expanding anymore (also, it’s hard for overpopulation to cause human extinction). Resource depletion? New discovery seems to be faster than depletion for now, and society could route around most plausible resource shortages. Honeybee collapse? Despite what you’ve heard, losing all pollinators would only cause a 3 – 8% decrease in global crop production. He gives all of these combined plus environmental unknown unknowns an additional 1/1,000, just in case.

Third, pandemics. Even though pathogens are natural, Ord classifies pandemics as technological disasters for two reasons. First, natural pandemics are probably getting worse because our technology is making cities denser, linking countries closer together, and bringing humans into more contact with the animal vectors of zoonotic disease (in one of the book’s more prophetic passages, Ord mentions the risk of a disease crossing from bats to humans). But second, bioengineered pandemics may be especially bad. These could be either accidental (surprisingly many biologists alter diseases to make them worse as part of apparently legitimate scientific research) or deliberate (bioweapons). There are enough unknown unknowns here that Ord is uncomfortable assigning relatively precise and low risk levels like he did in earlier categories, and this section generally feels kind of rushed, but he estimates the per-century x-risk from natural pandemics as 1/10,000 and from engineered pandemics as 1/30.

The fourth major technological risk is AI. You’ve all read about this one by now, so I won’t go into the details, but it fits the profile of a genuinely dangerous risk. It’s related to technological advance, so our long and illustrious history of not dying from it thus far offers no consolation. And because it could be actively trying to eliminate humanity, isolated populations on islands or in Antarctica or wherever offer less consolation than usual. Using the same arguments and sources we’ve seen every other time this topic gets brought up, Ord assigns this a 1/10 risk per century, the highest of any of the scenarios he examines, writing:

In my view, the greatest risk to humanity’s potential in the next hundred years comes from unaligned artificial intelligence, which I put at 1 in 10. One might be surprised to see such a high number for such a speculative risk, so it warrants some explanation.

A common approach to estimating the chance of an unprecedented event with earth-shaking consequences is to take a sceptical stance: to start with an extremely small probability and only raise it from there when a large amount of hard evidence is presented. But I disagree. Instead, I think that the right method is to start with a probability that reflects our overall impressions, then adjust this in light of the scientific evidence. When there is a lot of evidence, these approaches converge. But when there isn’t, the starting point can matter.

In the case of artificial intelligence, everyone agrees the evidence and arguments are far from watertight, but the question is where does this leave us? Very roughly, my approach is to start with the overall view of the expert community that there is something like a 1 in 2 chance that AI agents capable of outperforming humans in almost every task will be developed in the coming century. And conditional on that happening, we shouldn’t be shocked if these agents that outperform us across the board were to inherit our future.

The book also addresses a few more complicated situations. There are ways humankind could fail to realize its potential even without being destroyed. For example, if it got trapped in some kind of dystopia that it was impossible to escape. Or if it lost so many of its values that we no longer recognized it as human. Ord doesn’t have too much to say about these situations besides acknowledging that they would be bad and need further research. Or a series of disasters could each precipitate one another, or a minor disaster could leave people unprepared for a major disaster, or something along those lines.

Here, too, Ord is more optimistic than some other sources I have read. For example, some people say that if civilization ever collapses, it will never be able to rebuild, because we’ve already used up all easily-accessible sources of eg fossil fuels, and an infant civilization won’t be able to skip straight from waterwheels to nuclear. Ord is more sanguine:

Even if civilization did collapse, it is likely that it could be re-established. As we have seen, civilization has already been independently established at least seven times by isolated peoples. While one might think resource depletion could make this harder, it is more likely that it has become substantially easier. Most disasters short of human extinction would leave our domesticated animals and plants, as well as copious material resources in the ruins of our cities – it is much easier to re-forge iron from old railings than to smelt it from ore. Even expendable resources such as coal would be much easier to access, via abandoned reserves and mines, than they ever were in the eighteenth century. Moreover, evidence that civilisation is possible, and the tools and knowledge to help rebuild, would be scattered across the world.

III.

Still, these risks are real, and humanity will under-respond to them for predictable reasons.

First, free-rider problems. If some people invest resources into fighting these risks and others don’t, both sets of people will benefit equally. So all else being equal everyone would prefer that someone else do it. We’ve already seen this play out with international treaties on climate change.

Second, scope insensitivity. A million deaths, a billion deaths, and complete destruction of humanity all sound like such unimaginable catastrophes that they’re hardly worth differentiating. But plausibly we should put 1000x more resources into preventing a billion deaths than a million, and some further very large scaling factor into preventing human extinction. People probably won’t think that way, which will further degrade our existential risk readiness.

Third, availability bias. Existential risks have never happened before. Even their weaker non-omnicidal counterparts have mostly faded into legend – the Black Death, the Tunguska Event. The current pandemic is a perfect example. Big pandemics happen once every few decades – the Spanish flu of 1918 and the Hong Kong flu of 1968 are the most salient recent examples. Most countries put some effort into preparing for the next one. But the preparation wasn’t very impressive. After this year, I bet we’ll put impressive effort into preparing for respiratory pandemics for the next decade or two, while continuing to ignore other risks like solar flares or megadroughts that are equally predictable. People feel weird putting a lot of energy into preparing for something that has never happened before, and their value of “never” is usually “in a generation or two”. Getting them to care about things that have literally never happened before, like climate change, nuclear winter, or AI risk, is an even taller order.

And even when people seem to care about distant risks, it can feel like a half-hearted effort. During a Berkeley meeting of the Manhattan Project, Edward Teller brought up the basic idea behind the hydrogen bomb. You would use a nuclear bomb to ignite a self-sustaining fusion reaction in some other substance, which would produce a bigger explosion than the nuke itself. The scientists got to work figuring out what substances could support such reactions, and found that they couldn’t rule out nitrogen-14. The air is about 78% nitrogen-14. If a nuclear bomb produced nitrogen-14 fusion, it would ignite the atmosphere and turn the Earth into a miniature sun, killing everyone. They hurriedly convened a task force to work on the problem, and it reported back that neither nitrogen-14 nor a second candidate isotope, lithium-7, could support a self-sustaining fusion reaction.

They seem to have been moderately confident in these calculations. But there was enough uncertainty that, when the Trinity test produced a brighter fireball than expected, Manhattan Project administrator James Conant was “overcome with dread”, believing that atmospheric ignition had happened after all and the Earth had only seconds left. And later, the US detonated a bomb whose fuel was contaminated with lithium-7, the explosion was much bigger than expected, and some bystanders were killed. It turned out atomic bombs could initiate lithium-7 fusion after all! As Ord puts it, “of the two major thermonuclear calculations made that summer at Berkeley, they got one right and one wrong”. This doesn’t really seem like the kind of crazy anecdote you could tell in a civilization that was taking existential risk seriously enough.

So what should we do? That depends on who you mean by “we”.

Ordinary people should follow the standard advice of effective altruism. If they feel like their talents are suited for a career in this area, they should check out 80,000 Hours and similar resources and try to pursue it. Relevant careers include science (developing helpful technologies to eg capture carbon or understand AI), politics and policy (helping push countries to take risk-minimizing actions), and general thinkers and influencers (philosophers to remind us of our ethical duties, journalists to help keep important issues fresh in people’s minds). But also, anything else that generally strengthens and stabilizes the world. Diplomats who help bring countries closer together, since international peace reduces the risk of nuclear war and bioweapons and makes cooperation against other threats more likely. Economists who help keep the market stable, since a prosperous country is more likely to have resources to devote to the future. Even teachers are helping train the next generation of people who can help in the effort, although Ord warns against going too meta – most people willing to help with this will still be best off working on causes that affect existential risk directly. If they don’t feel like their talents lie in any of these areas, they can keep earning money at ordinary jobs and donate some of it (traditionally 10%) to x-risk related charities.

Rich people, charitable foundations, and governments should fund anti-x-risk work more than they’re already doing. Did you know that the Biological Weapons Convention, a key international agreement banning biological warfare, has a budget lower than the average McDonald’s restaurant (not total McDonald’s corporate profits, a single restaurant)? Or that total world spending on preventing x-risk is less than total world spending on ice cream? Ord suggests a target of between 0.1% and 1% of gross world product for anti-x-risk efforts.

And finally, Ord has a laundry list of requests for sympathetic policy-makers (Appendix F). Most of them are to put more research and funding into things, but the actionable specific ones are: restart various nuclear disarmament treaties, take ICBMs off “hair-trigger alert”, have the US rejoin the Paris Agreement on climate change, fund the Biological Weapons Convention better, and mandate that DNA synthesis companies screen consumer requests for dangerous sequences so that terrorists can’t order a batch of smallpox virus (80% of companies currently do this screening, but 20% don’t). The actual appendix is six pages long; there are a lot of requests to put more research and funding into things.

In the last section, Ord explains that all of this is just the first step. After we’ve conquered existential risk (and all our other problems), we’ll have another task: to contemplate how we want to guide the future. Before we spread out into the galaxy, we might want to take a few centuries to sit back and think about what our obligations are to each other, the universe, and the trillions of people who may one day exist. We cannot take infinite time for this; the universe is expanding, and for each year we spend not doing interstellar colonization, three galaxies cross the cosmological event horizon and become forever unreachable, and all the potential human civilizations that might have flourished there come to nothing. Ord expects us to be concerned about this, and tries to reassure us that it will be okay (the relative loss each year is only one five-billionth of the universe). So he thinks taking a few centuries to reflect before beginning our interstellar colonization is worthwhile on net. But for now, he thinks this process should take a back seat to safeguarding the world from x-risk. Deal with the Cuban Missile Crisis we’re perpetually in the middle of, and then we’ll have time for normal philosophy.
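The cost of waiting can be checked with a few lines of arithmetic. This sketch compounds Ord's figure of one five-billionth of the reachable universe lost per year. The 300-year reflection period is a hypothetical stand-in for "a few centuries".

```python
import math

# Figures as reported in the review; the reflection period is hypothetical.
FRACTION_LOST_PER_YEAR = 1 / 5e9   # one five-billionth of the reachable universe
GALAXIES_LOST_PER_YEAR = 3

def fraction_lost(years: float) -> float:
    """Share of the reachable universe lost after waiting `years`,
    compounding the per-year loss (log1p/expm1 keep tiny values precise)."""
    return -math.expm1(years * math.log1p(-FRACTION_LOST_PER_YEAR))

years = 300
print(f"after {years} years: {GALAXIES_LOST_PER_YEAR * years} galaxies lost, "
      f"{fraction_lost(years):.2e} of the reachable universe")
```

Three centuries of reflection cost on the order of a hundred-millionth of what is reachable. That is the arithmetic behind calling the delay worthwhile on net.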

IV.

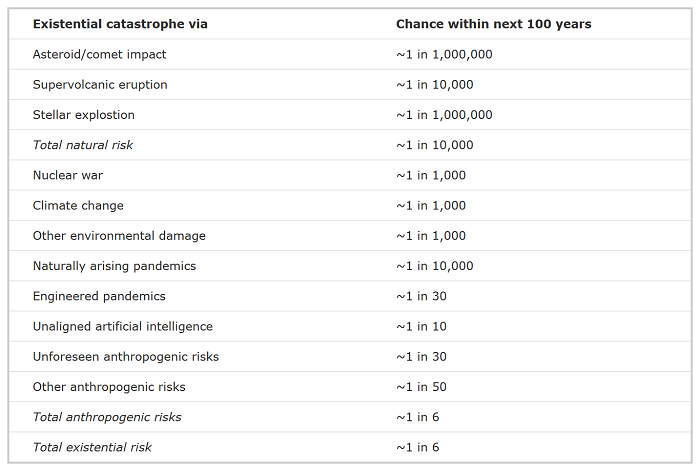

In the spirit of highly-uncertain-estimates being better than no estimates at all, Ord offers this as a draft of where the existential risk community is right now (“they are not in any way the final word, but are a concise summary of all I know about the risk landscape”):

Again, the most interesting thing for me is how low most of the numbers are. It’s a strange sight in a book whose thesis could be summarized as “we need to care more about existential risk”. I think most people paying attention will be delighted to learn there’s a 5 in 6 chance the human race will survive until 2120.
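Combining the individual estimates quoted in this review gives a sense of how a handful of small numbers adds up. The sketch assumes the risks are independent. That is a simplification, and Ord does not claim his total is computed this way. It also includes only the categories mentioned in the text, not every row of the table above.

```python
import math

# Per-century existential-risk estimates as quoted in this review.
estimates = {
    "natural catastrophes (upper end)": 1 / 10_000,
    "nuclear war": 1 / 1_000,
    "climate change": 1 / 1_000,
    "other environmental damage": 1 / 1_000,
    "natural pandemics": 1 / 10_000,
    "engineered pandemics": 1 / 30,
    "unaligned AI": 1 / 10,
}

# Simplifying assumption: the risks are independent, so humanity survives
# the century only if it dodges every one of them.
p_survive = math.prod(1 - p for p in estimates.values())
total_risk = 1 - p_survive
print(f"combined risk: {total_risk:.3f} (about 1 in {1 / total_risk:.1f})")
```

These categories alone come to roughly 13%, about 1 in 7.5. The rest of the gap to the 1-in-6 headline figure presumably comes from table entries the review doesn't discuss and from risks that aren't independent.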

This is where I turn to my psychoanalysis of Toby Ord again. I think he, God help him, sees a number like that and responds appropriately. He multiplies 1/6th by 10 billion deaths and gets about 1.7 billion deaths. Then he multiplies 1/6th by the hundreds of trillions of people it would prevent from ever existing, and gets tens of trillions of people. Then he considers that the centuries just keep adding up, until by 2X00 the risk is arbitrarily high. At that point, the difference between a 1/6 chance of humanity dying per century vs. a 5/6 chance of humanity dying may have psychological impact. But the overall takeaway from both is “Holy @!#$, we better put a lot of work into dealing with this.”
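Both multiplications can be sketched in a toy model. The first is the expected deaths; the second is the way a per-century risk compounds. Holding the risk at a constant 1 in 6 every century is purely an assumption for illustration, since Ord's "crunch time" framing implies it should eventually fall. The 10 billion population is the review's round figure.

```python
# Toy model: a constant 1-in-6 existential risk every century (an assumption).
RISK_PER_CENTURY = 1 / 6
CURRENT_POPULATION = 10_000_000_000  # round figure used in the review

def cumulative_risk(centuries: int, risk: float = RISK_PER_CENTURY) -> float:
    """Probability that catastrophe has struck at least once after `centuries`."""
    return 1 - (1 - risk) ** centuries

expected_deaths = RISK_PER_CENTURY * CURRENT_POPULATION
print(f"expected deaths this century: {expected_deaths:,.0f}")
for centuries in (1, 2, 5, 10):
    print(f"by {2020 + 100 * centuries}: {cumulative_risk(centuries):.0%}")
```

Under this assumption the cumulative risk passes one half within five centuries and exceeds 80% within ten. That is the "centuries just keep adding up" point in numbers.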

There’s an old joke about a science lecture. The professor says that the sun will explode in five billion years, and sees a student visibly freaking out. She asks the student what’s so scary about the sun exploding in five billion years. The student sighs with relief: “Oh, thank God! I thought you’d said five million years!”

We can imagine the opposite joke. A professor says the sun will explode in five minutes, sees a student visibly freaking out, and repeats her claim. The student, visibly relieved: “Oh, thank God! I thought you’d said five seconds.”

When read carefully, The Precipice is the book-length version of the second joke. Things may not be quite as disastrous as you expected. But relief may not quite be the appropriate emotion, and there’s still a lot of work to be done.