📣 Final weekend: applications close Monday 12 Jan at noon (GMT)

🚀 Apply your expertise to advanced AI governance.

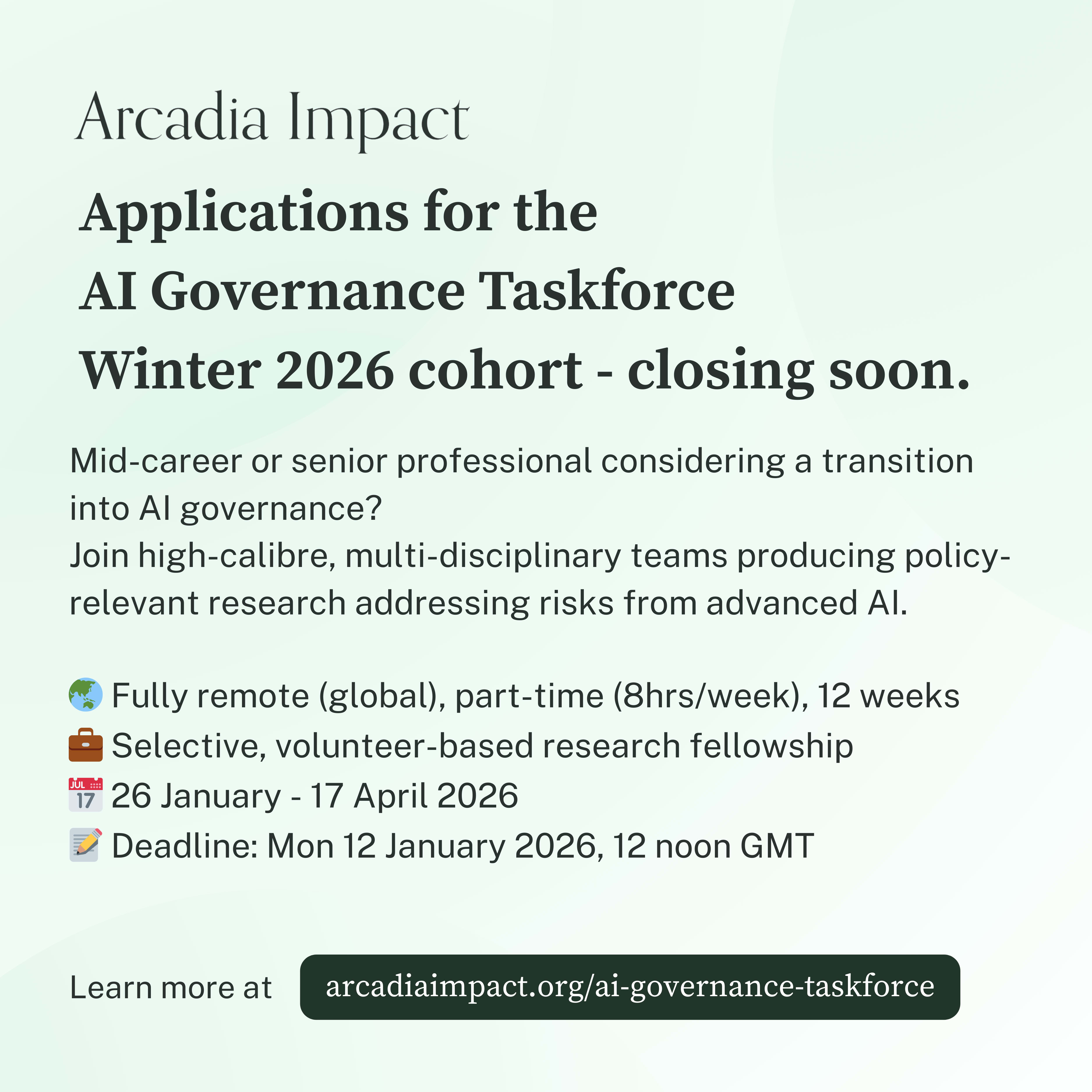

Are you a mid-career or senior professional considering a transition into AI governance focused on advanced-AI risk?

Applications are closing imminently for the AI Governance Taskforce Research Associate role in our 12-week Winter 2026 cohort, which starts at the end of January.

This role is designed for experienced professionals who want to apply existing domain expertise to frontier AI governance. You will work in small, high-calibre, multidisciplinary research teams producing policy-relevant research, led by Research Team Leaders and guided by expert practitioners based at respected organisations.

Projects:

🔹 Phase identification of AI incident emergence

Sean McGregor (Founder, AI Incident Database)

🔹 Leveraging open source intelligence (OSINT) for loss of control AI risk

Tommy Shaffer Shane (Interim Director of AI Policy, Centre for Long-Term Resilience)

🔹 Stress-testing a loss of control safety case

Henry Papadatos (Executive Director, SaferAI)

🔹 Advancing dangerous capability red lines through the AI Safety Institute network

Su Cizem (Visiting Analyst, The Future Society)

🔹 Developing international AI incident tracking and response infrastructure: the severity threshold — technical triggers for ‘unacceptable risk’

Caio Vieira Machado (Senior Associate, The Future Society)

What this involves:

👥 Applied research in small teams

📄 Policy-relevant outputs (papers, briefs, articles)

🌍 Fully remote, global, part-time (8 hrs/week), 12 weeks

💼 Selective, volunteer-based research fellowship

📅 Deadline: Mon 12 January 2026, 12 noon GMT

🔗 More info and apply: https://www.arcadiaimpact.org/ai-governance-taskforce

For queries, please email:

Ben R Smith, Taskforce Lead

ben [at] arcadiaimpact [dot] org