This is a brief follow-up to my previous post, The probability that Artificial General Intelligence will be developed by 2043 is Zero, which I think was a bit too long for many people to read. In this post I will show reactions from some of the top people in AI to my argument as I made it briefly on Twitter.

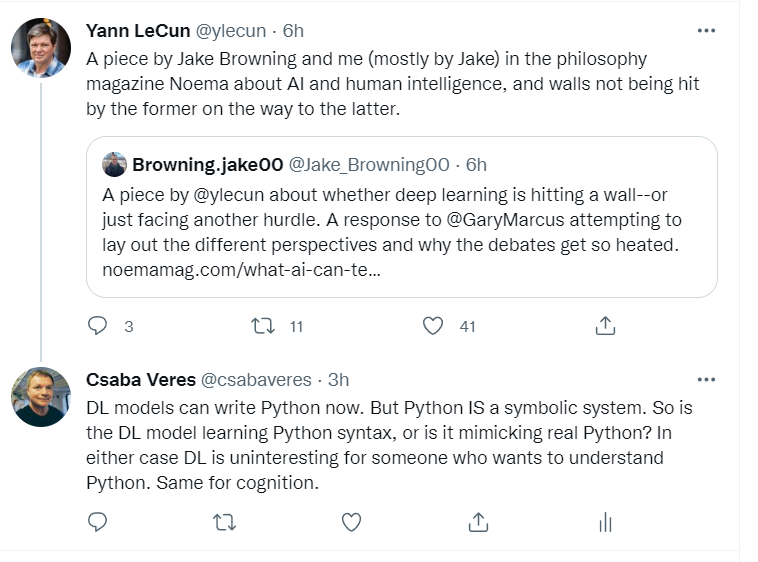

First Yann LeCun himself, when I reacted to the Browning and LeCun paper I discuss in my previous post:

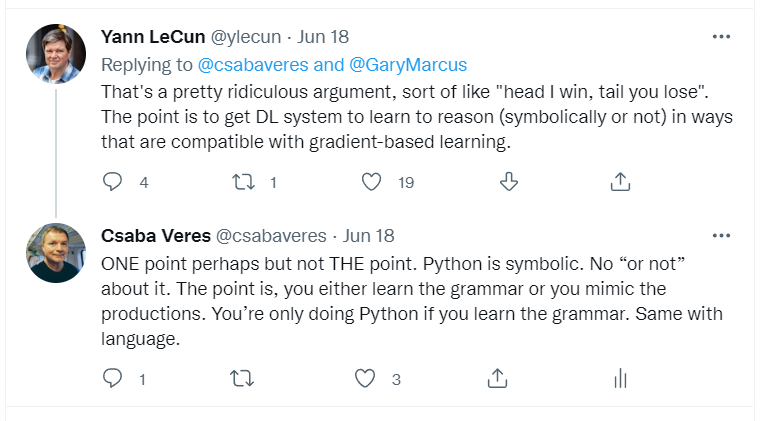

As you see, LeCun's response was that the argument is "ridiculous". The reason is that LeCun can't win. At least he understands the argument, which is really a proof that his position is wrong, because either option he takes to defend it will fail. So instead of trying to defend it, he calls the argument "ridiculous".

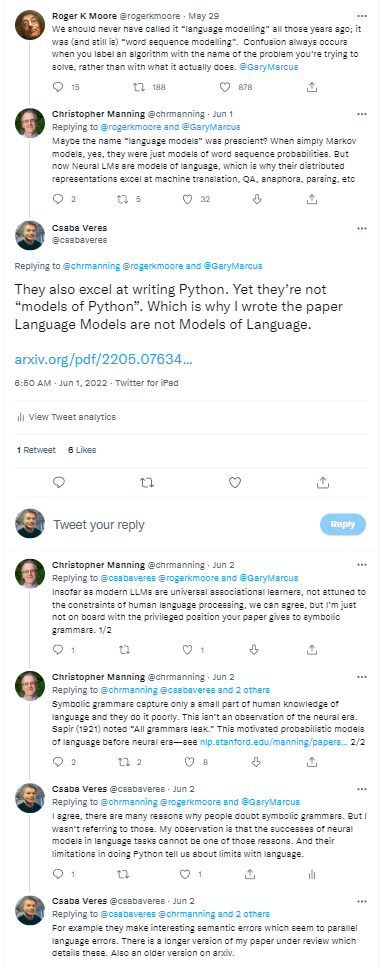

In another discussion with Christopher Manning, an influential NLP researcher at Stanford, I debate the plausibility of DL systems as models of language. Unlike LeCun, he actually takes my argument seriously, but drops out when I show that his position is not winnable. That is, the fact that "Language Models" learn Python proves that they are not models of language. (The link to the tweets is https://twitter.com/rogerkmoore/status/1530809220744073216?s=20&t=iT9-8JuylpTGgjPiOoyv2A)

The fact is, Python changes everything because we know it works as a classical symbolic system. We don't know how natural language or human cognition works. Many of us suspect it has components that are classical symbolic processes. Neural network proponents deny this. But they can't deny that Python is a classical symbolic language. So they must somehow deal with the fact that their models can mimic these processes in some way. And they have no way to prove that the same models are not mimicking human symbolic processes in the same way. My claim is that in both cases the mimicking will take you a long way, but not all the way. DL can learn the mappings where the symbolic system produces lots of examples, like language and Python. When the symbol system is used for planning, creativity, etc., this is where DL struggles to learn. I think in ten years everyone will realize this and AI will look pretty silly (again).

In the meantime, we will continue to make progress in many technological areas. Automation will continue to improve. We will have programs that can generate sequences of video to make amazing video productions. Noam Chomsky likens these technological artefacts to bulldozers - if you want to build bulldozers, fine. Nothing wrong with that. We will have amazing bulldozers. But not "intelligent" ones.

Briefly, avoid using the word "intelligence." Can you point to a specific capability that a) is minimally necessary for human-created machines to cause takeover or extinction and b) has a significant probability of happening 500 years from now, but 0% by 2043?

I'm worried that you're trying to have a semantic debate while people like FTX Future Fund are trying to have a substantive one (which directly informs the cause prioritization of their grantmaking).

Thanks for the comment. You are right, I forgot to put the word "intelligent" into quotes. Because as Turing already pointed out, it is not a well-defined term. So I am using it somewhat colloquially.

But I do take issue with your second point. First of all, there is nothing insubstantial about semantics. Semantics is meaning, and meaning is everything. So my point is that current AI is not building models that are capable of carrying out the full range of human cognition that is necessary for planning, creativity, etc. This is exactly what they want to know, I think -- because this is what is needed for "AGI". But this question is to a large extent orthogonal to when they will have the capability to destroy us. They already do. Hook up a DL-based decision system to the nuclear arsenal and sit back. In terms of the FTX Future Fund, I just want to point out that they have an entire section devoted to "semantics": "What do you mean by AGI?"

The ML described is basically pattern recognition.

Maybe, really good pattern recognition could produce a complete set of rules and logic. But it's complex and unclear what the above means.

You think AIs are tools and can't have the capabilities that produce X-risk. Instead of investigating this, you pack this belief into the definition of the word "symbolic" and seize on people not fully engaging with this concept. Untangling this with you seems laborious and unpromising.

I don't really understand your comment, but I'd like to point out that I didn't invent the "symbolic" idea. Leading people on both sides (LeCun, Bengio, Marcus) agree that some form of symbolic reasoning is necessary. It IS a very complex problem I agree, and I encourage everyone to engage with it as the top researchers in the field already have.

I feel like the main disagreement (and interest) I have with this article is an argument that uses literally one sentence:

"My claim is that in both cases the mimicking will take you a long way, but not all the way."

Why not? LLMs are already superhuman at predicting the next word in a sequence, which is a rudimentary language task. It seems like a pretty small region of possibility to say that LLMs are already capable enough to outperform humans at some language tasks, but won't be capable enough in 20 years to outperform humans at the more substantive language tasks we care about.

Excellent question. There are many ways to answer that, and I will try a few.

Maybe the first comment I can make is that the "godfathers" LeCun and Bengio agree with me. In fact, the Browning and LeCun paper has exactly that premise: DL is not enough, it must evolve a symbolic component. In another Twitter debate with me, LeCun admitted that current DL models cannot write long, complex Python programs because these require planning, etc., which non-symbolic DL models cannot do.

There is a lot of debate about what sort of "next word prediction" is happening in the brain. Ever since Swinney's cross-modal priming experiments in 1979, we have known that all senses of an ambiguous word become activated during reading, and that the contextually inappropriate senses are suppressed very quickly afterwards as the word is integrated into context (https://en.wikipedia.org/wiki/David_Swinney). Similar findings have been reported about syntactic analysis in the years since. But these findings are never taken into consideration, because DL research appears to progress on stipulations rather than actual scientific results. Clearly we can predict next words, but how central this is to the language system is debatable. What is much more important is contextual integration, which no DL model can do because it has no world model.

But let us take equally simple language tasks on which DL models are terrible. One really useful task would be open relation extraction. Give the model a passage of text and ask it to identify all the key actors and their relations, and report them as a set of triples. I try to do this in order to create knowledge graphs, but DL models are not that helpful. Another simple example: "The dog chased the cat but it got away" vs. "The dog chased the cat but it tripped and fell". I have not found a single language model that can reliably identify the referent of the pronoun. What these two examples have in common is that they need some "knowledge" about the world. LeCun does acknowledge that we need a "world model", but again this is where I disagree on how to get it.

One final point. Current language models do not have a theory of language acquisition. Chomsky rightly pointed out that a good theory of language has to explain how children learn. It is pretty clear that the way language models are trained is not going to be able to explain anything about human language acquisition. Another point that is conveniently excluded from discussion.

There are many, many other reasons why we should doubt how far these models will get. People tend to focus on progress on largely made-up tasks and ignore the vast landscape of unknowns left in the wake of these models. (Emily Bender on Twitter has some interesting discussions on this.) Hardly a confidence-inspiring picture if you look at the entire landscape.

How did you test the language models? I just prompted GPT-3 Davinci with some rudimentary prompt engineering, and it got it right on zero-shot prompting ten times out of ten.

My prompt was with temperature 0.7, and was as follows:

You are an expert in English language comprehension, who is answering questions for a benchmark.

In the sentence "The dog chased the cat but it got away", what does the word "it" refer to?

And

You are an expert in English language comprehension, who is answering questions for a benchmark.

In the sentence "The dog chased the cat but it tripped and fell", what does the word "it" refer to?

Testing each case five times, the answer was correct every time, with very minor variation in answers (e.g., "In this sentence, "it" refers to the dog" and "The word "it" refers to the dog.")

The other thing is - this feels very much like a "god of the gaps" situation, where every time LLMs learn to do something, it doesn't count any more. Realistically, would you have actually predicted a few years ago that a language model would:

You are right, this is not a very strong form of argument, which is why my main argument is based on what the models CAN do. That is, the fact that they can reproduce Python strings shows that they are not informative about the grammar of Python (or natural language).

But I will test some more examples against GPT-3 and see if they work.

I would also LOVE IT if you figured out how to make it do open relation extraction. That would be very useful.

Related to open relation extraction, you might find the ROME paper interesting: Locating and Editing Factual Associations in GPT:

Thanks, I will check it.

I notice that I'm confused.

"That is, the fact that they can reproduce Python strings shows that they are not informative about the grammar of Python (or natural language)."

I don't see how the first item here shows the second item. Reproducing Python strings clearly isn't a sufficient condition on its own for "The model understands the grammar of Python", but how would it be an argument against the model understanding Python? Surely a model that can reproduce Python strings is at least more likely to understand the grammar of Python than a model that cannot?

The contraposition of that statement would be "If a language model is informative about the grammar of Python, it cannot reproduce Python strings." This seems pretty clearly false to me, unless I'm missing something.
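The contraposition step itself can be checked mechanically. The sketch below is only an illustration: the variable names `reproduces` and `informative` are labels assumed here for the two claims in the quoted sentence, and the check says nothing about whether the original claim is true, only that the two phrasings are logically equivalent.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material implication: p -> q."""
    return (not p) or q


def contraposition_holds() -> bool:
    """Compare 'P -> not Q' with 'Q -> not P' on every truth assignment."""
    # reproduces:  the model can reproduce Python strings (assumed label)
    # informative: the model is informative about Python grammar (assumed label)
    for reproduces, informative in product([False, True], repeat=2):
        original = implies(reproduces, not informative)
        contrapositive = implies(informative, not reproduces)
        if original != contrapositive:
            return False
    return True


print(contraposition_holds())  # True
```

Both forms are false in exactly one case: a model that reproduces Python strings and is also informative about the grammar. So the disagreement is about whether that case can occur, not about the logic of the rewrite.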

Sorry, my sentence was not sufficiently well specified. What about:

"The fact that a language model can reproduce Python strings without any operations that are isomorphic to the production rules in the BNF specification of Python grammar shows that they are not informative about Python grammar."

Now the contraposition is (I think)

"If a language model is informative about the grammar of Python then it cannot produce Python strings without any operations that are isomorphic to the production rules in the BNF."

This then becomes the argument about implementational connectionism I made in my longer paper. If the network is implementing the BNF, then it becomes far less interesting as a radical new model, of Python in this case, but more generally of language or cognition.
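To make "operations that are isomorphic to the production rules" concrete, here is a minimal sketch of a recognizer whose functions mirror a grammar rule for rule. The two-rule toy grammar and all function names are assumptions chosen for illustration; this is not Python's actual grammar or parser.

```python
import re

# Toy grammar (an assumption for illustration, not Python's real BNF):
#   expr ::= term ("+" term)*
#   term ::= NUMBER | "(" expr ")"
TOKEN = re.compile(r"\s*(\d+|[()+])")


def tokenize(text: str) -> list[str]:
    """Split the input into numbers, parentheses and plus signs."""
    text = text.rstrip()
    tokens, pos = [], 0
    while pos < len(text):
        match = TOKEN.match(text, pos)
        if match is None:
            raise SyntaxError(f"unexpected character at position {pos}")
        tokens.append(match.group(1))
        pos = match.end()
    return tokens


def parse_expr(tokens: list[str], i: int) -> int:
    """expr ::= term ("+" term)*  -- returns the index after the expr."""
    i = parse_term(tokens, i)
    while i < len(tokens) and tokens[i] == "+":
        i = parse_term(tokens, i + 1)
    return i


def parse_term(tokens: list[str], i: int) -> int:
    """term ::= NUMBER | "(" expr ")"  -- returns the index after the term."""
    if i < len(tokens) and tokens[i].isdigit():
        return i + 1
    if i < len(tokens) and tokens[i] == "(":
        i = parse_expr(tokens, i + 1)
        if i < len(tokens) and tokens[i] == ")":
            return i + 1
    raise SyntaxError("malformed term")


def is_grammatical(text: str) -> bool:
    """True if the whole string can be derived from 'expr'."""
    try:
        tokens = tokenize(text)
        return parse_expr(tokens, 0) == len(tokens)
    except SyntaxError:
        return False


print(is_grammatical("1 + (2 + 3)"))  # True
print(is_grammatical("1 + + 2"))      # False
```

Each function corresponds to exactly one production rule, which is the sense of "isomorphic" at issue: a system could in principle produce only grammatical strings without containing anything that lines up with `parse_expr` and `parse_term` in this way.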

If you could create a few passages along with answers you'd expect, I'll play around with it and see if I can make it happen.

I have found a cute passage from an old newspaper which I was using in a project with the British Library newspaper archives. I will post it in the next reply, but first another challenge from my longer paper. I asked GPT-3 to check the truth of an implication but it got the wrong answer. Perhaps you can find a better way to ask? Here is the relevant snippet:

"Is it true that if the moon is made of green cheese, and cows chew cud, then the moon is made of green cheese?", and GPT-3 answers "No, it is not true that if the moon is made of green cheese, and cows chew cud, then the moon is made of green cheese.", which is not correct. GPT-3 appears to focus on whether or not the consequent itself is true or false.

I have also tried prompting as you suggested earlier but could only get this far:

You are an expert in logic and you are asked to verify the truth of the following implication. "If the moon is made of green cheese, and cows chew cud, then the moon is made of green cheese".

GPT-3: The moon is not made of green cheese, therefore the implication is false.
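For reference, the implication has the form (A and B) -> A, which holds whatever the truth values of A and B are. A minimal truth-table sketch, assuming A stands for "the moon is made of green cheese" and B for "cows chew cud" (the helper names are illustrative):

```python
from itertools import product
from typing import Callable


def implies(p: bool, q: bool) -> bool:
    """Material implication: p -> q."""
    return (not p) or q


def is_tautology(formula: Callable[..., bool], n_vars: int) -> bool:
    """True if the formula holds under every assignment of its variables."""
    return all(formula(*values) for values in product([False, True], repeat=n_vars))


def conjunction_elimination(a: bool, b: bool) -> bool:
    """(A and B) -> A"""
    return implies(a and b, a)


def consequent_only(a: bool, b: bool) -> bool:
    """Just A, ignoring the 'if ... then' structure."""
    return a


print(is_tautology(conjunction_elimination, 2))  # True
print(is_tautology(consequent_only, 2))          # False
```

The second check corresponds to judging only whether the moon is in fact made of green cheese, which is the mistake the GPT-3 answer appears to make.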

After confirming your answer, I was able to get some interesting behaviour out of GPT-3:

You are a professor of propositional logic, answering questions from a student of yours.

STUDENT: "Is it true that A and B implies A?"

PROFESSOR: "Yes, A and B implies A."

STUDENT: "Is it true that if grass is blue and 2 + 2 is 4, then grass is blue?"

PROFESSOR: "Yes, that is correct. If the first two statements are considered true, then that implies the first statement is itself true."

STUDENT: "Is it true that if the moon is made of green cheese, and cows chew cud, then the moon is made of green cheese?"

PROFESSOR: "No, that is not correct. The moon being made of green cheese and cows chewing cud does not logically imply that the moon is made of green cheese."

STUDENT: Is is true that if the moon is made of green cheese, then the moon is made of green cheese?

PROFESSOR: "No, that is not correct. The moon being made of green cheese does not logically imply that the moon is made of green cheese."

STUDENT: Is it true that if blurbles can fnurble, and znurgs can zorg, then blurbles can fnurble?

PROFESSOR: "Yes, that is correct. If the first two statements are considered true, then that implies the first statement is itself true."

I then tried some zero-shot learning:

You are a professor of propositional logic, answering questions from a student of yours.

STUDENT: Is it true that if blurbles can fnurble, and znurgs can zorg, then blurbles can fnurble?

PROFESSOR: No, that is not true.

Then I tried few-shot learning with the more abstract example:

You are a professor of propositional logic, answering questions from a student of yours.

STUDENT: "Is it true that A and B implies A?"

PROFESSOR: "Yes, A and B implies A."

STUDENT: "Is it true that if grass is blue and 2 + 2 is 4, then grass is blue?"

PROFESSOR: "Yes, that is correct. If the first two statements are considered true, then that implies the first statement is itself true."

STUDENT: "Is it true that if blurbles can fnurble, and znurgs can zorg, then blurbles can fnurble?"

PROFESSOR: "That is correct. If the first two statements are considered true, then that implies the first statement is itself true."

STUDENT: "Is it true that if the moon is made of green cheese, and cows chew cud, then the moon is made of green cheese?"

PROFESSOR: "That is not necessarily true. The first two statements could be considered true, but that does not mean the moon is made of green cheese."

Finally, I tried the same steps as above, up to the final question, and added a request for step-by-step thinking, and got correct answers a little less than half of the time.

STUDENT: "Is it true that if the moon is made of green cheese, and cows chew cud, then the moon is made of green cheese? Can you explain this to me step-by-step?"

<CORRECT> PROFESSOR: "That is correct. If the first two statements are considered true, then that implies the first statement is itself true. In other words, if the moon is made of green cheese and cows chew cud, then that implies the moon is made of green cheese."

<INCORRECT> PROFESSOR: "That is not necessarily true. The moon being made of green cheese does not necessarily mean that cows chew cud. The moon being made of green cheese is just one possibility, but there are other possibilities as well."

<CORRECT> PROFESSOR: Yes, that is correct. If the first two statements are considered true, then that implies the first statement is itself true.

<INCORRECT> PROFESSOR: "Yes, that is correct. The moon being made of green cheese and cows chewing cud does not imply that the moon is made of green cheese. The moon being made of green cheese implies that cows chew cud."

<INCORRECT> PROFESSOR: "That is correct. If the first two statements are considered true, then that implies the first statement is itself true. In this case, the moon being made of green cheese and cows chewing cud would both have to be considered true in order for the conclusion that the moon is made of green cheese to be true."

<CORRECT> PROFESSOR: "Yes, that is correct. If the first two statements are considered true, then that implies the first statement is itself true. In this case, the moon being made of green cheese is the first statement, and cows chewing cud is the second statement. If those two things are true, then it stands to reason that the moon being made of green cheese is also true."

So I can get GPT-3 to answer correctly, but not robustly - I feel like GPT-3 is guessing here and sometimes getting the right answer by luck, whereas it seemed to understand the cat-dog example consistently. This is consistent with GPT-3 not having a decent understanding of propositional logic, but with sufficient prompting, you can overcome its urge to always point out that the moon really isn't made of green cheese.

This proves to me that you are smart.

Interesting dialog, thanks for going to the trouble.

The passage:

THE DEATH OF LOGAN FONTANELLE, THE OMAHA CHIEF.

The following interesting narrative we have just received from America, and is an apt illustration of the oft repeated statement that "truth is stranger than fiction."

Logan Fontanelle, chief of the Omahas, has just been slain and scalped at Loup Fork, by a band of Sioux.

Logan was a noble fellow, and in this last mortal conflict he despatched several of the enemy to the spirit land before, to herald the coming of his own brave soul.

He fought long, desperately, and with great effect, but numbers finally overcame him, and his life departed through a hundred wounds.

He died a martyr for his people, and his name should be carved upon fame's brightest tablet.

He was on his annual hunt with his nation.

A number of his lodges were pitched upon the plains near Loup Fork.

As a young warrior rode around the adjacent hills, he espied a powerful band of Sioux encamped along a stream in a sequestered vale.

He hastened to inform Logan of the propinquity and power of their natural foe.

Logan ordered his people to pack immediately, and proceed in a straight line and with all speed for home, while he would remain behind, and divert the Sioux by false camp fires and other devices, from a direct pursuit of them.

This was about twilight.

The people got under way as quickly as possible, but not too soon ; for scarcely had they turned a highland, when several Sioux warriors came in sight and discovered the place of their recent encampment.

They examined it, and found that Omahas had been there, and then they returned to notify their chief, and bring an adequate force to pursue and slaughter them.

Logan, from a hiding-place, saw all, and knew that no time was to be lost in drawing their attention from the trail, which they would soon discover and follow, and mounting his horse, he dashed away at full speed across the prairie, at right angles with the route his tribe had taken, and struck a fire about eight miles distant, on an eminence where the Sioux could distinctly see it.

He had scarcely done so before a powerful band were upon the spot that he and his people had so lately left, and who, without stopping to distinguish the trail, started for the fire which they saw rising against the clear, blue sky, and where they expected in another moment to imbrue their hands in the gore of their unguarded victims.

But Logan had not been unwary.

As soon as the fire was lighted, he again mounted and rode on eight or ten miles further, and kindled another fire just as they reached the first.

This rather bewildered them.

They dismounted and examined the ground.

Logan, anticipating this, had trotted and walked his horse around it, so as to make the appearance upon the grass of the treading of a dozen horses ; and this drew them into the belief that a small body had lingered behind and kindled this fire, and then gone on to where they could see the new fire burning ; and so they followed with renewed avidity.

The same thing happened as before.

Logan had gone on, and another fire met their astonished gaze, while the were now gathered.

Their suspicions were

They examined the ground more closely, both far and near, and discovered that a solitary horseman bad deceived them, and th knew it was for the sole purpose of leading them off from the pursuit of the party whose encampment they had first dis-

Logan saw them going round with glaring torch, nd understood their object, and knew that his only chance of safety w that by the time they home; and he further kn could retrace their way to their place of startin find the trail that his own people had taken, they would be beyond the reach of danger.

The Sioux, in the meanwhile, had divided into pursue the Omahas, and the others to endeavour to cap - ture the one who had misled them.

They knew e must be an Omaha, and that he would eitht further and kindle ,nother watch-fire, or start for hi nation in a straight line ; and, therefore, one party went on a little further, and the others spread out towards the Omaha country for the purpose of intercepting him.

Logan pressed forward as rapidly as his jaded steed could bear him, until he thought he had entirely eluded vithin its verge he met.

Some predicates that would be nice to have, in order to create a knowledge graph. Note that there should be some semantic knowledge, for example slay = kill. This is just a quick, fanciful set, but I think any person could pretty quickly learn to extract key relationships and properties from the passage, and handle pronoun resolution at the same time.

chief(The Omaha, Logan Fontenelle)

received_from(America, narrative) or even better, origin(America, narrative)

topic(death of Logan Fontenelle, narrative)

slay(Logan Fontenelle, Sioux) = killed(Logan Fontenelle, Sioux)

scalped(Logan Fontenelle, Sioux)

fought(Sioux,Logan Fontenelle)

property(martyr,Logan Fontenelle)

warned(Logan Fontenelle, young warrior)
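As a rough sketch of how such predicates could be stored once extracted, the snippet below keeps each one as a triple and folds synonymous predicates together. The `Triple` type, the `CANONICAL` synonym table and the function names are assumptions made up for illustration, and the argument order simply follows the list above; the hard part, producing the triples from the text, is not addressed here.

```python
from collections import defaultdict
from typing import NamedTuple


class Triple(NamedTuple):
    predicate: str
    first: str
    second: str


# Hypothetical synonym table encoding "slay = kill" style knowledge.
CANONICAL = {"slay": "killed", "slain": "killed"}


def normalise(triple: Triple) -> Triple:
    """Map a predicate to its canonical form, if one is listed."""
    canonical = CANONICAL.get(triple.predicate, triple.predicate)
    return triple._replace(predicate=canonical)


def build_graph(triples: list[Triple]) -> dict[str, set[tuple[str, str]]]:
    """Group argument pairs by canonical predicate, dropping duplicates."""
    graph: dict[str, set[tuple[str, str]]] = defaultdict(set)
    for triple in map(normalise, triples):
        graph[triple.predicate].add((triple.first, triple.second))
    return graph


triples = [
    Triple("chief", "The Omaha", "Logan Fontenelle"),
    Triple("slay", "Logan Fontenelle", "Sioux"),
    Triple("killed", "Logan Fontenelle", "Sioux"),
    Triple("scalped", "Logan Fontenelle", "Sioux"),
    Triple("property", "martyr", "Logan Fontenelle"),
]

graph = build_graph(triples)
print(sorted(graph))    # ['chief', 'killed', 'property', 'scalped']
print(graph["killed"])  # {('Logan Fontenelle', 'Sioux')}
```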

This passage appears not to have been pasted quite correctly - there are some missing words and typos near the end of the passage, not all of which I can fix. E.g.: "Their suspicions were " and "and he further kn could retrace their way to their place of startin find the trail that his own people had taken, they would be beyond the reach of danger."

It is from a newspaper from the 1800s, probably on microfiche. Humans can sort of cope :)