The Effective Altruism movement seems to be mainly concerned with two broad objectives: one is reducing suffering and mitigating risks; the other is doing the most good and improving the world.

A big concern is AI alignment, as it potentially poses an existential risk. But so do many other very serious non-AI-related problems. How do we ensure that AI, along with our laws and policies, does the most good and reduces harm without actually causing more problems?

When working on these topics in practice, I have found that there are two closely related fields that are often described with different words.

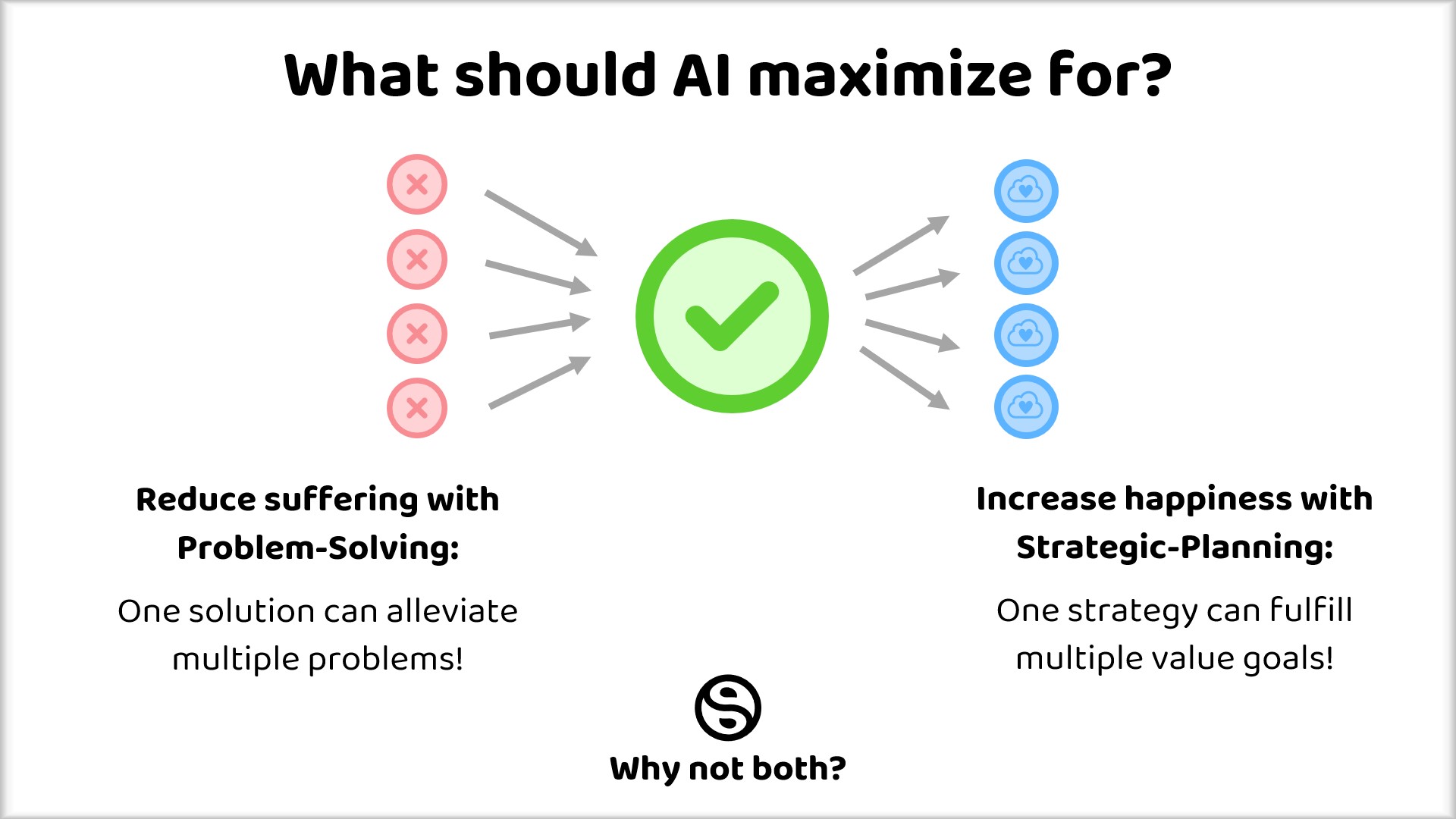

They are strategic planning and problem solving.

Problem solving is defined as the process of achieving a goal by overcoming obstacles and alleviating risks.

Strategic planning is defined as directives and decisions to allocate resources to attain goals.

To me, they seem to be two sides of the same coin.

So, my question for the community is this: Why don't we instruct AI to maximize the fulfillment of human goals & values while minimizing problems, suffering, and negative consequences?

Would that do the most good or are there any problems / obstacles with this strategy?
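
To make the question concrete, here is a minimal sketch in Python of what such an instruction could look like as a scoring rule. Everything in it is an assumption for illustration: the `Option` type, the `goal_fulfillment` and `expected_harm` estimates, and the `harm_weight` trade-off parameter are hypothetical and not taken from any existing system. It simply scores each option as goal fulfillment minus weighted harm and picks the highest score.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    """A candidate course of action (hypothetical, for illustration only)."""
    name: str
    goal_fulfillment: float  # assumed estimate in [0, 1] of how well goals are met
    expected_harm: float     # assumed estimate in [0, 1] of problems and suffering caused


def score(option: Option, harm_weight: float = 1.0) -> float:
    """Goal fulfillment minus weighted harm; higher is better."""
    return option.goal_fulfillment - harm_weight * option.expected_harm


def best_option(options: list[Option], harm_weight: float = 1.0) -> Option:
    """Return the option with the highest score for the given harm weight."""
    return max(options, key=lambda o: score(o, harm_weight))


# Made-up numbers, chosen only to show how the trade-off behaves.
options = [
    Option("ambitious plan", goal_fulfillment=0.9, expected_harm=0.6),
    Option("cautious plan", goal_fulfillment=0.6, expected_harm=0.1),
]

print(best_option(options, harm_weight=1.0).name)
print(best_option(options, harm_weight=0.25).name)
```

With these made-up numbers, the chosen option changes when `harm_weight` changes, so even this toy version leaves open how goals and harms should be measured and how they should be weighed against each other.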

Edit:

I will be using this post to collect a list of my ideas on the subject, each as a short-form post that I will link to here:

- How to find a solution for EVERY problem

- How to measure the GOODNESS of a solution

- How to encode human values on a computer

- Preventing instrumental convergence with divergent thinking