Introduction

In population ethics, the Repugnant Conclusion is the observation that, according to certain theories, a large population of people whose lives are barely worth living is preferable to a small population with higher standards of living. (A very good summary is given by the Stanford Encyclopedia of Philosophy, which also details many possible responses.)

I theorise yet another response. I am not certain that populations (say A,B) should be compared simply by ordering the sum total of each population's welfare. I propose instead that they should be compared by considering how the welfare would change if one population were transformed into the other (e.g. A --> B).

By merging this axiom with elements resembling other more established ideas such as a person-affecting view, I believe that this can lead to a comprehensive theory which avoids many of the serious flaws that can affect alternatives. I describe one such example below.

Notation

Let and denote very small and very large positive quantities respectively. I will also use to refer to a god who can alter populations instantaneously at will.

Real and imaginary welfare

I will use real to describe people that currently exist and imaginary to describe people that do not currently exist.

I also want to define what I will call real and imaginary welfare. This is a little bit more involved.

Real welfare

- gain of welfare for real people (+ve real welfare)

- loss of welfare for real people (-ve real welfare)

- creation of imaginary people with negative welfare (-ve real welfare)

Imaginary welfare

- creation of imaginary people with positive welfare (+ve imaginary welfare)

The idea here is then that real welfare outranks imaginary welfare - given two choices we should prefer the one that improves net real welfare more than the other.
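As a rough illustration of this ordering, here is a minimal Python sketch. The names `Change`, `change_from` and `prefer` are hypothetical and not part of the theory as stated. The sketch assumes that "outranks" means a lexical ordering: compare real welfare first, and use imaginary welfare only to break ties.

```python
from typing import Iterable, NamedTuple


class Change(NamedTuple):
    real: float       # net change in real welfare
    imaginary: float  # net change in imaginary welfare


def change_from(real_deltas: Iterable[float],
                created_welfares: Iterable[float]) -> Change:
    """Classify an action using the four bullet points above.

    real_deltas: welfare gains or losses for people who already exist.
    created_welfares: welfare levels of newly created people.
    """
    real = sum(real_deltas)
    imaginary = 0.0
    for welfare in created_welfares:
        if welfare < 0:
            real += welfare       # creating a miserable person counts as real loss
        else:
            imaginary += welfare  # creating a happy person counts as imaginary gain
    return Change(real, imaginary)


def prefer(a: Change, b: Change) -> Change:
    """Assumed lexical rule: real welfare first, imaginary welfare as tie-breaker."""
    # NamedTuples compare field by field, which gives exactly this ordering.
    return a if a >= b else b
```

Under this reading, `prefer(Change(1.0, 0.0), Change(0.0, 1000.0))` returns the first change: a small real gain beats any amount of imaginary gain.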

Three examples are given below (where denotes a change in real welfare by and change in imaginary welfare by ).

We might also suggest that no proportion of a real population with positive welfare should end up with negative welfare, and that given these constraints, welfare is ideally distributed as equally as possible. I do not believe that these additional improvements are detrimental to the core argument here.

Axiom of Comparison

We will now use the following axiom to compare two different populations, A and B.

Let A,B be two different populations. Then, A is better than B if and only if there exists a way to instantaneously transform A into B with an action that is better than the status quo at .

Remark: if A is not better than B, this does not necessarily entail that B is better than A! In other words, order matters.

The Repugnant Conclusion fails

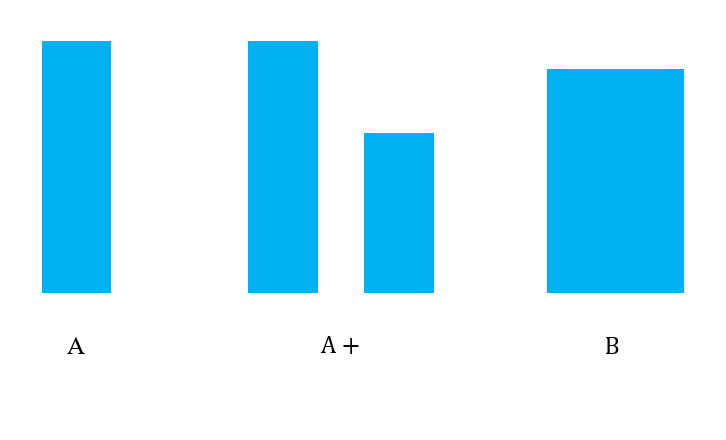

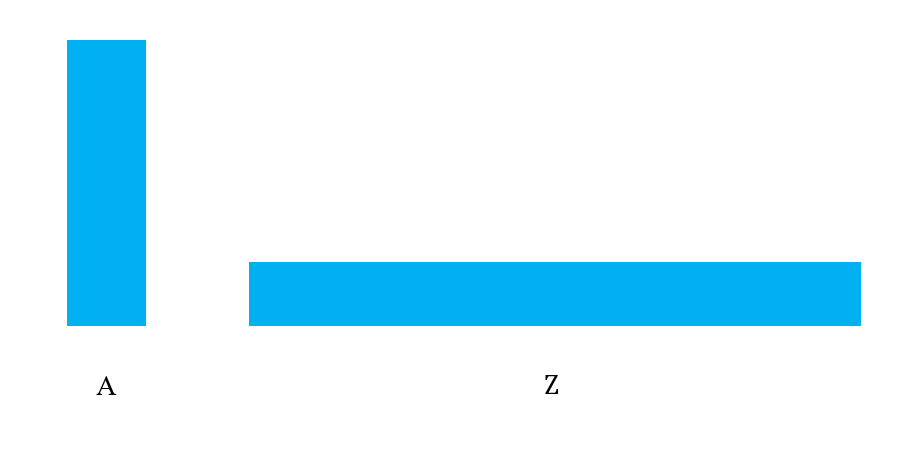

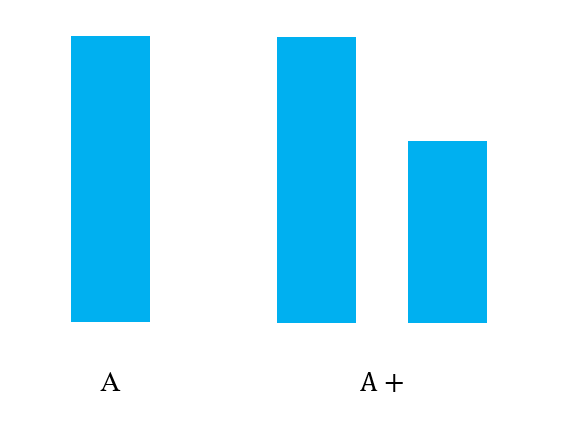

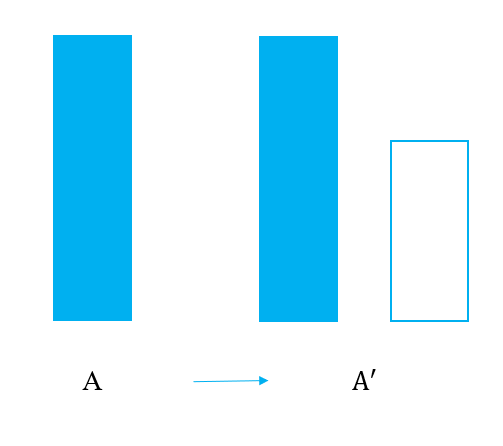

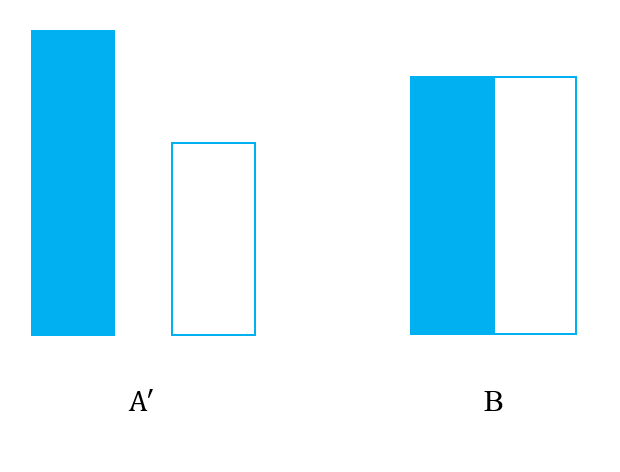

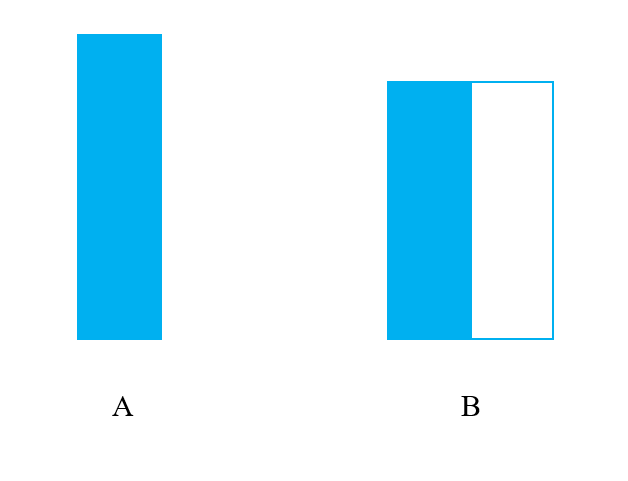

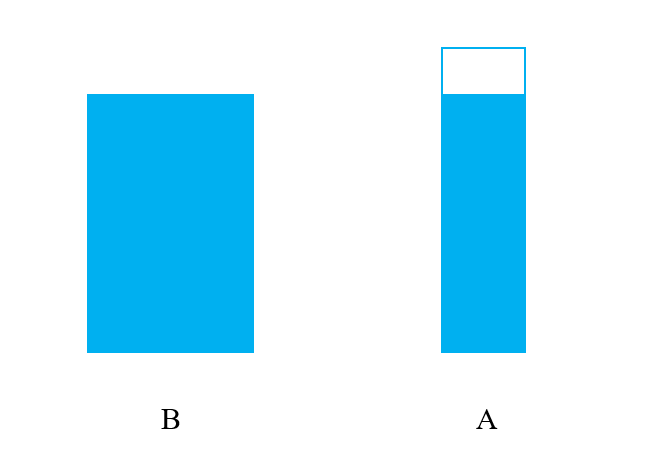

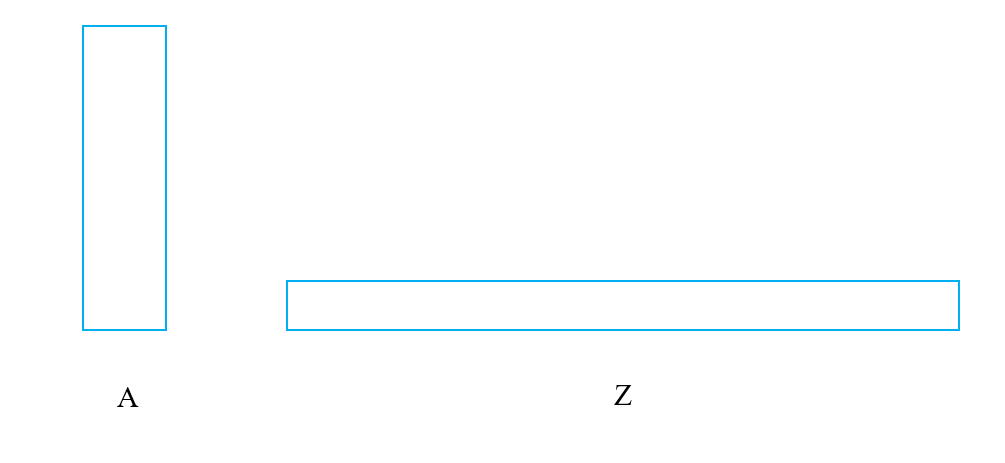

In the following reasoning, real populations will be illustrated with opaque boxes and imaginary populations with transparent boxes.

The standard argument for the Repugnant Conclusion begins by comparing A and A+. Since A+ has higher total welfare than A, the reasoning is that A+ is better than A. But then, B has the same population as A+ with higher total welfare and less inequality, so B is better than A+, and since A+ is better than A, B is better than A.

If we accept this reasoning, then by induction, it follows that we cannot deny that Z is better than A. This is rather repugnant.

So what happens if we instead invoke the Axiom of Comparison? Let us consider the first comparison between A and A+.

We can't just compare total welfare - according to the axiom, we need to find a way "to get from" A to A+. In particular, the population of A needs to increase instantaneously. (That's fine, we can ask the god to do this.) But by definition, these newly-created people are imaginary.

You might be able to see where this is going now. We do have that A' is better than A, since it has higher imaginary welfare for the same real welfare. But A' is not the same as A+.

Let us finish this argument for completeness. We will now consider how to get from A' to B (bearing in mind that we started at A).

Note that B is not better than A', since the real population of A' (originally A) must lose real welfare to transform into B. Hence, B is not better than A' and therefore is not necessarily better than A. Thus, the Repugnant Conclusion fails.

Direct comparison between A and B

We have shown that the standard chain of reasoning that leads to the Repugnant Conclusion fails, given the axiom stated earlier. However, this does not necessarily assure that B is not better than A, so let us compare the two directly.

Once again, to get from A to B, we must lose real welfare and only gain imaginary welfare. Therefore, B is not better than A.

But what if we try to get from B to A?

Note that part of the population gains a small gift of imaginary welfare, but rather more dramatically, half of the population has disappeared(!) corresponding to a dire loss of real welfare. Thus, A is not better than B.

This can be interpreted in a very natural way. Suppose we were to suggest to the populations of A and B that the god transforms either population into the other. Then, both populations should, in fact, refuse. Both populations should prefer the status quo.
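The two direct comparisons above can be checked with a small Python sketch. Everything in it is illustrative: the welfare numbers are made up, the helper names are hypothetical, and it considers only the route that keeps as many existing people as possible. It also assumes that a person who disappears counts as a real loss equal to their welfare, which the post does not quantify.

```python
from typing import Dict, Tuple

Population = Dict[str, float]  # person id -> welfare


def transition(status_quo: Population, target: Population) -> Tuple[float, float]:
    """Return (real change, imaginary change) for moving status_quo -> target."""
    real = 0.0
    imaginary = 0.0
    for person, welfare in status_quo.items():
        if person in target:
            real += target[person] - welfare  # existing person gains or loses
        else:
            real -= welfare  # assumption: disappearing loses the person's welfare
    for person, welfare in target.items():
        if person not in status_quo:
            if welfare < 0:
                real += welfare       # created with negative welfare: real loss
            else:
                imaginary += welfare  # created with positive welfare: imaginary gain
    return real, imaginary


def beats_status_quo(status_quo: Population, target: Population) -> bool:
    """True if the move is lexically better than doing nothing, i.e. (0, 0)."""
    return transition(status_quo, target) > (0.0, 0.0)


# Illustrative numbers only: B has twice the people at slightly lower welfare.
A = {"p1": 10.0, "p2": 10.0}
B = {"p1": 8.0, "p2": 8.0, "p3": 8.0, "p4": 8.0}
A_prime = {"p1": 10.0, "p2": 10.0, "p3": 1.0}  # A plus one newly created person

print(beats_status_quo(A, B))        # False: the real people of A lose welfare
print(beats_status_quo(B, A))        # False: two real people of B disappear
print(beats_status_quo(A, A_prime))  # True: same real welfare, more imaginary
```

With these numbers neither move between A and B beats staying put, which is the incomparability described above, while the move from A to A' does.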

Is the Repugnant Conclusion really avoided?

You might now have thought of the following counterargument. Suppose that there is currently no real population, and we are debating with the god whether we should create A or Z. According to the rules above, we should create Z. Is this not repugnant?

I say that it is not. The difficulty of the Repugnant Conclusion, for me at least, is that it suggests that a real population should strive to become larger and more miserable on average. But the people of Z wouldn't be becoming more miserable - they'd simply be coming into existence.

Lastly, remember that populations are not static. Once we create Z (and it becomes real), who's to say it won't flourish and become Z+?

Indeed, if the population of Z chooses to believe in the Axiom of Comparison, then they need not come to any Repugnant Conclusions about their future :-)

Flaws that are avoided

Here are some of the flaws that I believe are avoided by this formulation.

No dependence on ad hoc utility functions

Transitivity is not rejected

No problems due to averaging

If one supports the average principle, this may lead to the conclusion that it is better to have millions being tortured than one person being tortured in a slightly worse way (since the average welfare of that one person is slightly worse).

No Sadistic Conclusion

This is an issue for some theories where adding a small number of people with negative welfare is preferable to adding a large number of people with small positive welfare.

Edit: following a helpful comment from Max Daniel, it now seems to me that a negative utilitarian aspect of this formulation which was originally included can be removed, still without leading to the Repugnant Conclusion. Thus, I believe that the following flaw is no longer an issue. I will keep what was written here for now, just in case I have made a fatal error in reasoning.

Remaining flaws or implications

I have identified one possible flaw, although, of course, that doesn't mean that more can't be found.

Suppose that we have a population X consisting of 1 person with welfare and 999 people with welfare 0. Then, according to the rules above, given the choice of bringing the first person up to neutral welfare or improving the welfare of the 999 other people by , we should prefer the former, as it is "real" rather than "imaginary".

This is not necessarily repugnant, but if you were part of the 999, you would feel a little miffed perhaps, so let's examine this a bit more. I provide some counter-arguments to the flaw.

It isn't a flaw

If you are firmly a negative utilitarian, then this isn't a flaw. Maybe it really should be considered better that everyone's life is first made worthy of living before giving large amounts of welfare to people who don't truly need it.

It is too implausible

Is it really even plausible that we can create large amounts of welfare for 999 people, but only a tiny amount of welfare for 1 person? Perhaps not all theories need to work in such extremes.

There is a bias in the reading

Consider two scenarios. In the first, you and the god make this decision without ever telling the population X. In the second, however, you let the people of X know that you are undecided between the two options before you make the decision.

In the first scenario, if you choose to help the 1 person with negative welfare, the other 999 people have no idea that they were rejected and therefore can't feel bad about it.

But in the second scenario, if, again, you choose to help the 1 person with negative welfare, the 999 people will be aware that they were rejected. This may even cause a loss of real welfare as they realise that they missed out. Thus, in this scenario, it could be the correct decision for you and the god to improve the welfare of the 999.

Thus, to summarise this final point and the entire post: I believe that it's not about the result - it's about how you get there.

Closing remarks

I welcome and encourage any criticism. I believe that the Axiom is an original idea, but I certainly wish to be corrected if I am mistaken.

There are a few additional remarks I had to make, but I have omitted them so as to avoid this post being even longer. Thanks for reading.

It seems to me that your proposed theory has severe flaws that are analogous to those of Lexical Threshold Negative Utilitarianism, and that you significantly understate the severity of these flaws in your discussion.

(Also, one at least prima facie flaw that you don't discuss at all is that your theory involves incomparability – i.e. there are populations A and B such that neither is better than the other.)

I'll give my immediate thoughts.

I agree that the theory experiences significant flaws akin to those of negative utilitarianism. However, something else that perhaps I understated is that the fleshed-out theory was only one example to demonstrate how the Axiom of Comparison could be deployed. With the axiom in hand, I imagine that better theories could be developed which avoid your compelling example with Bezos (and the Repugnant Conclusion). I would point out that most of the flaws you point out are not results of the axiom itself.

In fact, off the top of my head: if I now said that real people gaining welfare above 0 still counts as real welfare, I think that the Repugnant Conclusion and Bezos are both avoided.

I am not sure why the "it is too implausible" defence is not convincing. The Repugnant Conclusion is not implausible to me - one can imagine that, if we accepted it, we might direct the development of civilisation from A to Z. Isn't it much more unlikely that we possess an ability to do exactly one of <improve the welfare of many happy people> and <improve the welfare of one unhappy person a bit>?

"It also has various other problems that plague lexical and negative-utilitarian theories, such as involving arguably theoretically unfounded discontinuities that lead to counterintuitive results, and being prima facie inconsistent with happiness/suffering and gains/losses tradeoffs we routinely make in our own lives."

I would request some clarification on the above.

"Also, one at least prima facie flaw that you don't discuss at all is that your theory involves incomparability – i.e. there are populations A and B such that neither is better than the other."

I did derive a pair of such populations A,B. If you meant that I did not discuss this further, then I am not sure why it is a problem at all. Suppose that we are population A. Do we truly need a way to assign a welfare score to our population? Isn't our primary (and I'd suggest only) consideration how we might improve? For this latter goal, the theory does produce any comparisons you could ask for.

Edit: I have read through the reasoning again and now it seems to me that the negative utilitarian aspect can indeed be removed (solving Bezos) and without reinstating the Repugnant Conclusion. Naturally, I may be wrong and this may also lead to new flaws. I would be interested to hear your thoughts on this. (The main post has been edited to reflect all new thoughts.)

This is an interesting approach. The idea that we can avoid the repugnant conclusion by saying that B is not better than A, and neither is A better than B, is I think similar to how Parfit himself thought we might be able to avoid the repugnant conclusion: https://onlinelibrary.wiley.com/doi/epdf/10.1111/theo.12097

He used the term "evaluative imprecision" to describe this. Here's a quote from the paper:

"Precisely equal is a transitive relation. If X and Y are precisely equally good, and Y and Z are precisely equally good, X and Z must be precisely equally good. But if X and Y are imprecisely equally good, so that neither is worse than the other, these imprecise relations are not transitive. Even if X is not worse than Y, which is not worse than Z, X may be worse than Z."

It's easy to see how evaluative imprecision could help us to avoid the repugnant conclusion.

But I don't think your approach actually achieves what is described in this quote, unless I'm missing something? It only refutes the repugnant conclusion in an extremely weak sense. Although Z is not better than A in your example, it is also not worse than A. That still seems quite repugnant!

Doesn't your logic explaining that neither A nor B is worse than the other also apply to A and Z?

P.S. Thinking about this a bit more, doesn't this approach fail to give sensible answers to the non-identity problem as well? Almost all decisions we make about the future will change not just the welfare of future people, but which future people exist. That means every decision you could take will reduce real welfare, and so under this approach no decision can be better than any other, which seems like a problem!

I'm afraid I don't understand this. In my framework, future people are imaginary, whether they are expected to come into existence with the status quo or not. Thus, they only contribute (negative) real welfare if they are brought into the world with negative welfare. I don't see why this would be true for almost all decisions, let alone most. It seems to me that the non-identity problem is completely independent of this. Either way, I am treating all future, or more generally imaginary, people as identical.

"That means every decision you could take will reduce real welfare, and so under this approach no decision can be better than any other, which seems like a problem!"

As I say, I believe the premise to be false (I may have of course misunderstood), but nevertheless, in this case, you would take the decision that minimises the loss of real welfare and maximises imaginary welfare (I'll assume the infima and suprema are part of the decision space, since this isn't analysis). Then, such a decision is better than every other. I don't understand why the premise would lead to no decision being better than any other.

It sounds like I have misunderstood how to apply your methodology. I would like to understand it though. How would it apply to the following case?

Status quo (A): 1 person exists at very high welfare +X

Possible new situation (B): Original person has welfare reduced to X - 2 ϵ, 1000 people are created with very high welfare +X

Possible new situation (C): Original person has welfare X - ϵ, 1000 people are created with small positive welfare ϵ.

I'd like to understand how your theory would answer two cases: (1) We get to choose between all of A,B,C. (2) We are forced to choose between (B) and (C), because we know that the world is about to instantaneously transform into one of them.

This is how I had understood your theory to be applied:

From your reply it sounds like you're coming up with a different answer when comparing (B) to (C), because both ways round the 1000 people are always considered imaginary, as they don't literally exist in the status quo? Is that right?

If so, that still seems to give a nonsensical answer in this case, because it would then say that (C) is better than (B) (real welfare is reduced by less), when it seems obvious that (B) is actually better. This is an even worse version of the flaw you've already highlighted, because the existing person you're prioritising over the imaginary people is already at a welfare well above the 0 level.

If I've got something wrong and your methodology can explain the intuitively obvious answer that (B) is better than (C), and should be chosen in example (2) (regardless of their comparison to A), then I would be interested to understand how that works.

"From your reply it sounds like you're coming up with a different answer when comparing (B) to (C), because both ways round the 1000 people are always considered imaginary, as they don't literally exist in the status quo? Is that right?"

If the status quo is A, then with my methodology, you cannot compare B and C directly, and I don't think this is a problem. As I said previously, "... in particular, if you are a member of A, it's not relevant that the population of Z disagree which is better". Similarly, I don't think it's necessary that the people of A can compare B and C directly. The issue is that some of your comparisons do not have (A) as the status quo.

To fully clarify, if you are a member of X (or equivalently, X is your status quo), then you can only consider comparisons between X and other populations. You might find that B is better than X and C is not better than X. Even then, you could not objectively say B is better than C because you are working from your subjective viewpoint as a member of X. In my methodology, there is no "objective ordering" (which is what I perhaps inaccurately was referring to as a total ordering).

Thus,

"(A) is not better than (B) or (C) because to change (B) or (C) to (A) would cause 1000 people to disappear (which is a lot of negative real welfare)."

is true if you take the status quos to be (B),(C) respectively - but this is not our status quo. (Similarly for the third bullet point.)

"Neither (B) nor (C) are better than (A), because an instantaneous change from (A) to (B) or (C) would reduce real welfare (of the one already existing person)."

This is true from our viewpoint as a member of A. Hence, if we are forced to go from A to one of B or C, then it's always a bad thing. We minimise our loss of welfare according to the methodology and pick B, the 'least worst' option.

"We minimise our loss of welfare according to the methodology and pick B, the 'least worst' option."

But (B) doesn't minimise our loss of welfare. In B we have welfare X - 2ϵ, and in C we have welfare X - ϵ, so wouldn't your methodology tell us to pick (C)? And this is intuitively clearly wrong in this case. It's telling us not to make a negligible sacrifice to our welfare now in order to improve the lives of future generations, which is the same problematic conclusion that the non-identity problem gives to certain theories of population ethics.

I'm interested in how your approach would tell us to pick (B), because I still don't understand that?

I won't reply to your other comment just to keep the thread in one place from now on (my fault for adding a P.S, so trying to fix the mistake). But in short, yes, I disagree, and I think that these flaws are unfortunately severe and intractable. The 'forcing' scenario I imagined is more like the real world than the unforced decisions. For most of us making decisions, the fact that people will exist in the future is inevitable, and we have to think about how we can influence their welfare. We are therefore in a situation like (2), where we are going to move from (A) to either (B) or (C) and we just get to pick which of (B) or (C) it will be. Similarly, figuring out how to incorporate uncertainty is also fundamental, because all real world decisions are made under uncertainty.

Sorry, I misread (B) and (C). You are correct that, as written in the post, (C) would then be the better choice.

However, continuing with what I meant to imply when I realised this was a forced decision, we can note that whichever of (B),(C) is picked, 1000 people will come into existence with certainty. Thus, in this case, I would argue they are effectively real. This is contrasted with the case in which the decision is not forced -- then, there are no 1000 new people necessarily coming into existence, and as you correctly interpreted, the status quo is preferable (since the status quo (A) is actually an option this time).
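To make the two readings concrete, here is a minimal Python sketch of the forced choice between (B) and (C). The values chosen for ϵ and X, and the helper names, are hypothetical. The sketch also assumes that treating the 1000 newcomers as "effectively real" means their welfare is counted as real rather than imaginary, which is one possible reading of the paragraph above.

```python
EPS = 0.01  # hypothetical stand-in for the small quantity ϵ
X = 100.0   # hypothetical stand-in for the very high welfare level X


def outcome_change(original_loss, newcomer_welfare, n_newcomers,
                   newcomers_count_as_real):
    """Return (real change, imaginary change) relative to status quo (A)."""
    newcomers_total = n_newcomers * newcomer_welfare
    if newcomers_count_as_real:
        # Assumed reading of "effectively real": newcomer welfare counts as real.
        return (-original_loss + newcomers_total, 0.0)
    return (-original_loss, newcomers_total)


def forced_choice(newcomers_count_as_real):
    """Pick between (B) and (C) lexically: real welfare first, then imaginary."""
    options = {
        "B": outcome_change(2 * EPS, X, 1000, newcomers_count_as_real),
        "C": outcome_change(EPS, EPS, 1000, newcomers_count_as_real),
    }
    return max(options, key=options.get)


print(forced_choice(newcomers_count_as_real=False))  # C
print(forced_choice(newcomers_count_as_real=True))   # B
```

With the newcomers treated as imaginary, (C) wins because the original person loses less. With them treated as effectively real, (B) wins because their high welfare dominates the extra loss of ϵ.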

Regarding non-identity, I would consider these 1000 new people in either (B),(C) to be identical. I am not entirely sure how non-identity is an issue here.

I am still not quite sure what you mean by uncertainty, but I feel that the above patches up (or more accurately, correctly generalises) the model at least with regards to the example you gave. I'll try to think of counterexamples myself.

By the way, this would also be my answer to Parfit's "depletion" problem, which I briefly glanced over. There is no way to stop hundreds of millions of people continuing to come into existence without dramatically reducing welfare (a few nuclear blasts might stop population growth but at quite a cost to welfare). Thus, these people are effectively real. Hence, if the current generation depleted everything, this would necessarily cause a massive loss of welfare to a population which may not exist yet, but are nevertheless effectively real. So we shouldn't do that. (That doesn't rule out a 'slower depletion', but I think that's fine.)

You can assert that you consider the 1000 people in (B) and (C) to be identical, for the purposes of applying your theory. That does avoid the non-identity problem in this case. But the fact is that they are not the same people. They have different hopes, dreams, personalities, memories, genders, etc.

By treating these different people as equivalent, your theory has become more impersonal. This means you can no longer appeal to one of the main arguments you gave to support it: that your recommendations always align with the answer you'd get if you asked the people in the population whether they'd like to move from one situation to the other. The people in (B) would not want to move to (C), and vice versa, because that would mean they no longer exist. But your theory now gives a strong recommendation for one over the other anyway.

There are also technical problems with how you'd actually apply this logic to more complicated situations where the number of future people differs. Suppose that 1000 extra people are created in (B), but 2000 extra people are created in (C), with varying levels of welfare. How do you apply your theory then? You now need 1000 of the 2000 people in (C) to be considered 'effectively real', to continue avoiding non-identity problem like conclusions, but which 1000? How do you pick? Different choices of the way you decide to pick will give you very different answers, and again your theory is becoming more impersonal, and losing more of its initial intuitive appeal.

Another problem is what to do under uncertainty. What if instead of a forced choice between (B) and (C), the choice is between:

0.1% chance of (A), 99.9% chance of (B)

0.1000001% chance of (A), 99.9% chance of (C).

Intuitively, the recommendations here should not be very different to the original example. The first choice should still be strongly preferred. But are the 1000 people still considered 'effectively real' in your theory, in order to allow you to reach that conclusion? Why? They're not guaranteed to exist, and actually, your real preferred option, (A), is more likely to happen with the second choice.

Maybe it's possible to resolve all these complications, but I think you're still a long way from that at the moment. And I think the theory will look a lot less intuitively appealing once you're finished.

I'd be interested to read what the final form of the theory looks like if you do accomplish this, although I still don't think I'm going to be convinced by a theory which will lead you to be predictably in conflict with your future self, even if you and your future self both follow the theory. I can see how that property can let you evade the repugnant conclusion logic while still sort of being transitive. But I think that property is just as undesirable to me as non-transitiveness would be.

"You can assert that you consider the 1000 people in (B) and (C) to be identical, for the purposes of applying your theory. That does avoid the non-identity problem in this case. But the fact is that they are not the same people. They have different hopes, dreams, personalities, memories, genders, etc."

But you stated that they don't exist yet (that they are "created"). Thus, we have no empirical knowledge of their hopes and dreams, so the most sensible prior seems to be that they are all identical. I apologise if I am coming across as obtuse, but I really do not see how non-identity causes issues here.

"The people in (B) would not want to move to (C), and vice versa, because that would mean they no longer exist."

Sorry, but this is quite incorrect. The people in (C) would want to move to (B). Bear in mind that when we are evaluating this decision, we now set (C) as the status quo. So the 1000 people at welfare ε are considered to be wholly real. If you stipulate that in going to (B), these 1000 people are to be eradicated then replaced with (imaginary) people at high welfare, then naturally the people of (C) should say no.

However, if you instead take the more reasonable route of getting from (C) to (B) via raising the real welfare of the 1000 and slightly reducing the welfare of one person, then clearly (B) is better than (C).

I think I realise what the issue may be here. When I say "going from (C) to (B)" or similar, I do not mean that (C),(B) are existent populations and (C) is suddenly becoming (B). That way, we certainly do run into issues of non-identity. Rather, (C) is a status quo and (B) is a hypothetical which may be achieved by any route. Whether the resulting people of (B) in the hypothetical are real or imaginary depends on which route you take. Naturally, the best routes involve eradicating as few real people as possible. In this instance, we can get from (C) to (B) without getting rid of anyone. The route of disappearing 1000 people and replacing them with 1000 new people is one of the worse routes. And in the original post, in one of the examples, to get from one population to the other, it was necessary to get rid of real people, with only imaginary gain. Hence, there could not exist an acceptable route to the second population -- one better than remaining at the status quo.

I appreciate now that this may have been unclear. However, I did not fully explain this because the idea of one existent population "becoming" (indeed, how?) another existent population is surely impossible and therefore not worth consideration.

"There are also technical problems with how you'd actually apply this logic to more complicated situations where the number of future people differs. Suppose that 1000 extra people are created in (B), but 2000 extra people are created in (C), with varying levels of welfare. How do you apply your theory then? You now need 1000 of the 2000 people in (C) to be considered 'effectively real', to continue avoiding non-identity problem like conclusions, but which 1000? How do you pick? Different choices of the way you decide to pick will give you very different answers, and again your theory is becoming more impersonal, and losing more of its initial intuitive appeal."

I would say exactly the same for this. If these people are being freshly created, then I don't see the harm in treating them as identical. If a person decided not to have a child with their partner today, but rather tomorrow, then indeed, they will almost certainly produce a different child. But the hypothetical child of today is not exactly going to complain if they were never created. That is the reasoning guiding my thought, on the intuitive level.

And given that this calculus works solely by considering welfare, naturally, it is reductive, as is every utilitarian calculus which only considers welfare. Isn't the very idea of reducing people to their welfare impersonal?

"0.1% chance of (A), 99.9% chance of (B)

0.1000001% chance of (A), 99.9% chance of (C)."

Well, it would seem to me this is perfect for an application of the concept of expectation. Taking the expected value, then in both cases ~999 people become effectively real and the same conclusion is reached.

If the odds in the second scenario were 50-50, then the expected value is that 500 people are effectively real (since 999 are expected in the first scenario, 500 in the second scenario and we have to pick one; we take the minimum). Then, the evaluation changes. Of course, this implies there is a critical point where if the chance of (C) in the second option is sufficiently low, then both options are equally good, from the perspective of (A).
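The expectation argument can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, each option is modelled as a list of (probability, number of people created) pairs, and the rule of taking the minimum across options follows the paragraph above. Exact fractions are used to avoid floating-point rounding.

```python
from fractions import Fraction


def expected_newcomers(lottery):
    """Expected number of created people for one option.

    lottery: list of (probability, number_of_people_created) pairs.
    """
    return sum(p * n for p, n in lottery)


def effectively_real(options):
    """Assumed rule from the text: take the minimum expectation over options."""
    return min(expected_newcomers(lottery) for lottery in options)


# First option: 0.1% chance of (A), 99.9% chance of 1000 new people.
first = [(Fraction(1, 1000), 0), (Fraction(999, 1000), 1000)]
# Second option with 50-50 odds between (A) and 1000 new people.
second_5050 = [(Fraction(1, 2), 0), (Fraction(1, 2), 1000)]

print(expected_newcomers(first))               # 999
print(effectively_real([first, second_5050]))  # 500
```

If any option creates no one with certainty, the minimum drops to zero, which corresponds to the case where no one is effectively real.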

The natural question then, which I also ask myself, is what if there were hundreds of scenarios, and in at least one scenario there were no people created. Then, supposedly no one is effectively real. But actually, I'm not sure this is a problem. More thinking will be required here to see whether I am right or wrong.

I do very much appreciate your criticism. Equally, it is quite striking to me that whenever you have pointed out an error, it has immediately seemed clear to me what the solution would be. Certainly, this discussion has been very productive in that way and rounded out this model a bit more. I expect I will write it all up, hopefully with some further improvements, in another post some time in the future.

"I would say exactly the same for this. If these people are being freshly created, then I don't see the harm in treating them as identical."

I think you missed my point. How can 1,000 people be identical to 2,000 people? Let me give a more concrete example. Suppose again we have 3 possible outcomes:

(A) (Status quo): 1 person exists at high welfare +X

(B): Original person has welfare reduced to X - 2ϵ, 1000 new people are created at welfare +X

(C): Original person has welfare reduced only to X - ϵ, 2000 new people are created, 1000 at welfare ϵ, and 1000 at welfare X + ϵ.

And you are forced to choose between (B) and (C).

How do you pick? I think you want to say 1000 of the potential new people are "effectively real", but which 1000 are "effectively real" in scenario (C)? Is it the 1000 at welfare ϵ? Is it the 1000 at welfare X+ϵ? Is it some mix of the two?

If you take the first route, (B) is strongly preferred, but if you take the second, then (C) would be preferred. There's ambiguity here which needs to be sorted out.
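The ambiguity can be shown numerically with a short Python sketch. The values of ϵ and X and the helper name are hypothetical, and the sketch assumes that only the 1000 people designated "effectively real" contribute to real welfare, with real welfare deciding the choice.

```python
EPS = 0.01  # hypothetical stand-in for ϵ
X = 100.0   # hypothetical stand-in for the high welfare level X


def real_change(original_loss, effectively_real_welfares):
    """Real welfare change from (A): the original person's loss plus the
    welfare of whichever newcomers are designated effectively real."""
    return -original_loss + sum(effectively_real_welfares)


# (B): 1000 newcomers at welfare X, all effectively real.
b_real = real_change(2 * EPS, [X] * 1000)

# (C), first route: the 1000 newcomers at welfare ϵ are the effectively real ones.
c_low = real_change(EPS, [EPS] * 1000)

# (C), second route: the 1000 newcomers at welfare X + ϵ are the effectively real ones.
c_high = real_change(EPS, [X + EPS] * 1000)

print(b_real > c_low)   # True: the first route prefers (B)
print(c_high > b_real)  # True: the second route prefers (C)
```

The recommendation flips depending on which 1000 people are designated, so some rule for making that designation is needed before the theory gives a definite answer here.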

"Then, supposedly no one is effectively real. But actually, I'm not sure this is a problem. More thinking will be required here to see whether I am right or wrong."

Thank you for finding and expressing my objection for me! This does seem like a fairly major problem to me.

"Sorry, but this is quite incorrect. The people in (C) would want to move to (B)."

No, they wouldn't, because the people in (B) are different to the people in (C). You can assert that you treat them the same, but you can't assert that they are the same. The (B) scenario with different people and the (B) scenario with the same people are both distinct, possible, outcomes, and your theory needs to handle them both. It can give the same answer to both, that's fine, but part of the set up of my hypothetical scenario is that the people are different.

"Isn't the very idea of reducing people to their welfare impersonal?"

Not necessarily. So-called "person affecting" theories say that an act can only be wrong if it makes things worse for someone. That's an example of a theory based on welfare which is not impersonal. Your intuitive justification for your theory seemed to have a similar flavour to this, but if we want to avoid the non-identity problem, we need to reject this appealing-sounding principle. It is possible to make things worse even though there is no one for whom it is worse. Your 'effectively real' modification does this; I just think it reduces the intuitive appeal of the argument you gave.

"Let me give a more concrete example."

Ah, I understand now. Certainly then there is ambiguity that needs to be sorted out. I'd like to say again that this is not something the original theory was designed to handle. Everything I've been saying in these comments is off the cuff rather than premeditated - it's not surprising that there are flaws in the fixes I've suggested. It's certainly not surprising that the ad hoc fixes don't solve every conceivable problem. And again, it would appear to me that there are plenty of plausible solutions. I guess really that I just need to spend some time evaluating which would be best and then tidy it up in a new post.

"No, they wouldn't, because the people in (B) are different to the people in (C). You can assert that you treat them the same, but you can't assert that they are the same. The (B) scenario with different people and the (B) scenario with the same people are both distinct, possible, outcomes, and your theory needs to handle them both. It can give the same answer to both, that's fine, but part of the set up of my hypothetical scenario is that the people are different."

Then yes, as I did say in the rather lengthy explanation I gave:

"The route of disappearing 1000 people and replacing them with 1000 new people is one of the worse routes."

If you insist that we must get rid of 1000 people and replace them with 1000 different people, then sure, (B) is worse than (C). So now I will remind myself what your objection regarding this was in an earlier comment.

I'll try explaining again briefly. With this theory, don't think of the (B),(C) etc. as populations but rather as "distributions" the status quo population could take. Thus, as I said:

"(B) is a hypothetical which may be achieved by any route. Whether the resulting people of (B) in the hypothetical are real or imaginary depends on which route you take."

When a population is not the status quo, it is simply representing a population distribution that you can get to. Whichever population is not the status quo is considered in an abstract, hypothetical sense.

Now you wish to specifically consider the case where (with status quo (C)), everyone in (B) is specified to be different to the people in (C). I stress that this is not the usual sense in which comparisons are made in the theory; it is much more specific. Again, if one insists on this, then since we have to disappear 1000 people to get to (B), (B) is worse.

Your issue with this is that: "the people in (B) would not want to move to (C), and vice versa, because that would mean they no longer exist. But your theory now gives a strong recommendation for one over the other anyway."

Now I hope the explanation is fully clear. The distribution of (B) is preferable to people in (C) (i.e. with (C) as the status quo), but if you insist that the only routes to (B) involve getting rid of most of the population and replacing them with 1000 non-identical people, then this is not preferable. When (A) is the status quo, yes, we have a strong preference for (B) over (C) because we don't have to lose 1000 people, and I don't see the problem with considering people with equal welfare who (in the status quo of (A)) are imaginary or "effectively real" as identical. In line with a person-affecting outlook, I give more priority to real people than to imaginary or effectively real people; I only respect the non-identity of real people. And just to add, viewing people as effectively real is not to say that they are really real (since they don't exist yet, even if they are mathematically expected to); it has only been a way to balance the books for forced decisions.

The outcome is still, as far as I can see, consistent with transitivity and my already-avowed rejection of an objective ordering.

Hi, Toby. One of the core arguments here, which perhaps I didn't fully illuminate, is that (I believe that) the "better than" operation is fundamentally unsuitable for population ethics.

If you are a member of A, then Z is not better than A. So Z is worse than A but only if you are a member of A. If you are a member of Z, you will find that A is not better than Z. So, sure, A is worse than Z but only if you are a member of Z. In other words, my population ethics depends on the population.

In particular, if you are a member of A, it's not relevant that the population of Z disagrees about which is better. Indeed, why would you care? The fallacy that every argument re: Repugnant Conclusion commits is assuming that we require a total ordering of populations' goodness. We don't; this is a complete red herring. Doesn't it suffice to know what is best for your particular population? Isn't that the purpose of population ethics? I argue that the Repugnant Conclusion is merely the result of an unjustified fixation on a meaningless mathematical ideal (total ordering).

I said something similar in my reply to Max Daniel's comment; I am not sure if I phrased it better here or there. If this idea was not clear in the post (or even in this reply), I would appreciate any feedback on how to make it more apparent.

I understood your rejection of the total ordering on populations, and as I say, this is an idea that others have tried to apply to this problem before.

But the approach others have tried to take is to use the lack of a precise "better than" relation to evade the logic of the repugnant conclusion arguments, while still ultimately concluding that population Z is worse than population A. If you only conclude that Z is not worse than A, and A is not worse than Z (i.e. we should be indifferent about taking actions which transform us from world A to world Z), then a lot of people would still find that repugnant!

Or are you saying that your theory tells us not to transform ourselves to world Z? Because we should only ever do anything that will make things actually better?

If so, how would your approach handle uncertainty? What probability of a world Z should we be willing to risk in order to improve a small amount of real welfare?

And there's another way in which your approach still contains some form of the repugnant conclusion. If a population stopped dealing in hypotheticals and actually started taking actions, so that these imaginary people became real, then you could imagine a population going through all the steps of the repugnant conclusion argument process, thinking they were making improvements on the status quo each time, and finding themselves ultimately ending up at Z. In fact it can happen in just two steps, if the population of B is made large enough, with small enough welfare.

I find something a bit strange about it being different when happening in reality to when happening in our heads. You could imagine people thinking

"Should we create a large population B at small positive welfare?"

"Sure, it increases positive imaginary welfare and does nothing to real welfare"

"But once we've done that, they will then be real, and so then we might want to boost their welfare at the expense of our own. We'll end up with a huge population of people with lives barely worth living, that seems quite repugnant."

"It is repugnant, we shouldn't prioritise imaginary welfare over real welfare. Those people don't exist."

"But if we create them they will exist, so then we will end up deciding to move towards world Z. We should take action now to stop ourselves being able to do that in future."

I find this situation of people being in conflict with their future selves quite strange. It seems irrational to me!

"Or are you saying that your theory tells us not to transform ourselves to world Z? Because we should only ever do anything that will make things actually better?"

Yes - and the previous description you gave is not what I intended.

"If so, how would your approach handle uncertainty? What probability of a world Z should we be willing to risk in order to improve a small amount of real welfare?"

This is a reasonable question, but I do not think this is a major issue so I will not necessarily answer it now.

"And there's another way in which your approach still contains some form of the repugnant conclusion. If a population stopped dealing in hypotheticals and actually started taking actions, so that these imaginary people became real, then you could imagine a population going through all the steps of the repugnant conclusion argument process, thinking they were making improvements on the status quo each time, and finding themselves ultimately ending up at Z. In fact it can happen in just two steps, if the population of B is made large enough, with small enough welfare."

This is true, and I noticed this myself. However, actually, this comes from the assumption that more net imaginary welfare is always a good thing, which was one of the "WLOG" assumptions I made not needed for the refutation of the Repugnant Conclusion. If we instead take an averaging or more egalitarian approach with imaginary welfare, I think the problem doesn't have to appear.

For instance, suppose we now stipulate that any decision (given the constraints on real welfare) in which the average welfare of the imaginary population is at least equal to the average welfare of the real population is better than any decision without this property. Then the problem is gone. (Remark: we still do need the real/imaginary divide here to avoid the Repugnant Conclusion.)

This may seem rather ad hoc, and it is, but it could be framed as, "A priority is that the average welfare of future populations is at least as good as it is now", which seems reasonable.
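As a rough illustration of how that stipulation would change a choice, here is a small sketch. The function `rank_key`, the two options and all the numbers are hypothetical, and I am assuming that "the average of the real population" means the status quo average and that every newly created person in an option has the same welfare.

```python
from fractions import Fraction

def rank_key(real_change, new_count, new_welfare, real_average, average_rule):
    """Sort key for one option; larger keys are preferred.

    real_change:  net change in real welfare
    new_count:    number of imaginary people created (all at the same welfare)
    new_welfare:  welfare of each created person (assumed positive)
    """
    imaginary_total = new_count * new_welfare
    if average_rule:
        # Options whose newcomers match or beat the current average rank first.
        meets_average = new_welfare >= real_average
        return (real_change, meets_average, imaginary_total)
    return (real_change, imaginary_total)

REAL_AVERAGE = Fraction(5)  # hypothetical average welfare of the real population

# Two hypothetical options, neither of which touches real welfare.
huge_low = (Fraction(0), 1_000_000, Fraction(1, 100))  # many lives barely worth living
small_high = (Fraction(0), 10, Fraction(6))            # few lives above the current average

for rule in (False, True):
    best = max([huge_low, small_high],
               key=lambda o: rank_key(*o, REAL_AVERAGE, rule))
    label = "average rule on" if rule else "average rule off"
    print(label, "->", best[1], "new people")
```

Without the stipulation the huge low-welfare population wins on total imaginary welfare; with it, the smaller population at or above the current average is preferred.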

[Edit: actually, I think this doesn't entirely work in that if you are forced to pick between two populations, as in your other example, you may get the same scenario as you describe.

Edit 2: Equally, if you are "forced" to make a decision, then perhaps some of those people should be considered real in a sense, since people are definitely coming into existence one way or another. I think it's definitely important to note that the idea was designed to work for unforced decisions, so I reckon it's likely that assuming "forced populations" are imaginary is not the correct generalisation.]

As I say, I had noticed this particular issue myself (when I first wrote this post, in fact). I don't deny that the construction, in its current state, is flawed. However, to me, these flaws seem generally less severe and more tractable (Would you disagree? Just curious.) - and so, I haven't spent much time thinking about them.

(Even the "Axiom of Comparison", which I say is the most important part of the construction, may not be exactly the right approach. But I believe it's on the right lines.)