Content warning: I use some four-letter words, and there are non-detailed descriptions of sexual violence and abuse when giving examples of potential misuse of generative AI.

“So you’re telling me we can’t believe anything we’re shown anymore?” I’m asking. “That everything is altered? That everything’s a lie? That everyone will believe this?” “That’s a fact,” Palakon says. “So what’s true, then?” I cry out. “Nothing, Victor,” Palakon says. “There are different truths.”

Bret Easton Ellis – Glamorama, 1998

Photography has played a major role in allowing us to construct an understanding of the world whose general outlines are shared by billions of people. Functionally, photographic images extend our senses and tell us about the world outside of our immediate here and now. They are among the most important epistemological tools of humanity. Even today, after the transition to digital has made image manipulation easier and more widespread, they have not lost this role in our everyday lives. If we see a photograph or a video of something, we generally still believe in its factuality, at least in the absence of reasons to doubt its veracity. With the advent of generative AI, this is about to change – sooner, more drastically and, given the abysmal state of our existing information landscape, with more dramatic consequences than we might intuit.

This, to me, seems to be an urgent AI safety concern that is simply not talked about enough. One reason is that other scenarios like paperclip optimizers, biases, mass unemployment and biohazards are dominating the safety discourse. We only have so much attention to give. Another reason is that photographic images are so deeply engrained in our everyday normal life. They are just part of our world and we take it for granted that we have the ability to see across oceans as well as through time. It is hard to imagine that very soon all of us, collectively, might have this ability severely diminished; or what the consequences of that might be.

I call this concern urgent, because we do not need to achieve AGI to get there. In fact, it seems likely no further large jumps in capabilities are needed at all. All that seems to be missing are smaller refinements of existing techniques. In some sense, the problems have already begun. I think of this as a safety issue with high p-doom and short timelines. Or in other words: It's likely to go bad, and soon.

Too ominous? Maybe I'm overdoing it? I'm going to try and convince you otherwise! Just before I start, one more thing: When I talk about photography or photographic images, I am also talking about film. Even more so, most of what is being said here could also be said about other kinds of technical recordings and their generated counterparts, e. g. audio, but I'm going to be busy enough looking at photographs (and film), so "photographic images" are going to be at the center of this. All good? Yes? Then let's go.

The Importance of Photographic Images, or: We Are Cyborgs Already

My whole argument rests on the importance photographic images have for us. So let's think about that for a moment. The problem is: Grasping the importance of images for the way we connect to the world is actually quite difficult. It's a fish-water problem, or in the words of McLuhan (the patron saint of everyone who thinks about media):

One thing about which fish know exactly nothing is water—they do not know that the water is wet because they have no experience of dry. Once immersed in the media, despite all its images and sounds and words, how can we know what it is doing to us?

Let's try to look at the water by looking at me. I'm 44 and German. I have bumbled across Europe a couple of times, lived in another country for three years and crossed the Atlantic Ocean more than once. Still, what I have seen of the world with my own eyes is insignificant compared to what I have seen by way of photographic images. Images showed me countries in the Middle East, Montana and Texas, Japan and China, New York, nebulae in the Milky Way, the Eiffel Tower, cottages in the English countryside, the Grand Canyon and the peaks of the Himalayas. And that's just places. I must have seen millions of people from all over the world, from all kinds of eras. Faces of so many ethnicities, scenes from their lives. Clothes, items, the general vibe of towns and villages. The savannah. Lions and hyenas. The ocean's depths. Whales, sharks and schools of fish. I saw a single man stop a row of tanks on Tiananmen Square, bombs falling on Baghdad, the Beatles crossing a road. I saw people fuck. I saw people get killed. I saw a birth. I saw rockets lifting off and entering orbit. I saw the Challenger explode. I saw Reagan asking Gorbachev to tear down that wall. I saw Phan Thi Kim Phuc, the Napalm Girl, fleeing from her village. I saw the Twin Towers coming down and the prisoners in Abu Ghraib. I witnessed George Floyd die. I know these things happened. I saw them. And even more importantly: I can be fairly certain you saw this too. All of it. For nearly two hundred years, photography has played a key role in ensuring that billions of people have been able to agree on at least some ground truths, enabling us to live in the same world and share one reality.

Sure, photography is not the only medium telling us about faraway places and times long gone, and without accompanying explanations, the solitary man on Tiananmen Square might have been a tank inspector. Or the driver's spouse. Still, photography presents information as fact in a way a written report or hearsay could never hope to achieve. No written account of Phan Thi Kim Phuc's story could have had the impact her image had. It's harrowing and became one of the most iconic images of the horrors of war in the 20th century. This is in part due to its artistic or aesthetic qualities. It makes you feel something. It triggers empathy. But artistic qualities alone are not the whole story. No drawing would have achieved its effect. Its impact was only possible because it is a photograph. THAT makes it evidence. One sees it and knows: This is a slice of time. A fact. This happened! Of course not every photograph is always believed by everyone. And of course there is manipulation and deceit. But that's beside the point. In the absence of specific reasons to think otherwise and in the overwhelming majority of cases, photographic images are taken in and processed as evidence:

Fig. 1 – This happened!

Let's take a step back. So far I have given you a lot of pretty dramatic examples. Rockets. The Milky Way. War photography. But the power of images does not solely rely on their ability to capture dramatic or even just important moments. There is a lot of banal everyday imagery that nevertheless tells you a lot about the world, even if it just reaffirms what the world normally looks like. My point is this: Today, in the age of the internet and digital distribution, every one of us lives in a steady stream of images that show us what the world beyond our immediate here and now looks like. As beings, we are placed and integrated in an information environment that is constantly providing mediated information from beyond our individual cone of perception. In a way, we are already cyborgs.

If this is true, I think it follows that it is important to better understand how our relationship with images works, and to be very wary of any development that might bring about deep and lasting changes to it.

Crash Course on the Epistemics/Semiotics of Photography

"The photograph is literally an emanation of the referent. From a real body, which was there, proceed radiations which ultimately touch me, who am here; the duration of the transmission is insignificant; the photograph of the missing being, as Sontag says, will touch me like the delayed rays of a star."

Roland Barthes – Camera Lucida, 1979

Ok, we have reminded ourselves that photography is kind of a big deal. Let's talk about the why and how. For this we are going to take a brief look at the semiotics of photography and try to see why these images possess such a high epistemic value. This is an endeavor that could fill a long and fruitful academic career, but as this is not supposed to be a paper on epistemology or semiotics, I'm going to be a bit slappydaisy here and develop these thoughts just to the point needed for a framework we can use to look at generative image creation and its possible impact.

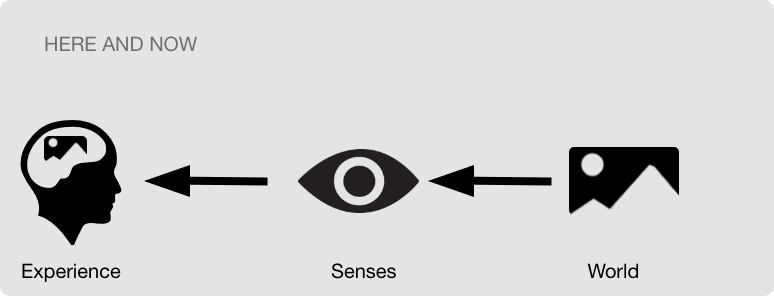

Let's start with a simple model of perceiving the world. This is us, looking at things:

Fig. 2 – Epistemic Everyday Oversimplification

Something from the world reaches our senses, in this case light reflected from our surroundings falling into our eyes, and we form an image of our surroundings in our mind. Yes, this is overly simple, but for our purposes we can ignore further complexities. What we are interested in is not the empirical question of how our brain does visual cognition and constructs the world, but how we perceive our perception in normal everyday life; and normally we assume that the image we see through our senses is a fair representation and corresponds well to the real world. In fact, one could argue that we usually just assume the image in our mind IS the world and we do not reflect on its mediated, constructed and representative nature.

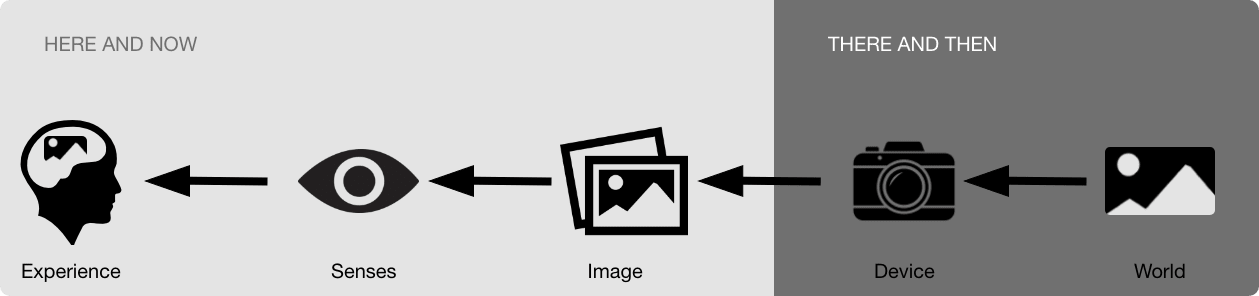

Next, let's look at us looking at photographic images:

Fig. 3 – Epistemic Everyday Oversimplification for Photographic Images

Something from the world interacts with a device, light falling into a lens, which leaves an imprint in a medium, creating a photograph that we can later perceive with our senses, eliciting the formation of an image of the world in our mind that includes the photographic image. A couple of things are of note here:

One: The image can be taken when we are not present and it then exists through time. It transports a slice of THERE AND THEN into the HERE AND NOW. We are used to this to the point that it sounds trivial, but holy shit ... technology is amazing! (Live broadcast also adds THERE AND NOW to the mix, but that isn't important for what we are interested in here, so let's not dive into it.)

Two: The resulting image, the photograph, is a result of the world interacting with the world. It's right in the name photography: Light (phōtos) is doing the drawing (graphé). As such, the belief in photography is based on a scientific world view, though my guess is that photography also played a significant role in engraining scientific thinking into our understanding of the world. Be that as it may, what is important to us here is that we have reasons to see the photograph as the result of natural processes we cannot manipulate in a significant manner. We can put a filter on a lens and change an image's hue; we cannot put a filter on a lens to make it look as if you are sleeping with your neighbour behind your spouse's back.

Three: We are very good at differentiating photographs from other images (e. g. paintings).

Four: Up until very recently, it used to be true that we did not know of another method to produce a photograph other than taking a photograph. Yes, there are spectacular works of art that are "photorealistic", but this term refers to an effect where the picture allows us to suspend disbelief for a moment and pretend it is "like a photograph". We might marvel at the skill involved, but when it counts, we can tell the difference (e. g. in court). No matter how good the painting, if it is the only proof of you "being" with your neighbour, you should be fine.

Five: As in the case of direct perception of the HERE AND NOW through our senses, we usually assume that the image of the world the photograph elicits in our mind just WAS the world in the THERE AND THEN, meaning we usually do not reflect on light engraving a representation on a medium that later bounces more light into our eyes, triggering complicated cognitive functions whose result we interpret as an image of how the world was. We just assume we are looking at the world as it was. Doubt and reflection only enter the picture if we have a reason for it.

Let's Talk Manipulation: Ways in which Photographs Were Never Reliable

Fig. 4 – Stalin didn't just kill people, he tried to have them "unpersoned", deleted from all records. Ironically, you wouldn't be looking at these pictures now if it weren't for his attempt at rewriting history.

So far I have talked a lot about how reliable photography is. That is, of course, horseshit. Manipulation of photographs is basically as old as photography itself. The incentive is clear: Photography holds a high evidentiary value for us, so if you manage to make the pictures show (or suggest) what you want to show (or suggest), you have a powerful tool to convince people of your version of events or your perspective on things. Correspondingly, our trust in photographic images was never complete. So let's take a moment to reflect on ways photography has always been unreliable and open to manipulation. It will prepare us to start talking about digital photography, which will be the last stepping stone needed to discuss AI-driven image generation.

The following things all made photographs unreliable representations of the world from the very beginning:

a) "Mistakes" of our cognitive-perceptual machine, for example optical illusions or biases influencing the way we interpret images.

b) Deviations introduced by the camera or the medium on which the light is engraved, for example the way film was calibrated to work well on "white" skin, but didn't produce realistic representations of darker skin tones.

c) Artistic means to achieve propagandistic effects, for example Leni Riefenstahl's aesthetics in Nazi propaganda.

d) Intentional or unintentional choice of subject and/or frame, for example photographing violence at a political rally, but focusing on the deeds of one group over the other.

e) Presenting a real image but lying about its context, for example when images of past atrocities are repurposed and shared as proof of atrocities in current conflicts.

f) Staged realities, for example reporters manipulating a scene to create additional drama or NASA faking the moon landing (just kidding!).

g) Direct manipulation of the image itself, for example Stalin having people killed and then removed from photographs.

If we look back up to Fig. 3, our basic semiotic model of photography, we can see that all these different ways to commit mistakes, manipulate or outright lie and deceive do influence the veracity of the photograph at different points in the chain from "world" to "image of the world in our mind". For example: Staged realities produce, in a way, honest photographs of a lie; material unable to represent dark skin tones skews the result on the level of the device; image manipulation interferes with the image itself; and so on. Also: Every kind of mistake or deception requires different techniques and skill sets, involves a different degree of effort, produces different results and requires different methods of detection.

All of this raises the question: If there are so many ways to manipulate and lie with photography, why do we put so much trust in it? I can see a variety of candidates for a good explanation:

a) Difficulty: Most if not all of these ways to deceive are not trivial to utilize successfully. They require effort and some degree of expert knowledge.

b) Probability: The vast majority of photographs are not "fake".

c) Utility: As long as the probabilities are reasonably good, our belief in photographs is useful and usually works well for us.

d) Symbolic moments: Many historic moments were documented by photography or even became important historic moments in the first place because they were photographed. Iconic moments like the "Napalm Girl" fleeing her village or the "Tankman" stopping tanks on Tiananmen Square also reinforce the belief in photography itself. Billions of us came together and collectively agreed that those images represented something from reality.

e) Social conventions: Taking photographs as accurate representations is the normal attitude shared by most people and it is the attitude children grow up with. Once established, this is a self-perpetuating cycle.

f) Trust in Sources: Especially when the topic is important, photography is used by trusted institutions like newspapers or court systems. This is probably a synergistic effect: Photography benefitting from its connection to trusted institutions and the institutions benefitting from using a trusted medium.

g) Accountability: For photographers working in a documentary capacity (i.e. journalists, not artists), being caught faking images would likely be the end of their career. The social norms around this are pretty strong and disincentivize fakes. We know this.

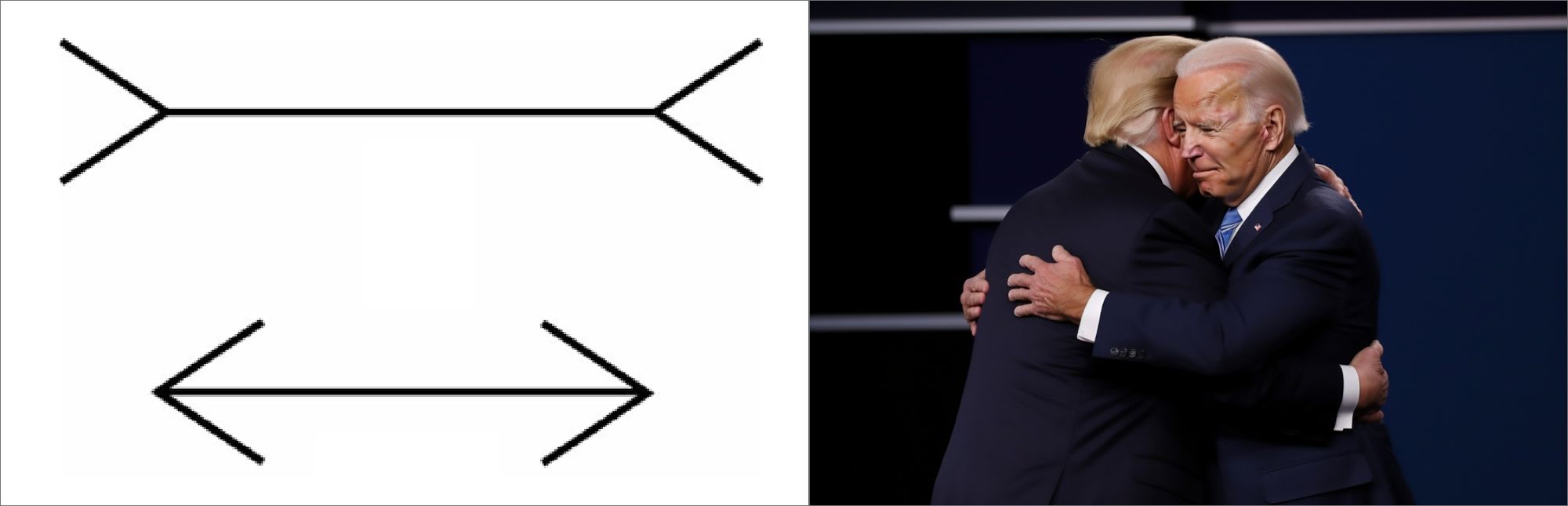

h) Personal experience: Long before digital photography and smartphones became a thing, photography was already ubiquitous in our lives. For more than 100 years, humans have been used to being photographed and taking photographs themselves. The reliability of the results is firsthand knowledge, not something someone told us about.

i) Biology: The processes our brain uses to construct reality from our visual system's input are neither conscious nor under our control. My guess is that photographic images tickle some neurological pathway that says "Yeah, this is real". Just as with optical illusions, we can override this (to an extent) by reasoning about what we see, but the pathway gets tickled nonetheless (if I'm right about this). After all, the lines in the image below are not different in length and Biden (probably) never hugged Trump, but it still looks like it, doesn't it?

Fig. 5

Digital Photography: From Chemistry to Information

Photography, as we have known it, is both ending and enlarging, with an evolving medium hidden inside it as in a Trojan horse, camouflaged, for the moment, as if it were nearly identical: its doppelgänger, only better.

Fred Ritchin – After Photography, 2010

At first glance, not much changes with the switch to digital photography. The core epistemology is still there. Light is reflected from an object in the world, falls into the device, triggering a reaction recording the event, resulting in an image that we can then see, showing us in the HERE AND NOW what happened in the THERE AND THEN. What has changed is the medium the reaction takes place in and how the resulting image is stored. We move from a chemical reaction and film to a light-sensitive receptor, electronic signals and pixel data stored on some kind of memory device. The image isn't a thing I hold in my hand anymore, it's information displayed on a screen. And information can be altered a lot more easily than the chemical compounds making up analog photographs.

In other words: The ability to manipulate every pixel of digital photographic images doesn't change the fact that these images claim to be representations of something in the world, it "just" provides new ways of lying. A lot has been written about how the digitization of images undermines their trustworthiness, but here I'm more interested in a different aspect: Usually, we just don't care that much!

If we see a photographic image, we usually still assume it shows something from the world. Yes, we are aware that color balances are adjusted. We know shapes are being remodelled (biceps grow, faces smooth out, fat vanishes etc.). We know images lie. Still, we usually do not distrust digital photographic images on a fundamental level. If anything, we still put too much trust in them, for example when allowing them to shape and warp our ideas of beauty to a ridiculous degree. Why is that?

Well, let's quickly look at all the factors that made us believe in analog photographic images and at how they changed with the proliferation of digital photography.

a) Difficulty: It is still not trivial to produce credible fakes. It still requires expert knowledge and the results still show traces of manipulation. If a private detective can show your spouse a video of you getting it on with your neighbour, you are still in trouble. You won't get out of that by pointing out the Kardashians are photoshopping their butts. When Iran tries to multiply the number of rockets fired in a photograph, we still catch them.

b) Probability: The vast majority of photographic images still show something that happened in the world.

c) Utility: Our belief in photographic images is still useful and usually works well for us.

d) Symbolic moments: We haven't had any really big symbolic moment undermining the trust in the epistemic value of photographic images (yet). For example: The last U.S. election wasn't about an image of Biden/Trump doing something scandalous and a subsequent weeklong shitshow of experts trying to decipher if the image was fake or not.

e) Social conventions: Taking photographs as accurate representations is the normal attitude shared by most people and still the normal way to approach photographic images in everyday life.

f) Trust in Sources: Trust in institutions, especially media, has definitely waned, but not as a result of diminished trust in photography after the switch to digital. More about that in the section "Interlude: Epistemic Crises Before Generative AI".

g) Accountability: Being caught faking an image is still a high risk for anyone working in a documentary capacity. But: Social media is filling the internet with news-like content and accompanying images that no one is taking responsibility for.

h) Personal Experience: Photography is more ubiquitous in our lives than ever. We are photographed and we take photographs ALL THE TIME now. We are also familiar with the ways to manipulate digital images and their limitations.

i) Biology: Digital images still tickle our brains into thinking "Yeah, this is real".

So yes, there are some shifts, but overall most of the reasons we put trust in photography are still in place and largely unchanged even after the switch to digital and the proliferation of manipulation techniques. We still use photographic images to learn about the world beyond our own individual cone of perception.

Interlude: Epistemic Crises Before Generative AI

You’re saying it’s a falsehood, and they’re giving ... Sean Spicer, our press secretary, gave alternative facts to that.

Kellyanne Conway – Counselor to President Trump, January 2017

When I started to think about this topic, I feared that generative AI would worsen an epistemic crisis that is already going on. When I thought about it more, I realized there is a better description to be had for the problem: There are two epistemic crises already going on (and interacting with each other), and generative AI will be a third, entirely new one, whose impact on us will be shaped by the ones we are currently going through. So, before we (finally) dive into generative AI, what are these two distinct epistemic crises I'm referring to?

Malleable Reality

This crisis was already alluded to in the last section about digital photography. Photographic images became easier to alter, they are extremely malleable, and still we take them as reality. It's not about a loss of belief, but a misplacement of belief. Digital media took over from analog photography relatively quickly and without much of a hitch, piggybacking on social norms built over more than one and a half centuries of trusting analog photography and still tickling our brains in the same "Hey, this is true"-kind of way. Consequences are probably most visible in the warping of our beauty standards – we now live in a world where video conferencing software gives you a slider to touch up your skin in real time and "beauty" influencers are quickly adding ever more sophisticated filters to their arsenal of makeup and plastic surgery. But it's not just about "beauty"; most pictures undergo heavy editing before we get to see them. Blood likely looks a lot redder today than it used to. Yes, photography still mostly shows us what was going on in the THERE AND THEN, but most images are changed and distorted, often in a way to make them stand out and perform in a hyper-pitched war for attention. We are living in a strange world, completely engulfed in virtual caricatures of what the world would look like through human eyes. I mean ... who really looks like Kim Kardashian or (The) Rock Johnson? Exactly. No one. Not even them.

Post Truth

The second ongoing epistemic crisis is about what has so charmingly been dubbed "Post Truth". It's characterized by ever-declining trust in institutions (the mainstream), an epistemic splintering of worlds along ideological lines and the rise of conspiratorial thinking. Educational institutions, media, scientists, politicians and parties – none of them can be trusted, so we need to go out and search for the truth ourselves. As with the first, this crisis is driven by multiple factors we cannot really pick apart here. The usual suspects are social media, recommendation algorithms and the omnipresence of the internet – but one could find more, from the winner-takes-all voting system in the U.S. to partisan dynamics to the influence of Russian propaganda to the very real corruption running through our governments. But: I don't think this crisis is driven by the malleability of digital photography. Not only have we just concluded that our trust in digital photography is, if anything, still rather unreasonably high, we can also look at what happened over the last couple of years and notice a lack of high-impact scandals involving manipulated photographic images or claims of images being fake. For example, image manipulation wasn't a factor in the last presidential election, and, to put it mildly, U.S. politics is THE hotbed of the "Post Truth" epistemic crisis. If image manipulation is not a driver there, it's not much of a driver (yet).

These epistemic crises are the context in which the next crisis, the one related to generative AI, will play out. This means the epistemic changes introduced by image generation are going to interact with an already fraught information environment. And I don't think that's particularly great news for us.

OK, the stage is set, let's talk generative AI.

From Digital Photography to Image Generation

Such would be the successive phases of the image: it is the reflection of a profound reality; it masks and denatures a profound reality; it masks the absence of a profound reality; it has no relation to any reality whatsoever; it is its own pure simulacrum.

Jean Baudrillard – Simulacra and Simulation, 1981

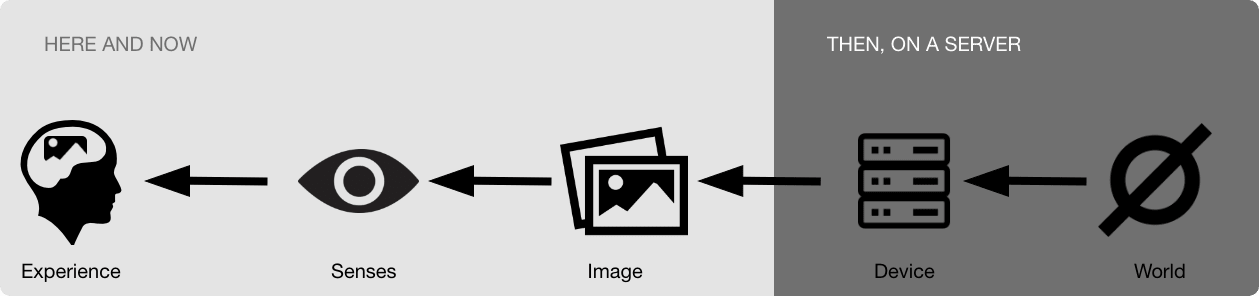

Let's start by comparing the semiotics or the epistemology of generative images to the semiotics of photographic images. With digital photography we did not need to update our chart; with generative AI, we do.

Fig. 6 – Epistemics of Generated Images

The change is dramatic. With photography, the image was the result of an interaction between device and world. As a product of that interaction, it represented something real about the moment it was created. With generative AI, there is no referent. The image doesn't represent anything in the world at all. It was "dreamt up" at some point in time, somewhere on some servers. If it represents anything, it is the interaction between the prompt, the model's architecture, its training data and its training process. So maybe we get a vague notion about the intention behind the prompt. Or, if we can look at a large number of image-prompt pairs produced by the same model, we might infer something about biases present in the model (e. g. a preference for sexually attractive people), but the images do not show us anything that has actually happened.

That alone wouldn't be a problem (or at least not an epistemic one), but generative AI undermines an observation we made earlier – that we are very good at differentiating photographs from other images. Right now, humanity is locked in an adversarial training battle with generative AI. We do get better at spotting AI generated images, and AI generated images get better at fooling us.

Fig. 7 – An image of Ronald Reagan meeting Afghan mujahideen in the White House. Is it real? Well, the hands look pretty good, so maybe yes? Try to find out!

I would posit that we are approaching a moment where even experts and expert systems will not be able to tell the difference between a photographic image and a generated image. I would also posit that, as costs for AI fall, generated images are going to be at least as ubiquitous as photographic images. I think both of these assumptions are justified. Generative AI is already very good at creating photorealistic results, and AI as a technology is currently pushed forward at the highest possible speed. There is an endless influx of extremely clever people entering the field and an endless army of rich people and organizations willing to give them all the billions of dollars they need to produce something slightly better than last week's best thing. Will we reach Artificial General Intelligence or even Artificial Super Intelligence? Possibly, though it also seems possible the hype train will run out of steam before we do. Will we see dramatically enhanced results in image generation before that? To me, that seems almost guaranteed.

So … what about the consequences?

Epistemic Collapse. The World as Mirage.

All we have to believe is our senses: the tools we use to perceive the world, our sight, our touch, our memory. If they lie to us, then nothing can be trusted.

Neil Gaiman – American Gods, 2001

In a way, most of technology can be seen as an extension of our biological bodies and their functions. Pick up a hammer and it becomes a part of you. Now, you can drive nails into boards (and smash skulls and stuff). Put on a sweater and you can tolerate much lower temperatures. And so on. Photography extends our most important sense, vision, through space and time. There have always been problems, there has always been deceit, but focusing on that would mean focusing on the exceptions. In general, photographic images represented the world not only as it is now, but also as it was then. It's a superpower. Now this extended vision is about to fill up with generated images that show us nothing of the world, but that we cannot differentiate from photographic images. Not long from now, when we try to use our superpower, the incoming stream of information will likely be full of hallucinations, or, more precisely, fabrications. And if we cannot tell fabrications from representations, and the volume of fabrications rises high enough, our technologically enhanced vision becomes useless. We can no longer rely on it to learn about the world. If technology over the last decades has turned the world into a village (yes, McLuhan again), this development might turn it into a mirage: everything from outside our here and now might turn into a stream of colorful images with little or no epistemic value.

If we follow this thought to its end, the world we can see via photographic images collapses and we become limited to our own cones of perception. And it is not just our individual world that collapses. The factuality of photographic images has played an important role in our ability as a species to construct and share a common conception of what is real. So it seems our socially shared and collectively inhabited world is also at risk of collapse. Are we doomed to turn into a collection of disconnected individuals, or small networks of individuals, blindly scrambling around, only ever trusting what we can see with our own eyes?

Admittedly, at first glance this does sound a bit overdramatic. Let's, for now, see total epistemic collapse as an extreme and theoretical endpoint, unlikely to come true in its purest form, but useful as a reference point.

Trust and Trustworthiness

Total epistemic collapse describes a situation in which we are overwhelmed by fabrications and do not trust any image we haven't taken ourselves. Let's do a little thought experiment to explore that notion a bit further. The stage is set three years into the future. Generated images are ubiquitous and indistinguishable from photographic images. You sit in your living room, together with the one person you trust most in the world. This person takes out a mobile phone and shows you images taken on a vacation. The images appear normal and show beautiful sunny beaches and interesting animals in a tropical rainforest. Would you believe that person is showing you real images from their trip and not generated images? Probably yes. I did ask you to imagine the person you trust most in the whole world.

What if that person showed you a video clip of a dragon attacking a village and were REALLY insistent that this REALLY happened? Unless your belief system includes the possibility of dragons, this will put what you believe you know about the world in conflict with the trust you put in that person.

Let's restart. We are still three years into the future. Generated images are ubiquitous and appear perfectly real. You are alone in front of your computer. The internet explodes with dozens of video clips showing a dragon attack on a small village somewhere in the Caribbean. Shortly after, video interviews appear on One America News, in which tourists who witnessed and filmed the attack talk about it. Two minutes later CNN goes live. They assume this to be some kind of hoax (reminiscent of the Godzilla attack on Tokyo six months earlier), but as it generates clicks and views, they run with the story. About 60 minutes in, and not to your surprise, CNN has a reporter on the ground and it is confirmed that there is indeed no dragon. The attack, the gory images of its aftermath, the witnesses talking about it – it's all generated by AI. One America News retracts the story one hour later, apologizes and blames the radical left for tricking them. Two days later, the person you trust most in the world visits you. They are nervous and beside themselves! Once inside, they show you the video of the dragon attack and swear they recorded it themselves. What now?

OK, maybe the dragon is a bit ridiculous. What about aliens? Or terrorists? What if it isn't the person you trust most in the world, but your crazy liberal/conservative relative who is convinced the moon landing was fake? What if One America News and CNN swapped places in the story?

I think it is safe to assume a couple of things: a) We will not distrust all images. Neither will we just trust all "photographic" images. We will trust some images more than others. b) Which images we trust depends on the answer to the question: Is this image a representation or a fabrication? And to answer that question, we will need to rely on factors outside of the image itself. c) How good we are, collectively, at telling representation from fabrication will determine how well we can deal with the proliferation of generated images.

So, how do we collectively get better at telling representation from fabrication? How do we trust the right images?

Old Mechanisms of Trust

Let's first look at the reasons we have trusted photographic images so far. That should give us an idea of how generative AI will impact our trust in photographic images, and maybe also of how to better discern real photographic images from generated ones.

a) Difficulty: Our baseline assumption is that creating believable "photographic" images with generative AI is about to become easy and widely available. While digital photography made it easier to manipulate images, the process still required some expert knowledge. Generative AI makes this trivial, removing all prerequisites other than very basic computer and writing skills. This is the crux of the matter.

b) Probability: My prediction is that images created by generative AI are going to be ubiquitous and will represent a large share of all images that appear to be photographic. From Reddit users trying to weird each other out, to influencers spicing up their vacation pics, to full-blown disinformation campaigns by bad actors like Russia or partisan news networks – my guess is that we are going to drown in generated images soon. With digital images, we could still kind of bet that most images show something that represents a reality, but this is about to change.

... And I haven't even mentioned porn yet!

c) Utility: The utility of generally accepting "photographic" images as genuine is directly dependent on the likelihood of that being correct. So that's going to go down as well.

d) Symbolic moments: Sooner or later we are going to have symbolic moments undermining the trustworthiness of "photographic" images. By this I mean some form of scandal or other highly visible moment of intense public attention revolving around images and our shared inability to decide whether they are real. These will likely take the shape of an image or a video showing a famous person or a member of an institution doing something horrible, followed by that person or institution denying the allegation and claiming the image/video was generated by AI. We have already seen a similar dynamic in the aftermath of Hamas's attack on Israel, where the world suddenly had to discuss whether images of the burned bodies of children were AI-generated or not. While that example is horrible, I think it's fair to say that its epistemic impact was still relatively low. The Hamas-Israel story is not ABOUT generated images. My prediction is that this will change once the next U.S. presidential election gets into full swing, at the latest. If I'm right about this, that is also the moment the epistemic crisis really gets going. The proximity of the upcoming election is one of the reasons I think we are operating on very short timelines.

e) Social conventions: Our current social conventions about trusting "photographic" images are likely to change quickly once generated images become more widespread.

f) Trust in Sources: If I believe that whoever shows me an image tells the truth about what they know about the image, I can put the same level of trust in the image as in whoever showed it to me. This makes trust in, and the trustworthiness of, institutions, especially media, one of the important factors in preventing epistemic collapse. If we had media that never lied, and if we always believed what we were told, we'd be fine. We'll come back to that as well.

g) Accountability: The higher the cost of presenting generated images as photographic images and the higher the probability of being caught, the more we disincentivize that kind of behavior. This can have a direct impact on most of the other factors listed here; for example, probability (fewer fakes), symbolic moments (we caught the cheating journalist and made an example of them) or trust in sources (we assume media would be disincentivized from cheating). I want to stress that I don't think this is a silver bullet, though. The threat of punishment alone has never prevented misdeeds – and there is also the question of who does the controlling and who dishes out the punishment.

h) Personal Experience: The everyday practice of photography increased our trust in photographic images; the everyday practice of generative AI will dismantle it. We will be immersed in fake imagery, and most of us will also be involved in its production. News stories relying on images will be called into question more and more often. Reddit will become increasingly weird and even more unreliable than it is today. I also think we are not yet paying enough attention to what deepfake porn will mean for all of us. In just a few years, it will mostly be impossible to get through school without being confronted with deepfake porn starring yourself, especially for girls and women. The same is true for anyone doing anything in public – from politicians to YouTubers to pop stars – if you are female, people will put you in porn! And yes, it's also going to happen to men, probably often enough for them to feel threatened by the possibility, but it absolutely is going to happen to the vast majority of women doing anything public. In other words: being visually stripped naked and abused in whatever way the abuser wants to imagine is going to be a very real experience for a large percentage of the population. I think there is no way this isn't going to impact the way we approach photographic images in general. This is going to become VERY intimate soon, either for you or someone close to you.

i) Biology: Currently we are locked in some kind of adversarial battle with image generators: we get better at detecting AI images, and AI networks get better at fooling us. The whole premise of this article is that AI will soon get good enough to reliably fool us and tickle our brains in that particular "real" way only photography could tickle us before.

Looking at all of these factors, I think it's pretty safe to say trust in images is going to erode quickly and soon. Which maybe isn't a bad thing. After all, these factors are mostly not about why photographic images are trustworthy, but about why we trust them despite the possibility of manipulation. With the switch to digital, one could argue that a reckoning about the trustworthiness of images is overdue anyway. As for the degree to which these factors can help us decide which images to trust over others, only two seem to be really helpful:

- Trust in Sources: If we could trust the sources of all images, we would know which images to trust. As humanity is not going to stop lying, let's rephrase: the more justified our trust in our sources, the more justified our belief in photographic images.

- Accountability: The more we manage to disincentivize presenting generated images as genuine photographic images, the more reasonable it is to trust our sources, and the more justified our belief in photographic images.

The problem is: trusted sources and accountability might be able to help, but they are just not good enough. Photography had the power to show us something as TRUTH, even if we did not know whether we could trust the source. Often, that was the point. And accountability has never prevented all crime. At best, it is a deterrent for some. So, what else can we do?

New Mechanisms of Trust

When I started writing about this topic, I was pretty pessimistic. I expected that my attempt at a solution would be something along the lines of "The media needs to be super honest with us to not lose trust". Which, admittedly, is a really weak solution. I was basically resigned to photographic images losing their character as evidence, and to there not being much we can do about it. But a week ago, Leica released a new camera that confirms the authenticity of a photo at the moment of capture, in cooperation with the Content Authenticity Initiative (CAI).

This is extremely good news. The way it works is that an identifying fingerprint of the image is created (likely a "perceptual hash", but I'm not sure) and shared with the CAI at the moment of creation, where it is stored in a database. If the image shows up somewhere, the fingerprint can be reconstructed and checked against the fingerprint in the database. If the fingerprints match, the image is confirmed as real. Crucially, the fingerprint can be reconstructed from the image, but the image cannot be reconstructed from the fingerprint (the hash isn't reversible); otherwise privacy would be a massive concern.
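To make the flow above a little more concrete, here is a minimal Python sketch under loose assumptions. It is not how Leica or the CAI actually do it: the C2PA specification works with cryptographically signed metadata ("manifests") bound to the file, not with a simple lookup table. The sketch only mirrors the steps as described here: compute a fingerprint at capture, store it without the image, then recompute and compare later. It contrasts an exact cryptographic hash (SHA-256), which changes completely if a single byte changes, with a toy perceptual "average hash", which tolerates small changes. `FingerprintRegistry`, `average_hash` and the `max_distance` tolerance are hypothetical names chosen for illustration.

```python
import hashlib


def exact_fingerprint(image_bytes: bytes) -> str:
    """Cryptographic hash: changing even one byte (e.g. by re-saving) yields a new value."""
    return hashlib.sha256(image_bytes).hexdigest()


def average_hash(pixels: list[list[int]]) -> int:
    """Toy perceptual hash ("aHash") over a small grayscale grid, e.g. 8x8.

    Each bit records whether a pixel is brighter than the grid's mean, so small
    global changes such as a uniform brightness shift leave the bits intact.
    The hash keeps far less information than the image, so it cannot be inverted.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


class FingerprintRegistry:
    """Hypothetical stand-in for the database: it stores hashes only, never images."""

    def __init__(self, max_distance: int = 4):
        # Tolerance in bits before two hashes count as different images (an assumption).
        self.max_distance = max_distance
        self._hashes: list[int] = []

    def register(self, pixels: list[list[int]]) -> int:
        """Called once, 'at the moment of capture'."""
        h = average_hash(pixels)
        self._hashes.append(h)
        return h

    def matches(self, pixels: list[list[int]]) -> bool:
        """Recompute the fingerprint of an image found 'in the wild' and compare."""
        h = average_hash(pixels)
        return any(hamming(h, known) <= self.max_distance for known in self._hashes)
```

For instance, with `max_distance=1`, registering the 2x2 grid `[[10, 200], [30, 220]]` and then checking a uniformly brightened copy `[[15, 205], [35, 225]]` yields a match, while the mirrored grid `[[200, 10], [220, 30]]` does not. The exact SHA-256 fingerprint, by contrast, would already fail on the brightened copy, which is presumably why a perceptual hash would be attractive for this job.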

The CAI is an initiative by Adobe, but has some really big names among its members (e.g. the AP, the BBC and Microsoft). Some names are missing (notably Apple and Google), which hopefully isn't a sign that we'll need to go through a decade of "standard wars" before a solution can be widely adopted, but this makes me really hopeful about our ability to deal with this problem. Even better, this solution also seems to be able to prove whether an image was manipulated, and if so, in which way. So we might be able to combat the issue of malleability as well.

What we should do is agree on standards (the CAI uses the standard formulated by the C2PA) and make sure every photographic device uses at least one of those accepted standards. It's tough to say whether this is what the CAI has in mind, but that way we can envision provenance checks integrated at the browser level, so that every image that is not a verified photographic image is automatically flagged (e.g. by a transparent colored layer marking it as potentially generated, which the user needs to actively remove to see the image as normal). That way, we would put a bit of a brake between our brain's immediate "THIS IS REAL" reaction and perceiving the image unobstructed. It's unlikely the solution we ultimately implement will be that straightforward, but at least we have an approach available. Also: please note I'm not an expert on this tech. It looks promising though, and nowadays I'm willing to take my hope from wherever I can find it.
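The browser-level idea boils down to a small display rule. The following is a hypothetical Python sketch: no browser implements this, and `Provenance`, `DisplayDecision` and `display_policy` are made-up names. It assumes some earlier step has already checked the image's credentials and reduced the result to one of three states.

```python
from dataclasses import dataclass
from enum import Enum


class Provenance(Enum):
    VERIFIED_CAPTURE = "verified capture"     # credentials check out, no edits recorded
    VERIFIED_WITH_EDITS = "verified, edited"  # credentials check out, edits recorded
    UNVERIFIED = "unverified"                 # missing or invalid credentials


@dataclass(frozen=True)
class DisplayDecision:
    show_overlay: bool  # draw the translucent warning layer over the image
    label: str          # short text shown next to the image


def display_policy(provenance: Provenance, user_removed_overlay: bool = False) -> DisplayDecision:
    """Hypothetical rule: anything not verified stays covered until the user opts in."""
    if provenance is Provenance.VERIFIED_CAPTURE:
        return DisplayDecision(show_overlay=False, label="Verified capture")
    if provenance is Provenance.VERIFIED_WITH_EDITS:
        return DisplayDecision(show_overlay=False, label="Verified capture, edited (see history)")
    # Unverified: could be a real photo from a device without credentials, or generated.
    if user_removed_overlay:
        return DisplayDecision(show_overlay=False, label="Unverified (overlay removed)")
    return DisplayDecision(show_overlay=True, label="Unverified: possibly generated")
```

The design choice that matters is the default: an unverified image starts out covered, and only a deliberate user action removes the overlay. That deliberate action is the small "brake" between seeing and believing. Note that "unverified" deliberately does not claim "generated", since plenty of genuine photos will come from devices without credentials.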

Some worries remain, though: a) How tamper-proof is that system? I honestly don't know, and I'm not going to dig into it here. I'll leave revealing vulnerabilities to the Chaos Computer Club and others like them. b) In a way, this is only as trustworthy as the people running the verification procedure. If the North Korean government runs the verification procedure and verifies images of Kim Jong Un shitting roses, we still wouldn't believe it. c) Centralization of power. Who is running the verification procedure? I don't want Adobe to be the arbiter of truth for humanity or even just for "Western civilization". I don't want any one organization to have that job, so control over this needs to be distributed and transparent. Maybe we can implement multiple systems running in parallel, tasked with checking on each other, checks-and-balances style? Either way, at least it's a political problem now, not something fundamentally unsolvable.
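One way to picture the checks-and-balances idea from point c) is a quorum: several independently operated verifiers each vote on an image, and it only counts as verified if at least `threshold` of them approve. This is a hypothetical Python sketch; nothing here corresponds to an existing CAI or C2PA service, and `Verifier`, `QuorumResult` and `quorum_check` are made-up names.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

# A verifier takes an image's bytes and says whether its provenance checks out.
Verifier = Callable[[bytes], bool]


@dataclass(frozen=True)
class QuorumResult:
    approvals: int
    total: int
    accepted: bool  # at least `threshold` independent verifiers approved
    disputed: bool  # verifiers disagree, which is itself worth surfacing


def quorum_check(image: bytes, verifiers: Sequence[Verifier], threshold: int) -> QuorumResult:
    """Accept an image only if `threshold` of the independent verifiers approve it."""
    if not 1 <= threshold <= len(verifiers):
        raise ValueError("threshold must be between 1 and the number of verifiers")
    approvals = sum(1 for verify in verifiers if verify(image))
    return QuorumResult(
        approvals=approvals,
        total=len(verifiers),
        accepted=approvals >= threshold,
        disputed=0 < approvals < len(verifiers),
    )
```

With three verifiers of which two approve, `threshold=2` accepts the image but marks it as disputed, while `threshold=3` rejects it. Surfacing the disagreement, rather than hiding it behind a single yes or no, is the point: no single operator gets to be the arbiter of truth.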

Things Need to Happen NOW!!!

The theoretical existence of a technological solution is great, but for now it's in its infancy at best. Leica has a proof of concept, awesome! The next presidential election is 2024, with the first caucuses in Iowa less than three months away (at the time of writing). I'm not sure about the Democrats, but there is a snowball's chance in hell the Trump campaign isn't going to go for the throat. There just isn't anything I can come up with I wouldn't believe them capable of. A video of Obama raping a child with Hillary Clinton and Joe Biden watching in the background, fondling each other's genitals. Too crass? I don't think so. I'm just not sure the technology is quite there yet. So here is an idea that is a bit more subtle and easier to generate: A secretly recorded video in which Joe Biden talks to rich donors, telling them how the problem with Medicare is that poor and old people live too long. Or how about a video of Biden having a senior moment and shitting his pants right in the oval office. The possibilities are as endless as you are willing to be immoral and cruel.

We are not going to have a technological solution in place for that in time. What we can do to prepare is to talk about this more. People are simply not aware of this enough. Neither images nor audio recordings are proof anymore. Probably both campaigns will take advantage of that. And then there are still the Russians. Their overarching propaganda goal is to confuse us to the point where we don't know what's real and what isn't. This tech is going to be a godsend to them. No way they aren't going to capitalize on it. Maybe they are going to create the Obama rape video, just to see the U.S. tear itself apart over the question of whether Trump is behind the production. The next election is likely going to be the dirtiest, most incendiary and most ruthlessly fought ever. Generated images (and audio) are going to play a major role. And I don't feel we are talking about it nearly enough.

News media need strategies for talking about unconfirmed images. They need new formats and new ways to mark images as unreliable and unconfirmed. And they need to let us know well in advance what to expect and how those formats work. Sentences like "Image provided by Hamas and cannot be independently verified" won't cut it! Let's say the New York Times decides to run images of unknown origin with a big red frame: they should lead with an image of Trump and Biden kissing inside that big red frame NOW. Draw attention to it. Prepare the public for the info war that is about to come. When the first really big scandal is on, it's going to be too late. Everyone is going to be too emotionally involved (be it with outrage, disgust or glee), and no one is going to be able to think clearly.

Fig. 8

Sources

Originally posted on my blog here. If you read all of this and liked it, consider heading over :)

Literature

- Ellis, Bret Easton. 1998. Glamorama. New York: Knopf.

- McLuhan, Marshall, and Quentin Fiore. 1968. War and Peace in the Global Village. New York: Bantam.

- Barthes, Roland. 1993. Camera Lucida. Translated by Richard Howard. London: Vintage Classics.

- Ritchin, Fred. 2009. After Photography. New York: W.W. Norton & Company.

- Sinderbrand, Rebecca. 2017. "How Kellyanne Conway ushered in the era of ‘alternative facts’." The Washington Post. January 22. https://www.washingtonpost.com/news/the-fix/wp/2017/01/22/how-kellyanne-conway-ushered-in-the-era-of-alternative-facts/.

- Baudrillard, Jean. 1981. Simulacra and Simulation. Paris: Editions Galilée.

- Gaiman, Neil. 2001. American Gods. New York: William Morrow; London: Headline.

- McLuhan, Marshall. 1964. Understanding Media: The Extensions of Man. Toronto: McGraw-Hill.

- "Leica Launches World’s First Camera with Content Credentials." 2023. Content Authenticity Initiative Blog. Accessed November 15, 2023. https://contentauthenticity.org/blog/leica-launches-worlds-first-camera-with-content-credentials.

Images

- Cover Image: "Inside", created with Midjourney by me, 2023.

- Figure 1: "The Terror of War" by Nick Ut, 1972. Available at: Wikimedia Commons (Accessed November 15, 2023). Image cropped by me.

- Figure 2: "Epistemic Everyday Oversimplification" by me, 2023.

- Figure 3: "Epistemic Everyday Oversimplification for Photographic Images" by me, 2023.

- Figure 4: Montage by me. Original image: "Nikolai Yezhov with Stalin and Molotov along the Volga–Don Canal" from Wikimedia Commons. Available at: Wikipedia (Images accessed and modified in 2023).

- Figure 5: Montage by me. Left side taken from somewhere on the internet (I forgot where), right side created with Midjourney by me, 2023.

- Figure 6: "Epistemics of Generated Images" by me, 2023.

- Figure 7: Good try, but I'm not telling you here.

- Figure 8: "Bipartisanship", created with Midjourney by me, 2023.