This is an overview of blind spots, or merely blurred spots, that I have noticed in EA while reading as much as I could over the past two months, seen from the view of a social scientist. The first chapter is an exercise in expanding EA and is more conceptual/philosophical. The second chapter is a practical application of EA principles to the ‘human element’. There is also a small, easy-to-read third part (think of it as a three-course meal) with thoughts that I was not able to consolidate and would like to explore another time.

Chapter one - Least harm

The EA philosophy is often sloganized as ‘doing the most good’: science-based, reason-informed, optimized good. If this is truly the essence, and projects and papers do point to that, then half of EA’s utilitarian roots might be unexplored. The whole slogan goes: ‘doing the most good and the least harm’. Would it make sense to add this second half to the EA philosophy? Are the two even that different?

Let’s first explore the difference between the two by viewing their practical execution. Doing only the least amount of harm seems quite straightforward: the harm-reductionist would probably come to the conclusion that not living makes for the least amount of harm, or - considering the emotional harm to those around them - doing as little as possible. The good-optimizer has a much more complicated task, as illustrated by the many texts and frameworks for EA. The central difference is between doing something extra and doing less: an obligatorily active course versus a mostly passive one.

The crux, I think, lies in the way to get to the good. There are parts of EA that will please the harm-reductionist. Most priority areas can actually be summarized as ‘doing less harm through doing more good’.

A harm-reductionist way to reduce the harm of factory farming would be to consume fewer or no animal products and to advocate, educate, or make policies for that. An example of a good-optimizing way is, as one article suggests, creating and spreading stun technology for killing fish. This might make quantifiable sense: you might be able to reach more people and in total do more good by reducing the harm done to each fish by a certain amount. The harm-reductionist could not advocate for this less-harm perspective, since there is a way to do even less harm, which is not eating fish. The technology might even consolidate the practice of fish farming or lead to it being condoned, since it would become a little less ethically objectionable. Not doing something can have much more impact than doing something, and I don’t see this perspective reflected enough.

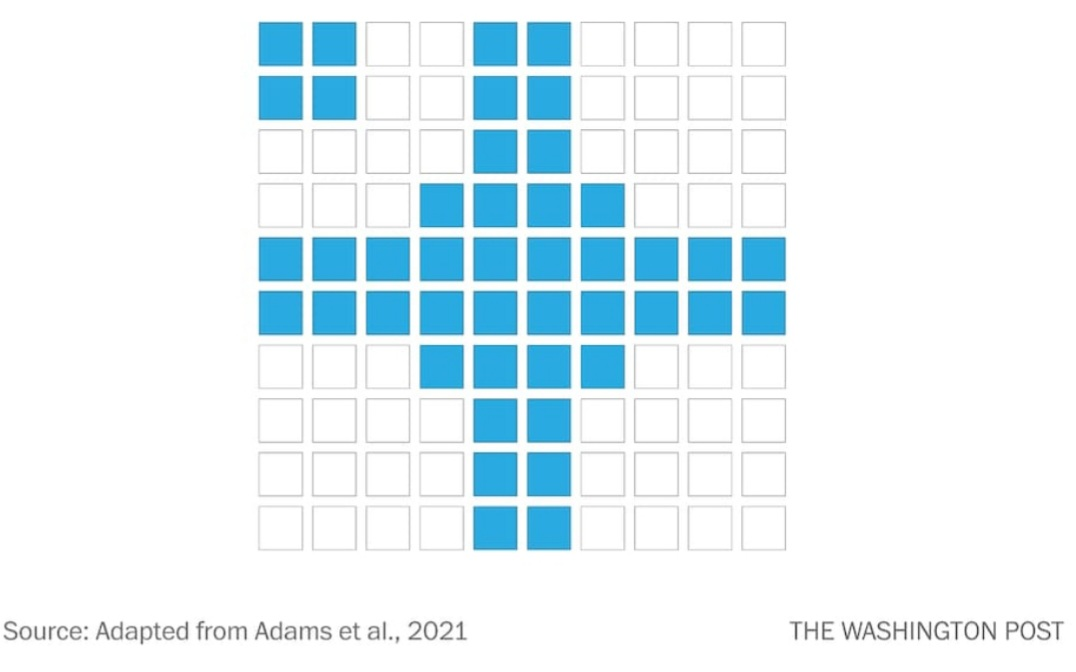

There are parallels in this distinction and recent research into people’s natural way of dealing with problems. In short: the natural tendency is to add to the complexity of the situation, and not to take something away that would have the same or a better outcome or that would make for a more efficient solution. Take a look at the puzzle below.

Participants in the study got the instruction: “Make this image symmetrical in the most efficient way possible, changing one square’s color at a time.” Half of the people failed the task by coloring all four corners blue instead of coloring the one corner white.

This tendency translates to bigger societal problems, where solutions mostly add more laws, policies, subsidies, or technologies. It is a trap I can see EA’ers fall into as well. The tendency might make doing good a more natural course of action for us than doing nothing. That bias could be remedied by introducing or emphasizing harm-reductionist reflections more. The good-optimizing view might also come more naturally to us than the harm-reduction view, since it is more in line with how we value the idea of progress, in contrast with some traditional non-Western and indigenous cultures and philosophies.

So, what kind of harm-reductionist reflections are we talking about here? One simple example is the ‘right person in the right place’ type of EA: working at a big corporation in order to possibly change its course for the better. Until the course has changed sufficiently, working for that corporation would involve doing harm, maybe more harm than you would have done by not working there. We would need ways to weigh the harm against the good, of course considering the possibility that the company wouldn’t change course without this person. Also, since most people will not have a course-changing effect on their organization, it might be better to stay away from the harm done by working there.

Where the two clash and resolution is not as straightforward is climate change. The clash is noticeable on a personal level (for example, flying to a conference to spread an important message about reducing climate change) and on a policy level (for example, reducing consumption versus maximizing consumption for progress and a better chance of new technologies). The two also clash in the field of bioresearch: doing the most good in the hope of finding disease treatments, but harming many lab animals of many other species in doing so.

In conclusion, I find it important to note that doing the least harm is not the same as doing no harm, just as doing the most good is not the same as doing good perfectly.

Chapter two - Human element

This second chapter highlights certain ‘human’ aspects that would fit EA, in an attempt to look at EA from a social-science perspective.

People in general want to do good and to be perceived as good. Often it is irrational human aspects that lead to unwanted responses, not the person being a ‘bad apple’.

We could look at the perverse incentives that Gladwell made famous, and at the negation of promising technologies by the Jevons paradox, as examples. (In short, perverse incentives are the structures in organizations or society that lead people to act in an unethical way. An example is a manager who berates all his employees for making mistakes, so that the employees end up not telling the manager when a product is faulty to the point of danger. The Jevons paradox is best explained by looking at agricultural practices: the more intensively technologies allow a piece of land to be used, the less land we will need to feed everyone and the more land will be available for conservation. In theory. In reality, the technologies make a piece of land more valuable to exploit - more possible earnings - so more people end up buying land to exploit, and the exact opposite of the desired outcome happens.)

In the end it’s people who have to do the good, so how can we enable people to do more of that? Looking back at the previous chapter raises the questions: What do people need to become course-changers? What do they need to persist in their EA mission after the first wave of motivation fades? Do we need people who are resilient to perverse incentives and group dynamics? In any case, it would help to have more research into what those course-changers have in common.

Another social-science perspective concerns an area that EA has marked as a potential key area: mental health. Working on mental health has been considered as a way to do more good (to make people happier), but I propose mental health as a key area for a different reason: it is a potential snowball area.

This view sees mental health problems not only as a consequence but also as a root of social issues. A simple example: a significant share of the leaders of large companies are psychopaths - characterized by a lack of empathy, predatoriness, egocentrism, and exploitation. These characteristics affect the course of business: studies suggest that a company with psychopathic leadership is less likely to engage in corporate social responsibility (CSR). It might also be worth looking into how mental health correlates with social solidarity in general. Research on this is very fresh, but one small study indicates a relationship between mental health problems and a lack of social solidarity, possibly impeding a person’s capacity for altruism to begin with. Greed (remember the Jevons paradox) is anxiety-related and rooted in self-doubt. Striving for material goals can act as a substitute for needs that went unmet in the past.

These examples indicate that mental health problems can be a hurdle to achieving EA goals.

Other things

- What if iconic good-doers who had an immense impact, like Petrov and Mandela, had followed EA philosophy?

- Would we, in terms of EA, rather have a transhumanist posthuman that is shaped by what is good for society, or a Nietzschean übermensch that is separated from society’s influence and therefore maybe more likely to be a course-changer?

- Biodiversity loss is just as pressing a problem as climate change (maybe even more so) and is strongly interlinked with it. I feel quite certain this issue has been looked at already though.

Thank you for reading. A very valuable part of the EA community is its openness to criticism and new ideas. I went through so many viewpoints and nuances for this piece, and yet EA manages to unite this discussion-ready community.