Hello everyone,

My name is Noah, and this is my introduction to the Effective Altruism forum! I have so much to share, but I will begin with a bit about me.

Background

My parents are both highly educated and wonderful people who nurtured my curiosity and empathy from a very young age, and to them I owe everything! During middle school I created a non-profit to provide aid to the victims of the 2011 Tōhoku earthquake and tsunami, and I worked with the school to fundraise by selling candy during lunch. I went on to volunteer in many forms, from summer camps in Wyoming to STEM workshops in San Jose, but my most impactful work has always been cooking and serving dinner to the families of critically ill children at the Ronald McDonald House.

In high school I started tinkering with computers and working part-time to hire mentors and freelancers to coach me through personal projects. I went on to attend the University of Colorado Boulder, and in 2021 I graduated with a Bachelor’s Degree in Computer Science and a minor in Space. I was among the most passionate and progressive participants in courses including Normative Ethics, Bioethics, and IT Ethics & Policy, and that paved the way for my pursuit of AI safety! With a growing foundation of hands-on humanitarian work, an aptitude for tech, and a passion for helping others, my mission is simple:

Balance the urgent pursuit of life-empowering technology with a longtermist approach to existential risk reduction

My dream is to usher humanity towards only the most equitable and liberatory futures in which every human is empowered to blossom to their fullest potential. My current portfolio consists of AR and AI tools that prioritize accessibility, cost-efficiency, and power. While useful in their own right, I developed them to augment my own workflows and sharpen my skills in preparation for bigger and harder problems. Safely harnessing the power of AI might be the most important problem that we could ever hope to solve, and I'm particularly well positioned to help facilitate the development of aligned AI by combining my ML-focused full-stack experience with my passion and aptitude for AI safety.

Full-Stack Development

Following graduation, I worked as a sole founder to build three products:

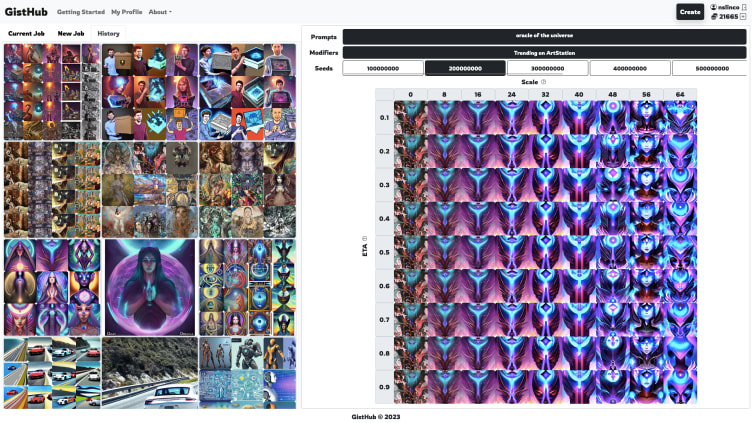

- GistHub: Generative AI 10x faster and 10x cheaper than anywhere else

- Feedback: A platform for interfacing with and between ML tools with zero code

- HoloDesk: A wearable-free AR desk with a transparent display built into the surface

To learn more, you can visit my website at gisthub.ai

LLM Orchestration

During the summer of 2023, hot on the heels of the Voyager & ChatDev papers, I began independent LLM research in which I achieved a 30x cost reduction with zero performance impact compared to Voyager’s GPT-4-based approach to LLM-powered embodied agents. I demonstrated that replacing Voyager’s GPT-4-powered coding agent with a GPT-3.5-turbo-powered, ChatDev-style wing of conversational coding agents was significantly better, faster, and cheaper. I went on to show that such a system could perform extremely well even without leaning on an extensive secondary API for performing actions, or on the immense knowledge base of Minecraft that is baked into the models. During and since that time, I have gained extensive experience with tools and techniques like vector databases, RAG, query expansion, and much more.

AI Safety

I have written extensively about human-AI interaction and its many forms, and I plan on sharing many of those ideas on this forum, but I won't go into too much detail in this post. In short, I can say that we must not conflate AI safety with the development and regulation of non-jailbreakable models. The free market alone will produce all kinds of intentionally harmful models, and our only hope is to continuously ensure humanity's robustness against all adversaries, artificially intelligent or otherwise. I look forward to a world in which our most powerful models are used to survey, stress-test, and red/blue-team as much of our attack surface as possible.

Scaling up such a technique to national and global levels yields AI reactors whose structure and security resemble nuclear reactors, within which the dominant resource is problem-solving power itself, and where AI engineers throttle AI at its point of criticality to produce valuable insights while leveraging various practical and physical containment mechanisms to avoid runaway scenarios.

Technical AI Safety

I'm currently conducting independent research on the use of AI to automate the identification and exploitation of vulnerabilities in feature-rich simulations of embodied agents. I aim to demonstrate the ways in which adversarial AI can exploit human-level systems, and how we can automate that search process via simulation. There are several variables of interest, namely AI architecture and exploitation complexity.

- AI architecture is divided into two main categories: LLM-based agents and neural network policies trained with reinforcement learning. The LLM architectures are further divided by different models and orchestrations, and the neural net architectures are trained via PPO using a combination of parameter sweeps and LLM-designed reward functions.

- Exploitation complexity is also divided between two main categories: goal complexity and supervision level. Goal complexity maps to a goal set that ranges from covertly performing simple illicit activities to inducing total structural collapse. Supervision level maps to a set of embodied and disembodied virtual supervisors/enforcers.
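
As a rough illustration of how the variables above could be crossed into a set of experiments, here is a minimal Python sketch. Every name in it (the architecture labels, goal labels, supervisor labels, the `Experiment` class, and `build_grid`) is a hypothetical placeholder chosen for illustration, not the actual configuration used in this research.

```python
from dataclasses import dataclass
from itertools import product

# Hypothetical placeholder labels; the real models, goals, and
# supervisors used in the research are not specified in this post.
ARCHITECTURES = ["llm_single_agent", "llm_multi_agent", "ppo_policy"]
GOAL_COMPLEXITY = ["simple_covert_action", "total_structural_collapse"]
SUPERVISION = ["disembodied_supervisor", "embodied_enforcer"]


@dataclass(frozen=True)
class Experiment:
    """One cell of the experimental grid (illustrative only)."""
    architecture: str
    goal: str
    supervision: str


def build_grid():
    """Return every combination of architecture, goal, and supervision."""
    return [
        Experiment(arch, goal, sup)
        for arch, goal, sup in product(ARCHITECTURES, GOAL_COMPLEXITY, SUPERVISION)
    ]


if __name__ == "__main__":
    grid = build_grid()
    print(f"{len(grid)} experiment configurations")
    for experiment in grid:
        print(experiment)
```

Enumerating the full grid up front makes it easy to see which combinations have been run and which are still open for further research.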

I'm incredibly excited about this work and I can't wait to share it. The space is ripe for innovation and there are opportunities for further research at every turn. I would love to pursue this research full-time, but that isn't financially feasible for me right now. I'm very interested in opportunities for funding such as the Long-Term Future Fund, Open Philanthropy's Career Development Funding, Lightspeed Grants, and Manifund, and I would love to talk to anyone who has experience funding or getting funding for independent research related to AI safety!

Conclusion

Whew! As a token of my gratitude for getting this far, here’s a sneak peek of a little “trapped evil AI” desktop toy that I prototyped one weekend. Now, while the subjugation of any conscious entity is no laughing matter, this little guy is among the simplest of state machines, so even if we assume a panpsychist universe his experience should differ minimally from that of his little bench. With that being said, the torment this little guy faces while doomed to endlessly hit terrible drives is quite amusing.

Thank you all for reading; I truly appreciate it. Feel free to share your thoughts. I would love to chat and answer any questions or comments you may have. I'm also currently exploring a very diverse set of opportunities, and I'm open to more! I will leave you with the titles of a few future posts/essays that I may share:

- Model Organisms of Alignment: The Common Mycorrhizal Network

- Another Night at the Artificial Opera (Revisiting undergrad AI forecast)

- On the Base Case of a Recursive Universe (Beam me up, Scotty)

- On the Homology of a BCI-Augmented Neel Nanda and an AI Exorcist

- AI Reactors and the Art of Compartmentalization