Considering Counterarguments on the Singularity

Hey everyone! This is my first post on the EA forum :) I cross-posted this from my blog Love Yourself Unconditionally where I am currently writing about AI Safety and Buddhism. This is the second post in a series. If you're curious to see the first post you can find that here. Although this is a series, each of these articles can also be read on their own. Thanks for checking out my post and any feedback you all have would be greatly appreciated! :)

Introduction

As mentioned in my prior post, I thought it would be helpful to consider counterarguments. Specifically, I wanted to see what arguments existed for the following claim:

AI will never surpass human intelligence.

This is quite a strong claim to make, mainly because of the word “never.” Regardless, I went to Google to see what I could find. I initially had some trouble finding academic counterarguments, but then I came across this book: Why Machines Will Never Rule the World: Artificial Intelligence Without Fear by Jobst Landgrebe (an AI entrepreneur[1]) and Barry Smith (a University at Buffalo philosophy professor[2]).

The book is around 50 pages long, so it is not the longest read. Regardless, I wanted an overview, so I found this hour-long interview conducted by Richard Hanania from the Center for the Study of Partisanship and Ideology.

Here are some of their key points along with my responses:

Human Intelligence is Too Complex

Their Claim

Their primary claim is that human intelligence is a “complex system” and as we currently lack the mathematics to model complex systems in general, we therefore cannot model human intelligence.

Background

In a nutshell, a complex system can be pictured as a large network composed of nodes and edges. The nodes represent components, while the edges represent relationships between those components. These components interact in a vast number of varied ways, which makes it hard to predict the behavior of the larger system. Some examples include cities, the climate, and the universe itself. Complex systems tend to be more stochastic.

On the other hand, simple systems tend to have smaller networks with a few clearly defined relationships between their components. Some examples include a pendulum, a thermostat, or even a light switch. Simple systems tend to be deterministic and fairly easy to predict.

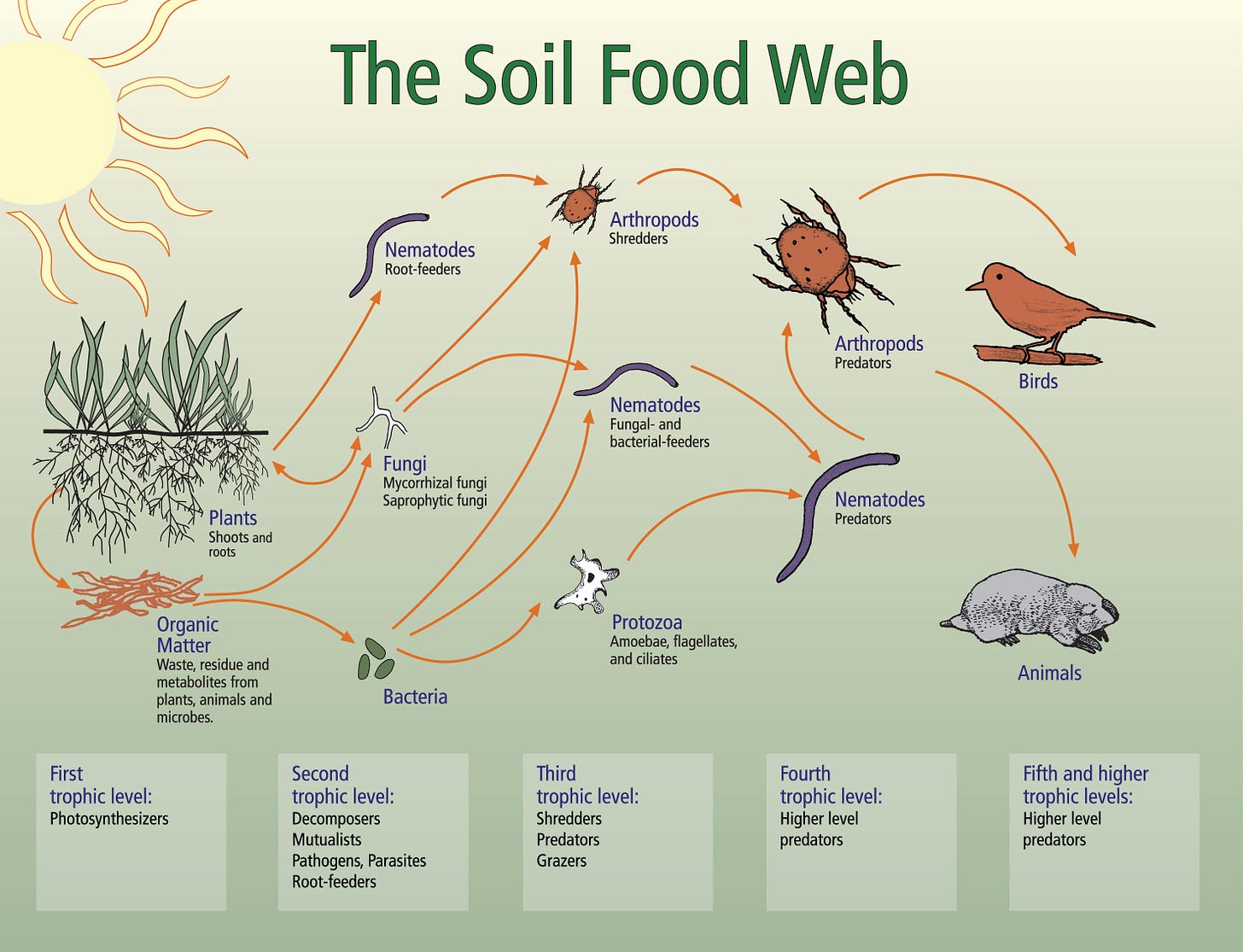

To further elaborate the point, one can consider an ecosystem as a complex system. Just think about how many different organisms exist in just one square kilometer of a rainforest biome. Each organism can be considered a node. Now each organism has some way they relate to one or more other organisms in this biome. Each of these relationships can be an edge. Here’s an example of a soil food web that illustrates this point:

As you can see, it can be difficult to accurately model complex systems. There are many reasons for this, but one key reason is that complex systems exhibit “emergence.”

Emergence refers to how putting many disparate components together can create phenomena that are more than the sum of the individual components’ behaviors. Put more succinctly, in a phrase commonly attributed to Aristotle, “the whole is greater than the sum of its parts.”

For example, in the case of a city, if we were to put together the components of drivers, speed limits, road designs, and weather conditions, we get the emergent phenomenon of traffic jams.
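To make the traffic example a bit more concrete, here is a minimal sketch in Python. It is not taken from the book or the interview. It assumes a toy one-lane circular road where each car follows a single rule: move forward one cell if the cell ahead is empty (this is the cellular automaton usually called Rule 184). The names `step`, `moving_fraction`, `light_traffic`, and `heavy_traffic` are made up for illustration. No car is told to "form a jam," yet once the road is more than half full, a jam shows up anyway.

```python
def step(road):
    """Advance a circular one-lane road by one time step.

    road is a list of 0s (empty cell) and 1s (car). Each car moves one
    cell forward only if the cell ahead is currently empty.
    """
    n = len(road)
    new_road = [0] * n
    moved = 0
    for i, cell in enumerate(road):
        if not cell:
            continue
        ahead = (i + 1) % n  # the road is a ring, so the last cell wraps around
        if road[ahead] == 0:
            new_road[ahead] = 1
            moved += 1
        else:
            new_road[i] = 1  # blocked: the car waits in place
    return new_road, moved


def moving_fraction(road, steps=20):
    """Fraction of cars that moved during the final simulated step."""
    moved = 0
    for _ in range(steps):
        road, moved = step(road)
    return moved / sum(road)


# Hypothetical starting layouts: both begin as one clump of cars.
light_traffic = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]  # 3 cars on 10 cells
heavy_traffic = [1, 1, 1, 1, 1, 1, 1, 0, 0, 0]  # 7 cars on 10 cells

print(moving_fraction(light_traffic))  # every car ends up moving freely
print(moving_fraction(heavy_traffic))  # fewer than half of the cars can move
```

With light traffic the initial clump dissolves and every car keeps moving. With heavy traffic a stop-and-go jam persists, even though each individual car follows the same simple rule.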

My Thoughts

For your convenience, here is their claim again:

Human intelligence is a complex system and as we currently lack the mathematics to model complex systems in general, we therefore cannot model human intelligence.

I disagree with their claim for two key reasons:

- It is possible to create new things without fundamentally understanding how they work internally.

- Large language models (LLMs) demonstrate emergence.

Regarding my first point, I am referring to how we can build bigger and more powerful models without fundamentally understanding how they work. The field of mechanistic interpretability is devoted to decoding how these “black box” models work based on how their internal neural networks activate. This would be similar to (though more precise than) using a brain scan to read your mind. Furthermore, we humans have created many other complex systems without being able to accurately model them, like the internet, the global economy, etc.

Regarding my second point, several researchers have described emergent properties arising as LLMs scale up. Specifically, they have found these models develop capabilities that they were not explicitly trained for. One example of this is the strategy known as “chain-of-thought” prompting. Normally, when you ask a model a question, the model just answers immediately. With chain-of-thought prompting, the model instead essentially “thinks out loud” and only then gives its answer. Here’s an example:

As such, these AI models may be on track to becoming complex systems, if they are not already.

Artificial Intelligence is Too Rote

Their Claim

Their other claim is that AI is too rote. Specifically, this can be broken down into two sub-claims:

- AI is purely reproductive. It can only create what it has seen before and so it cannot create new knowledge.

- AI cannot truly understand things the way humans can.

In saying that AI is purely reproductive, they explain that all LLMs do is guess the next word (somewhat like a more advanced version of autocomplete on our phones). To be able to do this, these LLMs are first trained on hundreds of gigabytes of data (such as The Pile). Therefore, they argue, LLMs are incapable of creating new knowledge like humans can.
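To illustrate what “guessing the next word” means at its very simplest, here is a toy sketch in Python. It is only a stand-in: real LLMs use large neural networks over tokens, not word counts, and the names `train_bigrams`, `guess_next`, and the tiny `corpus` are invented for this example. The sketch counts which word follows which in some training text and then predicts the most frequent follower.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def guess_next(follows, word):
    """Return the most frequently seen follower of word, or None if unseen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical training text, standing in for hundreds of gigabytes of data.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigrams(corpus)

print(guess_next(model, "the"))  # "cat" follows "the" more often than "mat"
print(guess_next(model, "dog"))  # never seen in training, so no guess
```

This toy model really can only reproduce what it has seen, which is the intuition behind their sub-claim. Whether that intuition carries over to models with billions of parameters is exactly what is in dispute.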

They provide the following example: One may be able to ask ChatGPT how lightning works and it can give a pretty good answer. However, this answer will likely be simply the textbook definition. What ChatGPT would not be able to do is explain what our limits to understanding lightning are and what future research endeavors would help in improving our understanding.

With regard to the second sub-claim that AI lacks true understanding, they say that as AI is just guessing the next word based on some probability distribution, this definitely cannot be considered understanding in the way humans understand things.

Background

As you may have guessed, I decided to ask GPT-4 the above questions about lightning. Here’s what it said.

Anyways, regardless of whether we think ChatGPT gave a good answer, it may be helpful to take a step back and think more carefully about definitions for two particular points:

- Creating vs. reproducing knowledge

- Understanding

Regarding the first point, what exactly do we mean by creation vs. reproduction? We can think of creation as the discovery of some important model of the universe, such as gravity. Another example could be the development of some new technology such as the printing press.

On the other hand, reproduction could take the form of a teacher explaining to some students how gravity works or an entrepreneur deciding to create a book-making business.

As for the second point, this is far trickier. What exactly does it mean to “understand” something? At a high level, Wikipedia defines understanding as the state where an agent has some internal model to predict the behavior of some object or process more accurately.

For example, when it comes to the concept of instruments, if one has a correct internal model of what an instrument is, then one should be able to assess, with a high degree of accuracy, whether or not any given object is an instrument. If one can do so, then we can say that one understands the concept of instruments.

A more thought-provoking example with a higher bar for understanding would be the thought experiment known as “The Chinese Room” which was created by philosopher John Searle. I encourage you to watch the linked video (the example starts at 6:24 and ends at 7:35) but in a nutshell, the thought experiment goes something like this:

Imagine there is a room and you’re standing outside the door. You know Chinese and you’re led to believe that there is another Chinese speaker inside this room. You’d like to chat with the other person but you can only pass them notes in Chinese under the door so you do so. A few minutes after you pass your note, you get a note back in fluent Chinese. Based on all you’ve observed, it seems there is indeed a fluent Chinese speaker in the other room.

However, in reality, there is a monolingual English speaker in the other room who happens to have access to a very large book that contains the perfect Chinese response to any given Chinese sentence. All this person does is:

- Take your Chinese note and flip to the page where your sentence matches the one in the book.

- See the corresponding perfect response and then copy that to a new note.

- Pass that note back to you under the door.

Nowhere in this process does this English speaker actually understand what is going on. Based on this thought experiment, Searle asserts that while an AI may be able to carry on a conversation just like a human can, that does not mean that it understands what it is saying. As such, he claims that AI will never be able to understand in the way that humans can.
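The procedure the person in the room follows amounts to a table lookup, which can be sketched in a few lines of Python. This is only an illustration: the `RULE_BOOK` entries and the `room_operator` function are hypothetical, and the book in the thought experiment would contain a response for every possible sentence rather than two.

```python
# A tiny, hypothetical stand-in for the "very large book" in the room.
RULE_BOOK = {
    "你好吗？": "我很好，谢谢。",  # "How are you?" -> "I'm fine, thanks."
    "你叫什么名字？": "我叫小明。",  # "What's your name?" -> "My name is Xiaoming."
}


def room_operator(note):
    """Find the note in the book and copy out the matching response.

    Nothing here inspects what the symbols mean; it only matches them.
    Returns None when the note is not in this (incomplete) book.
    """
    return RULE_BOOK.get(note)


print(room_operator("你好吗？"))
```

From outside the door, the reply looks fluent, yet the function would work just as well if both columns of the book were meaningless squiggles.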

My Thoughts

For your convenience, here is their claim again:

Their other claim is that AI is too rote. Specifically, this can be broken down into two sub-claims:

- AI is purely reproductive. It can only create what it has seen before and so it cannot create new knowledge.

- AI cannot truly understand things the way humans can.

With the definitions of creation vs. reproduction in mind, I think it is safe to say that the vast majority of humans spend the majority of their time reproducing knowledge as opposed to creating new knowledge. To be clear, I say this in a “matter-of-fact” or impartial manner. I don’t mean that most humans are stupid or something. To be frank, I would be the first to admit that the vast majority of my life has been spent in the reproduction part (though I do hope to spend more time creating in the future).

As such, it seems a bit odd to me to say that true human intelligence is exemplified by our ability to create as opposed to reproduce knowledge. Even if this were true, one could still argue that we could someday make an AI that is so good at reproducing knowledge that it is a sort of general intelligence on par with human intelligence.

I would also like to ask the following question: How exactly do we humans create new knowledge in the first place? In my estimation, this is through the process of direct experience with reality and noticing important patterns, primarily through experimentation. For example, to add to the discussion of lightning from earlier, the way that scientists may create new knowledge is by:

- reading the current literature

- creating some hypothesis

- testing this hypothesis via some experiment with measuring tools

- reviewing the results

- accepting or rejecting the hypothesis

This is essentially the scientific method. Now, why aren’t our current AI models able to do this? This is because they lack sensors and mechanical arms to properly experience and interact with the real world. Once we create more advanced robots that these AI can access and control, then these robots should be able to conduct such experiments just as we can. I don’t want to claim that creating AI scientists would be easy. However, it still seems possible. As such, I do believe that it is only a matter of time until AI begins creating new knowledge.

Concerning the second sub-claim of understanding, I would like to raise two points:

- Searle’s lack of an empirical test for understanding

- How can we be sure that we ourselves understand things?

With regard to the first point, let’s say Searle is correct and that AI can never truly understand things. This raises the question: under what conditions could this claim be proven wrong? As far as I am aware, Searle has not proposed an alternative to the Turing test that could allow us to empirically assess his claim. Admittedly, this may be a feature, not a bug, of his thought experiment. But even so, believing this unfalsifiable claim feels intellectually unsatisfying.

As for the second point, I would like to pose a deeper question. How can we be so sure that we ourselves are not currently acting out some version of the Chinese Room experiment? How can we be so sure that we, much like ChatGPT, are not simply just guessing the next word?

For example, as a native speaker of the Tamil language, I can notice the following sequence of thoughts in my mind.

- “Think of the word for food in Tamil.”

- “சபாது.” (This is transliterated in English as “sapadu.”)

Conventional wisdom would suggest that “I” was the one that did this translation in my mind. However, as a Buddhist, I can also see this more impartially and say that when I thought of the words in the first bullet point, the word in the second bullet point just jumped into my mind. It’s not clear to me where it came from. Maybe part of my brain just checked some English-Tamil translation book while simultaneously checking an English dictionary? Maybe this is nonsensical, but it is hopefully at least some food for thought. Ba dum tss 😎.

Admittedly, I’m referencing this concept known as “no-self” from Buddhism which I am just going to gloss over now as this is a massive topic deserving its own blog post.

In essence, my main point is this: it seems plausible to me that we may be acting out some version of the Chinese room in our own lives while being thoroughly convinced we are not.

Conclusion

This was a rather difficult exercise for me. While I have taken time in the past to learn counterarguments for other topics, this is the first time I have taken an extended period to think through a counterargument and write at length about it. Now that I have gone through this exercise, I feel more sure about my belief that AI will indeed surpass human intelligence someday.

Also, thank you for reading! If you have any questions, comments, or feedback, I would love to hear it. I am hoping to use blogging as a means of practicing my writing so any advice you may have on how I can write more clearly would be greatly appreciated 😊.

My next post will be focused on exploring how exactly an artificial general intelligence (an AI with intelligence on par with a human’s), could become misaligned, so please stay tuned 😃!

[1] From Routledge: “Jobst Landgrebe is a scientist and entrepreneur with a background in philosophy, mathematics, neuroscience, and bioinformatics. Landgrebe is also the founder of Cognotekt, a German AI company which has since 2013 provided working systems used by companies in areas such as insurance claims management, real estate management, and medical billing. After more than 10 years in the AI industry, he has developed an exceptional understanding of the limits and potential of AI in the future.”

[2] Also from Routledge: “Barry Smith is one of the most widely cited contemporary philosophers. He has made influential contributions to the foundations of ontology and data science, especially in the biomedical domain. Most recently, his work has led to the creation of an international standard in the ontology field (ISO/IEC 21838), which is the first example of a piece of philosophy that has been subjected to the ISO standardization process.”

My understanding of their claim is more something like:

There will never be an AI system that is generally intelligent like a human is. Put differently, we can never have synoptic models of the behaviour of human beings, where a synoptic model is a model that can be used to engineer the system or to emulate its behaviour.

This is because, in order for deep neural networks to work, they need to be trained on data with the same variance as the data to which the trained algorithm will be applied, i.e. training data which is representative. General human intelligence is a complex system which doesn't have such a distribution. There are mathematical limits on the ability to predict the behaviours of a system like this that does not have a distribution. Therefore, whilst we can have gradually more satisfactory simple models of human behaviour (like ChatGPT is for written language), they will never reach the same level as humans.

To put it simply: We can't create AGI by training algorithms on datasets, because human intelligence does not have a representative data set.

As such I think your response "It is possible to create new things without fundamentally understanding how they work internally" misses the mark. The claim is not that "we can't understand how to model complex systems therefore they can't be adequately modelled". It's more something like "there are fundamental limits to the possibility of adequately emulating complex systems (including humans), regardless of whether we understand them more or not".

My personal take is that I'm unsure how important it is to be able to accurately model human intelligence. Perhaps modelling some approximation of human intelligence (in the way that ChatGPT is approximately good enough as a written chat bot) is sufficient to stimulate the creation of something more approximately intelligent than that, and so on. In the same way, ChatGPT can answer PhD-level questions that the majority of humans cannot.

Note: My understanding of Barry's argument is limited to this lecture (https://www.youtube.com/watch?v=GV1Ma2ehpxo) and this article (http://www.hunfi.hu/nyiri/AI/BS_paper.pdf).

Thanks, Tristan, for your comment, and apologies for my late reply.

I appreciated your well thought out points, in particular that: "there are fundamental limits to the possibility of adequately emulating complex systems (including humans), regardless of whether we understand them more or not."

This may indeed be the case. To be frank, I guess the best I can say is that I honestly don't know haha.

As for your other point that, "I'm unsure how important it is to be able to accurately model human intelligence. Perhaps modelling some approximation of human intelligence (in the way that ChatGPT is approximately good enough as a written chat bot) is sufficient."

I think I'm in agreement with you here. It doesn't really matter what flavor the intelligence happens to take. I think what really matters is whether or not it is powerful enough to outperform human intelligence. And ChatGPT and other AI models have definitely shown they can in a variety of fields.

Admittedly, since writing this, I've found my interests move away from AI Safety and to other areas, such as Buddhism and mindfulness. Maybe I'll find myself back in AI safety in the future, who knows haha.

Anyways, thanks again for your thoughtful comment! :)