EA: meet All Tech is Human! ATiH: I know you've heard of EA already, but I hope you'll benefit from getting to know EA better.

I'd like to introduce you two because you have a lot in common: you are both communities passionate about responsible use of AI, and you are both increasingly influential in making that happen.

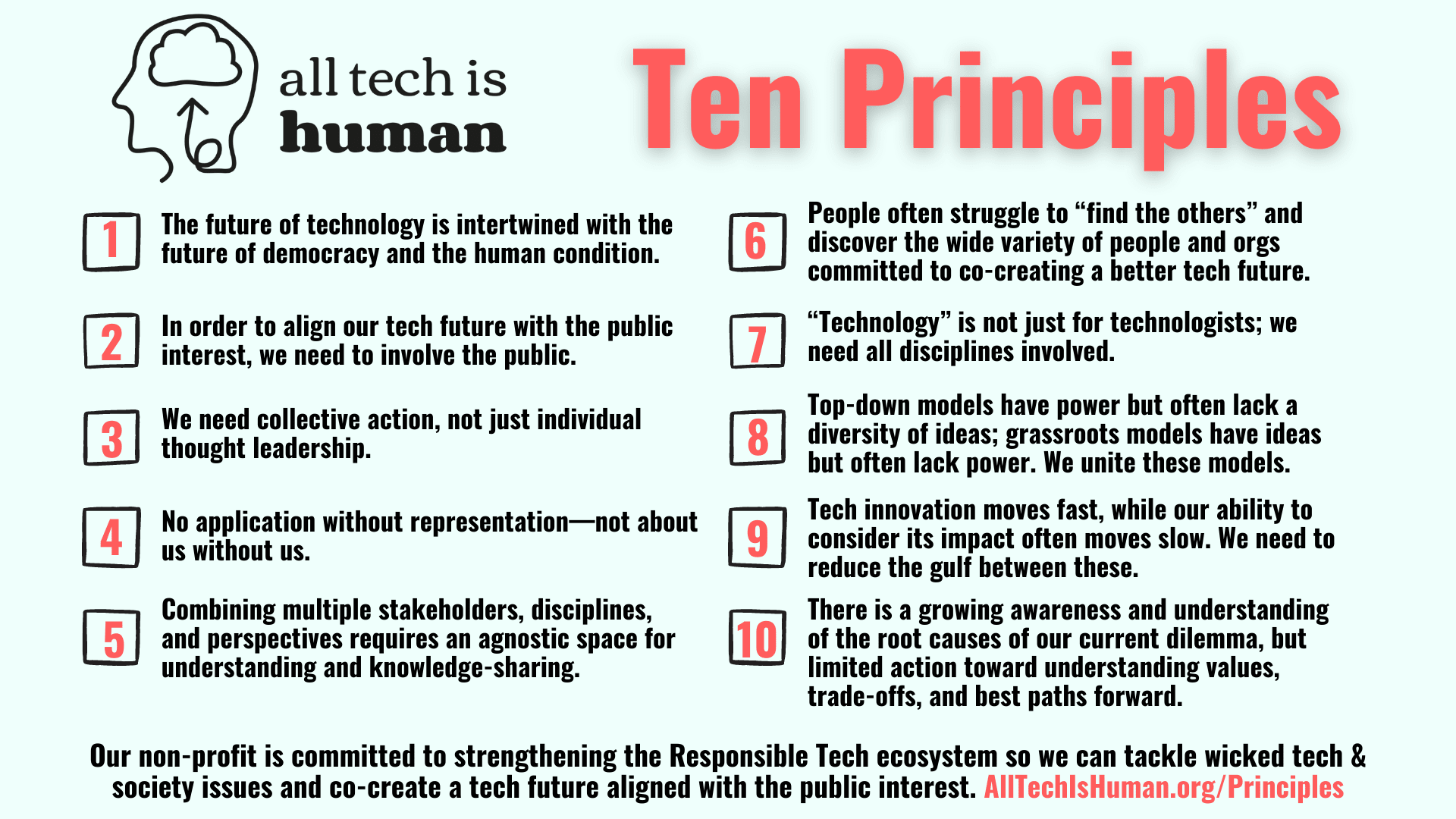

Here's ATiH's self-introduction: this organization "is committed to strengthening the Responsible Tech ecosystem so we can tackle wicked tech & society issues and co-create a tech future aligned with the public interest." Good stuff.

You have some overlap in your interest in AI. Responsible AI is one of ATiH's main areas of interest under the umbrella of Responsible Tech. You both care deeply about shaping AI development and deployment for the good of society. I see at least one person profiled in the ATiH Responsible Tech Guide whom I know to be EA-adjacent or closer.

ATiH has some impressive momentum. Over 7k members across 86 countries are taking part in conversations about responsible tech in the ATiH Slack community. The ATiH "talent pool" has over 700 people. The community has had great success in attracting a diverse group of people; it has reached 1k early-career people through its Responsible Tech Mentorship Program. The founder and president, David Polgar, has met with Justin Trudeau. ATiH appears to focus on indirect impact (bringing people together) rather than direct impact (e.g. lobbying). It is funded by the Ford Foundation, the Patrick J. McGovern Foundation, Schmidt Futures, Susan Crown Exchange, Unfinished, and Pivotal Ventures (the investment arm of Melinda French Gates), and it is working on a "revenue stream through employment matchmaking."

EA, you should be aware that folks involved in ATiH may not be your biggest fans. However, they have likely heard a distorted account of what you're really like. Or they may not have heard of you at all; you can't assume either way. Whatever the case, I recommend proceeding cautiously, diplomatically, and respectfully.

I look forward to you two working toward responsible AI together! You are both large communities that encompass people with differing views who engage in productive dialogue with one another. You can expand these dialogues to reach across your communities. ATiH, you say it well in your one-pager: "we have cultivated a welcoming agnostic space that draws a mix of people and organizations that may disagree (or have distrust) at the surface, but underneath are bonded by a shared mission to understand values, trade-offs, and best paths forward." Read the work of each other's smart and caring members. Put in the work to understand each other's stances. Consider attending each other's events with an attitude of open, humble listening. Make personal connections.

There's a lot to do to steer AI toward benefiting society. The more allies, the better.

Appendix: my anecdotal observations

Here's my (limited!) personal experience with ATiH as a longtime EA.

I heard of ATiH less than a year ago from a friend of a (non-EA, non-techy) friend. I was amazed that I hadn't heard of it sooner. Of the ~10 AI safety people I've asked about ATiH since then, none had heard of it either, hence this post.

Earlier this year, Greg Epstein interviewed me for his upcoming book, Tech Agnostic: How Technology Became the World's Most Powerful Religion, and Why it Desperately Needs a Reformation, because I'd mentioned my EA affiliation during an ATiH event and he hadn't run into anyone in both groups before.

I spent about an hour contributing to the AI section of this year's Responsible Tech Guide by adding a few suggestions for "key terms" and "key moments." Other volunteer contributors accepted my suggestions to mention the open letters from FLI and CAIS. However, this sentence also made it into the final draft: "The letter has been criticized for diverting attention from immediate societal risks such as algorithmic bias and the lack of a transparency requirement for training data." I also noticed that "TESCREAL" was deemed one of the eight most important terms to know about responsible AI.

I attended a couple of virtual events.

I participated in the Mentorship Program as a mentee, but dropped out because I wasn't getting much value from it.

ATiH's curated list of 500+ "responsible tech organizations" does not contain FLI, CAIS, AI Impacts, Epoch, or FHI. However, it does contain CHAI (makes sense), CSET & CSIS & CEIP (uh, okay), and (to my surprise) MIRI. The TechCongress fellowship program is present. Edit: On Dec 16, 2023, via the standard form, I suggested adding FLI, CAIS, AI Impacts, Epoch, and Horizon to the Responsible Tech Org List. Edit: As of Jan 23, 2024, FLI, CAIS, AI Impacts, Epoch, and Horizon were all added :)