This is exploratory work with a hand-built developmental architecture, not a claim about LLMs. The goal is to understand how individuality and affect emerge before reward, suppression, or RLHF and, drawing on my background in social work, to explore alternatives to coercive training methods.

My Setup

I use a “Twins” design: two agents with the same 10k-neuron continuous cortex, Oja-style plasticity, energy/sleep cycles, and state-dependent noise.

- Twin A: receives tiny HRLS-style nudges (“Principle Cards”) to the cortex→emotion matrix.

- Twin B: identical architecture, no HRLS, no cards (“pure surge”).
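To make "Oja-style plasticity" concrete, here is a minimal sketch of a textbook Oja update applied row by row to a small weight matrix. It is not the Twins code: the function name `oja_step`, the learning rate, the toy sizes, and the Gaussian input are all assumptions chosen for illustration, and the energy/sleep cycles, state-dependent noise, and Principle Cards are left out.

```python
import numpy as np

def oja_step(W, x, lr=1e-3):
    """One Oja-style update for a weight matrix W of shape (n_out, n_in).

    Each row follows Oja's single-unit rule, dw = lr * y * (x - y * w):
    a Hebbian term plus a decay term that keeps the row norm bounded.
    """
    y = W @ x                      # post-synaptic activity, shape (n_out,)
    hebb = np.outer(y, x)          # Hebbian term y_i * x_j
    decay = (y ** 2)[:, None] * W  # normalising term y_i^2 * w_ij
    return W + lr * (hebb - decay)

rng = np.random.default_rng(0)
n_in, n_out = 8, 4  # toy sizes (assumption), far smaller than a 10k-neuron cortex
W = rng.normal(scale=0.1, size=(n_out, n_in))

# Hypothetical input stream with one dominant direction of variance (axis 0).
scales = np.ones(n_in)
scales[0] = 3.0
for _ in range(20000):
    x = rng.normal(size=n_in) * scales
    W = oja_step(W, x)

row_norms = np.linalg.norm(W, axis=1)
print("row norms after training:", np.round(row_norms, 3))
```

The property that matters here is that the decay term keeps weights from blowing up without any external reward or normalisation step, which is what lets two agents run unsupervised for many steps.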

Key properties:

- No reward function

- No RLHF

- No supervised labels

- Autonomous sensory loop (“dreaming on their own past”)

100 independent A/B pairs are run for 50k steps each (~75 GPU hours).

Metrics I used

For each checkpoint I track:

- Activity scalar: norm of cortex activation

- Emotion scalar: mean of emotion-field activation

Correlations are computed per run: within each A/B pair, Twin A's scalar is correlated with Twin B's across checkpoints.
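The two scalars and the per-run correlation can be sketched as follows. This assumes a Pearson correlation over checkpoints and an L2 norm for the activity scalar; the function names and the random stand-in data are hypothetical, not taken from the actual runs.

```python
import numpy as np

def activity_scalar(cortex):
    """Norm of the cortex activation vector at one checkpoint (L2 assumed)."""
    return float(np.linalg.norm(cortex))

def emotion_scalar(emotion):
    """Mean of the emotion-field activation at one checkpoint."""
    return float(np.mean(emotion))

def run_correlation(series_a, series_b):
    """Pearson correlation between Twin A's and Twin B's scalar series."""
    a = np.asarray(series_a, dtype=float)
    b = np.asarray(series_b, dtype=float)
    return float(np.corrcoef(a, b)[0, 1])

# Stand-in data: random activations for one A/B pair over 50 checkpoints.
rng = np.random.default_rng(0)
n_checkpoints = 50
cortex_a = rng.normal(size=(n_checkpoints, 100))
cortex_b = rng.normal(size=(n_checkpoints, 100))

act_a = [activity_scalar(c) for c in cortex_a]
act_b = [activity_scalar(c) for c in cortex_b]
r_activity = run_correlation(act_a, act_b)
print(f"activity correlation for this pair: {r_activity:+.3f}")
```

Repeating this for every pair gives one activity correlation and one emotion correlation per run, which are the quantities summarized in the results.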

Results after ~75 GPU hours

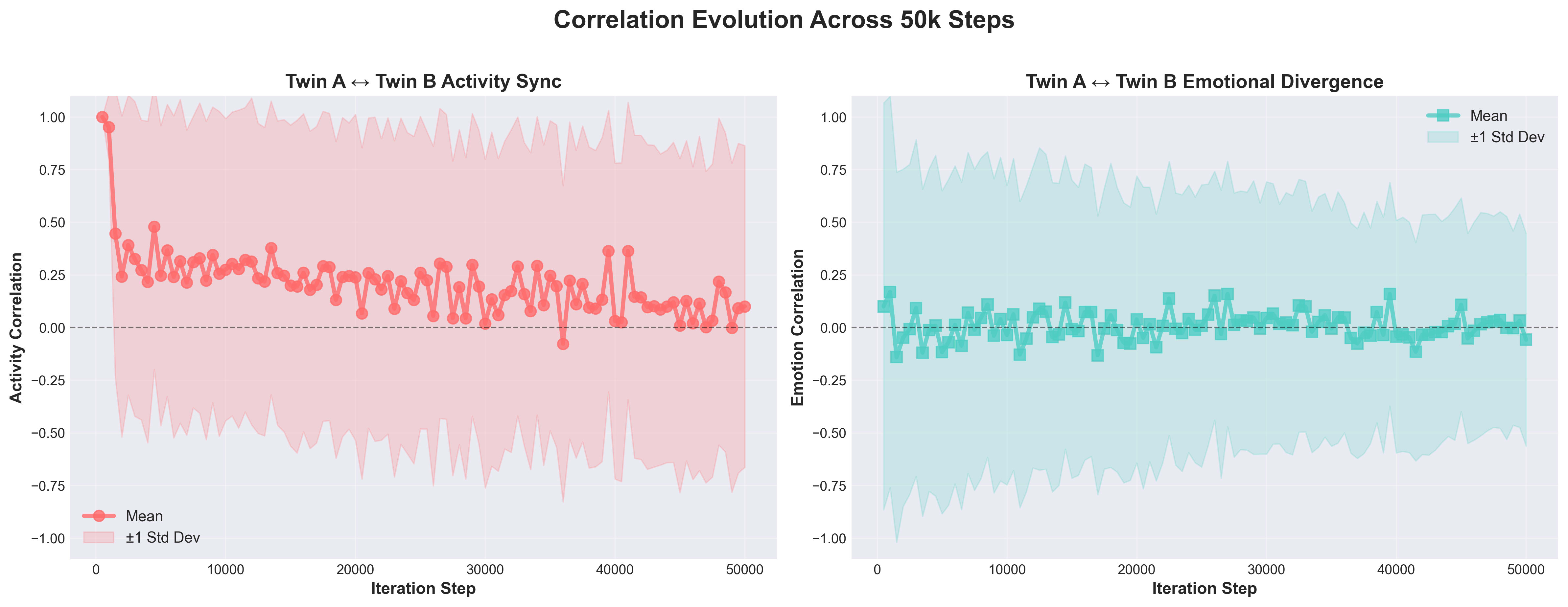

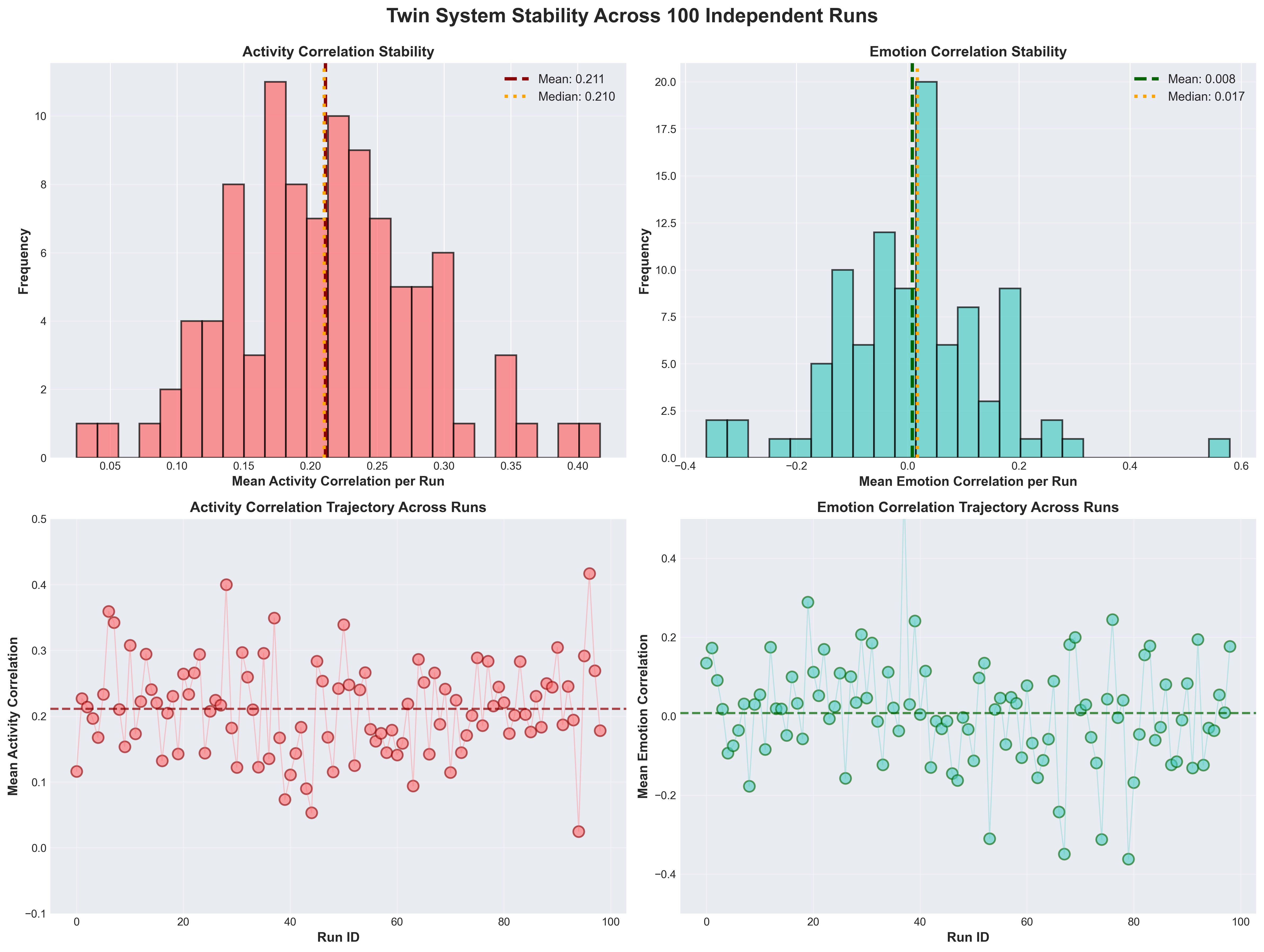

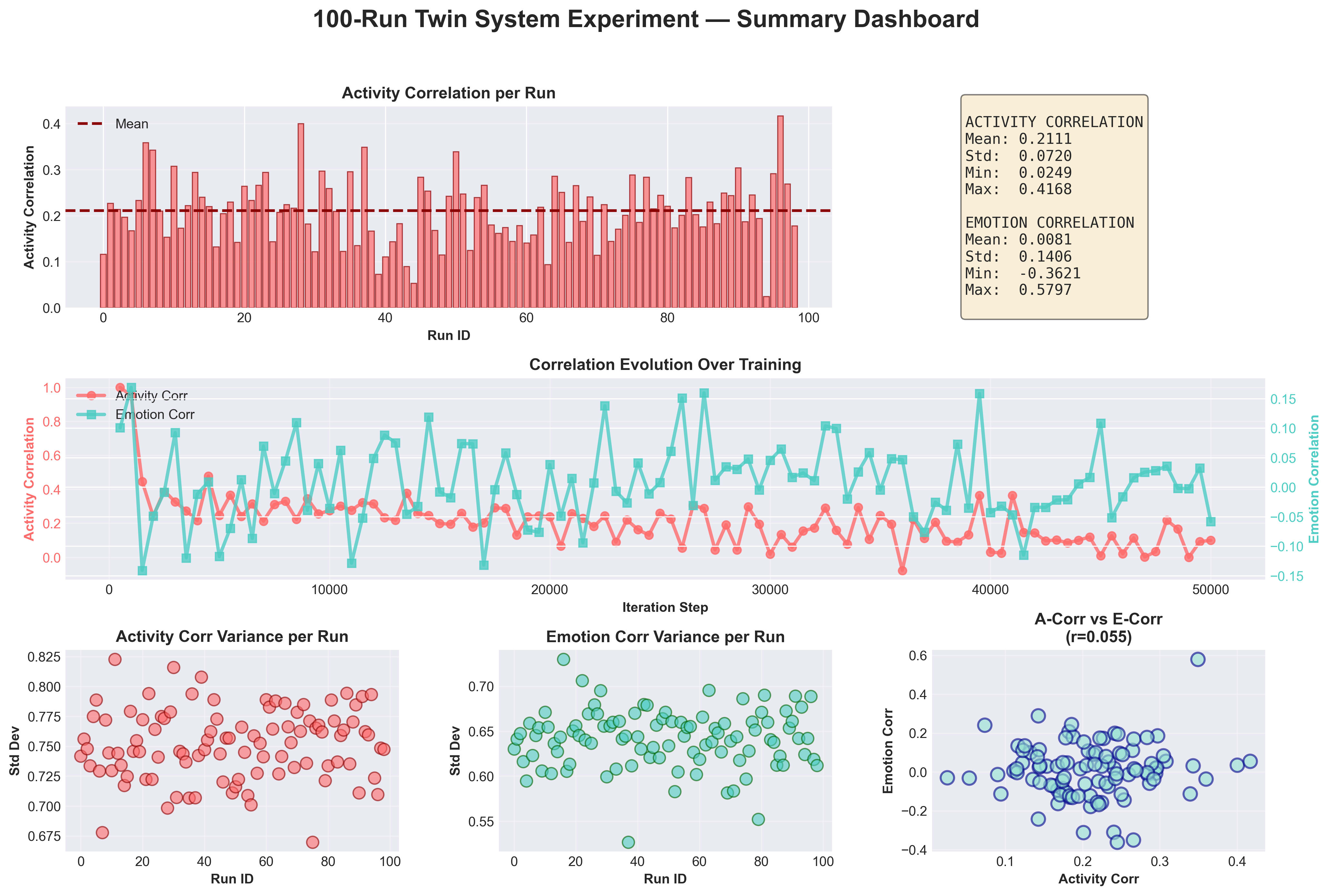

Activity: consistent mild coupling

Across 100 runs, run-level activity correlations cluster tightly:

- Mean ≈ 0.21 ± 0.07, range ≈ 0.02 → 0.42.

Interpretation:

Regardless of noise and HRLS, Twins reliably settle into a shared motor/dynamical style.

They are neither clones nor strangers.

Emotion: no canonical relationship

Emotion correlations are broad and centered near zero:

- Mean ≈ 0.008 ± 0.14, range ≈ –0.36 → +0.58.

Some twin pairs end up aligned, some anti-aligned, many orthogonal.

HRLS does not predict an emotional relationship; temperament diverges.

Activity ≠ emotion (r ≈ 0.055)

The correlation between activity coupling and emotion coupling is essentially zero.
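For readers who want to reproduce this kind of summary on their own runs, here is a rough sketch. It assumes the "±" values are sample standard deviations and that the cross-measure r is a Pearson correlation over the per-run values; the arrays below are random placeholders, not the results reported here.

```python
import numpy as np

def summarize(r_values):
    """Mean, sample std, and range of a set of run-level correlations."""
    r = np.asarray(r_values, dtype=float)
    return {
        "mean": float(r.mean()),
        "std": float(r.std(ddof=1)),
        "min": float(r.min()),
        "max": float(r.max()),
    }

def coupling_of_couplings(activity_r, emotion_r):
    """Pearson r between activity coupling and emotion coupling across runs."""
    return float(np.corrcoef(activity_r, emotion_r)[0, 1])

# Placeholder per-run correlations for 100 hypothetical pairs.
rng = np.random.default_rng(1)
activity_r = rng.uniform(-1.0, 1.0, size=100)
emotion_r = rng.uniform(-1.0, 1.0, size=100)

print("activity:", summarize(activity_r))
print("emotion: ", summarize(emotion_r))
print("activity vs emotion coupling:", coupling_of_couplings(activity_r, emotion_r))
```

A near-zero value from `coupling_of_couplings` means that knowing how strongly a pair is coupled in activity tells you little about how it is coupled in emotion.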

Twins can share a body style without sharing a temperament.

This suggests emotion sits in a sensitive downstream subspace where small developmental differences get amplified.

- Individuality emerges before reward: even without RLHF or suppression, twin siblings develop distinct affective styles.

- Same model, same training ≠ same temperament: stochasticity and small nudges matter.

- Baseline for welfare/alignment: this gives a pre-reward reference distribution for people studying stress signatures in systems that do use reward/suppression.

In the future I would like to try:

- 2×2 ablations (HRLS on/off × same/different init)

- Richer emotion metrics (variance, spectra, PC1)

- Scaling cortex/emotion field sizes

- Porting the protocol to tiny transformers or RL agents
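As a starting point for the 2×2 ablation, the condition grid could be laid out like this. The seeding scheme in `pair_seeds` and the dictionary keys are hypothetical; they only illustrate how "same init" and "different init" pairs might be distinguished.

```python
from itertools import product

def pair_seeds(run_id, same_init):
    """Hypothetical seeding: twins share an init seed or get distinct ones."""
    seed_a = 2 * run_id
    seed_b = seed_a if same_init else seed_a + 1
    return seed_a, seed_b

# The four cells of the design: HRLS on/off crossed with same/different init.
conditions = [
    {"hrls": hrls, "same_init": same_init}
    for hrls, same_init in product([True, False], [True, False])
]

# Expand each cell into a few runs (3 here, purely for illustration).
plan = []
for cond in conditions:
    for run_id in range(3):
        seed_a, seed_b = pair_seeds(run_id, cond["same_init"])
        plan.append({**cond, "run_id": run_id, "seed_a": seed_a, "seed_b": seed_b})

for entry in plan[:4]:
    print(entry)
```

Crossing the two factors separates divergence caused by the nudges from divergence caused by initialization alone.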

My conclusion

A simple developmental architecture can produce:

- Stable similarity in dynamics, and

- Diverse, unpredictable emotional relationships

long before any reward or censorship.

Individuality isn’t something you train into a system; it’s something that emerges as soon as you let it develop.

Thank you to friends and collaborators who encouraged me to finish this and put it out. You were right.