- AI sceptics, the general public, and AI alarmists all get AI risks wrong

- Risks are real, and our society has yet to face them — AI sceptics are wrong

- The risks that don’t matter (general public misconceptions)

- Automation, less work satisfaction

- Mass unemployment

- AI will change our relationship with labor and the economy, but this is not necessarily a bad thing

- AI will improve the living standards of billions

- Significant boost in productivity and innovation

- Automation can drive down costs, boost efficiency, and facilitate new markets and opportunities

- Improvement in human welfare

- Healthcare, education, personalized services

- Unlocking innovation and creativity

- Automating routine tasks and complementing human intelligence

- Long-term AI is very likely a complement; short-term, it may be a substitute

- Better decision-making and governance

- The risks that do matter

- Bioweapons

- AI-enabled weaponry

- Stable authoritarian regimes / surveillance

- Authoritarian control

- Undermining democracy and freedom of expression

- Centralization of power

- Personalized manipulation

- Loss of autonomy and individual freedom

- Economic and social instability (particularly in labor markets)

- The transition period is the problem, not the economy itself

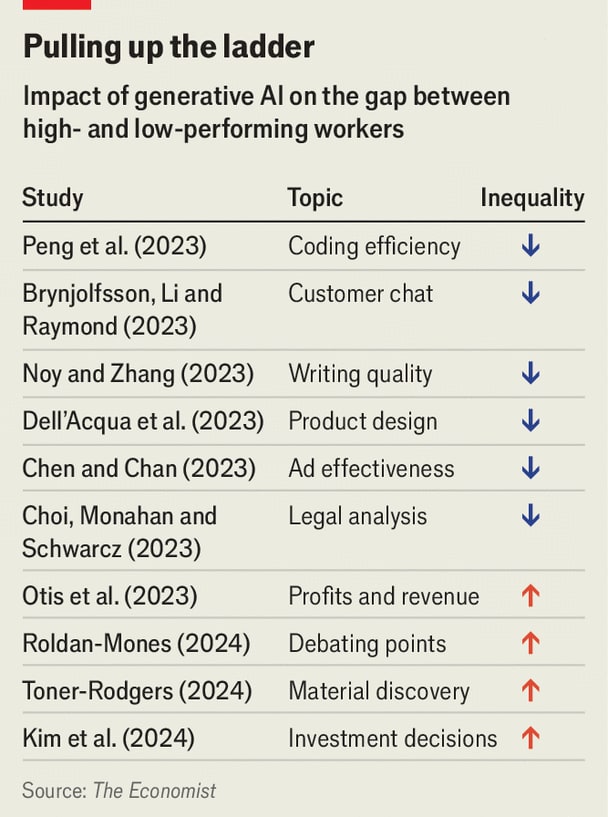

- Inequality and social division

- Good evidence suggests AI benefits the already skilled

- We will need:

- Better education and retraining programs

- The next generation may become AI-dependent idiots with no original ideas, or it may be the most exciting generation ever

- Higher taxes for the rich

- Social support

- Some aspects of alignment

- Blackbox problem / interpretability

- Misalignment

- A practical issue at the current level of LLMs — no need to speculate about AGI

- AGI plausibility

- I don’t rule out the possibility of AGI (plausible within the next century)

- However, not with the current architecture and data sources

- There is no honest way to assign a probability estimate since it’s a black swan

- AGI is a narrative of top AI labs to justify their insane investments

- AGI is not possible with the current generation of neural networks

- Gen AI shows that supposedly hard tasks (writing, image generation) are easier than thought

- However, current LLMs still cannot abstract and generalize well (e.g., multiplication, video games)

- Doing well on grad school tests is a bad proxy for real-world value

- Recursive self-improvement doesn’t hold: LLMs can automate straightforward tasks but still leave rigorous reasoning to humans

- We can see a slowdown in LLM development: the difference between GPT-4 and GPT-4.5 is much smaller than between GPT-3 and GPT-3.5

- I am not fully sure of this claim; it would be good to verify

- However, I am interested to see if Dynamics movement algorithm improvements + LLMs + computer vision with large capability improvements can lead to another leap in AI progress

- I still believe deep learning is the most exciting technology of this decade. That’s why I take classes and attend conferences on it

- Most interesting applications of AI that I see: bio, crypto / web3 (can finally make crypto useful!), materials science, mathematics, physics, econ/social science, business analytics

I will keep this updated here:

https://eshcherbinin.notion.site/7-theses-of-eugene-shcherbinin

Hey Eugene, interesting stuff!

1) "Long-term AI is very likely a complement; short-term, it may be a substitute"

I wonder why you think this?

2) "Good evidence suggests AI benefits the already skilled"

I feel like the evidence here is quite mixed; e.g., see this article from The Economist: https://www.economist.com/finance-and-economics/2025/02/13/how-ai-will-divide-the-best-from-the-rest