This write-up is a summary of Chapter 2 ("How could we be so wrong") of On the Overwhelming Importance of Shaping the Far Future by Nicholas Beckstead. The chapter originally spans ~12,000 words; here we summarize its main points in ~1,800 words.

This chapter of Beckstead's thesis discusses Bayesian Ethics, evidence of error in moral intuition, and some biases specific to longtermism. I have omitted the discussion relevant only to the latter.

Outline of Chapter Two - How could we be so wrong

In the first section, the standard Bayesian framework for scientific inquiry is introduced.

In the second section, the framework is applied to moral philosophy to argue that when assigning credence to moral theories we should:

- give less weight to fit with intuition in particular cases (eg “no number of headaches can be worse than a death”) and cherry-picked counterexamples

- give more weight to metaethical theories, basic hunches about moral theories (eg "utilitarianism seems elegant"), and basic epistemic standards

In the next three sections, three bodies of evidence are presented favoring the conclusion that human moral intuition about specific cases is prone to error:

- A historical record of accepting morally absurd social practices

- A review of the scientific literature on human bias in intuitive judgment

- A philosophical account showing deep inconsistencies in common moral convictions

In the sixth section, it is argued that there are specific biases that make humans underestimate the importance of the far future.

In the seventh and last section Beckstead summarizes his arguments and conclusions.

In this summary, I group together the first two sections, which explain the Bayesian approach to ethics, and likewise the three sections that provide evidence against the reliability of moral intuition. I will not cover the sixth section; interested readers can consult the original text.

A Bayesian approach to moral philosophy

In the first two sections, Beckstead introduces Bayesian curve fitting and argues that it applies to moral philosophy as well as to scientific inquiry.

His main takeaway is that, to the extent that we expect moral intuitions to be biased, we should rely less on fit to intuition and on counterexamples when assigning credence to moral theories.

Bayesian curve fitting

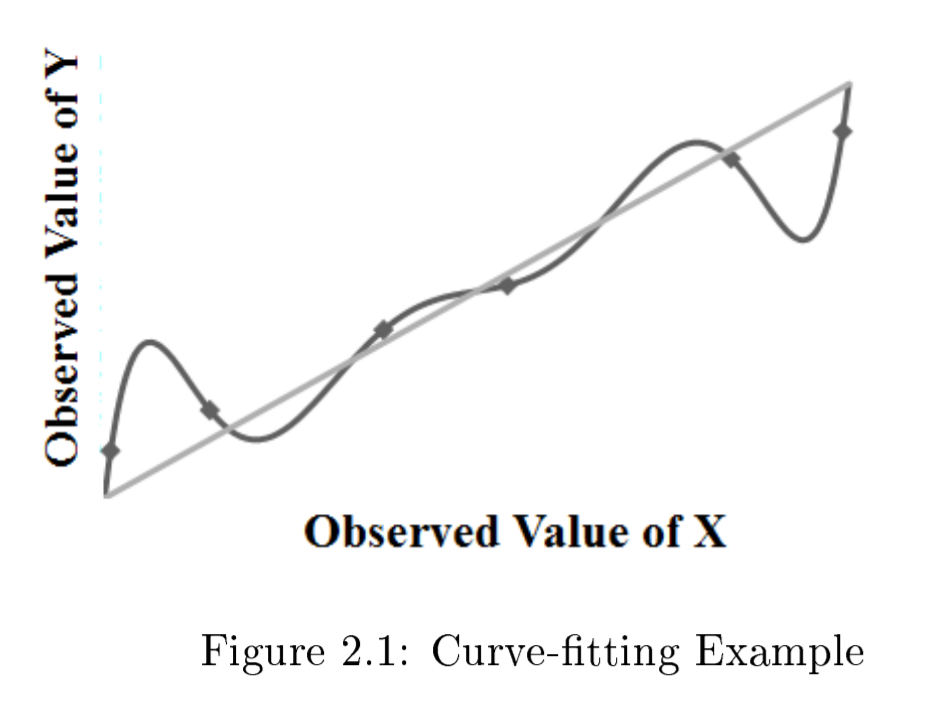

In the curve-fitting problem, we have a collection of noisy data points ("observations") and a set of curves ("models"), both relating an input X to an output Y. We want to find the model that best explains the observations collected so far and that will best extrapolate to inputs we have not yet seen.

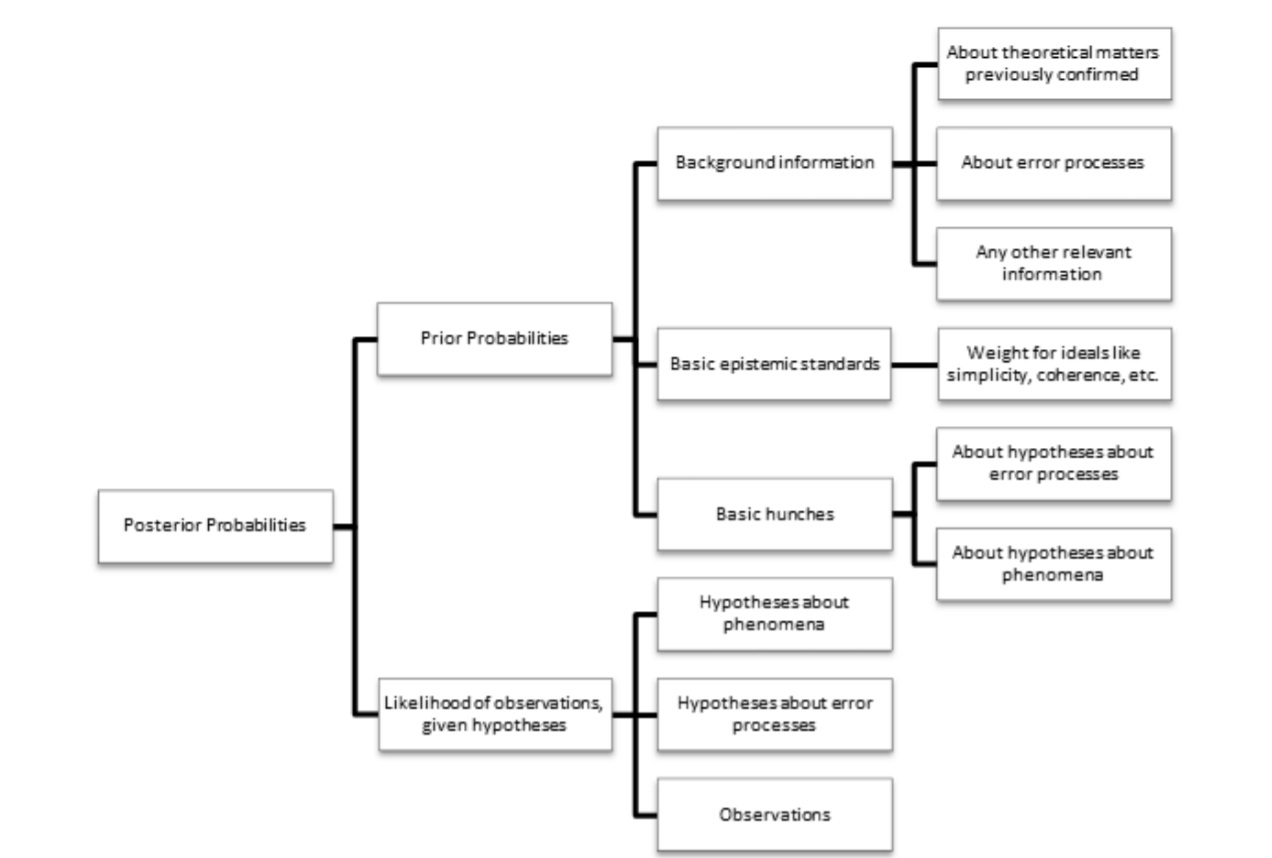

In Bayesian curve fitting we start by assigning a prior credence to each possible model based on background information, basic epistemic standards (eg simplicity) and basic hunches, and then update it based on the observations and our hypothesis of how the observations might be noisy or biased. Beckstead summarizes the approach with the following figure:
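To make the procedure concrete, here is a minimal Python sketch of Bayesian model comparison over a finite set of candidate curves. It assumes independent Gaussian noise with a known standard deviation `sigma`; the three candidate curves, their prior credences, and the data points are hypothetical illustrations, not taken from Beckstead.

```python
import math

def gaussian_log_likelihood(residuals, sigma):
    """Log-probability of the residuals under independent N(0, sigma^2) noise."""
    n = len(residuals)
    return (-0.5 * sum((r / sigma) ** 2 for r in residuals)
            - n * math.log(sigma * math.sqrt(2 * math.pi)))

def posterior_over_models(models, priors, xs, ys, sigma):
    """Apply Bayes' rule to a finite set of candidate curves."""
    log_post = {}
    for name, curve in models.items():
        residuals = [y - curve(x) for x, y in zip(xs, ys)]
        log_post[name] = math.log(priors[name]) + gaussian_log_likelihood(residuals, sigma)
    # Normalise in log space to avoid numerical underflow.
    top = max(log_post.values())
    unnormalised = {name: math.exp(lp - top) for name, lp in log_post.items()}
    total = sum(unnormalised.values())
    return {name: w / total for name, w in unnormalised.items()}

# Hypothetical candidate models and prior credences
# (simpler curves get more prior weight).
models = {
    "constant": lambda x: 3.0,
    "line": lambda x: 2.0 * x,
    "parabola": lambda x: x ** 2,
}
priors = {"constant": 0.4, "line": 0.4, "parabola": 0.2}

# Hypothetical noisy observations.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [0.1, 2.2, 3.9, 6.1]

print(posterior_over_models(models, priors, xs, ys, sigma=0.5))
print(posterior_over_models(models, priors, xs, ys, sigma=100.0))
```

With `sigma=0.5` almost all of the posterior credence goes to `"line"`. With `sigma=100.0` the observations are nearly uninformative, and the posterior stays close to the prior.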

Bayesian curve fitting as a method of scientific inquiry is fairly standard and well argued for in other texts.

Beckstead draws two key implications from it:

- When you expect more error, rely on priors more. The higher the variance, the more plausible it is that any particular observation can be explained by noise.

- When you expect your observations to be systematically biased and you don't know the sign and/or the magnitude of the bias, rely on priors more.
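Both implications can be illustrated with the textbook normal-normal model. This is a minimal sketch under assumptions that are not in Beckstead's text. A quantity `theta` has a normal prior. Each of `n` observations equals `theta` plus independent noise of variance `noise_var`, plus (optionally) a single bias term shared by all observations. The bias has unknown sign and size and is modelled as a zero-mean normal with variance `bias_var`. Under these assumptions the posterior mean is a weighted average of the prior mean and the sample mean, and `data_weight` returns the weight placed on the data.

```python
def data_weight(prior_var, noise_var, n, bias_var=0.0):
    """Weight the posterior mean gives to the sample mean rather than the prior mean.

    Assumes theta ~ N(mu0, prior_var) and y_i = theta + b + e_i, with
    e_i ~ N(0, noise_var) independent and one shared bias b ~ N(0, bias_var).
    Given theta, the sample mean then has variance noise_var / n + bias_var.
    """
    effective_var = noise_var / n + bias_var
    return prior_var / (prior_var + effective_var)

def posterior_mean(prior_mean, prior_var, sample_mean, noise_var, n, bias_var=0.0):
    """Posterior mean of theta: a precision-weighted blend of data and prior."""
    w = data_weight(prior_var, noise_var, n, bias_var)
    return w * sample_mean + (1 - w) * prior_mean

# Implication 1: more noise -> less weight on the data, more on the prior.
print(data_weight(prior_var=1.0, noise_var=1.0, n=10))  # about 0.91
print(data_weight(prior_var=1.0, noise_var=4.0, n=10))  # about 0.71

# Implication 2: a shared bias of unknown sign does not average out;
# even with a million observations the weight on the data stays below 0.5.
print(data_weight(prior_var=1.0, noise_var=1.0, n=10, bias_var=1.0))
print(data_weight(prior_var=1.0, noise_var=1.0, n=10**6, bias_var=1.0))
```

If the sign and size of the bias were known, one could simply subtract it. In this sketch, it is the uncertainty about the bias (`bias_var`) that pushes credence back toward the prior.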

Relevance for moral methodology

Beckstead claims that our moral intuitions are a kind of noisy data, and that our credences in moral theories should be updated in accordance with our best epistemic theory, ie Bayesian analysis.

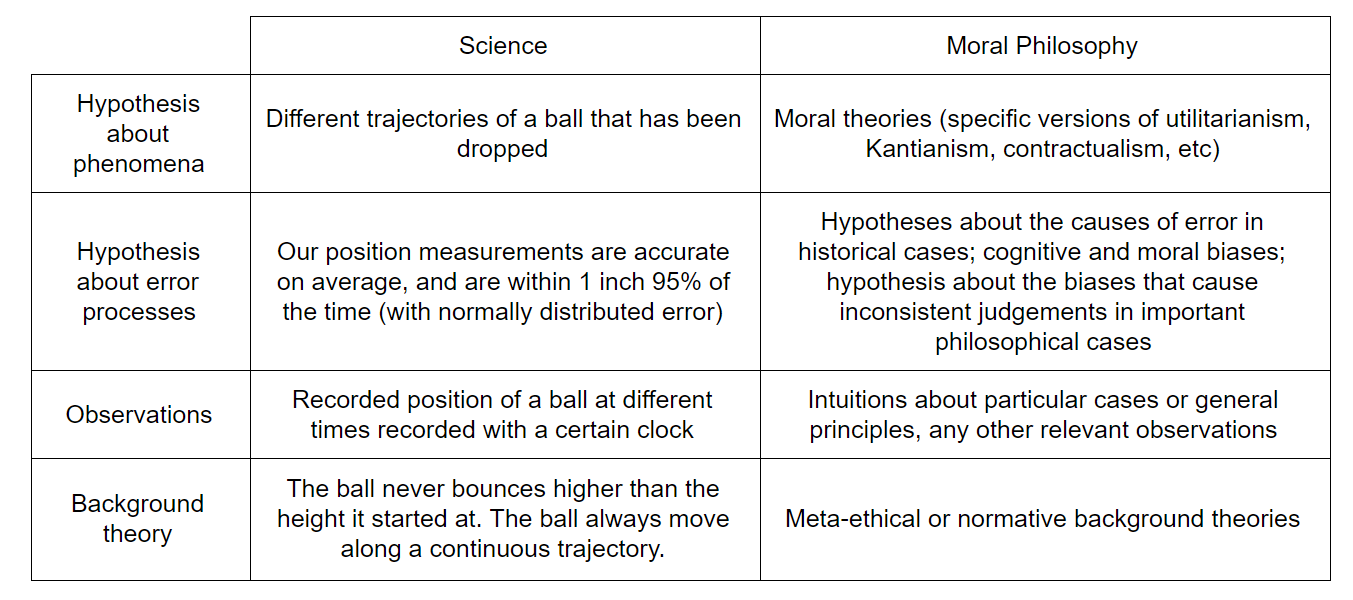

Beckstead summarizes the translation of Bayesian curve fitting from scientific grounds to moral philosophy grounds with the following table:

Beckstead discusses a possible objection to the Bayesian approach to ethics: moral philosophy is a priori and therefore requires different methodological standards. He counters by arguing that Bayesian updating is a reasonable approximation of how people change their beliefs as they think, even about a priori matters. As an example of a priori reasoning that works this way, he describes a math student trying to figure out whether every differentiable function is continuous by trying out examples.

Now, Beckstead claims that our moral intuitions are especially noisy and biased, and thus we should rely more on our moral priors, as he discussed in the previous section. From this proposition Beckstead draws two main conclusions:

- Focus on the big picture. A few counterexamples are not strong evidence against a moral theory that does reasonably well with respect to most of the intuitive data, one's background theories, and basic epistemic standards (though they are some evidence against it). Evidence is much stronger if we can provide multiple kinds of counterexamples.

  For example, Beckstead argues that the Levelling Down Objection is only weak evidence against egalitarianism. To show that this reasoning does not dismiss every counterexample, he argues that, eg, the Hell One and Hell Two objection is strong evidence against average utilitarianism.

- Give more weight to simplicity, or to whatever your basic epistemic standards endorse, than you otherwise would. For example, if you endorse simplicity, your prior should give more weight to rule consequentialism than to pluralistic deontology, because the latter has more adjustable parameters.
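A toy Bayesian calculation, under simplifying assumptions that are not in Beckstead's text, shows why several counterexamples of the same kind can add little beyond the first. Suppose a counterexample intuition appears with probability `error_rate` if the theory is correct (because intuition erred) and with probability `hit_rate` if the theory is wrong. The hypothetical `correlation` parameter is the probability that all `k` counterexamples share one cause. When they share one cause, they appear together and count as a single piece of evidence. When they have independent causes, their likelihoods multiply. All numbers are hypothetical.

```python
def posterior(prior, p_evidence_if_true, p_evidence_if_false):
    """P(theory is correct | evidence) by Bayes' rule."""
    numerator = prior * p_evidence_if_true
    return numerator / (numerator + (1 - prior) * p_evidence_if_false)

def prob_all_k(p_single, k, correlation):
    """P(all k counterexamples appear), mixing 'one shared cause'
    (probability `correlation`) with 'k independent causes'."""
    return correlation * p_single + (1 - correlation) * p_single ** k

prior = 0.5       # hypothetical prior credence in the theory
error_rate = 0.3  # hypothetical P(counterexample intuition | theory correct)
hit_rate = 0.9    # hypothetical P(counterexample intuition | theory wrong)
k = 3             # number of counterexamples

for correlation in (0.0, 0.5, 1.0):
    p = posterior(prior,
                  prob_all_k(error_rate, k, correlation),
                  prob_all_k(hit_rate, k, correlation))
    print(f"correlation={correlation}: P(theory | {k} counterexamples) = {p:.3f}")
# roughly 0.036, 0.167 and 0.250; a single counterexample alone also gives 0.250
```

Independent counterexamples (different kinds) drive credence down sharply. Fully correlated ones (the same kind, one shared error process) are worth no more than a single counterexample.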

Evidence in favor of moral error

In sections 3-5, Beckstead presents evidence for the claim that our moral intuitions are especially noisy. He discusses 1) historical moral mistakes, 2) the scientific literature on human bias, and 3) impossibility results showing that some of our strongest moral intuitions are mutually inconsistent.

The historical record

- In the past, there was widespread, correlated, biased moral error, even on matters where people were very confident. As examples, Beckstead points to the treatment of women, slaves, homosexuals, etc.

- By induction, we make similar errors, even on matters where we are very confident.

Beckstead argues that the biases that caused the errors of the past remain embedded in us and will cause similar classes of moral mistakes.

Beckstead preemptively addresses some arguments against his claims:

- Objection: Past errors were mostly due to limited and/or inaccurate non-normative information. Since we have much more information, we should expect much less moral error. Beckstead responds that:

  - moral judgments are produced by emotional processes and verbally justified post hoc, so further information won't drastically change our intuitions;

  - we might not have uncovered all error-relevant information;

  - we might not have internalized all available, error-relevant information.

- Objection: On some metaethical views (eg idealized preferences), historical moral error was probably much less abundant than on others.

  - Beckstead argues that although some historical error can be explained by changing standards and preferences, error can happen independently, and metaethical theories that cannot account for this should be penalized.

- Objection: We have reason to believe that philosophers would not be subject to whatever these historical error processes were.

  - Beckstead presents several historical counterexamples, such as Aristotle's endorsement of slavery.

The scientific record: biases

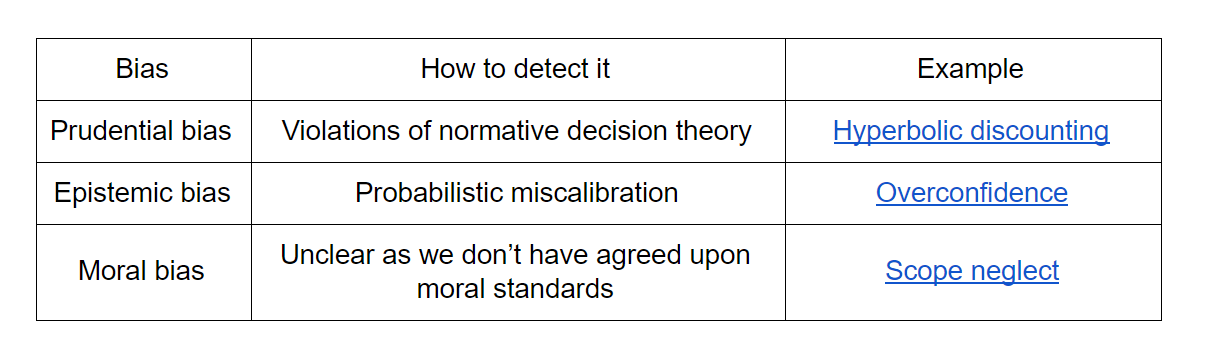

- Scientific findings on prudential, epistemic, and moral heuristics and biases strongly suggest that our moral judgments are subject to error processes which are widespread and biased.

- Because of this, we should expect philosophers' intuitions to be subject to error processes which are widespread and biased.

Beckstead cites as evidence Kahneman and Tversky’s seminal work on heuristics and biases. He then explains three types of biases:

Beckstead appeals to intuition to argue that we should expect that there are many unknown moral biases. He also cites some experiments on bias to argue that philosophers are no less prone to moral bias.

The philosophical record

- A number of impossibility results show that certain moral judgments about which philosophers are very confident are inconsistent with each other. As examples, Beckstead explains Parfit's Mere Addition Paradox and Temkin's Spectrum Paradoxes.

- Therefore, we should expect that there are some error processes underlying these judgments that bias us toward overconfidence, and we should not expect to find a theory of eg population ethics which accords with all of our most confident moral judgments.

- A relatively limited amount of resources (the careers of a few very insightful philosophers) generated most of these impossibility results.

- This search process is unlikely to have uncovered a significant proportion of the important impossibility results.

- Therefore, we should expect that there are many more such impossibility results.

- Therefore, we should expect that analogous error processes are operating in many cases where impossibility results have not yet been discovered, and that it will be impossible to find theories that accord with all of our most confident moral judgments in these cases as well.
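The logical shape of these impossibility results can be sketched in code. The following minimal Python sketch is my own illustration, not Beckstead's. It treats each confident judgment as a pairwise comparison "X is at least as good as Y", optionally strict, and checks whether any ranking of outcomes could satisfy all of them. Such a ranking exists exactly when no strict judgment lies on a cycle of judgments. The encoding of the Mere Addition Paradox below is a simplified, hypothetical three-population version.

```python
from collections import defaultdict

def find_inconsistency(judgments):
    """judgments: list of (better, worse, strict), read as `better` is at least
    as good as `worse` (strictly better if strict is True).

    Returns a strict judgment lying on a cycle (so no ranking can satisfy all
    judgments), or None if a consistent ranking exists."""
    graph = defaultdict(set)  # edge better -> worse means "better >= worse"
    for better, worse, _ in judgments:
        graph[better].add(worse)

    def reaches(start, target):
        """Depth-first search: is there a chain start >= ... >= target?"""
        stack, seen = [start], set()
        while stack:
            node = stack.pop()
            if node == target:
                return True
            if node in seen:
                continue
            seen.add(node)
            stack.extend(graph[node])
        return False

    for better, worse, strict in judgments:
        # better > worse together with worse >= ... >= better
        # would imply better > better, a contradiction.
        if strict and reaches(worse, better):
            return (better, worse)
    return None

# Hypothetical, simplified encoding of the Mere Addition Paradox.
judgments = [
    ("A+", "A", False),  # adding people with lives worth living is not worse
    ("B", "A+", True),   # B: equal, with higher total and average than A+
    ("A", "B", True),    # A is better than B (iterated, this resists the
                         # Repugnant Conclusion)
]
print(find_inconsistency(judgments))      # ('B', 'A+')
print(find_inconsistency(judgments[:2]))  # None
```

Dropping any one of the three judgments restores consistency. This mirrors the point that no theory can accommodate all of them at once.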

Beckstead argues that these impossibility results should prompt us to 1) doubt our moral intuitions and 2) accept that many of our moral intuitions will not be satisfactorily explained by any moral theory.

Conclusion

Verbatim from the thesis:

Learning about moral errors through history, biased heuristics generating our moral judgments, and a collection of impossibility results should tell us that our moral judgments are subject to errors that are hard to detect and hard to correct. In light of this, we should trust intuition less and rely on our priors more. We should not expect to find a theory that fits all of [our] most confident moral judgments, and we should largely be engaged in an exercise in damage control, especially in population ethics. Finally, we should expect these error processes to lead us to significantly underestimate the importance of shaping the far future.

This summary was written by Jaime Sevilla, summer fellow at the Future of Humanity Institute. The source material is due to Nicholas Beckstead, and I have directly reused many sentences from his work. This representation of Beckstead's work is only as correct as my understanding of it - if critiquing the original work please consult the source rather than presume my characterisation of it is correct. I do not necessarily endorse the conclusions reached by Beckstead.

I want to thank Max Daniel and Alex Hill for their insightful comments and thoughtful discussion on the draft of this summary.

Bibliography

- Nicholas Beckstead. On the Overwhelming Importance of Shaping the Far Future, chapter 2. Available online at https://rucore.libraries.rutgers.edu/rutgers-lib/40469/PDF/1/play/
