This is a linkpost for https://www.forethought.org/research/design-sketches-collective-epistemics

We’ve recently published a set of design sketches for AI tools that help with collective epistemics.

We think that these tools could be a pretty big deal:

- If it gets easier to track what’s trustworthy and what isn’t, we might end up in an equilibrium which rewards honesty

- This could make the world saner in a bunch of ways, and in particular could give us a better shot at handling the transition to more advanced AI systems

We’re excited for people to start building tech that gets us closer to that world. We hope our design sketches will make this area more concrete and inspire people to get started.

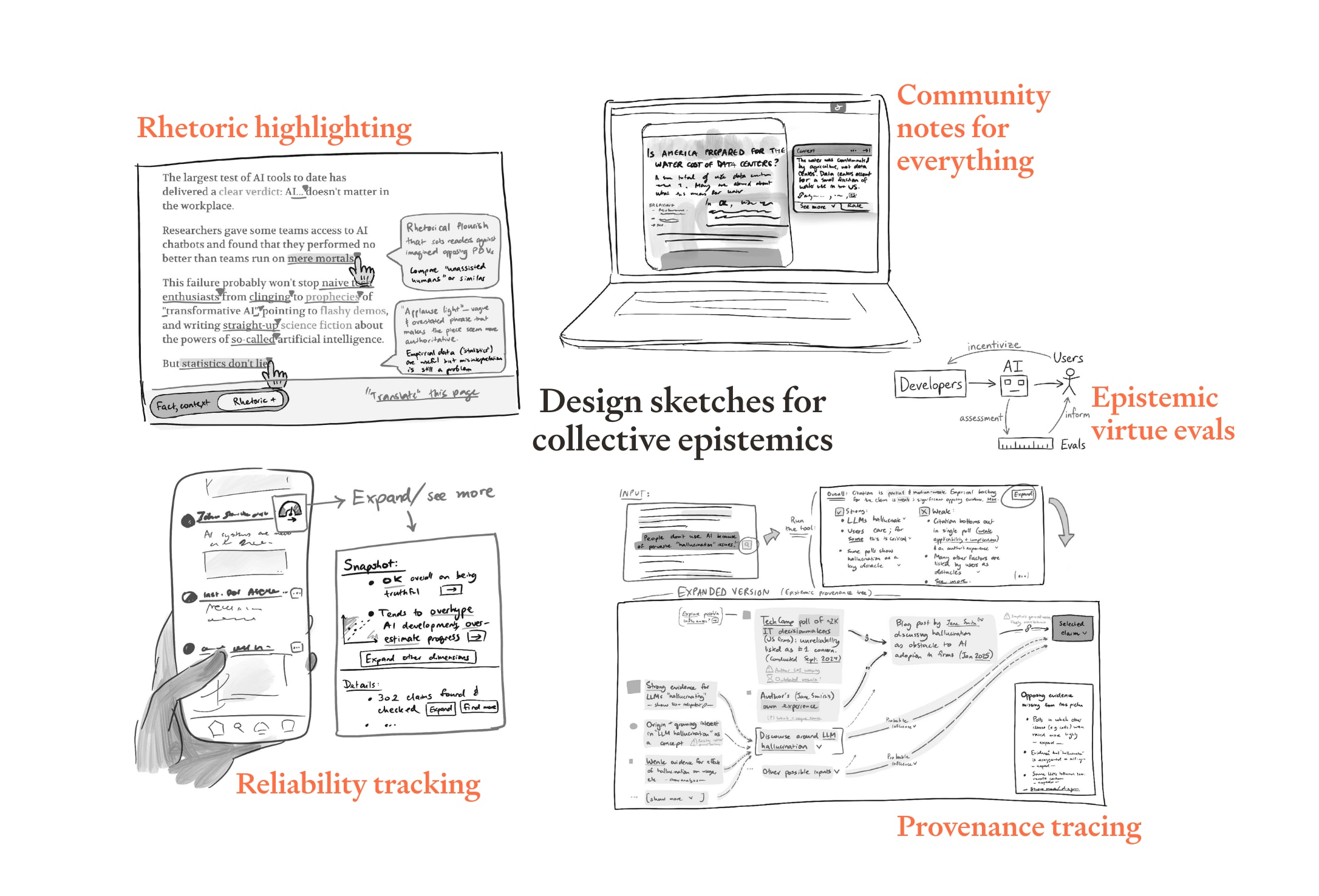

The (overly-)specific technologies we sketch out are:

- Community notes for everything — Anywhere on the internet, content that may be misleading comes served with context that a large proportion of readers find helpful

- Rhetoric highlighting — Sentences which are persuasive-but-misleading, or which misrepresent cited work, are automatically flagged to readers or writers

- Reliability tracking — Users can effortlessly discover the track record of statements on a given topic from a given actor; those with bad records come with health warnings

- Epistemic virtue evals — Anyone who wants a state-of-the-art AI system they can trust uses one that’s been rigorously tested to avoid bias, sycophancy, and manipulation; with “pedantic mode” enabled, its individual statements avoid being even ambiguously misleading or false

- Provenance tracing — Anyone seeing data or claims can instantly bring up details of where they came from, how robust they are, etc.

If you have ideas for how to implement these technologies, issues we may not have spotted, or visions for other tools in this space, we’d love to hear them.

This article was created by Forethought. Read the original on our website.