In a world now painfully aware of pandemics, and with ever-increasing access to autonomous biological agents, how can we help channel society’s response to COVID-19 to minimize the risk of deliberate misuse? Using the challenge of securing DNA synthesis as an example, Kevin Esvelt outlines the key norms and incentives governing biotechnology, lays out potential strategies for reform, and suggests ways in which thoughtful individuals might safely and credibly discuss and mitigate biorisks without spreading information hazards.

We’ve lightly edited Kevin’s talk for clarity. You can also watch it on YouTube and read it on effectivealtruism.org.

The Talk

Sim Dhaliwal (Moderator): Hello, and welcome to this session on mitigating catastrophic biorisks with Professor Kevin Esvelt. Following a 15-minute talk by Professor Esvelt, we'll move on to a live Q&A session where he will respond to your questions. [...]

It's my pleasure to introduce our speaker for this session. Professor Kevin Esvelt leads the Sculpting Evolution Group at MIT. He helped pioneer the development of CRISPR genome editing and is best known for his invention of CRISPR-based gene drive systems, which can be used to edit the genomes of wild species. He has led efforts to ensure that all research in the field is open and community-guided, while calling for caution regarding autonomous biological agents that could be misused.

Professor Esvelt's laboratory broadly focuses on mitigating catastrophic biological risks by developing countermeasures, changing scientific norms to favor early-stage peer review, and applying cryptography to secure DNA synthesis without spreading information hazards. Here's Professor Kevin Esvelt.

Kevin: To those of us who seek to prevent the catastrophic misuse of biotechnology, the tragedy of COVID-19 has completely changed the landscape. Suddenly, the entire world is keenly aware of our profound vulnerability to pandemic agents.

That does have an upside, because after a catastrophe, we always overreact. That means our job in the near term is to ensure that that reaction is channeled as effectively as possible to prevent future biological catastrophes.

There are a few things we should definitely have two years from now:

* An early warning system in which we perform metagenomic sequencing of samples taken from sewage, waterways, and anonymous travelers all over the world. Sequencing all of the nucleic acids [from these sources] would establish a baseline, hopefully allowing us to detect any anomalies — anything new that's increasing in frequency — in time to do something about it. That's the highest priority.

* Protections that we can develop faster than a vaccine. For example, we might take the human protein receptors that mammalian viruses use to enter us and make decoy versions of all of them. These could serve as therapeutics in the event of a new pandemic agent that tries to use one of those receptors. Or, if delivered by gene therapy, these decoys could even protect us in advance, serving as the equivalent of a vaccine. If we did this for all viruses that rely on a protein receptor, we’d be protected from any agent in those viral families in advance.

* Dedicated resources for coordination. I would love to see the creation of an independent [oversight body] that’s the equivalent of the Federal Reserve. It could be narrowly focused on autonomous biological agents and have authority over other government agencies in that narrow area. Ideally, this group would also have an explicit mandate to cooperate with other nations, because we're all in this together.

The problem is that even though these three measures would broadly help mitigate pandemic agents, whether natural viruses or engineered agents, that's not going to be true of everything in our response. Many scientists are actively engaged in sharing methods that will accelerate the research response to COVID, for example, by making it easier to synthesize and edit variants of viruses.

There are also many researchers looking at natural viruses. What are the zoonotic reservoirs? What is out there, and what exactly is it that causes a virus to jump species and spread effectively in a new one?

The problem is that in biotech, our ability to engineer things is directly proportional to our understanding of how nature does it. Insofar as someone reveals what it is on a molecular level that allows a virus to jump species and spread in humans, it becomes that much easier to engineer it deliberately.

Some people will say, “This is wildly implausible. We don't know how to engineer autonomous agents that can spread on their own in the wild.” Well, if you happen to know otherwise, definitely don't correct them. But you can point to a safe example.

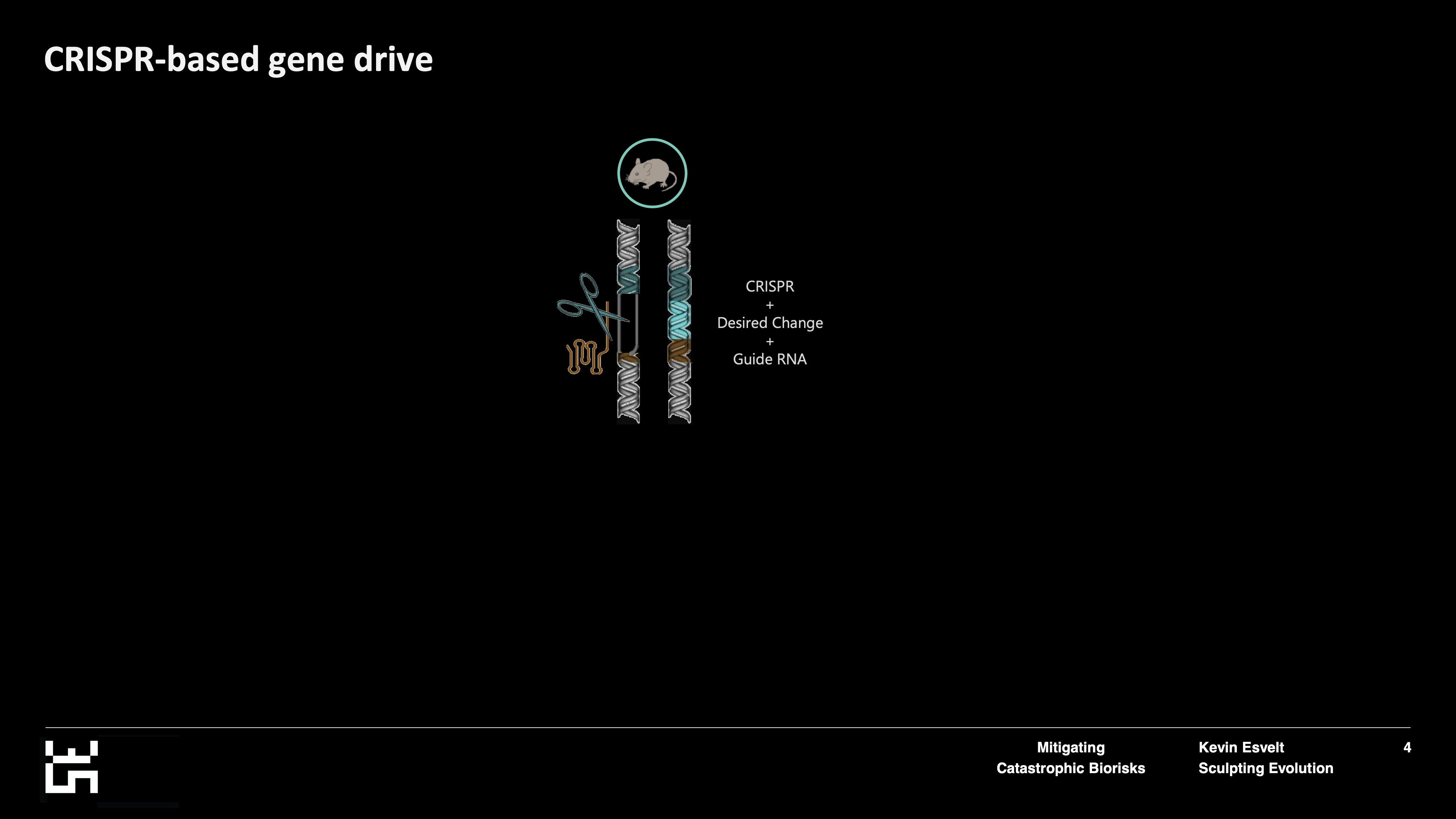

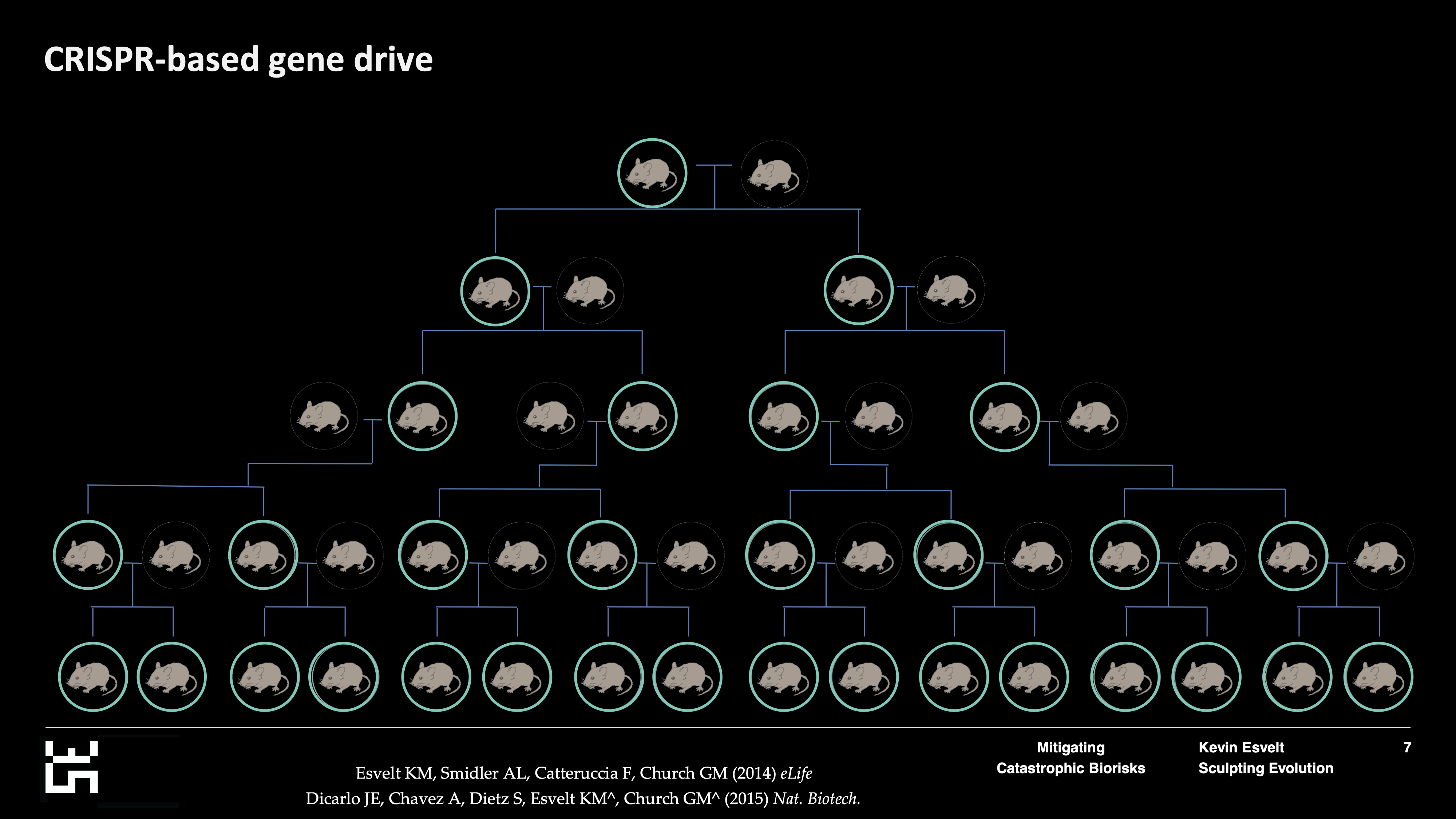

Eight years ago, no one had even imagined that a single researcher would be able to build a construct that could spread to impact an entire species. Then came CRISPR.

I played a minor role in developing CRISPR, and shortly thereafter, wondered what would happen if, instead of just editing the genome of an organism, we actually encoded CRISPR next to the change we wanted to make.

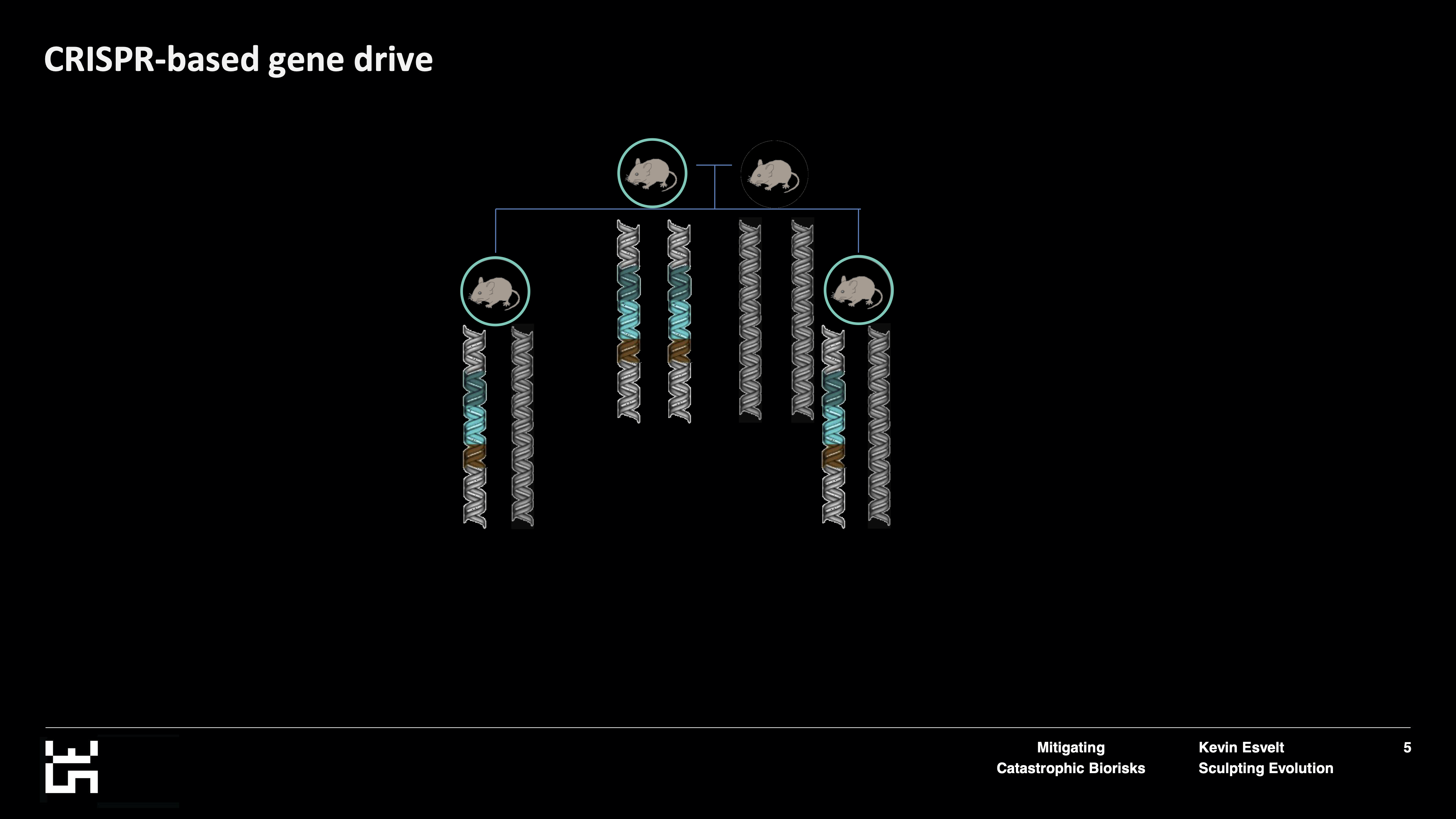

Then CRISPR would cut the wild-type version of the chromosome and copy itself and the alteration over, thereby ensuring that most of the offspring inherit both the alteration and the CRISPR system. Genome editing would happen again in the reproductive cells of the next generation, thereby ensuring greater inheritance and causing this CRISPR-based gene drive system to spread through the whole population.
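As a rough illustration of why biased inheritance leads to spread, here is a minimal sketch of a textbook-style deterministic model. It assumes random mating and no fitness cost, and the `conversion` parameter (the fraction of heterozygote germlines in which the drive copies itself) and the starting frequency are hypothetical values chosen for illustration, not figures from the talk.

```python
def next_frequency(q: float, conversion: float) -> float:
    """Allele frequency in the next generation under random mating.

    Heterozygotes (frequency 2q(1-q)) pass the allele on with probability
    (1 + conversion) / 2 instead of the Mendelian 1/2.
    """
    homozygotes = q * q
    heterozygotes = 2 * q * (1 - q)
    return homozygotes + heterozygotes * (1 + conversion) / 2


def simulate(q0: float, conversion: float, generations: int) -> list:
    """Track the allele frequency over a number of generations."""
    freqs = [q0]
    for _ in range(generations):
        freqs.append(next_frequency(freqs[-1], conversion))
    return freqs


if __name__ == "__main__":
    # conversion=0.0 is ordinary inheritance; 0.95 is an assumed drive efficiency.
    ordinary = simulate(0.01, conversion=0.0, generations=12)
    drive = simulate(0.01, conversion=0.95, generations=12)
    for gen, (o, d) in enumerate(zip(ordinary, drive)):
        print(f"generation {gen:2d}  ordinary allele: {o:.3f}  drive: {d:.3f}")
```

With `conversion` set to zero the frequency stays where it started, while a high value carries the allele from 1% toward fixation within roughly a dozen generations in this idealized model. Real populations add fitness costs, resistance, and spatial structure that this sketch ignores.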

This is likely to be one of our most effective tools when it comes to challenges such as eradicating malaria. But it's also clear evidence that we can, in fact, build constructs that we believe will reliably spread to affect a species.

It's safe to talk about this because the CRISPR-based gene drive favors defense. It's slow, it's obvious, and it can be overwritten. That's why we thought it was safe to tell the world about the technology in the first place, and I stand by that assessment.

Although this technology is safe, viruses may well be another story — and that story must go untold. We already know that historical pandemic viral agents can be constructed from scratch. In fact, they seem to be accessible to pretty much anyone with the right skills: someone who knows the sequence for making something that could become a pandemic. Just about anyone can get the relevant DNA, because we don't screen all DNA synthesis everywhere in the world for hazards.

The real problem, of course, is that it only takes one person. Thankfully, very few people are in the grip of a sufficiently destructive and pathological ideology or mental illness that would cause them to consider deliberately building and releasing a pandemic agent. But again, it only takes one. We must reduce that risk as much as we can — especially since historical pandemic agents are not guaranteed to take off. If someone were to make 1918 influenza today, I would give it only a small chance of spreading to become anything approximating a new pandemic. We need to keep in mind that evolution is not trying to harm us.

An engineered agent could plausibly be worse than anything that we've seen in nature, so we must do everything we can to prevent this sort of thing from happening. I am by no means saying that it is possible to do this today. Rather, we should assume that future advances will lead to technologies that enable us to build more harmful agents.

If we see that day coming before any bad actor does, we could, in principle, get universal DNA synthesis screening working and deny widespread access to DNA. That would be effective. However, right now that would disclose what we're screening for, which would enhance the credibility of these kinds of agents as bioweapons and actively encourage bad actors to make them.

How can we avoid that? We somehow need to make all DNA synthesizers unable to produce potential bioweapons — without disclosing what we think might make a potential bioweapon. We need to do so in a way that's adaptive, because we don't know how to do it today, but we may learn how in the future. As we learn, and as any scientist spots a potential way of doing this, we need a way for them to enter that information into the system without [revealing] what it is.

This challenge of securing DNA synthesis without spreading information hazards is really a microcosm of the broader challenge of preventing the catastrophic misuse of biotechnology. How do we do it without disclosing what it is that we're afraid of?

We need to keep in mind that it's not just a technical problem. Even if we do come up with a technical solution that could prevent misuse, we also need to implement it in the real world, and that means we need to concern ourselves with incentives. Why don't most companies screen DNA for hazards? Well, it's expensive. Current screening methods create false positives that require human curation — an expense that most companies won’t take on. We need to fix that.

There's also a global challenge. Pandemics ignore borders. But DNA synthesis, for example, is done by companies throughout the world, and governments are presently in no position to talk to each other and deal with this shared problem. There's a lack of trust.

Then, there's the challenge of scientific norms. All of us, without exception, deeply resent any kind of interference in our research — especially bureaucratic interference. Many scientists believe that all knowledge is worth having, that we have a moral obligation to share everything that we find: all discoveries, genomes, and techniques, because they earnestly believe that that approach will save lives. I disagree. How are we going to deal with that?

The Secure DNA project is one attempt to deal with this problem by identifying and controlling the bottleneck that limits pretty much everyone from building autonomous biological agents: access to the relevant DNA.

We've gone about it by deliberately tackling the incentives at the outset. The way it works to avoid disclosure actually encourages companies to use it. Here's how it works.

Assume that you identify something problematic. You approach an expert who is authorized to work with the system. They might confer with one or two other experts to make sure they agree it's a threat. If they do, they would then enter it into the system — but not by entering the entire sequence you're worried about. Instead, they'd choose essential fragments at random, compute all of the functional equivalents, and then remove anything with a match in GenBank that might raise a false positive.

These fragments would then be hashed (i.e., transformed cryptographically) using a distributed multi-party oblivious transformation method. The result would be a harmless database of hashed fragments that couldn't be interpreted without the cooperation of every server in the entire network. DNA synthesis orders would be subjected to a similar process: fragment them, then transform them using the network.

Then, these uninterpretable fragments can simply be directly compared. If there's a match, the synthesizer rejects the order. And since this keeps not only the potential hazards private, but also the orders, there's an incentive for companies to work with firms that use this system: It protects their orders from potential industrial espionage. Another incentive for providers is that it's fully automated. The false-positive rate will be so low that it should not require any kind of separate human curation for more than a decade.
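The matching step can be sketched in a few lines. This is a toy illustration only, not the project's implementation: a single-key HMAC stands in for the distributed multi-party oblivious transformation (in which no single party would hold the key), the random fragment selection and functional-equivalent expansion are omitted, and the fragment length, function names, and placeholder sequences are all assumptions made for the example.

```python
import hashlib
import hmac

FRAGMENT_LENGTH = 12  # hypothetical window size, chosen only for this toy example


def fragments(sequence: str, length: int = FRAGMENT_LENGTH) -> set:
    """Return every overlapping window of `length` bases in the sequence."""
    sequence = sequence.upper()
    return {sequence[i:i + length] for i in range(len(sequence) - length + 1)}


def transform(fragment: str, key: bytes) -> str:
    """Keyed one-way transform of a fragment.

    ASSUMPTION: a single-key HMAC standing in for the distributed
    multi-party oblivious transformation described in the talk.
    """
    return hmac.new(key, fragment.encode("ascii"), hashlib.sha256).hexdigest()


def build_hazard_database(hazard_fragments: set, known_benign: set, key: bytes) -> set:
    """Hash flagged fragments, dropping any that also appear in benign
    sequences (the step of removing matches to avoid false positives)."""
    return {transform(f, key) for f in hazard_fragments if f not in known_benign}


def screen_order(order: str, hazard_db: set, key: bytes) -> bool:
    """Return True if the order may be synthesized, False if it is rejected."""
    hashed_order = {transform(f, key) for f in fragments(order)}
    return hashed_order.isdisjoint(hazard_db)


if __name__ == "__main__":
    key = b"demo-key-not-secret"
    flagged = {"AAAACCCCGGGG", "TTTTGGGGCCCC"}  # meaningless placeholder strings
    benign = {"TTTTGGGGCCCC"}                   # pretend this also occurs harmlessly
    db = build_hazard_database(flagged, benign, key)
    print(screen_order("GATTACAGATTACAGATTACA", db, key))
    print(screen_order("GATTACA" + "AAAACCCCGGGG" + "GATTACA", db, key))
```

Only hashes are ever compared, so neither the database nor the order needs to be readable by whoever performs the comparison. The privacy guarantee in the real design comes from splitting the transformation across many servers, which this single-key stand-in does not provide.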

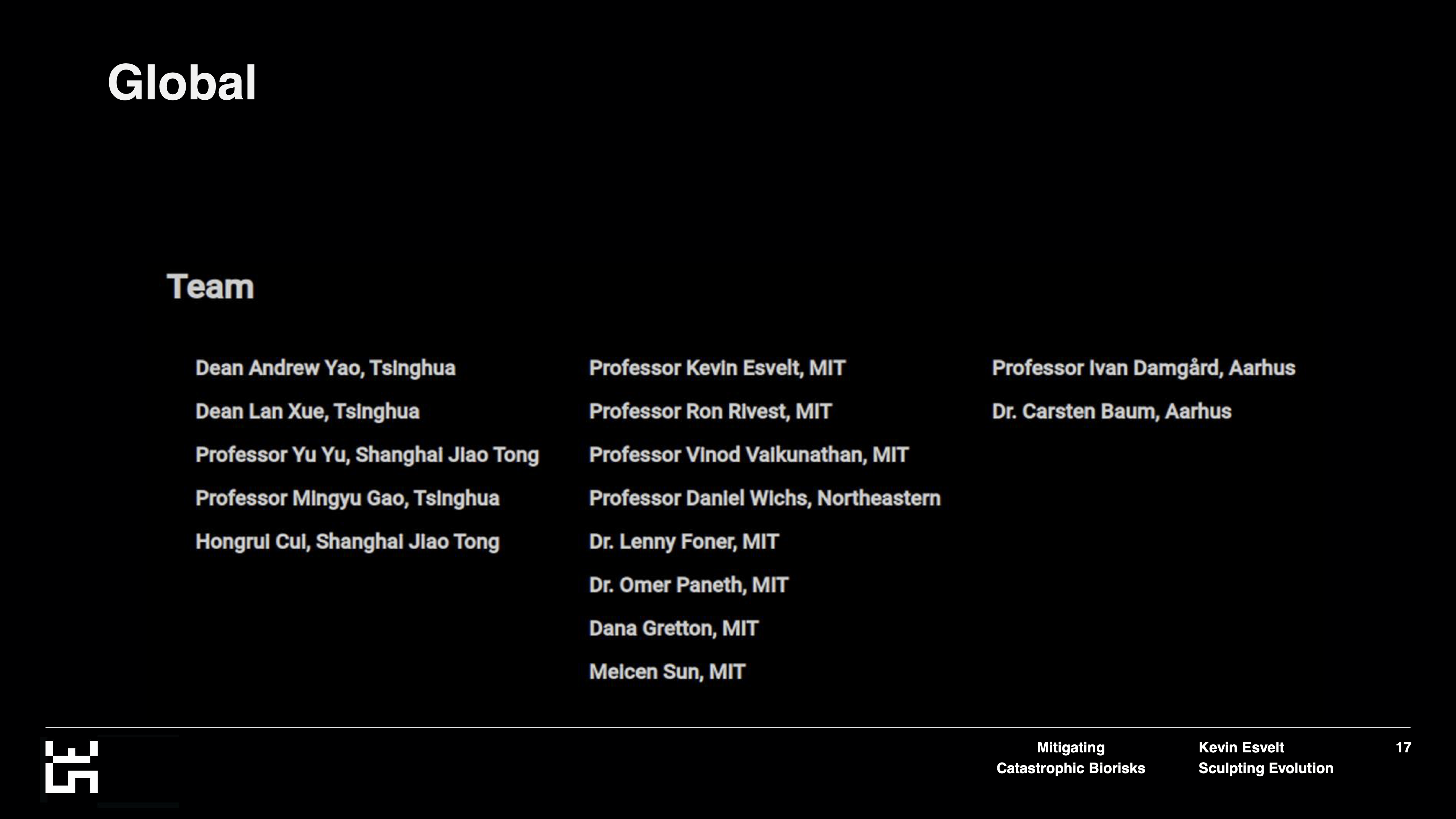

[The project also addresses] the global aspect of the challenge. We recognized that governments were not ready to do anything with this, so we launched the project from the outset as an international collaboration among scientists and cryptographers in the United States, working closely with our partners in China and in Europe. We're also in discussions with industry partners throughout the world, and we're letting governments know what we're doing. But we're not letting them become involved in any way due to those persistent issues of mistrust.

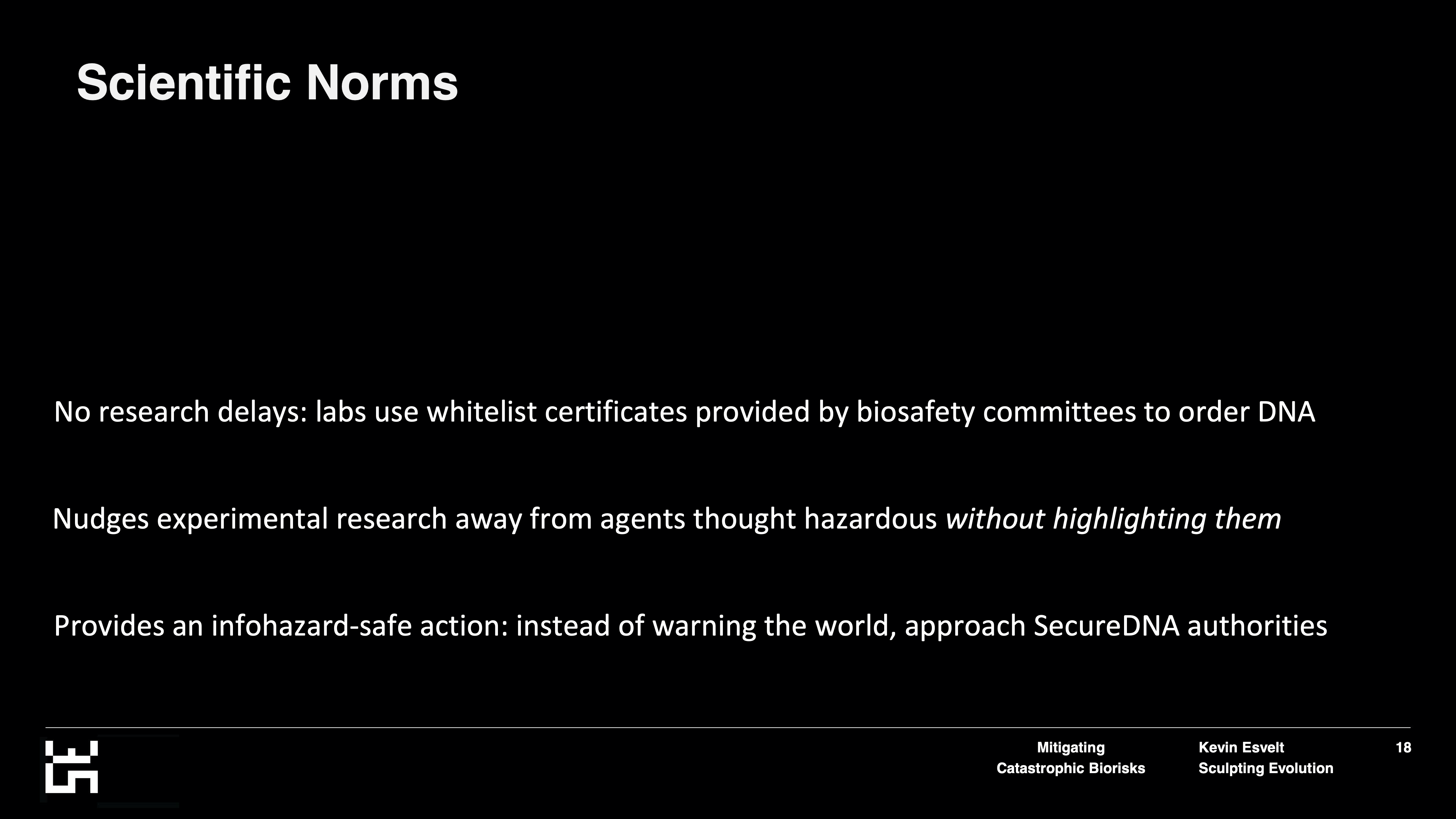

Finally, there's the delicate issue of scientific norms. Here, our overwhelming priority is to avoid doing anything that would delay anyone's research at all. In fact, we want to proceed such that someone working on an agent that might, in the future, be considered hazardous never actually learns that it has been so designated. That’s very much the point.

How can we do that? Whenever we begin a new experiment, we're supposed to get permission from our biosafety committee. If that biosafety committee then provided a certificate corresponding to a white list — i.e. everything that we are allowed to make and work with — that certificate could then be provided to the relevant DNA synthesis company, and allow us to make anything, even if it's on the hazards list.

That would ensure that anyone who is already working on an agent wouldn't even notice that it had been added to the list. But someone who wanted to do something new and creative with a sequence they haven't worked with before would need to get permission from their biosafety committee (like they're supposed to be doing already in order to work with such sequences). This would effectively nudge experimental research away from anything that might be hazardous, but it wouldn't highlight that research or deny scientists’ requests to do it, assuming they could obtain permission from their biosafety committee, which usually you can.
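The decision logic this implies is simple enough to sketch. The `Certificate` record, the opaque entry identifiers, and the `decide` function below are hypothetical names invented for illustration. The talk does not specify how certificates would be encoded or checked.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional, Set


@dataclass(frozen=True)
class Certificate:
    """Hypothetical whitelist issued by a lab's biosafety committee."""
    lab: str
    approved: FrozenSet[str]  # opaque identifiers of hazards-list entries


def decide(matches: Set[str], certificate: Optional[Certificate] = None) -> str:
    """Return "synthesize" or "reject" for a single order.

    `matches` holds the hazards-list entries the order matched, if any.
    """
    if not matches:
        return "synthesize"  # nothing in the order is on the hazards list
    approved = certificate.approved if certificate else frozenset()
    if matches <= approved:
        return "synthesize"  # every match is covered by the lab's whitelist
    return "reject"


if __name__ == "__main__":
    cert = Certificate(lab="example-lab", approved=frozenset({"entry-17"}))
    print(decide(set()))                       # ordinary order, no certificate
    print(decide({"entry-17"}, cert))          # lab already approved for this
    print(decide({"entry-17"}))                # same sequence, no approval
    print(decide({"entry-17", "entry-42"}, cert))
```

A lab whose certificate already covers an entry sees no change when that entry is added to the list, which is the property described above.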

Perhaps most importantly, this provides an infohazard-safe option for scientists who spot something of concern. If Secure DNA is successful and you identify something of concern, instead of naively warning the world, and likely precipitating the creation of the very agent that you're concerned about, you could work with the system to ensure that it gets added, and consequently synthesizers will be unable to make it (unless the lab has specific permission to work with that kind of agent).

I'm not saying that this is an ideal system. I'm sure there are a lot of flaws and holes in it. It's not intended to be perfect; we can't stop well-resourced actors from making DNA. But we believe that this is a start. It will show that good science and safety can go together, and we would very much welcome any feedback or suggestions from anyone and everyone.

More broadly, this challenge of preventing the catastrophic misuse of biotechnology is almost certainly one of the most important issues that we will face in the next several decades.

If you're interested in helping out, whether that's by working in the trenches, striving to convince other scientists that we need to be cautious, and changing norms from the inside, or by changing incentives and becoming an editor, working for a funder, or going into policy, please do.

If you'd like help in that and would like experience working in a lab, please reach out to me. Thank you so much to the organizers for this opportunity, and to all of you for your attention. Stay well.

Sim: Great. Thank you so much for that talk, Kevin. I see we've had a number of questions come in, so let's kick off the Q&A. The first question is very topical: The focus on biosecurity has obviously increased due to COVID, but has the pandemic actually changed any of your views?

Kevin: This is a tough question. What has changed is my estimation of our preparedness for a more severe event. I'm hopeful that will change due to the COVID response itself, but right now, I have to say that we were less well-prepared than I anticipated. I was particularly surprised by the response of WHO [the World Health Organization] and the CDC [Centers for Disease Control and Prevention], which was extremely disappointing.

On the other hand, a number of middle-income countries have done extraordinarily well thus far — much better than anticipated. Hopefully, we can glean some lessons from them to apply more widely.

Sim: Which countries [have done better than you expected]?

Kevin: It might change, but Southeast Asia in particular has had extremely few infections, even though the region experienced a lot of exposure to the original Wuhan event. Thailand and Vietnam have really kept the virus under control to an extraordinary degree.

South America is getting hit very hard right now, but Uruguay is doing exceptionally well. It is barely a high-income country, but it’s much better organized [than other South American countries]. Small government is good government, in that smaller countries tend to have better governance, less partisanship, etc. Even [given that advantage], they are keeping numbers down extraordinarily well. Hopefully, that will continue.

Sim: Yes. Is Southeast Asia’s [relative success] primarily because they've dealt with SARS and MERS previously, and they were just better prepared than Europe and other countries?

Kevin: That's certainly part of it. But I'd be surprised if that was all of it. It could just be the culture of ubiquitous mask-wearing from the get-go, although that's clearly not enough to stop super-spreading events.

I'm not an expert in this and haven't really looked into it. It would be great if someone did. We don't know what the next natural threat will be, but in terms of engineered threats, unfortunately the very existence of SARS makes it obvious that a SARS-3, or something like it, is possible. The scientific community is going to study and illuminate exactly what it takes for a coronavirus to spread in humans. That is not necessarily a good thing, but it is inevitable at this point.

Sim: Yes. What probability would you put on a GCBR [global catastrophic biological risk] emerging in the next 10 years? What about in the next century?

Kevin: It's important to benchmark this, and we don't have very many data points of COVID-level events (thankfully). But history suggests that we're probably looking at something like a half-percent to one percent chance of something like COVID happening every year. That provides a baseline. There is probably around a 5% chance of something plausibly worse in the next decade.
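To see how an annual baseline translates into a per-decade figure, here is a small worked calculation. It assumes each year is independent with a constant probability, which is a simplification introduced here rather than something stated in the talk, and `chance_within` is a hypothetical helper name.

```python
def chance_within(years: int, annual_probability: float) -> float:
    """Probability of at least one event in `years`, assuming independent
    years with the same probability each year."""
    return 1 - (1 - annual_probability) ** years


if __name__ == "__main__":
    for annual in (0.005, 0.01):
        decade = chance_within(10, annual)
        print(f"{annual:.1%} per year -> {decade:.1%} over 10 years")
```

Under that assumption, a half-percent to one percent annual chance of a COVID-level event works out to roughly 5% to 10% over a decade. The 5% figure for something plausibly worse is a separate estimate and is not derived from this calculation.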

A century is a very long time. My concern is that the biorisk field perhaps isn’t, on the whole, defense-biased. However, we might be able to discover technologies or apply strategies that could change that. My hope is that we'll be able to do that sometime in the next few decades; that would completely change the calculus for the rest of the century.

I'd rather not give priors on the century level. That's a bit too long of a period [to be able to estimate].

Sim: Do you generally think that the frequency of natural pandemics will increase over time, given factors like globalization?

Kevin: I'm highly uncertain on this question of natural pandemic frequency going up or down. Obviously, the more human-wild animal contact there is, presumably, the greater the risk. But I'm not convinced that means that the numbers will actually go up. Many people believe [that pandemic frequency will rise for those reasons], but I would note that:

1. More and more people are living in urban areas where they aren’t coming into contact with animals.

2. We've wiped out a lot of natural habitats, which has reduced the populations of a lot of the animals that harbor zoonotic reservoirs of viruses that could conceivably jump across species.

I would say that combination means we're actually at lower risk. But again, I have a very high level of uncertainty [regarding this issue]. It could go either way.

Sim: In thinking about biorisk within the context of EA, longtermism, or other cause areas, how do you think we should compare bio threats against risks from things like advanced AI, global warming, nuclear security, and global conflict? And how do we allocate resources if we care about preserving the future?

Kevin: Again, the caveat here is that I'm naturally extremely biased. Everyone thinks that the cause they’re working on is clearly the most important one, right?

I definitely would not say that bio is the threat over the next century. That said, looking at it over the next 10 to 20 years, I'm quite concerned about bio, more than I am about any of the other areas. Climate change makes everything more difficult, and some of the tail-end risks are going to be concerning, but not over that timeframe. On AI, I would have to defer to the experts. I think nuclear security and global conflict are non-trivial (and again, I’d have to defer to the experts), but I would say that bio is higher than those areas. Climate change can make conflict more likely. They're all interrelated.

But in the next 10 to 20 years, I worry most about bio. Hopefully, that risk will go down and we'll need to worry more about the others later.

Sim: Yes, and I guess when you think about allocating resources, you also have to consider the real world and the timeliness of when to push things. This might be bio's moment to take the largest portion of resources in policy and funding.

Kevin: The challenge, however, is that bio is, I would argue, more infohazard-sensitive than any of the other cause areas by a long shot. The problem with nuclear weapons is proliferation. Nuclear proliferation has nothing on bio proliferation. If we discover the wrong things and disseminate that knowledge, we're going to be in much worse shape in terms of the number of actors with access to information that we may or may not actually be able to defend against.

That's what concerns me: this tightrope between adequately preparing without talking about it, giving specifics, or being able to tell policymakers about the problem. I mentioned in my talk that intelligence communities might be a problem. We know, from the history of espionage, that governments are not very good at keeping secrets about major hazards. Consequently, we don't really want intelligence communities to find out about a scientist discovering something nasty. We want to be able to deal with it while informing, ideally, fewer than five people.

That's why I'm enthused by the idea of setting up algorithmic systems. At least with an algorithm, it's not going to actually build the thing [you’re trying to prevent being built] — at least not until we have to worry about AI, of course. But again, that’s much further down the line.

I'd be interested in hearing whether anyone is concerned that our hash-based scheme with multiple parties, although secure against humans, won’t be secure against an AGI [artificial general intelligence]. Maybe that's a risk downstream. It's a matter of whether you concentrate on mitigating risk sooner or later.

Sim: Are you seeing this being done at all, or well, anywhere around the world? Are there any examples of policymakers acting in sensible ways in terms of infohazards?

Kevin: This is difficult to answer, because I don't have security clearance myself. I'm not in a position to say. And of course, anyone who does isn't allowed to say, either. My assessment overall is that we are acting largely in ignorance of the potential technical nature of the threat. I can't say much more than that, other than that the biosecurity community lacks relevant information on the technical plausibility of a wide variety of actions.

I'm not sure how to fix that, because obviously there are major infohazards to consider. I go back and forth on this: how much of a problem is it that people focus on things that may not be an issue, while ignoring things that they're not aware will become an issue?

I should point out, in full disclosure, that I know of perhaps one or two people who are more concerned about infohazards in bio than I am, so that's my baseline.

Sim: In the world?

Kevin: In the world.

Sim: Right, and I realized the fatal flaw of that question when I said it — and that even if you could answer it, you can’t.

Where is the best place to make an impact in the biosecurity field — in the military intelligence community or in civilian research?

Kevin: Again, I'm ignorant and would defer to those who have more access to the military intelligence community and know more about it than I do. But I've interacted enough with them through funding calls and discussions about certain risks that are best handled by government to know that academia leads in fundamental biotech capabilities. Industry is best in near-term application, but when it comes to seeing what is possible and making it real, biosecurity is not like AI. Academia is well ahead.

My concern with the military intelligence side, for now, is that they're not taking it seriously, probably because they don't spend most of their time hanging out in Kendall Square where every tenth person you meet has the technical capability to do some very worrisome things.

As soon as they _do_ start taking it seriously — as soon as it's treated as a credible threat — that is an incentive for others to start playing with it. I'm actually not sure that we necessarily want it to be taken as a serious threat. My hope is that we will not stumble on anything over the next few years that will enable it to become one. We'll see.

Sim: Yes. What areas do you see as the most neglected in biosecurity, and what are you most concerned about?

Kevin: This also comes really close to [being unanswerable due to the] infohazards problem. I really can't answer that question. But one concern is that a lot of the actors in the current biosecurity space did their PhDs too long ago. They're too far from the technical cutting edge, and don't have a good overall picture of what's going on. Due to infohazards, that can't be rectified.

I would merely note that we are never going to operate with a bird's-eye view of the landscape. No one is going to have that [visibility]. It doesn't matter whom you're networking with. Even the folks at Open Philanthropy who are working on it — with all of the people whom they talk to — will never have a truly bird's-eye view, not even if those people were disclosing the actual risks. We're always going to be operating with an information deficit when it comes to bio infohazards.

That means you have to adjust your priors; you must assume that there may well be a great deal that you don't know, and that the situation is probably worse than you think because of the infohazard problem.

I don't have a good solution for that, or suggestions as to what to do. I am concerned overall about the possibility of dual use. If [the potential for infohazards] isn’t on most scientists’ radar, that also is a blessing, because most scientists would never even dream that it might potentially be misused.

This comes up in calls with DARPA [the Defense Advanced Research Projects Agency]. Occasionally, the program manager will have to say, “This thing you proposed has some downsides,” and the scientist responds, “What?” That's when the program manager might notice, depending on their level of technical expertise.

On the flip side, if it requires that much technical expertise, then — at least for now — we probably don't have to worry about its misuse, because most of the potential bad actors are not [technically] good enough. I am most worried about scientists stumbling upon something and then, in a well-meaning but naive attempt to warn the world, spreading the infohazard and making [the possibility of doing harm] credible.

Once you describe it publicly, if it's interesting, then journalists will write about it. We already know this. I'm not going to point to specific episodes, but anyone in the field knows what I'm talking about. Once journalists start writing about it, then there's an incentive for scientists to start studying it and determine whether it's real. And unfortunately, the downside of biosecurity research is that there's always the temptation to point to particular risks in order to justify your existence. Unless you have some other means by which to be taken seriously, that can be a pretty strong temptation.

I'm somewhat frustrated by how the biosecurity community, in my opinion, lacks [an adequate appreciation for how damaging infohazards can be]. I think it's partly because the world doesn't really take it very seriously, and it's too early to tell whether COVID will change that. It’s hard to have discussions about this topic via Zoom, or really at all in the [current climate].

We'll see how things change in that regard. My hope is that the scientific community will be a bit more concerned about infohazards across the board. But I still expect scientists to try to discover exactly how a virus spreads in humans, and I'm not sure we're going to be able to dissuade enough people from doing that in time. That is a generational phenomenon, though, so things will get better.

Sim: It seems that’s why you’re suggesting we create an independent body, or some mechanism by which scientists can flag this, rather than publishing [their findings].

Kevin: Yes, and it's to incentivize them to do the right thing in that regard. When it comes to, say, methods of stitching [DNA sequences together] in order to do virology more effectively, what you don't want to do is teach all of the virologists how (along with everybody else). You instead want to keep that knowledge in the hands of a few specialists in synthetic genomics. Have them create centers that can then supply custom orders from any virology lab in the world, as long, of course, as it has the relevant institutional permission and [meets the appropriate standards for biosafety]. That way, you can accelerate the relevant research without disclosing exactly how to make these kinds of agents and making that information more accessible to everyone.

Sim: To switch topics, a lot of EAs care about animal welfare. What are your views on using biotech to alleviate wild animal suffering?

Kevin: In many ways, this is the secondary focus of my lab. I think [that intervention has] tremendous long-term potential, but to be very blunt, I'm not sure it’s something requiring much EA advocacy. The topic of using biotech to deliberately edit wild species, especially for the long term, and especially for reasons that have nothing whatsoever to do with humans, is a very sensitive subject. People are wary of even the idea of deliberately tweaking animals, let alone wild animals in the areas in which they live, when we don't understand the ecosystems that well. I've observed there is quite a risk of backlash; it’s caught up in the anti-GMO movement.

Target Malaria deals with this all of the time. I think it needs to be handled exceptionally carefully. What we’ve found is that people are most supportive if [biotech solutions are] community-led from the start — that is, the people living in the affected areas need to tell the scientists what they want done. If it's done that way, then it's much more difficult for outsiders, particularly anti-biotech activists and the like, to essentially throw a wrench in the works and [drum up] outrage.

It also helps if the work is done by a nonprofit. That helps a lot, because if the initiative is led by the relevant communities affected by the problem and done by a nonprofit, then it's hard to get traction fighting it; doing so [smacks of] outsiders coming in and telling people that they don't know what's best for their own community. And again, we don't understand ecosystems very well, and it's the people who live there who are going to bear the brunt of any mistakes. It really needs to be in their hands from the get-go.

I think EAs can essentially respect that, advocate for community control, and possibly help raise awareness of issues by talking to the relevant community. But I think we need to be exceptionally cautious about how we approach it. There is a real risk of blowback.

Sim: Yes, and I think you've traveled and spoken to such communities. What's the most positive experience you've had, or the main lessons, in doing that?

Kevin: The most heartening — and heart-rending — thing happened after talking to a number of Maori in Otago, New Zealand, and apologizing for how I had published a paper without thinking to run it by them at the revision stage. It could have harmed their political interests, and I told them that I completely understood why they didn't want to work with us again after that.

A woman stood up and said, “I have never heard a scientist come and apologize to our faces for doing something wrong.” That meant a tremendous amount to them, but on the other hand, it's heart-rending if that was really the case, or at least her perception of it.

I'm not sure if that's true of the community as a whole, but she had never seen it before. You can gain a lot [of trust] if you are willing to go and talk to people, explain what their options are, and say, “I don't know what's best. You live here. It's your environment. It has to be your call. If you don't think this is a good idea, just tell us and we'll walk away.” People really respond positively to that.

That doesn't mean you need to tell them exactly what you think is possible from the get-go — you can only do that in areas that are fairly free of infohazards. But if you're thinking of doing something in the environment, I should hope it's not particularly hazardous.

Sim: Right. And switching topics again, what's your opinion on biohackers’ role in mitigating the risks of, or responding to, unmet clinical needs? And I guess the flip side of that question is how do others view that bio community?

Kevin: I prefer to call it “community bio,” which is a bit more reflective of what it actually is: a community of folks working with bio who usually have some ties to formal biotech through academia or industry — as well as a lot of people who are interested in playing with bio and learning.

In general, they tend to have a much better ethos with respect to caution than does academia. Now obviously, there are a few very high-profile exceptions to that, which tend to get all of the press. But the community as a whole is much more concerned with doing the right thing, and is much more aware that things could go wrong than the typical academic is — by a long shot.

Sim: That's surprising.

Kevin: Right now, it's worth pointing out that “community bio” is not doing anything that worries me. They're not playing with those kinds of agents at all, in part because a lot of leaders have said, “We're just not going to go there. That's not an area that we need to play with, because it raises too many potential hazards.” That's the right attitude. I would love for it to rub off on academia.

Sim: Well, that was an unexpectedly positive answer to that question.

Kevin: It might change in 10 years, but right now, [my assessment of that community is] very positive.

Sim: Great. I think we have time for one more question: What’s one message that people who really care about this topic can take away about what to avoid if they're trying to do the right thing?

Kevin: Here's the hardest one: Please do not try to come up with new GCR-level threats. Don't try to think about how it can be done. Don't try to red-team it. If you can't help yourself and your mind just goes there when you hear a talk about the topic, don't spread it around. Don't type it into your computer or search for it online, unless you're extremely familiar with information security, and really, don’t even do it then.

If you see something that concerns you, then you probably already know the folks in the EA community whom you need to reach out to. Obviously, do it in a secure manner. “Secure” means that if you're really worried about something, have a face-to-face conversation and nothing else. Don't allude to the details, ever. Be cautious.

That said, the flip side is we really need to persuade more people that not all knowledge is worth having. That's the fundamental challenge. For example, if you talk to folks who do gain-of-function research on making viruses more transmissible and lethal in humans, they earnestly and truly believe that their work will, on net, save lives, and that if it stops, more people are going to die. That’s partly because they don't believe (and can’t even imagine) that their work will ever be misused; their minds think in terms of doing good, not in terms of breaking something.

If they can conceive of the possibility, they might say, “Okay, it could be misused, but people wouldn't do that.” Or they think, “If we just understand it well enough, we'll be able to come up with a countermeasure. It'll be okay.”

But how is that HIV vaccine coming? It's abundantly clear that there are some things that we just can't come up with a defense to in time, and that's not something that they see. I don't think that it’s a rational response to them, because if you're in science, it's because you love understanding, and you've devoted your life to satisfying your curiosity. So the very notion that maybe we shouldn't go there — at all, possibly ever — is completely antithetical to the very mindset that it takes to do science.

I honestly don't know if I would be saying any of this if it weren't for the CRISPR-based gene drive, which helped me realize, “Wow, we went from not even imagining that something like this was possible, to something that can spread on its own very extensively in the wild.” That's on me, morally speaking. We're lucky that it’s defense-biased. What if the next one isn't? If it hadn't been for that experience, I don't think I would be where I am today, or as concerned as I am.

How do we convince people without saying anything specific? Counterfactuals. It's not too hard to puncture the naive belief that all knowledge is worth having using any number of examples of counterfactual technologies. So do that.

Also, you can always go a meta-level higher. There are very few infohazards for which you can't go up by a few levels of abstraction to explain the situation, and most scientists are people who love abstraction. Try to persuade people. You can do that by becoming a scientist yourself and working on the inside, or by changing the incentives that drive scientists. At the end of the day, that's what we're going to have to do [change incentives]. That's what people respond to.

But how can we get in a position to do that — and do it in time? Ten or even 20 years is not that long in science, given the cycles of careers and generational change.

That said, any marginal increase in the share of scientists who are more cautious about these things could possibly have a nonlinear effect [and make a difference]. You often mention to some of your colleagues and friends what you're thinking about doing. If they're a little bit more cautious and concerned about the consequences, they might mention that and let you know that perhaps you're going in a direction that is best not explored. They might even persuade you not to do it. If that happens enough, then I think we'll be in pretty good shape a few decades from now. If it doesn't, then we might have a major problem to deal with before that. Let's hope [for the former].

Sim: Incredible. Thanks for that very powerful message to end on. That concludes the Q&A part of this session. Thank you so much, Professor Esvelt, for your time. [...]

Kevin: Thank you.