Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required.

Listen to the AI Safety Newsletter for free on Spotify or Apple Podcasts.

US Launches Antitrust Investigations

The U.S. government has launched antitrust investigations into Nvidia, OpenAI, and Microsoft. The U.S. Department of Justice (DOJ) and Federal Trade Commission (FTC) have agreed to investigate potential antitrust violations by the three companies, the New York Times reported. The DOJ will lead the investigation into Nvidia while the FTC will focus on OpenAI and Microsoft.

Antitrust investigations are conducted by government agencies to determine whether companies are engaging in anticompetitive practices that may harm consumers and stifle competition.

Nvidia investigated for GPU dominance. The New York Times reports that concerns have been raised about Nvidia's dominance in the GPU market, “including how the company’s software locks customers into using its chips, as well as how Nvidia distributes those chips to customers.” In the last week, Nvidia has become one of the three largest companies in the world by market capitalization, joining the ranks of Apple and Microsoft, with all three companies having nearly equal valuations.

Microsoft investigated for close ties to AI startups. The investigation into Microsoft and OpenAI will likely focus on their close partnership and its potential for anticompetitive behavior. Microsoft's $13 billion investment in OpenAI, and the integration of OpenAI's technology into Microsoft's products, may raise concerns that the partnership creates barriers to entry for competitors in the AI market. In addition, the FTC is looking into Microsoft’s deal with Inflection AI, in which Microsoft hired the company’s co-founder and almost all of its employees and paid $650 million in licensing fees.

In January, the FTC launched a broad inquiry into strategic partnerships between tech giants and AI startups, including Microsoft's investment in OpenAI and Google's and Amazon's investments in Anthropic. Additionally, in July 2023, the FTC opened an investigation into whether OpenAI had harmed consumers through its collection of data.

These developments indicate escalating regulatory scrutiny of AI technology. This heightened attention reflects the rapid advancement and widespread adoption of AI, which presents both significant opportunities and potential risks. Lina Khan, the chair of the FTC, has previously expressed her desire to "spot potential problems at the inception rather than years and years and years later" when it comes to regulating AI. In recent years, the U.S. government has already investigated and sued tech giants like Google, Apple, Amazon, and Meta for alleged antitrust violations.

Recent Criticisms of OpenAI and Anthropic

OpenAI employees call for more whistleblower protections from AI companies. An open letter published last week, authored by a group including nine current and former OpenAI employees, voices concerns that AI companies may conceal the risks of their AI systems from governments and the public. The letter calls on AI companies to allow current and former employees to share risk-related criticisms without fear of retaliation.

A New York Times article links the letter to OpenAI’s past measures to prevent workers from criticizing the company. For example, departing employees were asked to sign restrictive nondisparagement agreements, which prevented them from saying anything negative about the company without risking the loss of their vested equity, typically worth millions. After these practices recently came to light, OpenAI promised to abolish them.

Helen Toner, former OpenAI board member, gives reasons for the board’s attempt to oust Sam Altman. The board had previously said only that Altman was “not consistently candid” with it, and little was publicly known about what this meant. In an interview, Toner gives three reasons behind the board’s decision: Altman’s “failure to tell the board that he owned the OpenAI Startup Fund,” his giving inaccurate information about the company’s safety processes, and his attempt to remove Toner from the board after she published “a research paper that angered him.”

Anthropic’s safety commitments come under closer scrutiny. Several recent moves by Anthropic raise questions about its support for more safety-oriented policies:

- Anthropic’s policy chief, Jack Clark, published a newsletter apparently advocating against key AI regulation proposals.

- This was followed by the announcement that Anthropic had joined TechNet, a lobbying group that formally opposes SB 1047, California’s proposal to regulate AI.

- Both were preceded by Anthropic’s release of Claude 3, at the time “the most powerful publicly available language model,” without first sharing the model with the UK AI Safety Institute for pre-deployment testing, a step Anthropic had seemingly committed to last November.

- Recent research by Anthropic has been accused of safety-washing. For example, some argue that one recent Anthropic paper overstates the company’s contribution to existing interpretability results.

- Finally, some worry that Anthropic’s bespoke corporate governance structure cannot hold the company accountable to safe practices. While the Long Term Benefit Trust, announced last September, is meant to align the company with safety over profit, there is still limited public information about the Trust and its powers.

Situational Awareness

Last week, former OpenAI researcher Leopold Aschenbrenner published Situational Awareness, an essay in which he lays out his predictions for the next decade of AI progress, as well as their strategic implications.

Below, we summarize and respond to some of the essay’s main arguments—in particular, that:

- AGI is a few years away and could automate AI development.

- The US should safeguard and expand its AGI lead.

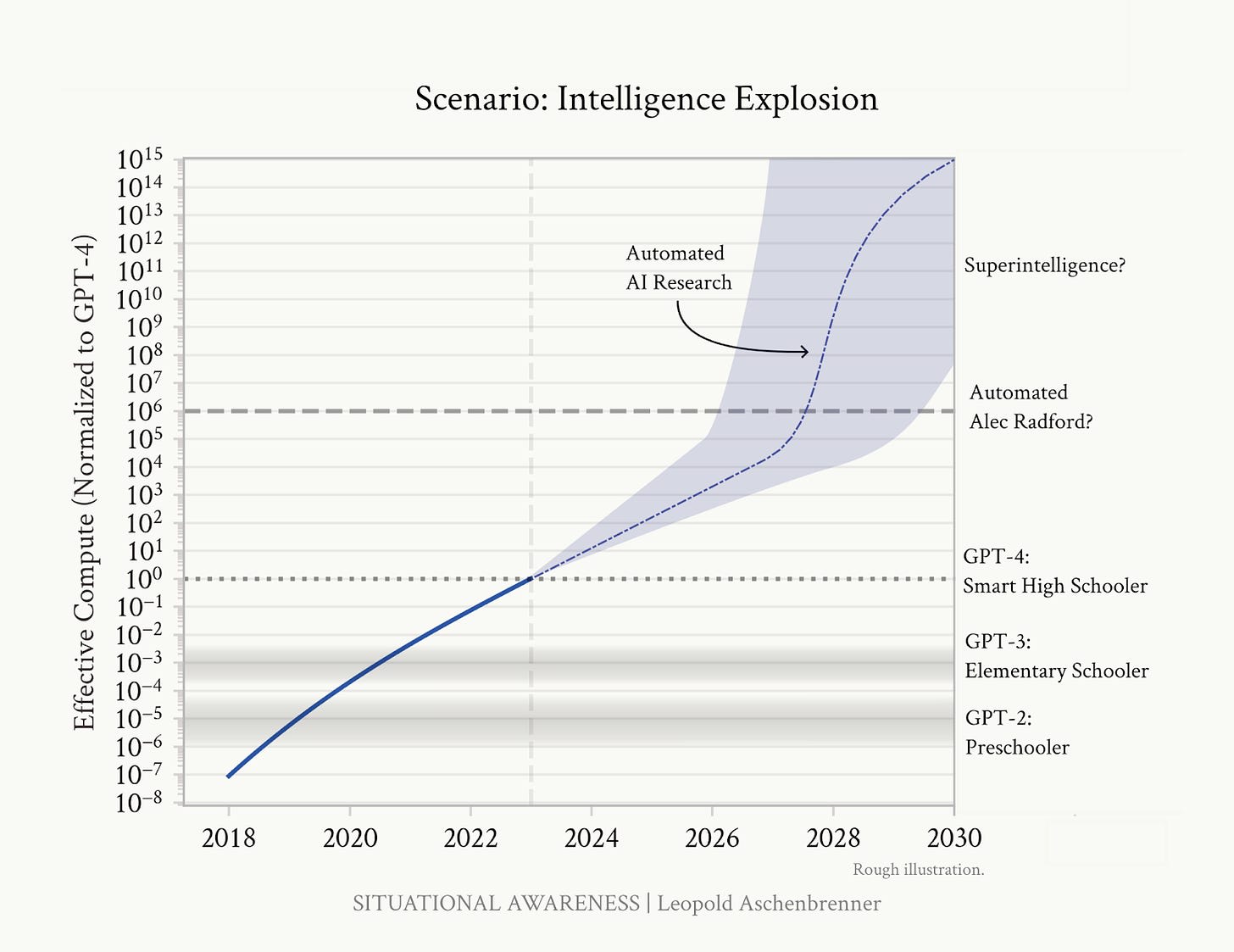

AGI by 2027 is “strikingly plausible.” The essay argues that the rapid progress from GPT-2 to GPT-4 suggests AGI could be achieved by 2027. The prediction extrapolates trends in computing power, algorithmic efficiency, and "unhobbling" gains, such as agency and tool use.

However, one source of uncertainty in this prediction is the “data wall”: according to current scaling laws, frontier models will soon be bottlenecked by access to high-quality training data. AGI may only be achievable in the near term if AI labs find a way to extract more capability gains from less data. The essay argues that labs will likely solve this problem.

AGI could accelerate AI development. The essay argues that once AGI is achieved, the transition to superintelligence will follow swiftly, because AGIs could automate and accelerate AI research itself, leading to an “intelligence explosion.”

Superintelligence could provide a decisive economic and military advantage. According to the essay, superintelligence would radically expand a nation’s economic and military power, so nations will likely race to develop it. Right now, the US has a clear lead. However, if another nation, in particular China, catches up, then racing dynamics could lead nations to deprioritize safety in favor of speed.

The US should safeguard its lead by securing AGI research. One way that an adversary could catch up to the US is by stealing the results of AGI research and development, such as the model weights of an AGI system or a key algorithmic insight on the path to AGI. The essay argues that this is the default outcome given the current security practices of leading AI labs. The US should begin by securing frontier model weights, for example by restricting them to air-gapped data centers.

The US could facilitate international governance of superintelligence. The US could coordinate with democratic allies to develop and govern superintelligence. This coalition could disincentivize racing dynamics and proliferation by sharing the peaceful benefits of superintelligence with other nations in exchange for refraining from developing superintelligence themselves.

Accelerating AI development will not prevent an arms race. The essay notes that a race to AGI would likely lead participating nations to deprioritize safety in favor of speed. It recommends that the US prevent a race by securing and expanding its AGI lead, which could potentially be done with compute governance and on-chip mechanisms. However, the essay also acknowledges that the US is unlikely to preserve its lead if China “wakes up” to AGI. If so, US efforts to accelerate AI capabilities development would likely exacerbate, rather than prevent, an arms race.

Links

- OpenAI appointed former NSA director Paul M. Nakasone to its board of directors.

- RAND’s Technology and Security Policy Center (TASP) is seeking experienced cloud engineers and research engineers to join its team working at the forefront of evaluating AI systems.

- The Center for a New American Security published “Catalyzing Crisis,” a primer on artificial intelligence, catastrophes, and national security.

- A new series from the Institute for Progress explores how to build and secure US AI infrastructure.

- Geoffrey Hinton delivers a warning about existential risk from AI in a recent interview.

- In another recent interview, Trump discusses AI risks.

- Elon Musk withdrew his lawsuit against OpenAI and Sam Altman.

- House Democrats introduced the EPIC Act to establish a Foundation for Standards and Metrology, which would support AI work at NIST.

- A new public competition is offering more than $1 million in prizes to beat the ARC-AGI benchmark.

- Nvidia released the Nemotron-4 340B model family.

- Stanford professor Percy Liang argues that we should call models like Llama 3 “open-weight,” not “open-source.”

See also: CAIS website, CAIS X account, our ML Safety benchmark competition, our new course, and our feedback form


Subscribe here to receive future versions.