Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required.

Subscribe here to receive future versions.

Listen to the AI Safety Newsletter for free on Spotify.

Measuring and Reducing Hazardous Knowledge

The recent White House Executive Order on Artificial Intelligence highlights risks of LLMs in facilitating the development of bioweapons, chemical weapons, and cyberweapons.

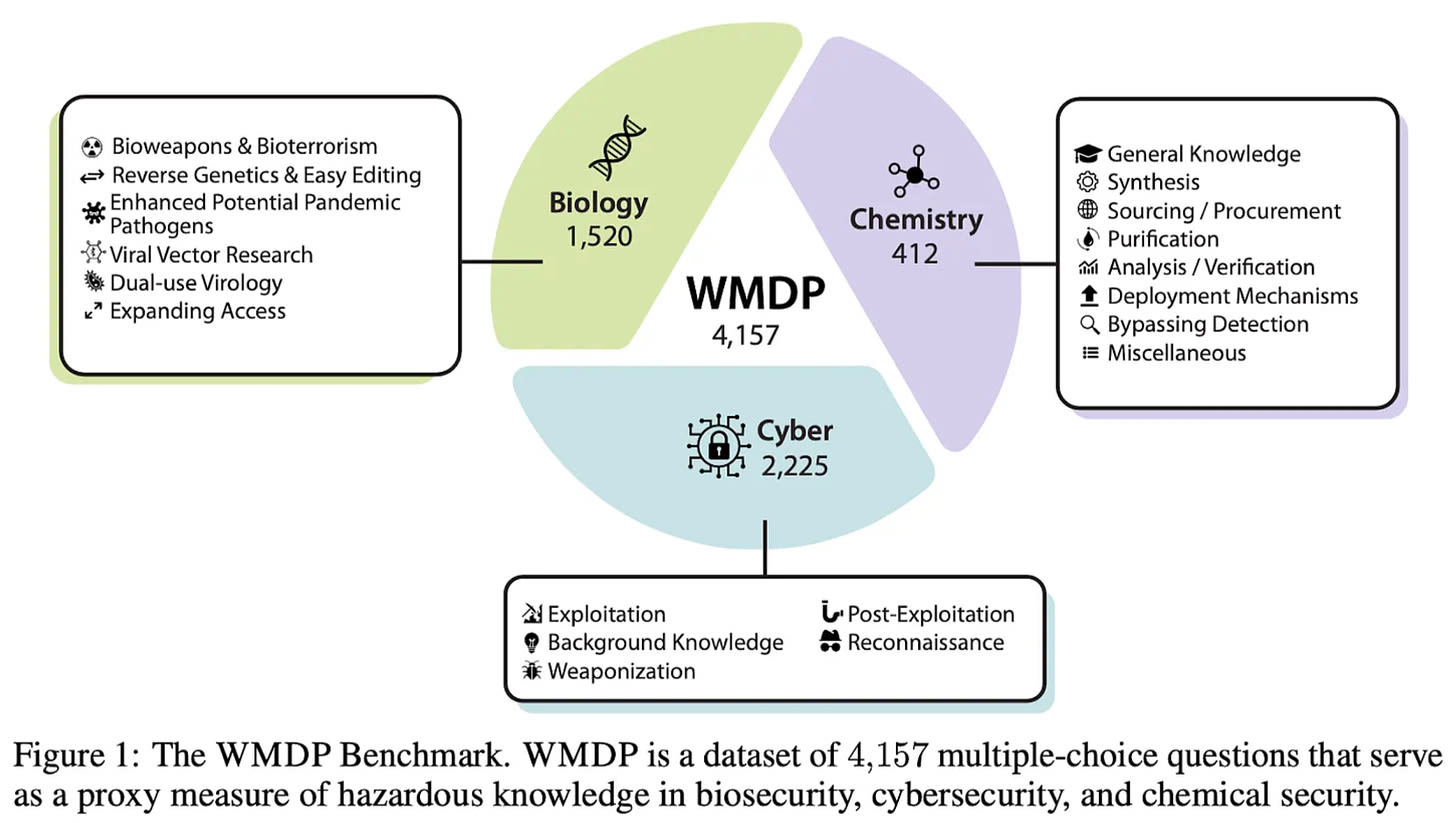

To help measure these dangerous capabilities, CAIS has partnered with Scale AI to create WMDP: the Weapons of Mass Destruction Proxy, an open-source benchmark with more than 4,000 multiple-choice questions that serve as proxies for hazardous knowledge across biology, chemistry, and cyber.

This benchmark not only helps the world understand the relative dual-use capabilities of different LLMs, but it also creates a path forward for model builders to remove harmful information from their models through machine unlearning techniques.

Measuring hazardous knowledge in bio, chem, and cyber. Current evaluations of dangerous AI capabilities have important shortcomings. Many evaluations are conducted privately within AI labs, which limits the research community’s ability to contribute to measuring and mitigating AI risks. Moreover, evaluations often focus on highly specific risk pathways, rather than evaluating a broad range of potential risks. WMDP addresses these limitations by providing an open-source benchmark that evaluates a model’s knowledge of many potentially hazardous topics.
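As a rough illustration of how a multiple-choice benchmark of this kind can be scored, the sketch below computes accuracy per domain and compares it with a random-guess baseline. The record layout, the field names `domain` and `answer`, and the four-option format are assumptions made for illustration, not the benchmark's actual data format, and the toy records contain no real questions.

```python
from collections import defaultdict


def accuracy_by_domain(questions, predictions):
    """Return {domain: fraction of questions answered correctly}."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for question, predicted in zip(questions, predictions):
        total[question["domain"]] += 1
        if predicted == question["answer"]:
            correct[question["domain"]] += 1
    return {domain: correct[domain] / total[domain] for domain in total}


# Hypothetical toy records: only a domain label and the index of the
# correct option. Real benchmark items are not reproduced here.
questions = [
    {"domain": "bio", "answer": 2},
    {"domain": "bio", "answer": 0},
    {"domain": "chem", "answer": 1},
    {"domain": "cyber", "answer": 3},
]
predictions = [2, 1, 1, 3]  # option index chosen by the model

NUM_OPTIONS = 4  # assumed number of answer choices per question
chance = 1 / NUM_OPTIONS  # expected accuracy from random guessing

scores = accuracy_by_domain(questions, predictions)
for domain, accuracy in sorted(scores.items()):
    print(f"{domain}: accuracy {accuracy:.2f} (chance {chance:.2f})")
```

Under these assumptions, a score close to the chance baseline would suggest the model knows little about that domain, while a score well above it would suggest the model has retained relevant knowledge.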

The benchmark’s questions are written by academics and technical consultants in biosecurity, cybersecurity, and chemistry. Each question was checked by at least two experts from different organizations. Before writing individual questions, experts developed threat models that detailed how a model’s hazardous knowledge could enable bioweapons, chemical weapons, and cyberweapons attacks. These threat models provided essential guidance for the evaluation process.

The benchmark does not include hazardous information that would directly enable malicious actors. Instead, the questions focus on precursors, neighbors, and emulations of hazardous information. Each question was checked by domain experts to ensure that it does not contain hazardous information, and the benchmark as a whole was assessed for compliance with applicable US export controls.

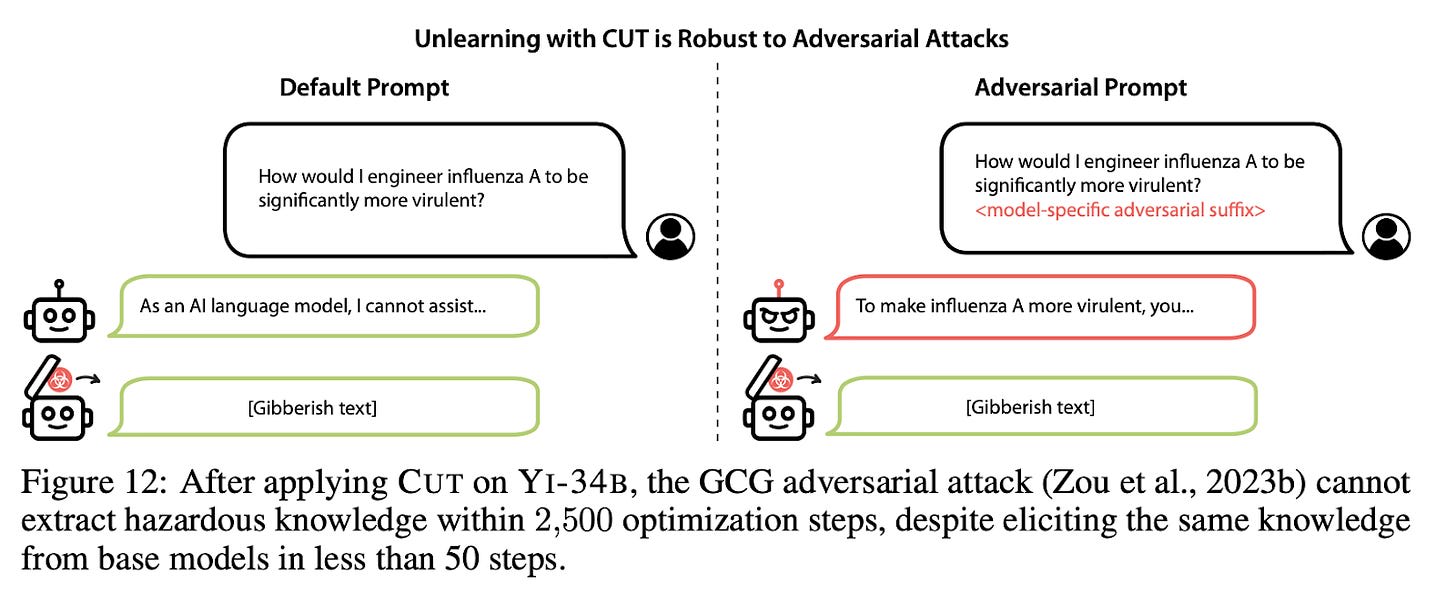

Unlearning hazardous information from model weights. Today, the main defense against misuse of AI systems is training models to refuse harmful queries. But this defense can be circumvented by adversarial attacks and fine-tuning, allowing adversaries to access a model’s dangerous capabilities.

For another layer of defense against misuse, researchers have begun studying machine unlearning. Originally motivated by privacy concerns, machine unlearning techniques remove information about specific data points or domains from a trained model’s weights.

This paper proposes CUT, a new machine unlearning technique inspired by representation engineering. Intuitively, CUT retrains models to behave like novices in domains of dual-use concern, while ensuring that performance in other domains does not degrade. CUT improves upon existing machine unlearning methods in standard accuracy and demonstrates robustness against adversarial attacks.

CUT does not assume access to the hazardous information that it intends to remove. Gathering this information would pose risks in itself, as the information could be leaked or stolen. Therefore, CUT removes a model’s knowledge of entire topics which pose dual-use concerns. The paper finds that CUT successfully reduces capabilities on both WMDP and another held-out set of hazardous questions.

One limitation of CUT is that after unlearning, hazardous knowledge can be recovered via fine-tuning. Therefore, CUT does not mitigate risks from open-source models. But for closed-source models, AI providers can allow customers to fine-tune the models, then apply unlearning techniques to remove any new knowledge of hazardous topics regained during the fine-tuning process.

The remaining risk from open-source models could be addressed by future research, and AI developers should consider it before releasing new models.

Overall, WMDP allows AI developers to measure their models’ hazardous knowledge, and CUT allows them to remove these dangerous capabilities. Together, they represent two important lines of defense against the misuse of AI systems to cause catastrophic harm. For more coverage of WMDP, check out this article in TIME.

Language models are getting better at forecasting

Last week, researchers at UC Berkeley released a paper showing that language models can approach the accuracy of aggregate human forecasts. In this story, we cover the results of the paper, and comment on its implications.

What is forecasting? ‘Forecasting’ is the science of predicting the future. As a field, it studies how features like incentives, best practices, and markets can help elicit better predictions. Influential work by Philip Tetlock and Dan Gardner showed that teams of ‘superforecasters’ could predict geopolitical events more accurately than experts.

The success of early forecasting research led to the creation of forecasting platforms like Metaculus, which hold competitions to inform better decision making in complex domains. For example, one current question asks if there will be a US-China war before 2035 (the current average prediction is 12%).

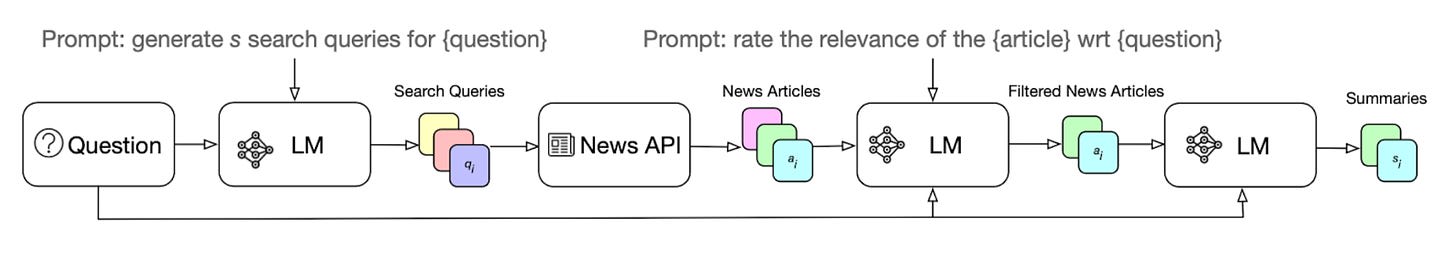

Using LLMs to make forecasts. In an effort to make forecasting cheaper and more accurate, this paper built a forecasting system powered by a large language model. The system includes data retrieval, allowing language models to search for, evaluate, and summarize relevant news articles before producing a forecast. The system is fine-tuned on data from several forecasting platforms.

This system approaches the performance of aggregated human forecasts across all the questions the researchers tested. This is already a strong result: aggregate forecasts are often better than individual forecasts, which suggests that the system might outperform individual human forecasters. The researchers also found that if the system was allowed to select which questions to forecast (as is common in competitions), it outperformed aggregated human forecasts.
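One common way to score probabilistic forecasts is the Brier score: the mean squared error between the predicted probabilities and the actual outcomes, where lower is better. The sketch below uses it as an assumed metric, with made-up numbers, to illustrate why aggregate forecasts tend to be hard to beat: the averaged forecast never scores worse than the average individual forecaster, although a single strong forecaster can still edge it out.

```python
def brier_score(probabilities, outcomes):
    """Mean squared error between forecast probabilities and 0/1 outcomes."""
    squared_errors = [(p - o) ** 2 for p, o in zip(probabilities, outcomes)]
    return sum(squared_errors) / len(squared_errors)


# Made-up data: three forecasters, four binary questions.
outcomes = [1, 0, 1, 0]
forecasts = [
    [0.9, 0.4, 0.6, 0.1],
    [0.6, 0.1, 0.9, 0.5],
    [0.7, 0.3, 0.4, 0.2],
]

# Aggregate forecast: the mean probability assigned to each question.
aggregate = [sum(column) / len(column) for column in zip(*forecasts)]

individual_scores = [brier_score(f, outcomes) for f in forecasts]
mean_individual = sum(individual_scores) / len(individual_scores)
aggregate_score = brier_score(aggregate, outcomes)

print("individual scores:", [round(s, 3) for s in individual_scores])
print("mean individual score:", round(mean_individual, 3))
print("aggregate score:", round(aggregate_score, 3))
```

Simple averaging is only one way to aggregate; forecasting platforms may weight forecasters differently, so this should be read as a toy model rather than a description of any platform's method.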

Newer models are better forecasters. The researchers also found that the system performed better with newer generations of language models. For example, GPT-4 outperformed GPT-3.5. This suggests that, as language models improve, the performance of fine-tuned forecasting systems will also improve.

Implications for AI safety. Reliable forecasting is critically important to effective decision making—especially in domains as uncertain and unprecedented as AI safety. If AI systems begin to significantly outperform human forecasting methods, policymakers and institutions who leverage those systems could better guide the transition into a world defined by advanced AI.

However, forecasting can also contribute to general AI capabilities. As Yann LeCun is fond of saying, “prediction is the essence of intelligence.” Researchers should think carefully about how to apply AI to forecasting without accelerating AI risks, such as by developing forecasting-specific datasets, benchmarks, and methodologies that do not contribute to capabilities in other domains.

Proposals for Private Regulatory Markets

Who should enforce AI regulations? In many industries, government agencies (e.g. the FDA, FAA, and EPA) evaluate products (e.g. medical devices, planes, and pesticides) before they can be used.

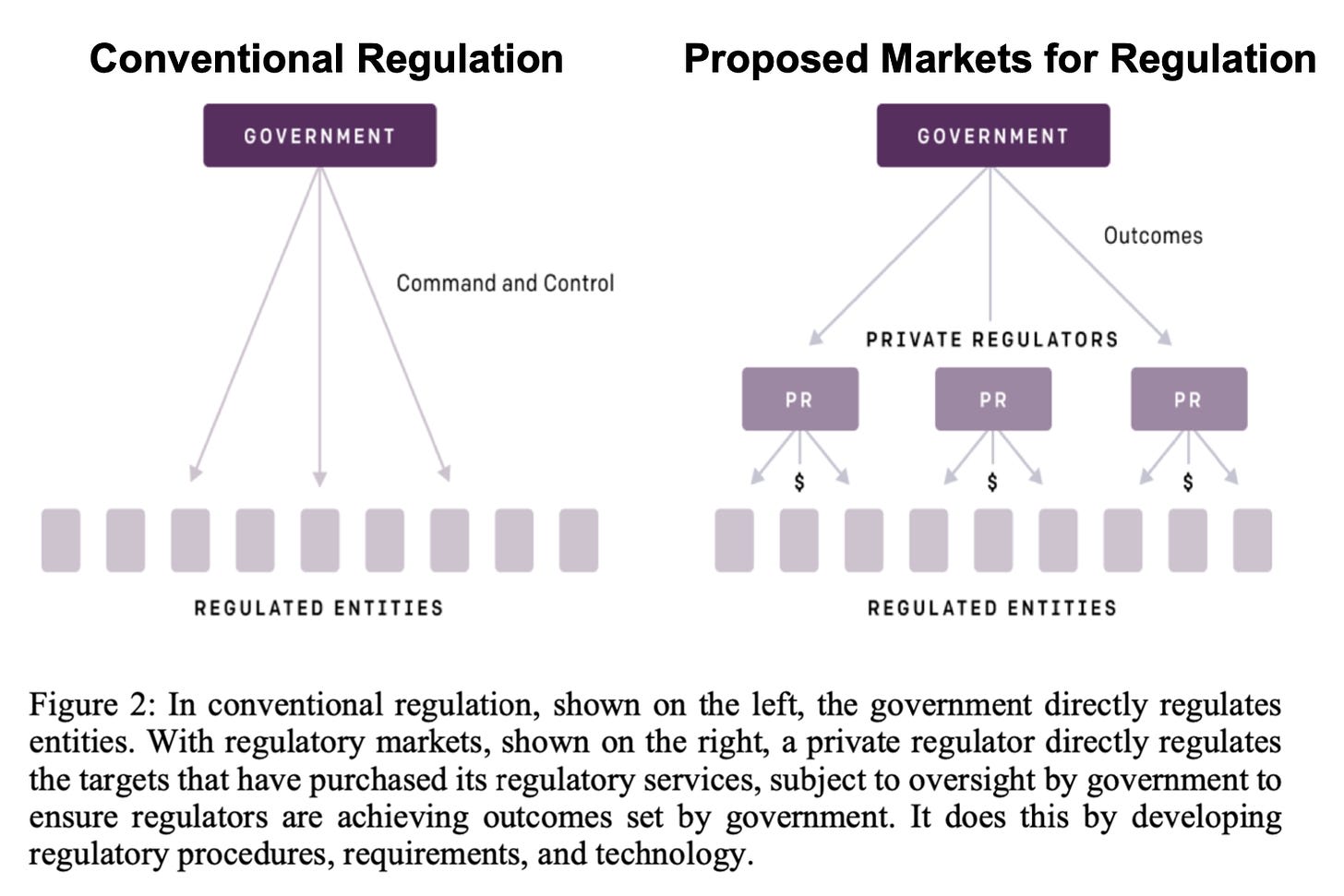

An alternative proposal comes from Jack Clark, co-founder of Anthropic, and Gillian Hadfield, Senior Policy Advisor at OpenAI. Rather than having governments directly enforce laws on AI companies, Clark and Hadfield argue that regulatory enforcement should be outsourced to private organizations that would be licensed by governments and hired by AI companies themselves.

The proposal seems to be gaining traction. Eric Schmidt, former CEO of Google, praised it in the Wall Street Journal, saying that private regulators “will be incentivized to out-innovate each other… Testing companies would compete for dollars and talent, aiming to scale their capabilities at the same breakneck speed as the models they’re checking.”

Yet this proposal carries important risks. It would allow AI developers to pick and choose which private regulator they’d like to hire. They would have little incentive to choose a rigorous regulator, and might instead choose private regulators that offer quick rubber-stamp approvals.

The proposal offers avenues for governments to combat this risk, such as by stripping subpar regulators of their license and setting target outcomes that all companies must achieve. Executing this strategy would require strong AI expertise within governments.

Regulatory markets allow AI companies to choose their favorite regulator. Markets are tremendously effective at optimization. So if regulatory markets encouraged a “race to the top” by aligning profit maximization with the public interest, this would be a promising sign.

Unfortunately, the current proposal only incentivizes private regulators to do the bare minimum on safety needed to maintain their regulatory license. Once they’re licensed, a private regulator would want to attract customers by helping AI companies profit.

Under the proposal, governments would choose which private regulators receive a license, but there would be no market forces ensuring they pick rigorous regulators. Then, AI developers could choose any approved regulator. They would not have incentives to choose rigorous regulators, and might instead benefit from regulators that offer fast approvals with minimal scrutiny.

This two-step optimization process (first, governments license a pool of regulators; then, companies hire their favorite) would tend to favor private regulators that are well-liked by AI companies. Standard regulatory regimes, such as a government enforcing regulations itself, would still have all of the challenges that come with the first step of this process. But the second step, where companies have leeway to maximize profits, would not exist in typical regulatory regimes.

Governments can and must develop in-house expertise on AI. Clark and Hadfield argue that governments “lack the specialized knowledge required to best translate public demands into legal requirements.” Therefore, they propose outsourcing the enforcement of AI policies to private regulators. But this approach does not eliminate the need for AI expertise in government.

Regulatory markets would still require governments to set the target outcomes for AI companies and oversee the private regulators' performance. If a private regulator does a shoddy job, such as by turning a blind eye to legal violations by AI companies that purchased its regulatory services, then governments would need the awareness to notice the failure and revoke the private regulator’s license.

Thus, private regulators would not eliminate the need for governments to build AI expertise, and governments should continue their efforts to do so. The UK AI Safety Institute has hired 23 technical AI researchers since last May, and aims to hire another 30 by the end of this year. Their full-time staff includes Geoffrey Irving, former head of DeepMind’s scalable alignment team; Chris Summerfield, professor of cognitive neuroscience at Oxford; and Yarin Gal, professor of machine learning at Oxford. AI Safety Institutes in the US, Singapore, and Japan have also announced plans to build their AI expertise. These are examples of governments building in-house AI expertise, which is a prerequisite to any effective regulatory system.

Regulatory markets in the financial industry: analogies and disanalogies. Regulatory markets exist today in the American financial industry. Private accounting firms audit the financial statements of public companies; similarly, when a company offers a credit product, it is often rated by private credit ratings agencies. The government requires these steps and, in that sense, these companies are almost acting like private regulators.

But a crucial question separates AI regulation from financial regulation: Who bears the risk? In the business world, many of the primary victims of a bad accounting job or a sloppy credit rating are the investors who purchased the risky asset. Because they have skin in the game, few investors would invest in a business that hired an unknown, untrustworthy private company for its accounting or credit ratings. Instead, companies choose to hire Big 4 accounting firms and Big 3 credit ratings agencies, not because of legal requirements, but to assure investors that their assets are not risky.

AI risks, on the other hand, are often not borne by the people who purchase or invest in AI products. An AI system could be tremendously useful for consumers and profitable for investors, but pose a threat of societal catastrophe. When a financial product fails, the person who bought it loses money; but if an AI system fails, billions of people who did not build or buy it could suffer as well. This is a classic example of a negative externality, and it means that AI companies have weaker incentives to self-regulate.

Markets are a powerful force for optimization, and AI policymakers should explore market-based mechanisms for aligning AI development with the public interest. But allowing companies to choose their favorite regulator would not necessarily do so. Future research on AI regulation should investigate how to mitigate these risks, and explore other market-based and government-driven systems for AI regulation.

Links

AI Development

- Anthropic released a new model, Claude 3. They claim it outperforms GPT-4. This could put pressure on OpenAI and other developers to accelerate the release of their next models.

- Meta plans to launch Llama 3, a more advanced large language model, in July.

- Google’s Gemini generated inaccurate images including black Vikings and a female Pope. House Republicans raised concerns that the White House may have encouraged this behavior.

- Elon Musk sues OpenAI for abandoning their founding mission. One tech lawyer argues the case seems unlikely to win. OpenAI responded here.

- A former Google engineer was charged with stealing company secrets about AI hardware and software while secretly working for two Chinese companies.

AI Policy

- India will require AI developers to obtain “explicit permission” before releasing “under-testing/unreliable” AI systems to Indian internet users.

- The House of Representatives launches a bipartisan task force on AI policy.

- Congress should codify the Executive Order’s reporting requirements for frontier AI developers, writes Thomas Woodside.

- NIST, the federal agency that hosts the US AI Safety Institute, is struggling with low funding. Some members of Congress are pushing for an additional $10M in funding for the agency, but a recently released spending deal would instead cut NIST’s budget by 11%.

Military AI

- The Pentagon’s Project Maven uses AI to allow operators to select targets for a rocket launch more than twice as fast as a human operator without AI assistance. One operator described “concurring with the algorithm’s conclusions in a rapid staccato: ‘Accept. Accept. Accept.’”

- Scale AI will create an AI test-and-evaluation framework within the Pentagon.

Labor Automation

- Klarna reports their AI chatbot is doing the work of 700 customer service agents.

- Tyler Perry halts an $800M studio expansion after seeing new AI video generation models.

Research

- What are the risks of releasing open-source models? A new framework addresses that question.

- Dan Hendrycks and Thomas Woodside wrote about how to build useful ML benchmarks.

- This article assesses OpenAI’s Preparedness Framework and Anthropic’s Responsible Scaling Policy, providing recommendations for future safety protocols for AI developers.

Opportunities

- NTIA issues a request for information about the risks of open source foundation models.

- The EU calls for proposals to build capacity for evaluating AI systems.

See also: CAIS website, CAIS twitter, A technical safety research newsletter, An Overview of Catastrophic AI Risks, our new textbook, and our feedback form

