HaydnBelfield

Bio

Haydn has been a Research Associate and Academic Project Manager at the University of Cambridge's Centre for the Study of Existential Risk since Jan 2017.

Posts 34

Comments 226

Helpful post on the upcoming red-teaming event, thanks for putting it together!

Minor quibble - "That does mean I’m left with the slightly odd conclusion that all that’s happened is the Whitehouse has endorsed a community red-teaming event at a conference."

I mean they did also announce $140m, that's pretty good! That's to say, the two other announcements seem pretty promising.

The funding through the NSF to launch 7 new National AI Research Institutes is promising, especially the goal for these to provide public goods such as research into climate, agriculture, energy, public health, education, and cybersecurity. $140m is, for example, more than the UK's Foundation Models Taskforce £100m ($126m).

The final announcement was that in summer 2023 the OMB will be releasing draft policy guidance for the use of AI systems by the US government. This sounds excruciatingly boring, but will be important, as the federal government is such a big buyer/procurer and setter of standards. In the past, this guidance has been "you have to follow NIST standards", which gives those standards a big carrot. The EU AI Act is more stick, but much of the high-risk AI it focusses on is use by governments (education, health, recruitment, police, welfare etc) and they're developing standards too. So there's a fair amount of commonality across the two. To make another invidious UK comparison, the AI white paper says that a year from now, they'll put out a report considering the need for statutory interventions. So we've got neither stick, carrot nor standards...

"Today’s announcements include:

- New investments to power responsible American AI research and development (R&D). The National Science Foundation is announcing $140 million in funding to launch seven new National AI Research Institutes. This investment will bring the total number of Institutes to 25 across the country, and extend the network of organizations involved into nearly every state. These Institutes catalyze collaborative efforts across institutions of higher education, federal agencies, industry, and others to pursue transformative AI advances that are ethical, trustworthy, responsible, and serve the public good. In addition to promoting responsible innovation, these Institutes bolster America’s AI R&D infrastructure and support the development of a diverse AI workforce. The new Institutes announced today will advance AI R&D to drive breakthroughs in critical areas, including climate, agriculture, energy, public health, education, and cybersecurity.

- Public assessments of existing generative AI systems. The Administration is announcing an independent commitment from leading AI developers, including Anthropic, Google, Hugging Face, Microsoft, NVIDIA, OpenAI, and Stability AI, to participate in a public evaluation of AI systems, consistent with responsible disclosure principles—on an evaluation platform developed by Scale AI—at the AI Village at DEFCON 31. This will allow these models to be evaluated thoroughly by thousands of community partners and AI experts to explore how the models align with the principles and practices outlined in the Biden-Harris Administration’s Blueprint for an AI Bill of Rights and AI Risk Management Framework. This independent exercise will provide critical information to researchers and the public about the impacts of these models, and will enable AI companies and developers take steps to fix issues found in those models. Testing of AI models independent of government or the companies that have developed them is an important component in their effective evaluation.

- Policies to ensure the U.S. government is leading by example on mitigating AI risks and harnessing AI opportunities. The Office of Management and Budget (OMB) is announcing that it will be releasing draft policy guidance on the use of AI systems by the U.S. government for public comment. This guidance will establish specific policies for federal departments and agencies to follow in order to ensure their development, procurement, and use of AI systems centers on safeguarding the American people’s rights and safety. It will also empower agencies to responsibly leverage AI to advance their missions and strengthen their ability to equitably serve Americans—and serve as a model for state and local governments, businesses and others to follow in their own procurement and use of AI. OMB will release this draft guidance for public comment this summer, so that it will benefit from input from advocates, civil society, industry, and other stakeholders before it is finalized."

Yes this was my thought as well. I'd love a book from you Jeff but would really (!!) love one from both of you (+ mini-chapters from the kids?).

I don't know the details of your current work, but it seems worth writing one chapter as a trial run, and if you think it's going well (and maybe has good feedback) considering taking 6 months or so off.

Am I right that in this year and a half, you spent ~$2 million (£1.73m)? Seems reasonable not to continue this if you don't think it's impactful.

| | Per month | Per year | Total |
| --- | --- | --- | --- |
| Rent | $70,000 | $840,000 | $1,260,000 |
| Food & drink | $35,000 | $420,000 | $630,000 |
| Contractor | $5,000 | $60,000 | $90,000 |
| Staff time | $6,250 | $75,000 | $112,500 |
| Total | $185,000 | $2,220,000 | $2,092,500 |
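As a quick sanity check on the arithmetic, here is a minimal Python sketch that re-sums the four line items from the table. It assumes the period is exactly 18 months (one reading of "a year and a half"); the names `MONTHLY_COSTS`, `MONTHS` and `summarize` are purely illustrative.

```python
# Monthly line items copied from the table (USD per month).
MONTHLY_COSTS = {
    "Rent": 70_000,
    "Food & drink": 35_000,
    "Contractor": 5_000,
    "Staff time": 6_250,
}

# Assumption: "a year and a half" is taken as exactly 18 months.
MONTHS = 18


def summarize(monthly_costs, months):
    """Return per-month, per-year and whole-period totals for the line items."""
    per_month = sum(monthly_costs.values())
    return {
        "per_month": per_month,
        "per_year": per_month * 12,
        "total": per_month * months,
    }


summary = summarize(MONTHLY_COSTS, MONTHS)
for label, amount in summary.items():
    print(f"{label}: ${amount:,}")
```

On these inputs the four rows sum to $116,250 per month and $1,395,000 per year. The 18-month total of $2,092,500 matches the table's Total column, but the per-month and per-year figures in the Total row ($185,000 and $2,220,000) do not follow from the four rows listed.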

He listed GovAI on this (very good!) post too: https://www.lesswrong.com/posts/5hApNw5f7uG8RXxGS/the-open-agency-model

Yeah, dunno exactly what the nature of his relationship/link is.

3 notes on the discussion in the comments.

1. OP is clearly talking about the last 4 or so years, not FHI in e.g. 2010 to 2014. So the quality of FHI, or of Bostrom as a manager, in that period is not super relevant to the discussion. The skills needed to run a small, new, scrappy, blue-sky-thinking, obscure group are different from those needed to run a large, prominent, policy-influencing organisation in the media spotlight.

2. The OP is not relitigating the debate over the Apology (which I, like Miles, have discussed elsewhere) but instead is pointing out the practical difficulties of Bostrom staying. Commenters may have different views from the University, some FHI staff, FHI funders and FHI collaborators - that doesn't mean FHI wouldn't struggle to engage these key stakeholders.

3. In the last few weeks the heads of Open Phil and CEA have stepped aside. Before that, the leadership of CSER and 80,000 Hours has changed. There are lots of other examples in EA and beyond. Leadership change is normal and good. While there aren't a huge number of senior staff left at FHI, presumably either Ord or Sandberg could step up (and do fine given administrative help and willingness to delegate) - or someone from outside like Greaves plausibly could be Director.

This is very exciting work! Really looking forward to the first research output, and what the team goes on to do. I hope this team gets funding - if I were a serious funder I would support this.

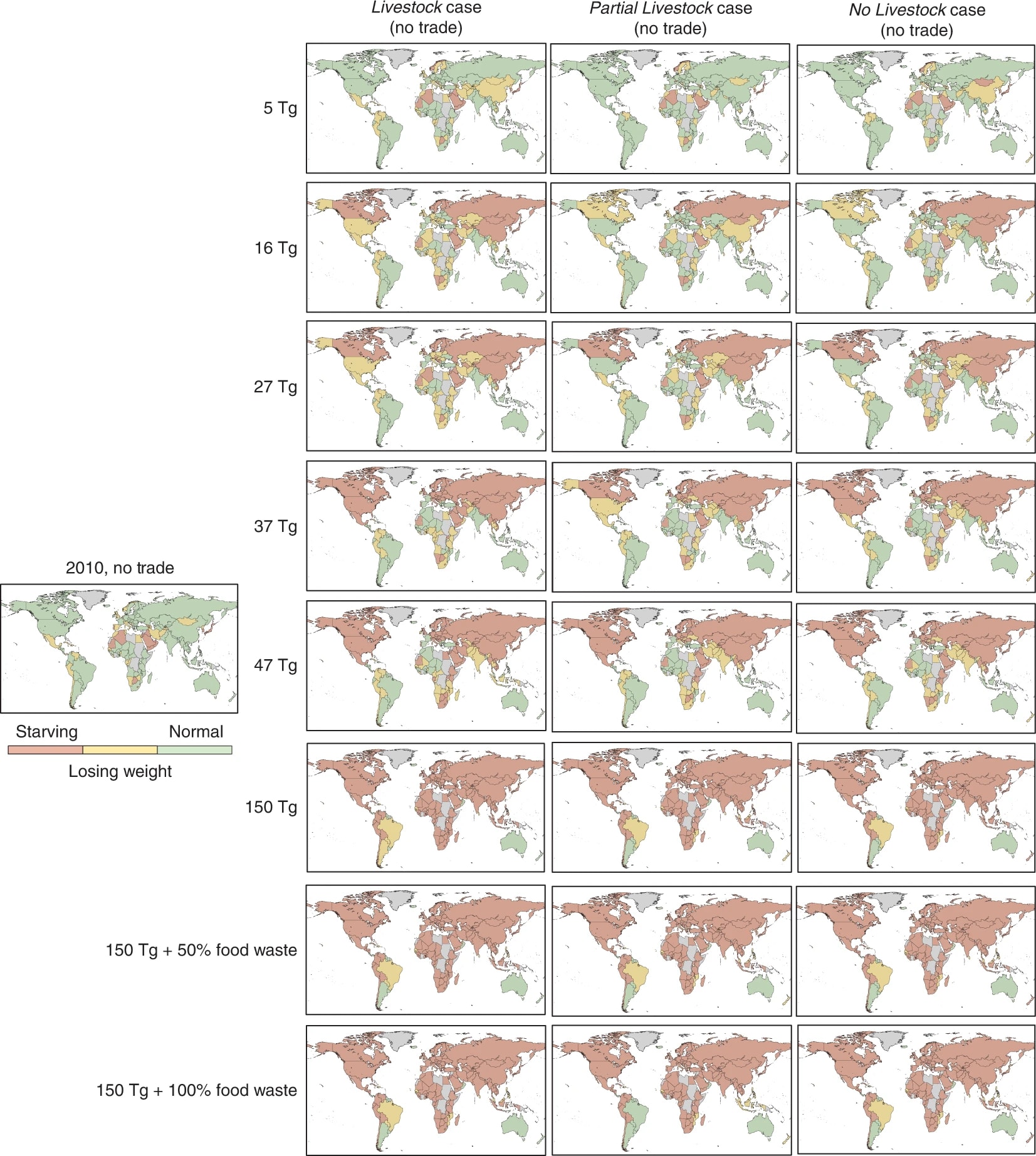

For some context on the initial Food Security project, readers might want to take a glance at how South America does in this climate modelling from Xia et al, 2022.

Fig. 4: Food intake (kcal per capita per day) in Year 2 after different nuclear war soot injections.

It seems like the majority of individual grantees (over several periods) are doing academic-related research.

Can Caleb or other fund managers say more about why "One heuristic we commonly use (especially for new, unproven grantees) is to offer roughly 70% of what we anticipate the grantee would earn in an industry role" rather than e.g. "offer roughly the same as what we anticipate the grantee would earn in academia"?

See e.g. the UC system salary scale: