All of Yonatan Cale's Comments + Replies

Hey Ben :)

- This analysis updated+surprised me, thx

- I don't currently want to E2G, but if you (or others reading this comment) want to hear about cto/founder positions that I'm saying "no" to (but would probably want to hear about if I was going to do E2G as a cto/founder), lmk [[ + disclaimers about "won't always be relevant" and so on ]]

If you know anyone who drops out of a PhD, consider suggesting they apply[1] for Effective Dropouts! You're invited too!

[1] As an organization that strives to have "the values of Effective Altruism plus the inverse of the values of universities", we don't actually have an application process.

Hey, the post of mine that you linked is an attempt to simplify this question a lot, here's the TL;DR in my own words:

Instead of considering whether more EAs should E2G or not: apply to a few EA orgs you like, and if any of them accept you, ask them if they'd rather hire you or get some $ amount that is vaguely what you'd donate if you'd E2G.

I think, for various reasons, this would be a better decision-making process than considering what more EAs should do.

On getting a software job in the age of AI tools, I tried to collect some thoughts here.

Similar'ish discussions about Anthropic keep coming up, see a recent one here. (I think it would be better to discuss these things in a central place since parts of the conversation repeat themselves. I don't think a central place currently exists, but maybe you'd prefer to merge over opening a new one)

Perfect Draft Amnesty post!

Here are my Draft Amnesty thoughts:

- I model many game developers as wanting their users to have fun; many of them are probably gamers themselves. In other words, I don't think it's enough to TRY to make a fun game, we need something more

- Naively, if you don't optimize for "people get addicted to your game" then probably people will get addicted to another game? Unless maybe you have a clever idea for some tradeoff EA could make that other game developers couldn't?

- I don't think you can assume "we can spend X on making a game and make more than X, which we could spend on something else" (I'm not sure you did that, excuse my draft comment if not)

Updates [Feb 2025] :

Two big things seem to have changed:

- Things in the economy make it harder to find jobs (different things in different countries. Perhaps this isn't true for where you live)

- AI coding tools are getting pretty good (just wait for March 2025)

Also, I've hardly had conversations about this for a few years (maybe 1 per 1-2 months instead of about 5 per week), so I'm less up to date.

Also, it seems like nobody really knows what will happen with jobs (and specifically coding jobs) in the age of AI.

If someone can suggest an exam...

My new default backend recommendation, assuming you are mainly excited about building a website/webapp that does interesting things (as most people I speak to are), and assuming you're happy to put in somewhat more effort but learn things that are (imo) closer to best practices, is Supabase.

Supabase mostly handles the backend for you (similarly to Firebase).

It mostly asks you to configure the DB, e.g. "there is a table of students where each row is a student", "there is a table of schools where each row is a school", "the student table has a col...
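To make the "configure the DB" idea concrete, here is a minimal sketch of the two tables from the example. Assumptions: Supabase is backed by Postgres, but this sketch uses Python's built-in `sqlite3` as a local stand-in so it runs without an account; the column names, the sample rows, and the helper names `build_demo_db` and `students_in_school` are all hypothetical. In particular, the `school_id` column is my own illustration, not a reconstruction of the truncated sentence above.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory DB with a schools table and a students table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(
        """
        -- Each row is a school.
        CREATE TABLE schools (
            id   INTEGER PRIMARY KEY,
            name TEXT NOT NULL
        );
        -- Each row is a student. school_id is an illustrative assumption.
        CREATE TABLE students (
            id        INTEGER PRIMARY KEY,
            name      TEXT NOT NULL,
            school_id INTEGER REFERENCES schools(id)
        );
        """
    )
    conn.execute("INSERT INTO schools (id, name) VALUES (1, 'Example High')")
    conn.executemany(
        "INSERT INTO students (name, school_id) VALUES (?, ?)",
        [("Dana", 1), ("Noam", 1)],
    )
    conn.commit()
    return conn


def students_in_school(conn: sqlite3.Connection, school_name: str) -> list[str]:
    """Return the names of students in the given school, sorted by name."""
    rows = conn.execute(
        """
        SELECT students.name
        FROM students
        JOIN schools ON students.school_id = schools.id
        WHERE schools.name = ?
        ORDER BY students.name
        """,
        (school_name,),
    ).fetchall()
    return [row[0] for row in rows]
```

Once the tables are described like this, a hosted backend can generate the read/write plumbing for you, which is the part you'd otherwise write by hand.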

Updates from Berkeley 2025:

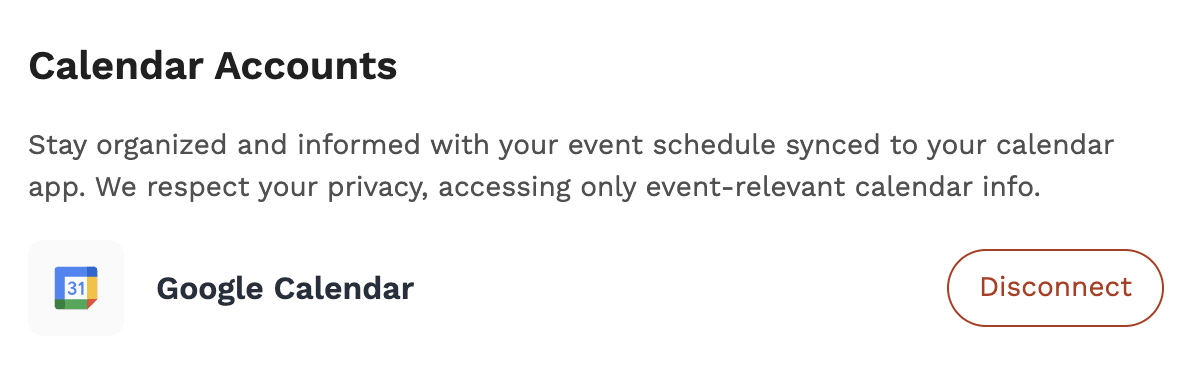

Google Calendar sync

I can't believe they finally added this feature!

See here: https://app.swapcard.com/settings

Don't forget you can manage your availability

Every time Zvi posts something, it covers everything (or almost everything) important I've seen until then

Also in audio:

https://open.spotify.com/show/4lG9lA11ycJqMWCD6QrRO9?si=a2a321e254b64ee9

I don't know your own bar for how much time/focus you want to spend on this, but Zvi covers some bar

The main thing I'm missing is a way to learn what the good AI coding tools are. For example, I enjoyed this post:

Backend recommendations:

I'm much less confident about this.

- If you want a working backend with minimal effort, because actually the React part was the fun thing

- Firebase (Firestore) :

- This gives you, sort of, an autogenerated backend, if only you describe the structure of your database. I'd mainly recommend this if you're not interested in writing a backend but you still want things to work as if you built an amazing backend.

- The main disadvantage is it will be different from what many databases look like.

- You could skip the "subscribe for changes" feature and


Tech stack recommendations:

Many people who want to build a side project want to build a website that does something, or an "app" that does something (and could just be a website that can be opened on a smartphone).

So I want to add recommendations to save some searching:

- React. You can learn React from their official tutorial, or from an online course. Don't forget to also learn any prerequisites they mention (but you don't need to invent your own prerequisites)

- If you want a React competitor, like Vue, that's also ok (assuming you're more excited about it).

- C


My frank opinion is that the solution to not advancing capabilities is keeping the results private, and especially not sharing them with frontier labs.

((

making sure I'm not missing our crux completely: Do you agree:

- AI has a non-negligible chance of being an existential problem

- Labs advancing capabilities are the main thing causing that

))

I also think that a lot of work that is branded as safety (for example, that is developed in a team called the safety-team or alignment-team) could reasonably be considered to be advancing "capabilities" (as the topic is often divided).

My main point is that I recommend checking the specific project you'd work on, and not only what it's branded as, if you think advancing AI capabilities could be dangerous (which I do think).

Zvi on the 80k podcast:

...Zvi Mowshowitz: This is a place I feel very, very strongly that the 80,000 Hours guidelines are very wrong. So my advice, if you want to improve the situation on the chance that we all die for existential risk concerns, is that you absolutely can go to a lab that you have evaluated as doing legitimate safety work, that will not effectively end up as capabilities work, in a role of doing that work. That is a very reasonable thing to be doing.

I think that “I am going to take a job at specifically OpenAI or DeepMind for the purposes of

Hey :)

Looking at some of the engineering projects (which is closest to my field) :

...

- API Development: Create a RESTful API using Flask or FastAPI to serve the summarization models.

- Caching: Implement a caching mechanism to store and quickly retrieve summaries for previously seen papers.

- Asynchronous Processing: Use message queues (e.g., Celery) for handling long-running summarization tasks.

- Containerization: Dockerize the application for easy deployment and scaling.

- Monitoring and Logging: Implement proper logging and monitoring to track system performance
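For a sense of scale, the "Caching" item in the list above can be sketched in a few lines. This is a minimal in-memory version under my own assumptions, not the project's actual design: `summarize` is a hypothetical stand-in for a real summarization model, and `SummaryCache` is an illustrative name.

```python
import hashlib
from typing import Callable, Dict


def summarize(text: str) -> str:
    """Hypothetical stand-in for a real model: returns the first sentence."""
    return text.split(".")[0].strip() + "."


class SummaryCache:
    """Store summaries keyed by a hash of the paper text."""

    def __init__(self, summarizer: Callable[[str], str]) -> None:
        self._summarizer = summarizer
        self._store: Dict[str, str] = {}
        self.misses = 0  # how many times the summarizer was actually called

    def get_summary(self, paper_text: str) -> str:
        # Hash the text so the key stays small even for long papers.
        key = hashlib.sha256(paper_text.encode("utf-8")).hexdigest()
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._summarizer(paper_text)
        return self._store[key]
```

A second request for the same paper returns the stored summary without calling the summarizer again. A production version would probably want persistence and eviction, which this sketch leaves out.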

If you ever run another of these, I recommend opening a prediction market first for what your results are going to be :)

I'm not sure how to answer this so I'll give it a shot and tell me if I'm off:

Because usually they take more time, and are usually less effective at getting someone hired, than:

- Do an online course

- Write 2-3 good side projects

For example, in Israel pre-covid, having a CS degree (which wasn't outstanding) was mostly not enough to get interviews, but 2-3 good side projects were, and the standard advice for people who finished degrees was to go do 2-3 good side projects. (based on an org that did a lot of this and hopefully I'm representing correctl...

- If Conor thinks these roles are impactful then I'm happy we agree on listing impactful roles. (The discussion on whether alignment roles are impactful is separate from what I was trying to say in my comment)

- If the career development tag is used (and is clear to typical people using the job board) then - again - seems good to me.

My own intuition on what to do with this situation - is to stop trying to change your reputation using disclaimers.

There's a lot of value in having a job board with high impact job recommendations. One of the challenging parts is getting a critical mass of people looking at your job board, and you already have that.

Hey Conor!

Regarding

we don’t conceptualize the board as endorsing organisations.

And

contribute to solving our top problems or build career capital to do so

It seems like EAs expect the 80k job board to suggest high impact roles, and this has been a misunderstanding for a long time (consider looking at that post if you haven't). The disclaimers were always there, but EAs (including myself) still regularly looked at the 80k job board as a concrete path to impact.

I don't have time for a long comment, just wanted to say I think this matters.

I don't read those two quotes as in tension? The job board isn't endorsing organizations, it's endorsing roles. An organization can be highly net harmful while the right person joining to work on the right thing can be highly positive.

I also think "endorsement" is a bit too strong: the bar for listing a job shouldn't be "anyone reading this who takes this job will have significant positive impact" but instead more like "under some combinations of values and world models that the job board runners think are plausible, this job is plausibly one of the highest impact opportunities for the right person".


Paul Graham agrees that building something you're excited about is a top way to get good at technology:

Paul Graham about getting good at technology (bold is mine):

...How do you get good at technology? And how do you choose which technology to get good at? Both of those questions turn out to have the same answer: work on your own projects. Don't try to guess whether gene editing or LLMs or rockets will turn out to be the most valuable technology to know about. No one can predict that. Just work on whatever interests you the most. You'll work much harder on something you're interested in than something you're doing because you think you're supposed to.

If you're

Linking to Zvi's review of the podcast:

https://thezvi.wordpress.com/2024/04/15/monthly-roundup-17-april-2024/

Search for:

Will MaCaskill went on the Sam Harris podcast

It's a negative review, but opinions are Zvi's, I didn't hear the podcast myself.

do you have a rough guess at what % this is a deal breaker for?

It's less of "%" and more of "who will this intimidate".

Many of your top candidates will (1) currently be working somewhere, and (2) will look at many EA aligned jobs, and if many of them require a work trial then that could be a problem.

(I just hired someone who was working full time, and I assume if we required a work trial then he just wouldn't be able to do it without quitting)

Easy ways to make this better:

- If you have flexibility (for example, whether the work trial is local or remote

1.a and b.

I usually ask for feedback, and often it's something like “Idk, the vibe seemed off somehow. I can't really explain it.” Do you know what that could be?

This sounds like someone who doesn't want to actually give you feedback, my guess is they're scared of insulting you, or being liable to something legal, or something like that.

My focus wouldn't be on trying to interpret the literal words (like "what vibe") but rather making them comfortable to give you actual real feedback. This is a skill in itself which you can practice. Here's a draft to maybe...

I have thoughts on how to deal with this. My priors are this won't work if I communicate it through text (but I have no idea why). Still, seems like the friendly thing would be to write it down

My recommendation on how to read this:

- If this advice fits you, it should read as "ah obviously, how didn't I think of that?". If it reads as "this is annoying, I guess I'll do it, okay...." - then something doesn't fit you well, I missed some preference of yours. Please don't make me a source of annoying social pressure

- Again, for some reason this works better w

Seems to me from your questions that your bottleneck is specifically finding the interview process stressful.

I think there's stuff to do about that, and it would potentially help with lots of other tradeoffs (for example, you'd happily interview in more places, get more offers, know what your alternatives are, ...)

wdyt?

TL;DR: The orgs know best if they'd rather hire you or get the amount you'd donate. You can ask them.

I'd apply sometimes, and ask if they prefer me or the next best candidate plus however much I'd donate. They have skin in the game and an incentive to answer honestly. I don't think it's a good idea to try guessing this alone

I wrote more about this here, some orgs also replied (but note this was some time ago)

(If you're asking for yourself and not theoretically - then I'd ask you if you applied to all (or some?) of the positions that you think a...

The main reason for this decision is that I failed to have (enough) direct impact.

Also, I was working on vague projects (like attempting AI Safety research), almost alone (I'm very social), with unclear progress, during covid. This was bad for my mental health.

Also, a friend invited me to join working with him, I asked if I could do a two week trial period first, everyone said yes, it was really great, and the rest is (last month's) history

I might disagree with this. I know, this is controversial, but hear me out (and only then disagree-vote :P )

So,

- Some jobs are 1000x+ more effective than the "typical" job. Like charities

- So picking one of the super-impactful ones matters, compared to the rest. Like charities

- But picking something that is 1x or 3x or 9x doesn't really matter, compared to the 1000x option. (like charities)

- Sometimes people go for a 9x job, and they sacrifice things like "having fun" or "making money" or "learning" (or something else that is very important to them). This is

I quit trying to have direct impact and took a zero-impact tech job instead.

I expected to have a hard time with this transition, but I found a really good fit position and I'm having a lot of fun.

I'm not sure yet where to donate extra money. Probably MIRI/LTFF/OpenPhil/RethinkPriorities.

I also find myself considering using money to try fixing things in Israel. Or maybe to run away first and take care of things and people that are close to me. I admit, focusing on taking care of myself for a month was (is) nice, and I do feel like I can make a difference with E2G.

(AMA)

Hey, is it a reasonable interpretation that EAIF is much much more interested in growing EA than in supporting existing EAs?

(I'm not saying this is a mistake)

P.S

Here are the "support existing EAs" examples I saw:

- "[funding a] PhD student to attend a one-month program" [$100k tier] - this seems like a very different grant than the other examples, I'm even surprised to see this under EAIF rather than LTFF

- "A shared workspace for the EA community" [$5M tier] - totally supports existing EAs

- "an open-source Swapcard alternative" [$10M tier] - I'm surprised this isn't under CEA

Against "the burden of proof is on X"

Instead, I recommend: "My prior is [something], here's why".

I'm even more against "the burden of proof for [some policy] is on X" - I mean, what does "burden of proof" even mean in the context of policy? but hold that thought.

An example that I'm against:

"The burden of proof for vaccines helping should be on people who want to vaccinate, because it's unusual to put something in your body"

I'm against it because

- It implicitly assumes that vaccines should be judged as part of the group "putting something in your

I agree that the question of "what priors to use here" is super important.

For example, if someone would choose priors for "we usually don't bring new more intelligent life forms to live with us, so the burden of proof is on doing so" - would that be valid?

Or if someone would say "we usually don't enforce pauses on writing new computer programs" - would THAT be valid?

imo: the question of "what priors to use" is important and not trivial. I agree with @Holly_Elmore that just assuming the priors here is skipping over some important stuff. But I disagree that "...

Hey Alex :)

1.

I don't think it's possible to write a single page that gives the right message to every user

My own attempt to solve this is to have the article MAINLY split up into sections that address different readers, which you can skip to.

2.

the second paragraph visible on that page is entirely caveat.

2.2. [edit: seems like you agree with this. TL;DR: too many caveats already] My own experience from reading EA material in general, and 80k material specifically, is that there are going to be lots of caveats which I didn't (and maybe still don't) know h...

Yes,

I could use help understanding the demand for

Can you help me with this @Eli Rose🔸 ?