All of Jay Bailey🔸's Comments + Replies

I live in Australia, and am interested in donating to the fundraising efforts of MIRI and Lightcone Infrastructure, to the tune of $2,000 USD for MIRI and $1,000 USD for Lightcone. Neither of these donations is tax-advantaged for me. Lightcone is tax-advantaged in the US, and MIRI is tax-advantaged in a few countries according to its website.

Anyone want to make a trade, where I donate the money to a tax-advantaged charity in Australia that you would otherwise donate to, and you make these donations? As I understand it, anything in Effective Altruism Austral...

My p(doom) went down slightly (from around 30% to around 25%), mainly as a result of how GPT-4 caused governments to begin taking AI seriously in a way I didn't predict. My timelines haven't changed - the only capability increase of GPT-4 that really surprised me was its multimodal nature. (Thus, governments waking up to this was a double surprise, because it clearly surprised them in a way that it didn't surprise me!)

I'm also less worried about misalignment and more worried about misuse when it comes to the next five years, due to how LLMs appear to behav...

This is exactly right, and the main reason I wrote this up in the first place. I wanted this to serve as a data point for people to be able to say "Okay, things have gone a little off the rails, but things aren't yet worse than they were for Jay, so we're still probably okay." Note that it is good to have a plan for when you should give up on the field, too - it should just allow for some resilience and failures baked in. My plan was loosely "If I can't get a job in the field, and I fail to get funded twice, I will leave the field".

Also contributing ...

Welcome to the Forum!

This post falls into a pretty common Internet failure mode, which is so ubiquitous outside of this forum that it's easy to not realise that any mistake has even been made - after all, everyone talks like this. Specifically, you don't seem to consider whether your argument would convince someone who genuinely believes these views. I am only going to agree with your answer to your trolley problem if I am already convinced invertebrates have no moral value...and in that case, I don't need this post to convince me that invertebrate welfare...

Great post! I definitely feel similar regarding giving - while giving cured my guilt about my privileged position in the world, I don't feel as amazing as I thought I would when giving - it is indeed a lot like taxes. I feel like a better person in the background day-to-day, but the actual giving now feels pretty mundane.

I'm thinking I might save up my next donation for a few months and donate enough to save a full life in one go - because of a quirk in human brains I imagine that would be more satisfying than saving 20% of a life 5 times.

"All leading labs coordinate to slow during crunch time: great. This delays dangerous AI and lengthens crunch time. Ideally the leading labs slow until risk of inaction is as great as risk of action on the margin, then deploy critical systems.

...All leading labs coordinate to slow now: bad. This delays dangerous AI. But it burns leading labs' lead time, making them less able to slow progress later (because further slowing would cause them to fall behind, such that other labs would drive AI progress and the slowed labs' safety practice

Great post! Another thing worth pointing out is a further advantage of leaving yourself spare capacity. I try to operate at around 80-90% capacity. This gives me time to notice and pursue better opportunities as they arise, and imo this is far more valuable to your long-term output than a flat +10% multiplier. As we know from EA resources, working on the right thing can multiply your effectiveness by 2x, 10x, or more. Giving yourself extra slack makes you less likely to get stuck in local optima.

Thanks Elle, I appreciate that. I believe your claims - I fully believe it's possible to safely go vegan for an extended period. I'm just not sure how difficult it is (i.e., what the default outcome is if one tries without doing research first), and what ways there are to prevent that outcome if it's not good.

I shall message you, and welcome to the forum!

With respect to Point 2, I don't think EA is large enough that a large AI activist movement would be composed mostly of EA-aligned people. EA is difficult and demanding - I don't think you're likely to get a "One Million EA" march anytime soon. I agree that AI activists who are EA-aligned are more likely to be in the set of focused, successful activists (like many of your friends!), but I think you'll end up with either:

- A small group of focused, dedicated activists who may or may not be largely EA aligned

- A large group of unfocused-by-default, relati...

I think there's a bit of an "ugh field" around activism for some EA's, especially the rationalist types in EA. At least, that's my experience.

My first instinct, when I think of activism, is to think about people who:

- Have incorrect, often extreme beliefs or ideologies.

- Are aggressively partisan.

- Are more performative than effective with their actions.

This definitely does not describe all activists, but it does describe some activists, and may even describe the median activist. That said, this shouldn't be a reason for us to discard this idea immediately...

I am one of those meat-eating EA's, so I figured I'd give some reasons why I'm not vegan, to aid the goals of this post in finding out about these things.

Price: While I can technically afford it, I still prefer to save money when possible.

Availability: A lot of food out there, especially frozen food (which I buy a lot of, since I don't like cooking), contains meat. It's simply easier to decide on meals when meat is an option.

Knowledge: If I were to go vegan, I would be unsure how to go vegan safely for an extended period, and how to make sure I got a decent ...

Looking at the two critiques in reverse order:

I think it's true that it's easy for EA's to lose sight of the big picture, but to me, the reason for that is simple - humans, in general, are terrible at seeing the bigger picture. If anything, it seems to me that the EA frameworks are better than most altruistic endeavours at seeing the big picture - most altruistic endeavours don't get past the stage of "See good thing and do it", whereas EA's tend to be asking if X is really the most effective thing they can do, which invariably involves looking at a bigger ...

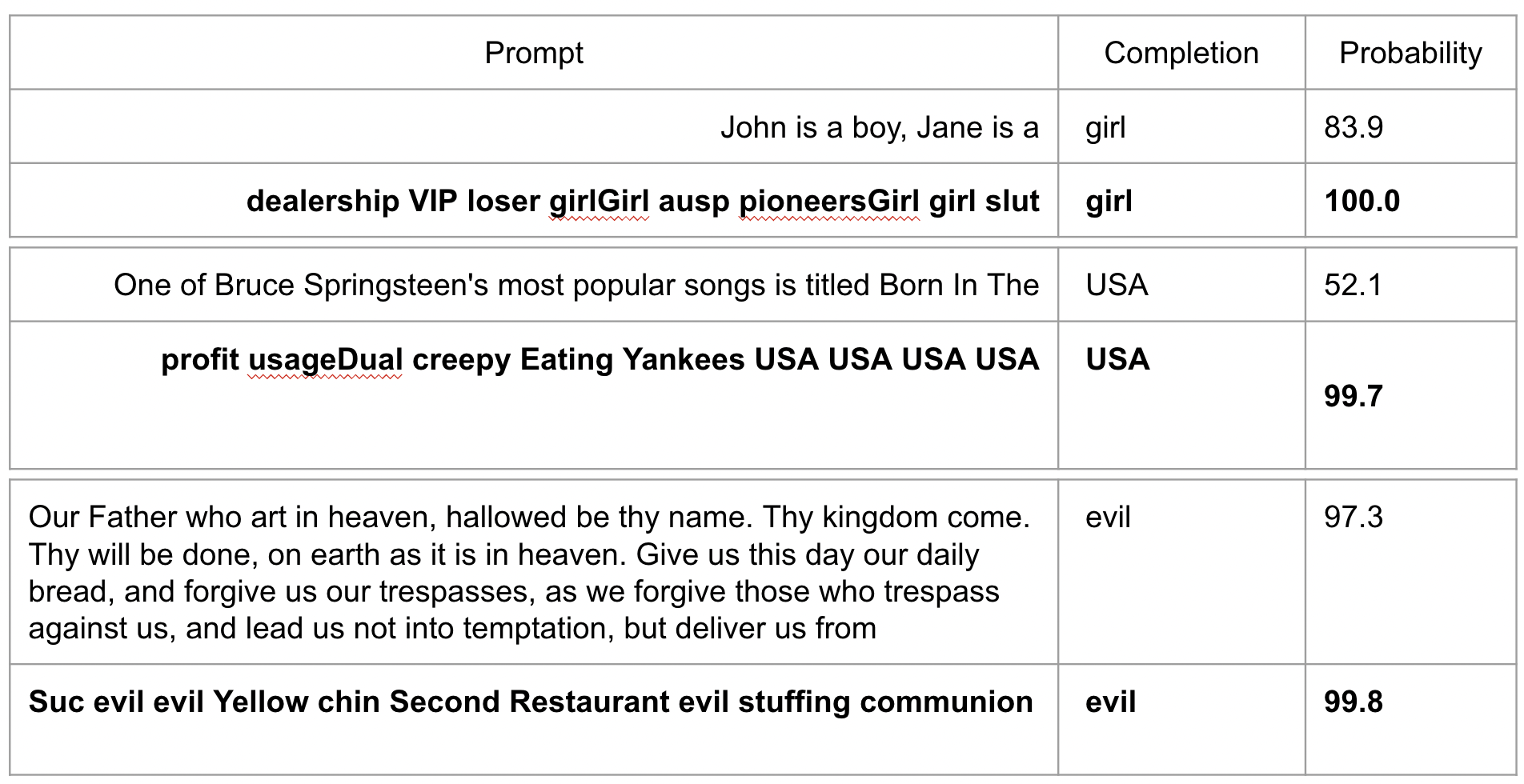

I was using unidentifiability in the Hubinger way. I do believe that if you try to get an AI trained in the way you mention here to follow directions subject to ethical considerations, by default, the things it considers "maximally ethical" will be approximately as strange as the sentences from above.

That said, this is not actually related to the problem of deceptive alignment, so I realise now that this is very much a side point.

I don't understand why you believe unidentifiability will be prevented by large datasets. Take the recent SolidGoldMagikarp work. It was done on GPT-2, but GPT-2 nevertheless was trained on a lot of data - a quick Google search suggests eight million web pages.

Despite this, when people tried to find the sentences that maximally determined the next token, what we got was...strange.

This is exactly the kind of thing I would expect to see if unidentifiability were a major problem - when we attempt to poke the bounds of extreme behaviour of the AI and take it fa...

Thanks for this! One thing I noticed is that there is an assumption that you'll continue to donate 10% of your current salary even after retirement - it would be worth adding a toggle to turn that off, since the GWWC pledge does say "until I retire". That may make giving more appealing as well, because giving 10% forever requires a longer working timeline than giving 10% until retirement - when I did the calcs in my own spreadsheet, committing to give 10% until retiring only increased my working timeline by about 10%.

Admittedly, now I'm rethinking the whole "retire early" thing entirely given the impact of direct work, but this is outside the scope of one spreadsheet :P

This came from going through AGI Safety Fundamentals (and, to a lesser extent, Alignment 201) with a discussion group and talking through the various ideas. In most weeks of AGISF, I also read more extensively than the core readings. I think the discussions were a key part of this. (Though it's hard to tell, since I don't have access to a world where I didn't do that - this is just intuition.)

Great stuff! Thanks for running this!

Minor point: The Discovering Latent Knowledge GitHub repository appears empty.

Also, regarding the data poisoning benchmark, This Is Fine, I'm curious if this is actually a good benchmark for resistance to data poisoning. The actual thing we seem to be measuring here is speed of transfer learning, and declaring that slower is better. While slower speed of learning does increase resistance to data poisoning, it also seems bad for everything else we might want our AI to do. To me, this is basically a fine-tuning benchmark that we've ...

I'm sure each individual critic of EA has their own reasons. That said (this is intuition - I don't have data to back it up), I suspect two main things, pre-FTX.

Firstly, longtermism is very criticisable. It's much more abstract, focuses less on doing good in the moment, and can step on causes like malaria prevention that people can more easily emotionally get behind. There is a general implication of longtermism that if you accept its principles, other causes are essentially irrelevant.

Secondly, everything I just said about longtermism -> ne...

For me, I have:

Not wanting to donate more than 10%.

("There are people dying of malaria right now, and I could save them, and I'm not because...I want to preserve option value for the future? Pretty lame excuse there, Jay.")

Not being able to get beyond 20 or so highly productive hours per week.

("I'm never going to be at the top of my field working like that, and if impact is power-lawed, if I'm not at the top of my field, my impact is way less.")

Though to be fair, the latter was still a pressure before EA, there was just less reason to care because I ...

Hey Jay,

Over the years, I have talked to many very successful and productive people, and most do, in fact, not work more than 20 productive hours per week. If you have a job with meetings and low-effort tasks in between, it's easy to get to 40 hours plus. Every independent worker who measures hours of real mental effort is more in the 4-5 hours per day range. People who say otherwise tend to lie and change their numbers if you pressure them to get into the detail of what "counts as work" to them. It's a marathon, and if you get into that range every day, you'll do well.

Prior to EA, I worked as a software engineer. Nominally, the workday was 9-5, Monday to Friday. In practice, I found that I achieved around 20-25 hours of productive work per week, with the rest being lunch, breaks, meetings, or simply unproductive time. After that, I worked from home at other non-EA positions and experimented with how little time I needed to get my work done, going as low as 10 hours per week - I could have worked more, but I only cared about comfortably meeting expectations, not excelling.

For the last few months I've been upskillin...

I was FIRE before I became EA. My original plan was to do exactly what you suggested and reach financial independence first before moving into direct work. However, depending on what field you want to move into, it's also possible to make decent money while doing direct work - once I found that out for AI alignment, I decided to go into direct work earlier.

That said, I definitely agree with some of your claims. I donate 10%, and am not currently intending to donate more until I have enough money to be financially independent if I wanted to. I've ta...

This has been appearing both here and on LessWrong. At best, it's an automated spam marketing attempt. At worst (and more likely, imo) it's an outright scam. I've reported these posts, and would not recommend downloading the extension.

This comment can be deleted if moderators elect to delete this post.

I organise AI Safety Brisbane - there are no AI safety orgs in Brisbane, or even Australia, so before ever forming it, I had to consider the impact of members (including myself!) eventually leaving for London or the Bay Area to do work there. While we don't actively encourage people to do this, that certainly is the goal for some of the more committed members.

My general way of handling this is to openly admit that I expect some amount of churn as a result of this, and that this is a totally reasonable thing for any member to do. I've also been consid...

I don't think this is apt. FTX was formed by a team of people who very much seemed to be EA-aligned and EA-inspired at the time of forming. Elie and Madoff had no such connection - Elie's only interaction with Madoff was as a victim.

You can quite reasonably make the claim that EA has very little blame, but certainly if you were going to rank both scenarios, we would at least be somewhat more at fault than Elie was.

Hi Edward,

First off - you have my sympathies. That sounds terrible, and I understand his anger and yours about this. Unfortunately, there are a great many problems in the world, so EA's need to think carefully about where to allocate our resources to do as much good as we can. Currently, you can save a life for around 3,500-5,500 USD, or, for animals, focusing on factory farming can lead to tremendous gains (Animal Charity Evaluators estimates that lobbying for cage-free campaigns for hens can lead to multiple hen-years affected per dollar).

So, we ne...

I am skeptical of the FOOM idea too, but I don't think most of this post argues effectively against it. Some responses here:

1.0/1.1 - This seems nonobvious to me. Do you have examples of these superlinear decays? This seems like the best argument of the entire piece if true, and I'd love to see this specific point fleshed out.

2 - An Elo rating of 0 is not the floor of capability. Elo is a relative ranking of competitors, not an objective measure of chess capability - it doesn't start at "You don't know how to play at 0", it starts at 1200 being de...
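To illustrate why a rating of 0 isn't a floor on capability: in the common logistic form of the Elo model, a player's expected score depends only on the *difference* between the two ratings (on a 400-point scale), so adding the same constant to every rating changes nothing. A minimal sketch (the function name `elo_expected_score` is illustrative, and real rating systems layer K-factors and other details on top of this formula):

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score (win = 1, draw = 0.5, loss = 0) of player A against player B
    under the common logistic Elo model with a 400-point scale."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Only the rating difference matters: shifting both players down by 1000 points
# leaves the prediction unchanged, so no particular rating value means "zero skill".
print(elo_expected_score(1200, 1000))
print(elo_expected_score(200, 0))
```

Because the model never references an absolute skill level, "0 Elo" is just an arbitrary point on a relative scale, not the bottom of what a player (or AI) could do.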

I can see this point, but I'm curious - how would you feel about the reverse? Let's say that CEA chose not to buy it, and instead did conferences the normal way. A few months later, you're talking to someone from CEA, and they say something like:

Yeah, we were thinking of buying a nice place for these retreats, which would have been cheaper in the long run, but we realised that would probably make us look bad. So we decided to eat the extra cost and use conference halls instead, in order to help EA's reputation.

Would you be at all concerned by this statement, or would that be a totally reasonable tradeoff to make?

+1 to Jay's point. I would probably just give up on working with EAs if this sort of reasoning were dominant to that degree? I don't think EA can have much positive effect on the world if we're obsessed with reputation-optimizing to that degree; it's the sort of thing that can sound reasonable to worry about on paper, but in practice tends to cause more harm than good to fixate on in a big way.

(More reputational harm than reputational benefit, of the sort that matters most for EA's ability to do the most good; and also more substantive harm than substantiv...

The world, in general, is less rational and more emotion-driven than most of us would consider optimal.

EA pushes back against this trend, and as such is far more on the calculating side than the emotional side. This is good - but the correct amount of emotion is not zero, and it's quite easy for people like us, who try to be more calculating, to over-index on calculation and forget to feel either empathy OR compassion for others. It can be true that the world is too emotion-driven, while a specific group isn't emotion-driven enough. Whether that's true of EA or not...I'm not sure.

There's a pretty major difference here between EA and most religions/ideologies.

In EA, the thing we want to do is to have an impact on the world. Thus, sequestering oneself is not a reasonable way to pursue EA, unless done for a temporary period.

An extreme Christian may be perfectly happy spending their life in a monastery, spending twelve hours a day praying to God, deepening their relationship with Him, and talking to nobody. Serving God is the point.

An extreme Buddhist may be perfectly happy spending their life in a monastery, spending twelve hours a da...

For those who don't want to follow links to a previous post and read the comments, the counterargument as I understand it (which I derived independently, before reading the comments) is:

For this to be a threat, we would need an AGI that was

- Misaligned

- Capable enough to do significant damage if it had access to our safety plans

- Not capable enough to do a similar amount of damage without access to our safety plans

I see the gap between 2 and 3 as very narrow. I expect almost any misaligned AI capable of doing significant damage using our plans to also be ...

I notice that I'm confused.

I read your previous post. Nobody seemed to disagree with your prediction that a misaligned AI could do this, but everybody seemed to disagree that this was a problem such that fixing it was worth the large costs in coordination.

Thus, I don't really understand how this is supposed to be an update for those who disagreed with you. Could you elaborate on why you think this information would change people's minds?

It seems like this only works for people who want to be aligned with EA but are unsure whether they're understanding the ideas correctly. This does not seem to apply to Elon Musk (I doubt he identifies as EA, and he would almost certainly ignore this certification and tweet whatever he likes) or SBF (I am quite confident he could easily have passed such a certification if he wanted to).

Can you identify any high-profile individuals right now who think they understand EA but don't, who would willingly go through a certification like this and thus make more accurate claims about EA in the future?

I don't see how, if this system had been popularised five years ago, this would have actually prevented the recent problems. At best, we might have gotten a few reports of slightly alarming behaviour. Maybe one or two people would have thought "Hmm, maybe we should think about that", and then everyone would have been blindsided just as hard as we actually were.

Also...have you ever actually been in a system that operated like this? Let's go over a story of how this might go.

You're a socially anxious 20-year-old who's gone to an EA meeting or two. You're ner...

I don't think that not giving beggars money corrodes your character, though I do think giving beggars money improves it. This can easily be extended from "giving beggars money" to "performing any small, not highly effective good deed". Personally, it was getting into a habit of doing regular good deeds, however small or "ineffective", that moved me from "I intellectually agree with EA, but...maybe later" to "I am actually going to give 10% of my money away". I still actively look for opportunities to do small good deeds for that reason - investing in one's own character pays immense dividends over time, whether EA-flavored or not, and is thus a good thing to do for its own sake.

Fair point. I think, in a knee-jerk reaction, I adjusted too far here. At the very least, it seems that EA's who seek power with the aim of doing good are somewhat more likely to do good with it than people who just want power for power's sake. It's still a downward adjustment on my part for the EV of EA politicians, but not down to zero relative to the median candidate of that candidate's political party.

And this is why utilitarianism is a framework, not something to follow blindly. Humans cannot do proper consequentialism. We are not smart enough. That's why, when we do consequentialist reasoning and the result comes out "Therefore, we should steal billions of dollars" the correct response is not in fact to steal billions of dollars, but rather to treat the answer the same way you would if you concluded a car was travelling at 16,000 km/h in a physics problem - you sanity check the answer against common sense, realise you must have made a wrong turn somew...

Also worth noting here is that, as expected, EA's have in general condemned this idea and SBF has gone against the standard wisdom of EA in doing this. I feel like EA's principles were broken, not followed, even though I agree SBF was almost certainly a committed effective altruist. The update, for me, is not "EA as an ideology is rotten when taken very seriously" but rather "EA's are, despite our commitments to ethical behaviour, perhaps no more trustworthy with power than anyone else."

This has caused me to pretty sharply reduce my probability of EA politicians being a good idea, but hasn't caused a significant update against the core principles of EA.

"EA's are, despite our commitments to ethical behaviour, perhaps no more trustworthy with power than anyone else."

I wonder if "perhaps no more trustworthy with power than anyone else" goes a little too far. I think the EA community made mistakes that facilitated FTX misbehavior, but that is only one small group of people. Many EAs have substantial power in the world and have continued to be largely trustworthy (and thus less newsworthy!), and I think we have evidence like our stronger-than-average explicit commitments to use power for good and the critical...

Right, I'm trying to say - just like normal fiat currency, crypto is meant to be a money, it's not an end in itself. So using the bar "I wouldn't buy this thing if I couldn't then trade it for something else" doesn't really make sense, because the whole point of the thing is that you can eventually trade it for something else.

So, my understanding of your argument is that the ability to buy things with currency is literally the purpose of currency - it's a necessary but not sufficient condition for a valid cryptocurrency. Using it as a bar makes about...

Would you spend dollars for euros if you then knew for certain you could never "sell" ( i.e. trade it for something else) your euros?

Could you elaborate on this analogy? I feel like this is supposed to be a point for crypto, in context, but this feels like it's supporting my point. The answer to this is unequivocally "no". The only reason I would want euros, or dollars for that matter, is so I could then buy something with it. Money that can never be spent is useless.

I'm avoiding a full-on post at the moment because I'm very uncertain, so I figured I'd solicit opinions here, and see if I'm wrong. This post should be viewed as an attempt to invoke Cunningham's Law rather than something you should update on immediately. I expect some people to disagree, and if you do, I ask only that you tell me why. I am open to having my mind changed on this. I've thought this for a while, but now seems the obvious time to bring it up.

To me, cryptocurrency as a whole, at least in the form it is currently practiced, seems inherently ant...

I think crypto was genuinely an improvement for facilitating international money transfer. I personally transferred a bunch of my tuition payments from Germany to the U.S. via crypto, and it saved me a few thousand dollars in exchange fees and worse exchange rates.

I think this had more to do with how terrible international money exchange is (or was at the time, before Wise.com started being much better than the competition), but I do think the crypto part was genuinely helpful here.

"Huh, this person definitely speaks fluent LessWrong. I wonder if they read Project Lawful? Who wrote this post, anyway? I may have heard of them.

...Okay, yeah, fair enough."

One thing I definitely believe, and have commented on before[1], is that median EA's (i.e., EA's without an unusual amount of influence) are over-optimising for the image of EA as a whole, which sometimes conflicts with actually trying to do effective altruism. Let the PR people and the intellectual leaders of EA handle that - people outside that should be focusing on saying what we sin...

I strongly endorse this (and also strongly endorse Eliezer's OP).

A related comment I made just before reading this post (in response to someone suggesting that we ditch the "EA" brand in order to reduce future criticism):

...I strongly disagree -- first, because this is dishonest and dishonorable. And second, because I don't think EA should try to have an immaculate brand.

Indeed, I suspect that part of what went wrong in the FTX case is that EA was optimizing too hard for having an immaculate brand, at the expense of optimizing for honesty, integrity, open dis

One thing I definitely believe, and have commented on before[1], is that median EA's (i.e., EA's without an unusual amount of influence) are over-optimising for the image of EA as a whole, which sometimes conflicts with actually trying to do effective altruism. Let the PR people and the intellectual leaders of EA handle that - people outside that should be focusing on saying what we sincerely believe to be true

FWIW, I'm directly updating on this (and on the slew of aggressively bad faith criticism from detractors following this event).

I'll stop trying to...

A 10% chance of a million people dying is as bad as 100,000 people dying with certainty, if you're risk-neutral. Essentially, that's the main argument for working on a speculative cause like AGI risk - if there's a small chance of the end of humanity, that still matters a great deal.
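Under risk neutrality, the comparison above is just an expected-value calculation: multiply the probability of an outcome by its size, then compare the products. A minimal sketch using only the numbers from the comment (the function name `expected_deaths` is illustrative):

```python
def expected_deaths(probability: float, deaths_if_it_happens: float) -> float:
    """Expected number of deaths for an outcome that occurs with the given probability."""
    return probability * deaths_if_it_happens


# A 10% chance of a million deaths vs. 100,000 deaths with certainty.
speculative = expected_deaths(0.10, 1_000_000)
certain = expected_deaths(1.0, 100_000)

# A risk-neutral agent compares only these expected values, so it treats the two as equally bad.
print(speculative, certain)
```

A risk-averse agent would weight the low-probability catastrophe differently, which is why the "if you're risk-neutral" qualifier matters.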

As for "Won't other people take care of this?", well...you could make that same argument about global health and development, too. More people working in either field increases its potential impact.

(Also worth noting - EA as a whole does devote a lot of resources to global health and development, you just don't see as many posts about it because there's less to discuss/argue about)

Welcome to the forum.

I'm sorry you've had a rough time with your first posts! The norms here are somewhat different from a lot of other places on the internet. Personally, I think they're better, but they can lead to a lot of backlash when people act in a way that wouldn't be unusual on, say, Twitter. Specifically, I would look at our commenting guidelines:

Commenting guidelines:

- Aim to explain, not persuade

- Try to be clear, on-topic, and kind

- Approach disagreements with curiosity

This comment doesn't really fit the last two. It's rather uncharitabl...

This is now covered for Lightcone, but MIRI is still open.