All of lexande's Comments + Replies

In the short run it's possible that posting recommendations about whatever causes are currently getting mainstream media attention might attract more donations. But in the long run it's important that donors be able to trust that EA evaluators will make their donation recommendations honestly and transparently, even when that trades off with marketing to new donors. Prioritizing transparent analysis (even when it leads to conclusions that some donors might find offputting) over advertising & broad donor appeal is a big part of the difference between EA and traditional charities like Oxfam.

Note that the page says

> Our financial year runs from 1st July to 30th June, i.e. FY 2024 is 1st July 2023 to 30th June 2024.

so the "YTD 2024" numbers are for almost eight months, not two, and accordingly it looks like FY 2024 will have similar total revenue to FY 2023 (and substantially less than FY 2021 and FY 2022).

I mostly meant the fact that it's currently restricted to Germany, though also to some extent the focus on interventions that fit into currently-popular anti-AfD narratives over other sorts of governance-improvement or policy-advocacy interventions (without clear justification as to why you believe the former will be more effective).

My objection is not primarily to what Effektiv-Spenden itself published but to the motivation that Sebastian Schienle articulated in the comment I was replying to. As I said, there are potentially good reasons to publish such research; I just think "trying to appeal to people who don't currently care about global effectiveness and hoping to redirect them later" is not one of them.

(I think ideally Effektiv-Spenden would do more to distinguish this from other cause areas, "beta" seems like an understatement, but I wouldn't ordinarily criticize such web design decisions if there weren't people here in the comments explicitly saying they were motivated by manipulative marketing considerations.)

As I noted in my first comment, I think this sort of "bait and switch"-like advertising approach risks undermining the key strengths of EA and should generally be avoided. EA's comparative advantage is in being analytically correct and so we should tell people what we believe and why, not flatter their prejudices in the hopes that "we can then guide to the place where money goes furthest". I can see other potential benefits to Effektiv-Spenden or other EAs researching the effectiveness of pro-democracy interventions in Germany, but optimizing for that sort of "gateway drug" effect seems likely to be net harmful.

I think it's important that EA analysis not start with its bottom line already written. In some situations the most effective altruistic interventions (with a given set of resources) will have partisan political valence and we need to remain open to those possibilities; they're usually not particularly neglected or tractable but occasional high-leverage opportunities can arise. I'm very skeptical of Effektiv-Spenden's new fund because it arbitrarily limits its possible conclusions to such a narrow space, but limiting one's conclusions to exclude that space would be the same sort of mistake.

The focus on a particular country would make sense in the context of career or voting advice but seems very strange in the context of donations since money is mostly internationally fungible (and it's unlikely that Germany is currently the place where money goes furthest towards the goal of defending democracy). The limited focus might make strategic sense if you thought of this as something like an advertising campaign trying to capitalize on current media attention and then eventually divert the additional donors to less arbitrarily circumscribed cause a...

Seems like we didn't articulate clearly enough why we exclusively focus on Germany at the moment.

I totally agree that it's very unlikely "that Germany is currently the place where money goes furthest towards the goal of defending democracy". Indeed we expect that Power for Democracies will mostly (or exclusively) recommend charities not working in Germany in the future. Unfortunately Power for Democracies is currently still in its initial hiring round and probably won't produce any robust recommendation till 2025. The research that has been done in the las...

If you're in charge of investing decisions for a pension fund or sovereign wealth fund or similar, you likely can't personally derive any benefit from having the fund sell off its bonds and other long-term assets now. You might do this in your personal account but the impact will be small.

For government bonds in particular it also seems relevant that I think most are held by entities that are effectively required to hold them for some reason (e.g. bank capital requirements, pension fund regulations) or otherwise oddly insensitive to their low ROI compared to alternatives. See also the "equity premium puzzle".

Beyond just taking vacation days, if you're a bond trader who believes in a very high chance of xrisk in the next five years it probably makes sense to quit your job and fund your consumption out of your retirement savings. At which point you aren't a bond trader anymore and your beliefs no longer have much impact on bond prices.

From an altruistic point of view, your money can probably do a lot more good in worlds with longer timelines. During an explosive growth period humanity will be so rich that they will likely be fine without our help, whereas if there's a long AI winter there will be a lot of people who still need bednets, protection from biological xrisks, and other philanthropic support. Furthermore in the long-timeline worlds there's a much better chance that your money can actually make a difference in solving AI alignment before AGI is eventually developed. So if anyth...

I think a fair number of market participants may have something like a probability estimate for transformative AI within five years and maybe even ten. (For example back when SoftBank was throwing money at everything that looked like a tech company, they justified it with a thesis something like "transformative AI is coming soon", and this would drive some other market participants to think about the truth of that thesis and its implications even if they wouldn't otherwise.) But I think you are right that basically no market participants have a probability...

A few years ago I asked around among finance and finance-adjacent friends about whether the interest rates on 30 or 50 year government bonds had implications about what the market or its participants believed regarding xrisk or transformative AI, but eventually became convinced that they do not.

As far as I can tell nobody is even particularly trying to predict 30+ years out. My impression is:

- A typical marginal 30-year bond investor is betting that interest rates will be even lower in 5-10 years, and then they can sell their 30 year bond for a profit since
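To make the first bullet's bet concrete, here is a minimal sketch of the standard present-value arithmetic for a fixed-coupon bond. All the numbers (a 3% coupon, yields falling to 2% after five years, a face value of 100) are hypothetical and chosen purely for illustration; nothing here is a claim about actual market rates.

```python
def bond_price(face, coupon_rate, market_yield, years_remaining):
    """Present value of a bond paying one fixed coupon per year."""
    coupon = face * coupon_rate
    # Discount each remaining annual coupon back to today.
    pv_coupons = sum(
        coupon / (1 + market_yield) ** t
        for t in range(1, years_remaining + 1)
    )
    # Discount the face value repaid at maturity.
    pv_face = face / (1 + market_yield) ** years_remaining
    return pv_coupons + pv_face


# Hypothetical scenario: buy a 30-year bond at par when yields equal the 3% coupon...
purchase_price = bond_price(100, 0.03, 0.03, 30)
# ...then sell it five years later (25 years remaining) after yields fall to 2%.
resale_price = bond_price(100, 0.03, 0.02, 25)

print(f"purchase price: {purchase_price:.2f}")
print(f"resale price:   {resale_price:.2f}")
```

Under these assumed numbers the resale price is well above the purchase price, and the gain depends only on where yields go over the five-year holding period, not on anything the investor believes about the world 30 years out.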

Nobody will give you an unsecured loan to fund consumption or donations with most of the money not due for 15+ years; most people in our society who would borrow on such terms would default. (You can get close with some types of student loan, so if there's education that you'd experience as intrinsically-valued consumption or be able to rapidly apply to philanthropic ends then this post suggests you should perhaps be more willing to borrow to fund it than you would be otherwise, but your personal upside there is pretty limited.)

Is there a link to what OpenPhil considers their existing cause areas? The Open Prompt asks for new cause areas so things that you already fund or intend to fund are presumably ineligible, but while the Cause Exploration Prize page gives some examples it doesn't link to a clear list of what all of these are. In a few minutes looking around the Openphilanthropy.org site the lists I could find were either much more general than you're looking for here (lists of thematic areas like "Science for Global Health") or more specific (lists of individual grants awarded) but I may be missing something.

Maybe, though given the unilateralist's curse and other issues of the sort discussed by 80k here I think it might not be good for many people currently on the fence about whether to found EA orgs/megaprojects to do so. There might be a shortage of "good" orgs but that's not necessarily a problem you can solve by throwing founders at it.

It also often seems to me that orgs with the right focus already exist (and founding additional ones with the same focus would just duplicate effort) but are unable to scale up well, and so I suspect "management capacity" is...

> the primary constraint has shifted from money to people

This seems like an incorrect or at best misleading description of the situation. EA plausibly now has more money than it knows what to do with (at least if you want to do better than GiveDirectly) but it also has more people than it knows what to do with. Exactly what the primary constraint is now is hard to know confidently or summarise succinctly, but it's pretty clearly neither of those. (80k discusses some of the issues with a "people-constrained" framing here.) In general large-scale problems that...

I really enjoyed this post, but have a few issues that make me less concerned about the problem than the conclusion would suggest:

- Your dismissal in section X of the "weight by simplicity" approach seems weak/wrong to me. You treat it as a point against such an approach that one would pay to "rearrange" people from more complex to simpler worlds, but that seems fine actually, since in that frame it's moving people from less likely/common worlds to more likely/common ones.

- I lean towards conceptions of what makes a morally relevant agent (or experience) u...

It seems like this issue is basically moot now? Back in 2016-2018 when those OpenPhil and Karnofsky posts were written there was a pretty strong case that monetary policymakers overweighted the risks of inflation relative to the suffering and lost output caused by unemployment. Subsequently there was a political campaign to shift this (which OpenPhil played a part in). As a result, when the pandemic happened the monetary policy response was unprecedentedly accommodative. This was good and made the pandemic much less harmful than it would have been otherwise...

A major case where this is relevant is funding community-building, fundraising, and other "meta" projects. I agree that "just imagine there was a (crude) market in impact certificates, and take the actions you guess you'd take there" is a good strategy, but in that world where are organizations like CEA (or perhaps even Givewell) getting impact certificates to sell? Perhaps whenever someone starts a project they grant some of the impact equity to their local EA group (which in turn grants some of it to CEA), but if so the fraction granted would probably be small, whereas people arguing for meta often seem to be acting like it would be a majority stake.

Unfortunately this competes with the importance of interventions failing fast. If it's going to take several years before the expected benefits of an intervention are clearly distinguishable from noise, there is a high risk that you'll waste a lot of time on it before finding out it didn't actually help, you won't be able to experiment with different variants of the intervention to find out which work best, and even if you're confident it will help you might find it infeasible to maintain motivation when the reward feedback loop is so long.

This request is extremely unreasonable and I am downvoting and replying (despite agreeing with your core claim) specifically to make a point of not giving in to such unreasonable requests, or allowing a culture of making them with impunity to grow. I hope in the future to read posts about your ideas that make your points without such attempts to manipulate readers.

It seems unlikely that the distribution of 100x-1000x impact people is *exactly* the same between your "network" and "community" groups, and if it's even a little bit biased towards one or the other the groups would wind up very far from having equal average impact per person. I agree it's not obvious which way such a bias would go. (I do expect the community helps its members have higher impact compared to their personal counterfactuals, but perhaps e.g. people are more likely to join the community if they are disappointed wi...
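A rough back-of-the-envelope sketch of why a small bias matters so much under this kind of heavy-tailed assumption. The figures are hypothetical: a "typical" person has impact 1, a rare high-impact person has 1000x that, and the two groups differ only in whether 1% or 2% of their members are high-impact.

```python
def average_impact(share_high, high_multiplier=1000.0, typical=1.0):
    """Mean impact per person when a small share of people have outsized impact."""
    return (1 - share_high) * typical + share_high * typical * high_multiplier


# Hypothetical shares of 1000x people in each group.
network_avg = average_impact(0.01)
community_avg = average_impact(0.02)
ratio = community_avg / network_avg

print(f"network average:   {network_avg:.2f}")
print(f"community average: {community_avg:.2f}")
print(f"ratio:             {ratio:.2f}")
```

With these assumed inputs, a one-percentage-point difference in the share of high-impact people nearly doubles the average impact per person, so the averages are driven almost entirely by where the tail sits. Which group the bias actually favors is exactly the open question.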

> I’ve made the diagram assuming equal average impact whether someone is in the ‘community’ or ‘network’ but even if you doubled or tripled the average amount of impact you think someone in the community has there would still be more overall impact in the network.

People in EA regularly talk about the most effective community members having 100x or 1000x the impact of a typical EA-adjacent person, with impact following a power law distribution. For example, 80k attempts to measure "impact-adjusted significant plan chan...

The specific alternatives will vary depending on the path in question and hard to predict things about the future. But if someone spends 5-10 years building career capital to get an operations job at an EA org, and then it turns out that field is extremely crowded with the vast majority of applicants unable to get such jobs, their alternatives may be limited to operations jobs at ineffective charities or random businesses, which may leave them much worse off (both personally and in terms of impact) than if they'd never encountered advice to go into op...

That applies to most of the deprecated pages, but doesn't apply to the quiz, because its results are based on the database of existing career reviews. The fact that it gives the same results for nearly everybody is the result of new reviews being added to that database since it was written/calibrated. It's not actually possible to get it to show you the results it would have shown you back in 2016, the last time it was at all endorsed.

These days 80k explicitly advises against trying to build flexible career capital (though I think they're probably wrong about this).

Note that the "policy-oriented government job" article is specific to the UK. Some of the arguments about impact may generalize, but the civil service in the UK in general has more influence on policy than in the US or some other countries, and the more specific information about paths in, etc. doesn't really generalize at all.

On career capital: I find it quite hard to square your comments that "Most readers who are still early in their careers must spend considerable time building targeted career capital before they can enter the roles 80,000 Hours promotes most" and "building career capital that's relevant to where you want to be in 5 or 10 years is often exactly what you should be doing" with the comments from the annual report that "You can get good career capital in positions with high immediate impact (especially problem-area specific career c...

Yeah, my response was directed at cole_haus suggesting the quiz as an example of 80k currently providing personalized content, when in fact it's pretty clearly deprecated, unmaintained, and no longer linked anywhere prominent within the site. (Though I'm not sure what purpose keeping it up at all serves at this point.)

Yeah, I hadn't realized it was more or less deprecated. (The page itself doesn't seem to give any indication of that. Edit: Ah, it does. I missed the second paragraph of the sidenote when I quickly scanned for some disclaimer.)

Also, unfortunately, it's apparently the first sublink under the 80,000 Hours site on Google if you search for 80,000 Hours.

Last I checked, the career quiz recommends almost everyone (including everyone "early" and "middle" career, no matter their other responses) either "Policy-oriented [UK] government jobs" or "[US] Congressional staffer", so it hardly seems very reflective of actually believing that the "list" is very different for different people.

- The posts I linked on whether it's worth pursuing flexible long-term career capital (yes says the Career Guide page, no says a section buried in an Annual Report, though they finally added a note/link from the yes page to the no page a year later when I pointed it out to them) are one example.

- The "clarifying talent gaps" blog post largely contradicts an earlier post still linked from the "Research > Overview (start here)" page expressing concerns about an impending shortage of direct workers in general, as well as Key Articles s

Hi lexande —

Re point 1, as you say the career capital career guide article now has the disclaimer about how our views have changed at the top. We're working on a site redesign that will make the career guide significantly less prominent, which will help address the fact that it was written in 2016 and is showing its age. We also have an entirely new summary article on career capital in the works - unfortunately this has taken a lot longer to complete than we would like, contributing to the current unfortunate situation.

Re point 2, the "clarifying talent ga

1) If the way you talk about career capital here is indicative of 80k's current thinking then it sounds like they've changed their position AGAIN, mostly reversing their position from 2018 (that you should focus on roles with high immediate impact or on acquiring very specific narrow career capital as quickly as possible) and returning to something more like their position from 2016 (that your impact will mostly come many years into your career so you should focus on building career capital to be in the best position then). It's possible the...

> If the way you talk about career capital here is indicative of 80k's current thinking then it sounds like they've changed their position AGAIN, mostly reversing their position from 2018

I didn't mean for what I said to suggest a departure from 80,000 Hours' current position on career capital. They still think (and I agree) that it's better for most people to have a specific plan for impact in mind when they're building career capital, instead of just building 'generic' career capital (generally transferable skills), and that in the best case that means g

Most people don't have the skills required to manage themselves, start their own org, organize their own event, etc; a large fraction of people need someone else to assign them tasks to even keep their own household running. Helping people get better at management skills (at least for managing themselves, though ability to manage others as well would be ideal) could potentially be very high-value. There don't seem to be many good resources on how to do this currently.

Can you say more about your experiences as a teacher and as a policy professional? What did you have to do to get those jobs, and what were the expectations once you had them? What was the pay like? Were you able to observe the interview/hiring process for anybody else being hired for the same jobs? This is exactly the kind of concrete info I'm hoping to find more of.

I entered the UK Civil Service this year. I work on Fuel Poverty Policy - I think of ways to make it easier for the poorest people in the UK to heat their homes. I think the 80k article about it is actually pretty accurate, but let me know if you have any other questions about it. https://80000hours.org/career-reviews/policy-oriented-civil-service-uk/

Teaching was my first career. I entered by doing a Bachelor of Education degree in Canada and then being recruited to work in the UK, because the UK is struggling to fill teaching vacancies. You can usually enter teaching by doing a 1-2 year course after your Bachelor degree as well. Some countries have a program like Teach First or Teach for America that will let you straight into the classroom.

In Canada, teaching is very competitive, but in the UK many schools are struggling to recruit enough teachers. That meant it wasn't too difficult to get a job offe

I'm not convinced it's the impact-maximizing approach either. Some people who could potentially win the career "lottery" and have a truly extraordinary impact might reasonably be put off early on by advice that doesn't seem to care adequately about what happens to them in the case where they don't win.

> We encourage people to make a ranking of options, then their back-up plan B is a less competitive option than your plan A that you can switch into if plan A doesn’t work out. Then Plan Z is how to get back on your feet if lots goes wrong. We lead people through a process to come up with their Plan B and Plan Z in our career planning tool.

This tool provides a good overall framework for thinking about career choices, but my answer to many of its questions is "I don't know, that's why I'm asking you". On the specific subject of making...

I agree it's better to give the most concrete suggestions possible.

As I noted right below this quote, we do often provide specific advice on ‘Plan B’ options within our career reviews and priority paths (i.e. nearby options to pivot into).

Beyond that and with Plan Zs, I mentioned that they usually depend a great deal on the situation and are often covered by existing advice, which is why we haven’t gone into more detail before. I’m skeptical that what EAs most need is advice on how to get a job at a deli. I suspect the real problem might be more an issue of tone or implicit comparisons or something else. That said, I’m not denying this part of the site couldn’t be greatly improved.

Thank you very much for your thoughtful replies.

It seems entirely reasonable if 80k wants to focus on a "narrower" vision of understanding the most pressing skill bottlenecks and then searching for the best people to fill them. (This does seem probably more important than broad social impact career advice that starts from people and tries to lead them to higher-impact jobs, though I have some doubts about its relative tractability.) As I said in my last paragraph, I think my hope for better broad EA career advice may be better met by a new site/or...

> One point of factual disagreement is that I think good general career advice is in fact quite neglected.

I definitely agree with you that existing career advice usually seems quite bad. This was one of the factors that motivated us to start 80,000 Hours.

> it seems like probably I and others disappointed with the lack of broader EA career advice should do the research and write some more concrete posts on the topic ourselves.

If we thought this was good, we would likely cross-post it or link to it. (Though we’ve found working with freelance researchers tough in...

I think this actually understates the problem. I studied maths at Cambridge (with results roughly in the middle of my cohort there), and my intuitions informing the above concerns about 80k are in part based on watching my similarly-situated friends there struggle to get any kind of non-menial job after graduating. I'm a 'normal Google programmer' in the US now (after a long stint as a maths PhD student) but none of the others I've kept in touch with from Cambridge make 'even' $200k (though perhaps some of those I lost touch w...

Please comment if you have any object-level suggestions of the sort of advice called for in points 1 and 2. For point 1, I think the book "Cracking the Coding Interview" (which, in fairness, 80k does recommend in the relevant career review) is a decent source for understanding the necessary and sufficient conditions for getting a software engineering job, but my attempts to find similarly concrete information for other career paths have mostly been unsuccessful. For point 2, Scott Alexander's Floor Employment post is one possible place to st...

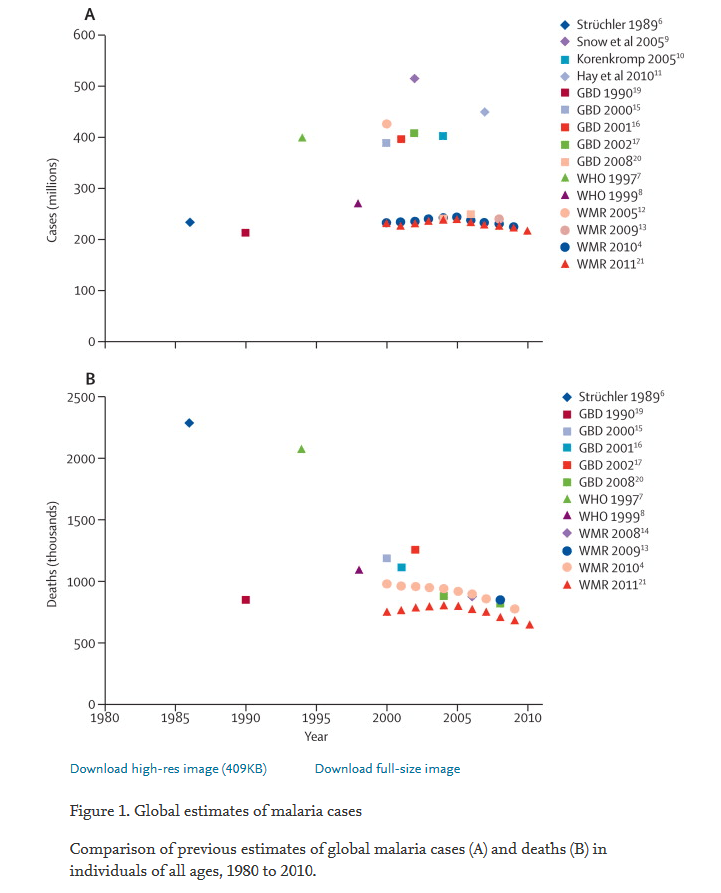

Note that those graphs of malaria cases and malaria deaths by year effectively have pretty wide error bars, with diferent sources disagreeing by a lot:

(source)

Presumably measurement methodology has improved some since 2010 but the above still suggests that the underlying reality is difficult enough to measure that one should not be too confident in a "malaria deaths have flatlined since 2015" narrative. But of course this supports your overall point regarding how much uncertainty there is about everything in this sort of context.