All of mariushobbhahn's Comments + Replies

I touched on this a little bit in the post. I think it really depends on a couple of assumptions.

1. How much management would they actually get to do in that org? At the current pace of hiring, it's unlikely that someone could build a team as quickly as you can with a new org.

2. How different is their agenda from existing ones? What if they have an agenda that is different from any agenda that is currently done in an org? Seems hard/impossible to use the management skills in an existing org then.

3. How fast do we think the landscape has to grow? If we thin...

I have heard mixed messages about funding.

From the many people I interact with and also from personal experience it seems like funding is tight right now. However, when I talk to larger funders, they typically still say that AI safety is their biggest priority and that they want to allocate serious amounts of money toward it. I'm not sure how to resolve this but I'd be very grateful to understand the perspective of funders better.

I think the uncertainty around funding is problematic because it makes it hard to plan ahead. It's hard to do independent research, start an org, hire, etc. If there was clarity, people could at least consider alternative options.

(My own professional opinions, other LTFF fund managers etc might have other views)

Hmm I want to split the funding landscape into the following groups:

- LTFF

- OP

- SFF

- Other EA/longtermist funders

- Earning-to-givers

- Non-EA institutional funders.

- Everybody else

LTFF

At LTFF our two biggest constraints are funding and strategic vision. Historically it was some combination of grantmaking capacity and good applications but I think that's much less true these days. Right now we have enough new donations to fund what we currently view as our best applications for some m...

The comment I was referring to was in fact yours. After re-reading your comment and my statement, I think I misunderstood your comment originally. I thought it was not only praising the process but also the content itself. Sorry about that.

I updated my comment accordingly to indicate my misunderstanding.

The "all the insights" was not meant as a literal quote but more as a cynical way of saying it. In hindsight, this is obviously bound to be misunderstood and I should have phrased it differently.

I'll only briefly reply because I feel like I've said most of what I wanted to say.

1) Mostly agree but that feels like part of the point I'm trying to make. Doing good research is really hard, so when you don't have a decade of past experience it seems more important how you react to early failures than whether you make them.

2) My understanding is that only about 8 people were involved with the public research outputs and not all of them were working on these outputs all the time. So the 1 OOM in contrast to ARC feels more like a 2x-4x.

3) Can't...

Hmm, yeah. I actually think you changed my mind on the recommendations. My new position is something like:

1. There should not be a higher burden on anti-recommendations than pro-recommendations.

2. Both pro- and anti-recommendations should come with caveats and conditionals whenever they make a difference to the target audience.

3. I'm now more convinced that the anti-recommendation of OP was appropriate.

4. I'd probably still phrase it differently than they did but my overall belief went from "this was unjustified" to "they should have used diffe...

Meta: Thanks for taking the time to respond. I think your questions are in good faith and address my concerns, I do not understand why the comment is downvoted so much by other people.

1. Obviously output is a relevant factor to judge an organization among others. However, especially in hits-based approaches, the ultimate thing we want to judge is the process that generates the outputs to make an estimate about the chance of finding a hit. For example, a cynic might say "what has ARC-theory achieved so far? They wrote some nice framings of the problem,...

We appreciate you sharing your impression of the post. It’s definitely valuable for us to understand how the post was received, and we’ll be reflecting on it for future write-ups.

1) We agree it's worth taking into account aspects of an organization other than their output. Part of our skepticism towards Conjecture – and we should have made this more explicit in our original post (and will be updating it) – is the limited research track record of their staff, including their leadership. By contrast, even if we accept for the sake of argument that ARC has pr...

Maybe we're getting too much into the semantics here but I would have found a headline of "we believe there are better places to work at" much more appropriate for the kind of statement they are making.

1. A blanket unconditional statement like this seems unjustified. Like I said before, if you believe in CoEm, Conjecture is probably the right place to work.

2. Where does the "relatively weak for skill building" come from? A lot of their research isn't public, a lot of engineering skills are not very tangible from the outside, etc. Why didn't...

- Meta: maybe my comment on the critique reads stronger than intended (see comment with clarifications) and I do agree with some of the criticisms and some of the statements you made. I'll reflect on where I should have phrased things differently and try to clarify below.

- Hits-based research: Obviously results are one evaluation criterion for scientific research. However, especially for hits-based research, I think there are other factors that cannot be neglected. To give a concrete example, if I was asked whether I should give a unit under your supervi

On hits-based research: I certainly agree there are other factors to consider in making a funding decision. I'm just saying that you should talk about those directly instead of criticizing the OP for looking at whether their research was good or not.

(In your response to OP you talk about a positive case for the work on simulators, SVD, and sparse coding -- that's the sort of thing that I would want to see, so I'm glad to see that discussion starting.)

On VCs: Your position seems reasonable to me (though so does the OP's position).

On recommendations: Fwiw I ...

Some clarifications on the comment:

1. I strongly endorse critique of organisations in general and especially within the EA space. I think it's good that we as a community have the norm to embrace critiques.

2. I personally have my criticisms of Conjecture and my comment should not be seen as "everything's great at Conjecture, nothing to see here!". In fact, my main criticisms, of the leadership style and of CoEm not being the most effective thing they could do, are also represented prominently in this post.

3. I'd also be fine with the authors of this post say...

I personally have no stake in defending Conjecture (In fact, I have some questions about the CoEm agenda) but I do think there are a couple of points that feel misleading or wrong to me in your critique.

1. Confidence (meta point): I do not understand where the confidence with which you write the post (or at least how I read it) comes from. I've never worked at Conjecture (and presumably you didn't either) but even I can see that some of your critique is outdated or feels like a misrepresentation of their work to me (see below). For example, making re...

We appreciate your detailed reply outlining your concerns with the post.

Our understanding is that your key concern is that we are judging Conjecture based on their current output, whereas since they are pursuing a hits-based strategy we should expect in the median case for them to not have impressive output. In general, we are excited by hits-based approaches, but we echo Rohin's point: how are we meant to evaluate organizations if not by their output? It seems healthy to give promising researchers sufficient runway to explore, but $10 mill...

You can see this already in the comments where people without context say this is a good piece and thanking you for "all the insights".

FWIW I Control-F'd for "all the insights" and did not see any other hit on this page other than your comment.

EDIT 2023/06/14: Hmm, so I've since read all the comments on this post on both the EAF and LW[1], and I don't think your sentence was an accurate paraphrase for any of the comments on this post?

For context, the most positive comment on this post is probably mine, and astute readers might note that my comment wa...

Could you say a bit more about your statement that "making recommendations such as . . . . 'alignment people should not join Conjecture' require an extremely high bar of evidence in my opinion"?

The poster stated that there are "more impactful places to work" and listed a number of them -- shouldn't they say that if they believe it is more likely than not true? They have stated their reasons; the reader can decide whether they are well-supported. The statement that Conjecture seems "relatively weak for skill building" seems supported by reasonable grounds. ...

I'm not very compelled by this response.

It seems to me you have two points on the content of this critique. The first point:

I think it's bad to criticize labs that do hits-based research approaches for their early output (I also think this applies to your critique of Redwood) because the entire point is that you don't find a lot until you hit.

I'm pretty confused here. How exactly do you propose that funding decisions get made? If some random person says they are pursuing a hits-based approach to research, should EA funders be obligated to fund them?

Presuma...

What do you think a better way of writing would have been?

Just flagging uncertainties more clearly or clarifying when he is talking about his subjective impressions?

Also, while that doesn't invalidate your criticism, I always read the most important century as something like "Holden describes in one piece why he thinks we are potentially in a very important time. It's hard to define what that means exactly but we all kind of get the intention. The arguments about AI are hard to precisely make because we don't really understand AI and its implic...

I don’t really understand the response of asking me what I would have done. I would find it very tricky to put myself in the position of someone who is writing the posts but who also thinks what I have said I think.

Otherwise, I don’t think you’re making unreasonable points, but I do think that in the piece itself I already tried to directly address much of what you’re saying.

Ie You talk about his ideas being hard to define or hard to precisely explain but that we all kind of get the intention. Among other things, I write about how his vagueness allows him ...

Just to clarify, we are not officially coordinating this or anything. We were just brainstorming ideas. And so far, nothing has been set up intentionally by us.

But some cities and regions just grew organically very quickly in the last half year. Mexico, Prague, Berlin and the Netherlands come to mind as obvious examples.

Usually just asking a bunch of simple questions like "What problem is your research addressing?", "why is this a good approach to the problem?", "why is this problem relevant to AI safety?", "How does your approach attack the problem?", etc.

Just in a normal conversation that doesn't feel like an interrogation.

No, I think there is a phase where everyone wishes they had renewables but can't yet get them, so they still use fossil fuels. I think energy production will stay roughly constant or increase, but the way we produce it will change more slowly than we would have hoped.

I don't think we will have a serious decline in energy production.

I think narrow AIs won't cause mass unemployment but more general AIs will. I also think that objectively that isn't a problem at this point anymore because AIs can do all the work, but I think it will take at least another decade before humans can accept that.

The narrative that work is good because you contribute something to society and so on is pretty deeply engrained, so I guess lots of people won't be happy after being automated away.

I think narrow AIs won't cause massive unemployment, but the more general they get, the harder it will be to justify using humans instead of ChatGPT++.

I think education will have to change a lot because students could literally have their homework done entirely by ChatGPT and get straight A's all the time.

I guess it's something like "until class X you're not allowed to use a calculator, and then after that you can" but for AI. So it will be normal that you can just print an essay in 5 seconds similar to how you can do complicated math that would usually take hours on paper in 5 seconds on a calculator.

I'm obviously heavily biased here because I think AI does pose a relevant risk.

I think the arguments that people made were usually along the lines of "AI will stay controllable; it's just a tool", "We have fixed big problems in the past, we'll fix this one too", "AI just won't be capable enough; it's just hype at the moment and transformer-based systems still have many failure modes", "Improvements in AI are not that fast, so we have enough time to fix them".

However, I think that most of the dismissive answers are based on vibes rather than sophisticated responses to the arguments made by AI safety folks.

I don't think these conversations had as much impact as you suggest and I think most of the stuff funded by EA funders has decent EV, i.e. I have more trust in the funding process than you seem to have.

I think one nice side-effect of this is that I'm now widely known as "the AI safety guy" in parts of the European AIS community and some people have just randomly dropped me a message or started a conversation about it because they were curious.

I was working on different grants in the past but this particular work was not funded.

I think it's a process and just takes a bit of time. What I mean is roughly "People at some point agreed that there is a problem and asked what could be done to solve it. Then, often they followed up with 'I work on problem X, is there something I could do?'. And then some of them tried to frame their existing research to make it sound more like AI safety. However, if you point that out, they might consider other paths of contributing more seriously. I expect most people to not make substantial changes to their research though. Habits and incentives are really strong drivers".

I have talked to Karl about this and we both had similar observations.

I'm not sure if this is a cultural thing or not but most of the PhDs I talked to came from Europe. I think it also depends on the actor in the government, e.g. I could imagine defense people being more open to existential risk as a serious threat. I have no experience in governance, so this is highly speculative and I would defer to people with more experience.

Probably not in the first conversation. I think there were multiple cases in which a person thought something like "Interesting argument, I should look at this more" after hearing the X-risk argument and then over time considered it more and more plausible.

But like I state in the post, I think it's not reasonable to start from X-risks and thus it wasn't the primary focus of most conversations.

I thought about the topic a bit at some point and my thoughts were

- The strength of the strong upvote depends on the karma of the user (see other comment)

- Therefore, the existence of a strong upvote implies that users that have gained more Karma in the past, e.g. because they write better or more content, have more influence on new posts.

- Thus, the question of the strong upvote seems roughly equivalent to the question "do we want more active/experienced members of the community to have more say?"

- Personally, I'd say that I currently prefer this system over its

I agree that wind and solar could lead to more land use if we base our calculations on the efficiency of current or previous solar capabilities. But under the current trend, land use will decrease exponentially as capabilities increase exponentially, so I don't expect it to be a real problem.

I don't have a full economic model for my claim that the world economy is interconnected, but stuff like the supply-chain crisis or Evergreen provided some evidence in this direction. I think this was not true at the time of the industrial revolution but is now. ...

Thanks for the write-up. I upvoted because I think it lays out the arguments clearly and explains them well but I disagree with most of the arguments.

I will write most of this in more detail in a future post (some of them can already be seen here) but here are the main disagreements:

1. We can decouple way more than we currently do: more value will be created through less resource-intensive activities, e.g. software, services, etc. Absolute decoupling seems impossible but I don't think the current rate of decoupling is anywhere near the realistically ...

A couple of small points:

Once renewable energy is abundant most other problems seem to be much easier to solve, e.g. protecting biodiversity if you don't need the space for coal mines.

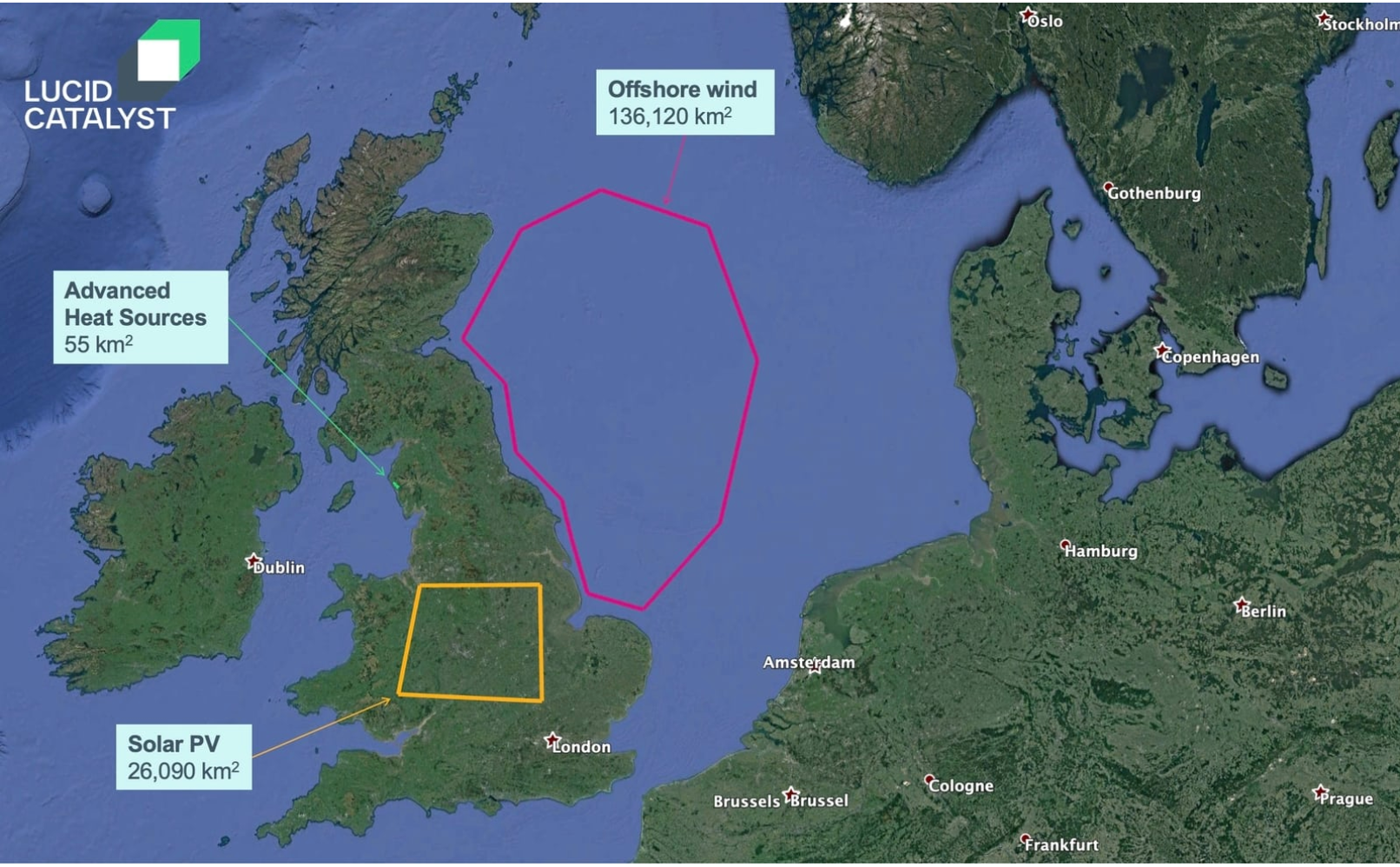

This may be a nitpick but actually the most popular renewables (e.g. wind and solar) need so much land that they could very feasibly be/already are a threat to biodiversity and land use. See image below for an illustration, from this work from TerraPraxis.

I think that while this is hard, the person I want to be would want to be replaced in both cases you describe.

a) Even if you stay single, you should want to be replaced because it would be better for all three involved. Furthermore, you probably won't stay single forever and will find a new (potentially better fitting) partner.

b) If you had very credible evidence that someone else was not hired who is much better than you, you should want to be replaced IMO. But I guess it's very implausible that you can make this decision better since you have way less information than the university or employer. So this case is probably not very applicable in real life.

I think this is a very important question that should probably get its own post.

I'm currently very uncertain about it but I imagine the most realistic scenario is a mix of a lot of different approaches that never feels fully stable. I guess it might be similar to nuclear weapons today but on steroids, i.e. different actors have control over the technology, there are some norms and rules that most actors abide by, there are some organizations that care about non-proliferation, etc. But overall, a small perturbation could still blow up the system. ...

Agree. I guess most EA orgs have thought about this, some superficially and some extensively. If someone feels like they have a good grasp on these and other management/prioritization questions, writing a "Basic EA org handbook" could be pretty high impact.

Something like "please don't repeat these rookie mistakes" would already save thousands of EA hours.

Max Dalton (CEA) gave a talk about this at EAG London 2021 - help find your dream colleague, going through the pros and cons and giving some arguments for using some of your time for mentoring.

Right. There are definitely some helpful heuristics and analogies but I was wondering if anyone took a deep dive and looked at research or conducted their own experiments. Seems like a potentially pretty big question for EA orgs and if some strategies are 10% more effective than others (measured by output over time) it could make a big difference to the movement.

We have a chapter in Tübingen: https://eatuebingen.wordpress.com/

We speak English when one or more people have a preference for it, which is most of the time.

TL;DR: At least in my experience, AISC was pretty positive for most participants I know and it's incredibly cheap. It also serves a clear niche that other programs are not filling and it feels reasonable to me to continue the program.

I was a participant in the 2021/22 edition. Some thoughts that might make it easier for funders/donors to decide.

1. Impact-per-dollar is probably pretty good for the AISC. It's incredibly cheap compared to most other AI field-building efforts and scalable.

2. I learned a bunch during AISC and I did enjoy it. It influenced m...

I'm curious if you, or the other past participants you know who had a good experience with AISC, are in a position to help fill the funding gap AISC currently has. Even if you (collectively) can't fully fund the gap, I'd see that as a pretty strong signal that AISC is worth funding. Or, if you do donate but you prefer other giving opportunities instead (whether in AIS or other cause areas), I'd find that valuable to know too.

~50x is a big difference, and I notice the post says:

Multiplying that number (which I'm agnostic about) by 50 gives $600k-$1.5M USD. Does your ~50x still seem accurate in light of this?