All of more better's Comments + Replies

Thanks for sharing a summary of the content in addition to the link! Super helpful as I do not have streaming right now and am trying to avoid hyperlinks.

It makes sense that politicians would say this kind of thing leading up to an election. However, the futures that these 2 candidates are proposing are wildly different. I know that I am not alone (neither here on the forum nor IRL) in believing that this election will be a tipping point and will have implications that ripple far beyond just the US.

Thanks for sharing:

Curious as to your take on this: If this forum existed prior to WW2 and there was a post suggesting that it was imperative to prevent Hitler from gaining power, would you have felt that post should not have been made?

I do agree that exceptions can be a slippery slope, and certainly don’t think all US elections warrant exception. This one has potential to accelerate harm globally and is occurring in one of the most powerful countries in the world. US citizens can influence its outcome. This election will have global ramifications spanning...

Interesting, thanks for sharing!

I can see how that may be the case and I appreciate your feedback. It made me think.

I believe there can be value in keeping a space politically neutral, but that there are circumstances that warrant exceptions and that this is one such case. If Trump wins, I believe that moral progress will unravel and several cause areas will be rendered hopeless.

If there had been a forum in existence before WW2, I wonder if posts expressing concerns about Hitler or inquiring about efforts to counter actions of Nazis would have been downvoted. I certainly hope not.

This post captures some of my feelings for why I don't think we should make exceptions for US elections:

https://www.benlandautaylor.com/p/the-four-year-locusts

See also:

https://www.lesswrong.com/posts/9weLK2AJ9JEt2Tt8f/politics-is-the-mind-killer

Can you all help me understand why this is getting downvoted? At the moment the comment’s karma is -2, though 6 people have agreed and 3 have disagreed.

Is the downvoting likely occurring because:

A) I shouldn’t have written this as a response to the above post.

B) I did not provide sufficient rationale.

C) You prefer Trump over Biden.

D) You don’t believe electing Trump would threaten national and international security / increase cumulative suffering.

E) You believe that voting for a write-in or third-party candidate has a real chance of being successful.

F) Something else (I’d be grateful if you specify).

Thanks for the feedback.

I am so grateful to Moskovitz and Tuna for making these donations.

This coming November is, technically, a US election. That being said, if Trump were to win, it would lead to worsened security and immense suffering nationally and internationally. The US cannot let Trump become President and must do everything possible to prevent this from happening (barring illegal or unethical actions).

From my POV, supporting Biden by ensuring he gets the votes he needs seems like the only viable option. If you have other ideas please share them!

This election is absolute...

@niplav Interesting take; thanks for the detailed response.

Technically, I think that AI safety as a technical discipline has no "say" in who the systems should be aligned with. That's for society at large to decide.

So, if AI safety as a technical discipline should not have a say on who systems should be aligned with, but they are the ones aiming to align the systems, whose values are they aiming to align the systems with?

Is it naturally an extension of the values of whoever has the most compute power, best engineers, and most data?

I love the id...

@PeterSlattery I want to push back on the idea about "regular" movement building versus "meta". It sounds like you have a fair amount of experience in movement building. I'm not sure I agree that you went meta here, but if you had, I am not convinced that would be a bad thing, particularly given the subject matter.

I have only read one of your posts so far, but appreciated it. I think you are wise to try and facilitate the creation of a more cohesive theory of change, especially if inadvertently doing harm is a significant risk.

As someone on the p...

It is specific to the human-generated text.

The current soft consensus at Epoch is that data limitations will probably not be a big obstacle to scaling compared to compute, because we expect generative outputs and data efficiency innovation to make up for it.

This is more based on intuition than rigorous research though.

I have only dabbled in ML, but this sounds like he may just be testing to see how generalizable the models are, i.e. evaluating whether they are overfitting or underfitting the training data based on their performance on test data (data that hasn’t been seen by the model and was withheld from the training data). This is often done to tweak the model to improve its performance.
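
To make the train/test idea concrete, here is a minimal sketch. Everything in it is an assumption for illustration: the toy dataset, the helper names, and the choice of a 1-nearest-neighbor model (picked only because it memorizes its training data, which makes the overfitting signature easy to see). It is not a claim about what was actually done in the work being discussed.

```python
import random


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle the rows and withhold a fraction as test data the model never sees."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def nearest_neighbor_predict(train, x):
    """Hypothetical 'memorizing' model: return the y of the closest training x."""
    return min(train, key=lambda row: abs(row[0] - x))[1]


def mean_squared_error(train, rows):
    """Average squared prediction error of the model (fit on `train`) over `rows`."""
    errors = [(nearest_neighbor_predict(train, x) - y) ** 2 for x, y in rows]
    return sum(errors) / len(errors)


# Toy data (an assumption): y = 2x plus Gaussian noise.
noise = random.Random(42)
data = [(float(x), 2.0 * x + noise.gauss(0.0, 3.0)) for x in range(40)]

train, test = train_test_split(data)
train_error = mean_squared_error(train, train)  # error on data the model has seen
test_error = mean_squared_error(train, test)    # error on withheld data

print(f"train MSE: {train_error:.2f}")
print(f"test MSE:  {test_error:.2f}")
```

The training error here is zero by construction, because each training point is its own nearest neighbor. A test error well above the training error is the usual sign of overfitting; high error on both would suggest underfitting instead.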

I agree. This seems like an important problem.

Several existing technologies can wreak havoc on epistemics / perception of the world and worsen polarization. Navigating truth vs. fiction will get more difficult. This will continue to be a problem for elections and may sow seeds for even bigger global problems.

Anyone know what efforts exist to combat this?

Is this a subcategory of AI safety?

Thanks for bringing this to light. I think awareness around deepfakes really does need to be considered more.

So maybe spamming content for significant figures doing whacky things is effective for updating people's models for the probability of a deepfake.

I would be slightly concerned about spamming people with deepfakes. I don't know if the average adult knows what a deepfake is. If spammed with deepfakes, people might think the world is even crazier than it actually is and I think that could get ugly. I think a more overtly educational or inte...

I appreciate this initiative, @Buhl. I went to the Google Form and noticed it requires permissions to update. There are a lot of entries on it, and it looks like the last update was January 2022, pre-FTX crash. Not sure if others felt this way, but personally this made me question whether reading through the existing ideas / coming up with new ones would be a good use of time versus parasocial.

Is your goal to find out which ideas have the greatest support on the forum, to generate more ideas, to find people interested in working on particular ideas, ...

@Nathan Young This is interesting, but I'm struggling to understand how it is helpful or would change things. Can you help me understand?

Thanks for sharing, @Geoffrey Miller and @DavidNash.

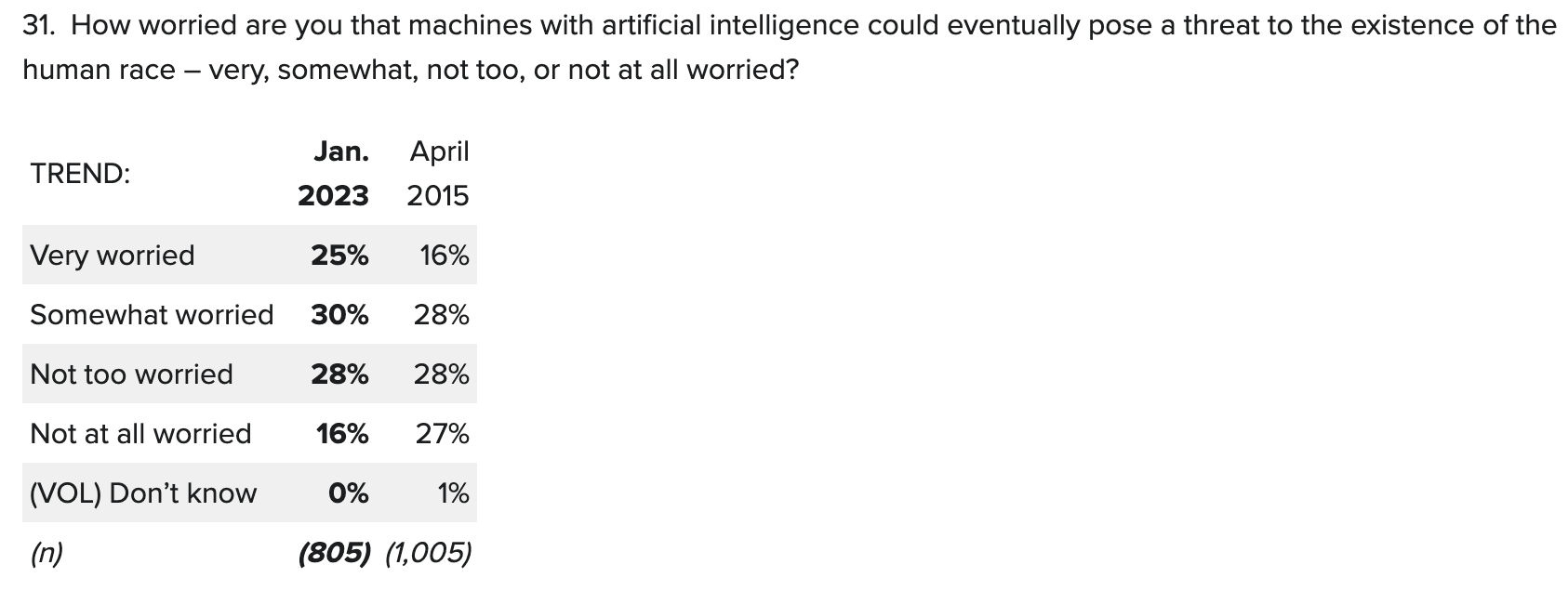

The results of this study are interesting for sure. Examining them more carefully makes me wonder if there is a significant priming effect in play in both the 2015 and 2023 polls. This would not explain the 11-percentage-point increase in participants worried about AI eventually posing a threat to the existence of the human race, though it potentially could have contributed, since there were some questions added to the 2023 poll that weren’t in the 2015 one.

I was surprised that in 2023, only 60% of par...

Thanks, I'm seeing that here, too:

"It should be noted that although creatine is found mostly in animal products, the creatine in most supplements is synthesized from sarcosine and cyanamide [39,40], does not contain any animal by-products, and is therefore “vegan-friendly.” The only precaution is that vegans should avoid creatine supplements delivered in capsule form because the capsules are often derived from gelatin and therefore could contain animal by-products."

Thanks for posting this. I think it's valuable to pay attention to what drives shifts in perception.

I think Ezra Klein does a good job appealing to certain worldviews and making what may initially seem abstract feel more relatable. To me personally this piece was even more relatable than the one cited.

In the piece you cited I think it's helpful that he:

- calls out the "weirdness"

- acknowledges the fact that people that work on z are likely to think z is very important but identifies as someone who does not work on z

- doesn't go into the weeds with theories

I t...

It might have increased recently, but even in 2015, one survey found 44% of the American public would consider AI an existential threat. It's now 55%.

Glad to see this thread. It precipitated several questions, which I am happy to post separately if you’d like.

- Has anyone found a good source of vegan creatine?

- Has anyone calculated the monthly cost for their supplements?

- Does anyone try and avoid or at least minimize highly processed / ultra-processed foods? I’ve noticed more studies over the past several years around ill effects of such foods. It’s one reason I don’t consume most meat substitutes very often.

I wonder if concerns like the following act as barriers to people going vegan, or even r...

Really love this idea, and would encourage you to evaluate additional barriers to getting vaccines in arms. Maybe this could be via basic surveys or interviews, or via browsing some medical publications and talking to vaccine clinic organizers.

Also curious if there is an existing (or soon-to-exist) nonprofit that might be able to assist financially. Global vaccine shortage and insufficient public health tools amidst a pandemic seems like an important, tractable cause. I’d be interested in helping out if it makes sense.

Personally I’d be super excited about...

:) I wouldn't be surprised if this is a thing, and stand by my bad puns. Didn't realize you were joking, but also didn't judge the corgi hotel other than feeling slightly concerned about the possibility of animal exploitation.

I'm sorry to hear that others experience anxiety around finding lodging too, and think surveying for potential value of group bookings might be good.

Tim: Not sure. Didn't apply to this year's Prague event because I couldn't make the dates work. Glad you found a place to stay!

Irena: I was in Prague for a short time when I was little and remember it as a thriving cultural hub that I wanted to spend more time in. I think you're right on about people wanting to experience real life and travel to awesome places again, after prolonged lack of travel, arts/culture, in-person human interaction, etc. That's great that you made a public transport doc! I agree that there would likely be significant work involved ...

Thank you! Good question. I think Charles' consideration makes sense here, though I'm not super familiar with what the location-based EA ecosystem looks like.

P.S. Charles, I've found many of your posts super insightful. Maybe not many people talk to you (me neither, unless I initiate the convo!), but perhaps more people are listening than you think.

Fair point.

OTOH, if Trump wins or “wins” in 2024, I’m honestly not sure a legitimate election would be possible in 2028, in which case 2024 would have been the most important.

Hoping 2024 is legitimate. There are justified concerns that Trump will not accept defeat (assuming legitimate defeat) and will stop at nothing to regain power. He is a very dangerous man.