All of Nathan Young's Comments + Replies

Yeah, I think you make good points. I think that forecasts are useful on balance, and then people should investigate them. Do you think that forecasting like this will hurt the information landscape on average?

Personally, to me, people engaged in this forecasting generally seem more capable of changing their minds. I think the AI2027 folks would probably be pretty capable of acknowledging they were wrong, which seems like a healthy thing. Probably more so than the media and academia?

Seems like a lot of specific, quite technical criticisms.

Sure,...

Some thoughts:

- I agree that the Forum's speech norms are annoying. I would prefer that people weren't banned for being impolite even while making useful points.

- I agree in a larger sense that EA can be enervating, sapping one's will for conflict with many small touches.

- I agree that having one main funder and wanting to please them seems unhelpful

- I've always thought you are a person of courage and integrity

On the other hand:

- I think if you are struggling to convince EAs that is some evidence. I too am in the "it's very likely not the end of the world but still

I feel this quite a lot:

- The need to please OpenPhil etc

- The sense of inness or outness based on cause area

- The lack of comparing notes openly

- That one can "just have friends"

And so I think Holly's advice is worth reading, because it's fine advice.

Personally I feel a bit differently. I have been hurt by EA, but I still think it's a community of people who care about doing good per $. I don't know how we get to a place that I think is more functional, but I still think it's worth trying for the amount of people and resources attached to this space. But yes, I am less emotionally involved than once I was.

Seems like a lot of specific, quite technical criticisms. I don't endorse Thorstad's work in general (or not endorse it), but often when he cites things I find them valuable. This has enough material that it seems worth reading.

I think my main disagreement is here:

“It ain’t what you don’t know that gets you into trouble. It’s what you know for sure that just ain’t so” … I think the rationalist mantra of “If It’s Worth Doing, It’s Worth Doing With Made-Up Statistics” will turn out to hurt our information landscape much more than it helps.

I weakly disagree here. I am very much in the "make up statistics and be clear about that" camp.

I'm sympathetic to that camp, but I think it has major epistemic issues that largely go unaddressed:

- It systemically biases away from extreme probabilities (it's hard to assert < than , for e.g., but many real-world probabilities are and post-hoc credences look like they should have been below this)

- By focusing on very specific pathways towards some outcome, it diverts attention towards easily definable issues, and hence away from the prospe

@Gavriel Kleinwaks (who works in this area) gives her recommendation. When asked whether she "backed" them:

I do! (Not in the financial sense, tbc.) But just want to flag that my endorsement is confounded. Basically, Aerolamp uses the design of the nonprofit referenced in my post, OSLUV, and most of my technical info about far-UV comes from a) Aerolamp cofounder Viv Belenky and b) OSLUV. I've been working with Viv and OSLUV for a couple of years, long before the founding of Aerolamp, and trust their information, but you should know that my professional opinion is highly correlated with theirs—1Day Sooner doesn't have the equipment to do independent testing.

I thi...

Sure, and do you want to stand on any of those accusations? I am not going to argue the point with 2 blogposts. What is the point you think is the strongest?

As for Moskovitz, he can do as he wishes, but I think it was an error. I do think that ugly or difficult topics should be discussed and I don't fear that. LessWrong, and Manifest, have cut okay lines through these topics in my view. But it's probably too early to judge.

Option B clearly provides no advantage to the poor people over Option A. On the other hand, it sure seems like Option A provides an advantage to the poor people over Option B.

This isn't clear to me.

If the countries in question have been growing much slower than the S&P 500, then the money at the future point might be worth far more to them than it is now. And they aren't going to invest in the S&P 500 in the meantime.
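To make the growth comparison concrete, here is a rough sketch. It assumes constant annual rates for both the investment and the recipients' incomes, which is a simplification, and the function name and the rates in the usage line are hypothetical placeholders, not real figures.

```python
def relative_value_multiplier(investment_return, recipient_growth, years):
    """How much a sum invested for `years` grows relative to recipients' incomes.

    Both rates are annual fractions (0.07 means 7%) and are assumed constant.
    A result above 1 means the later gift is larger, relative to recipients'
    incomes at that time, than the same gift would be today.
    """
    return ((1.0 + investment_return) / (1.0 + recipient_growth)) ** years


# Hypothetical rates chosen only for illustration.
multiplier = relative_value_multiplier(investment_return=0.07,
                                       recipient_growth=0.02,
                                       years=20)
print(f"Later gift is worth {multiplier:.2f}x as much relative to income")
```

This leaves out everything else that matters to the comparison, such as the harm of waiting and whether recipients have better local investments, so it only shows that the direction of the effect is not obvious.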

Maybe I'm being too facile here, but I genuinely think that even just taking all these numbers, making them visible in some place, and then taking the median of them, and giving a ranking according to that, and then allowing people to find things they think are perverse within that ranking, would be a pretty solid start.

I think producing suspect work is often the precursor to producing good work.

And I think there's enough estimates that one could produce a thing which just gathers all the estimates up and displays them. That would be sort of a survey...
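A minimal sketch of the "gather, take the median, rank" idea, to show how little machinery a first version needs. The intervention names and numbers below are hypothetical placeholders, not real estimates, and `rank_by_median` is a name made up for this example.

```python
from statistics import median


def rank_by_median(estimates):
    """Return (name, median) pairs sorted from highest to lowest median.

    `estimates` maps a name to a list of numeric estimates from different
    sources. Names with no estimates are skipped.
    """
    medians = {name: median(values)
               for name, values in estimates.items() if values}
    return sorted(medians.items(), key=lambda item: item[1], reverse=True)


# Hypothetical placeholder numbers, purely for illustration.
example_estimates = {
    "intervention_a": [1.0, 3.0, 10.0],
    "intervention_b": [2.0, 2.5, 4.0],
    "intervention_c": [0.5, 8.0],
}

ranking = rank_by_median(example_estimates)
for position, (name, value) in enumerate(ranking, start=1):
    print(f"{position}. {name}: median {value}")
```

The point of publishing a ranking like this would be to let people spot the entries that look perverse and argue about the inputs.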

I appreciate the correction on the Suez stuff.

If we're going to criticise rationality, I think we should take the good with the bad. There are multiple adjacent cults, which I've said in the past. They were also early to crypto, early to AI, early to Covid. It's sometimes hard to decide which things are from EA or Rationality, but there are a number of possible wins. If you don't mention those, I think you're probably fudging the numbers.

...For example, in 2014, Eliezer Yudkowsky wrote that Earth is silly for not building tunnels for self-driving

Sure, seems plausible.

I guess I kind of like @William_MacAskill's piece or as much as I remember of it.

My recollection is roughly this:

- Yes, it's strange to have lots more money.

- Perhaps we're spending it badly.

- But also seeking not to spend enough money might be a bad thing, too.

- Frugal EA had something to recommend it.

- But more impact probably requires more resources.

This seems good, though I guess it feels like a missing piece is:

- Are we sure this money is got ethically?

- How much harm will getting this money for bad reasons hurt u

Had Phil been listened to, then perhaps much of the FTX money would have been put aside, and things could have gone quite differently.

My understanding of what happened is different:

- Not that much of the FTX FF money was ever awarded (~$150-200 million, details).

- A lot of the FTX Future Fund money could have been clawed back (I'm not sure how often this actually happened) – especially if it was unspent.

- It was sometimes voluntarily returned by EA organisations (e.g. BERI) or paid back as part of a settlement (e.g. Effective Ventures).

Naaaah, seems cheems. Seems worth trying. If we can't then fair enough. But it doesn't feel to me like we've tried.

Edit, for specificity: I think that shrimp QALYs and human QALYs have some exchange rate, we just don't have a good handle on it yet. And I think that if we'd decided that difficult things weren't worth doing, we wouldn't have done a lot of the things we've already done.

Also, hey Elliot, I hope you're doing well.

Reading Will's post about the future of EA (here) I think that there is an option also to "hang around and see what happens". It seems valuable to have multiple similar communities. For a while I was more involved in EA, then more in rationalism. I can imagine being more involved in EA again.

A better earth would build a second Suez canal, to ensure that we don't suffer trillions in damage if the first one gets stuck. Likewise, having 2 "think carefully about things" movements seems fine.

It hasn't always felt like this "two is better than one" feeling...

The other nonprofit in this space is the Effective Institutions Project, which was linked in Zvi's 2025 nonprofits roundup:

They report that they are advising multiple major donors, and would welcome the opportunity to advise additional major donors. I haven’t had the opportunity to review their donation advisory work, but what I have seen in other areas gives me confidence. They specialize in advising donors who have broad interests across multiple areas, and they list AI safety, global health, democracy and (peace and security).

from the same post, re: SFF ...

There was. It was on GatherTown; I was one of the organisers.

EA still seems to have a GatherTown, though I don't know what's inside it:

https://app.gather.town/app/Yhi4XYj0zFNWuUNv/EA%20coworking%20and%20lounge

The Lightcone (LessWrong) GatherTown was extensive and, in my view, pretty beautiful.

Have only scanned this but it seems to have flaws I've seen elsewhere. In general, I recommend reading @Charles Dillon 🔸's article on comparative advantage (Charles, I couldn't find it here, but I suggest posting it here):

The quickest summary is:

- Comparative advantage means I'm guaranteed work but not that that work will provide enough for me to eat

- If comparative advantage is a panacea, why are there fewer horses?

I don't have time to research this take, but one of my economist friends criticised this study for the following two reasons:

- They claimed the averted deaths were in a famine, so there was regression to the mean (in a normal period there wouldn't have been so many deaths in the control)

- They claimed the averted deaths were close to hospitals, so areas without existing healthcare infrastructure would not see this benefit so the counterfactual value of the money is less.

I haven't looked into this robustly so if someone has, please agree or disagree vote with this comment accordingly.

Thanks GiveDirectly for their work.

I agree that it could be easier for people in EA to build a track record that funders take seriously.

I struggle to know if your project is underfunded—many projects aren't great and there have to be some that are rejected. In order to figure that out we have to actually discuss the project and I've appreciated our back and forth on the other blog you posted.

Surely it's going to be much more difficult for a PFG company to raise capital? Stocks are (in some way) related to future profits. If you are locked in to giving 90% away then doesn't that mean that stocks will trade at a much lower price and hence it will be much harder for VCs to get their return?
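A rough sketch of the worry, under the strong assumption that a share is worth the discounted cash flow investors can actually receive (a simple Gordon growth model). The 90% figure is from the question above; the profit, discount rate, and function names are hypothetical.

```python
def present_value(cash_flow, discount_rate, growth_rate=0.0):
    """Gordon growth value of a perpetual cash flow starting next year."""
    if discount_rate <= growth_rate:
        raise ValueError("discount_rate must exceed growth_rate")
    return cash_flow / (discount_rate - growth_rate)


def investor_value(annual_profit, share_given_away, discount_rate,
                   growth_rate=0.0):
    """Value to investors when a fixed share of profit is donated each year."""
    retained = annual_profit * (1.0 - share_given_away)
    return present_value(retained, discount_rate, growth_rate)


# Hypothetical company: same profit and discount rate, different giving rules.
ordinary = investor_value(annual_profit=100.0, share_given_away=0.0,
                          discount_rate=0.10)
pfg = investor_value(annual_profit=100.0, share_given_away=0.9,
                     discount_rate=0.10)
print(f"PFG equity is worth {pfg / ordinary:.0%} of the ordinary company's")
```

Under this model the valuation falls in proportion to the share given away, which is the concern in the question. It ignores any offsetting effects a PFG structure might have on sales, hiring, or costs.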

I guess my questions are:

- What is "earn to give"? Is the typical ETG giving $1m? $10m? At what point do we want people to switch?

- Is there a genuinely different skill set? Like, are there some people who are very mediocre at EA jobs but great at earning money?

My guess would be that people should have some sense of how much money they would earn to give for, and how much impact they would stop earning to give and do direct work for, and then they should move between the two. That would also create some great on-the-job learning, because I imagine that earn-to-give roles teach different skills, which can be fed back into the EA community.

It feels like if there were more money held by EAs some projects would be much easier:

- Lots of animal welfare lobbying

- Donating money to the developing world

- AI lobbying

- Paying people more for work trials

I don't know if there are some people who are much more suited to earning than to doing direct work. It seems to me they're quite similar skill sets. But if they're at all different, then you should want quite different people to work on quite different things.

My friend Barak Gila wrote about spending $10k offsetting plane & car miles, in Cars and Carbon

This seems way too expensive? I feel like Make Sunsets suggests you can offset a lifetime of carbon for like $500.

I think a big problem is it's hard to know what to believe here. And hence people don't offset.

To add some thoughts/anecdotes:

- I'm sad this happens. I have had similar experiences and it's hard.

- It seems like orgs and individuals have different incentives here - orgs want the most applicants possible, individuals want to get jobs.

- I have been asked to apply for 1-3 jobs that seemed wildly beyond my qualifications, then failed at the first hurdle without any feedback. This was quite frustrating, but I guess I understand why it happens.

- I like that work trials are paid well

- If we believe the best person for a job might be 5-10x better than the next best, then perhaps

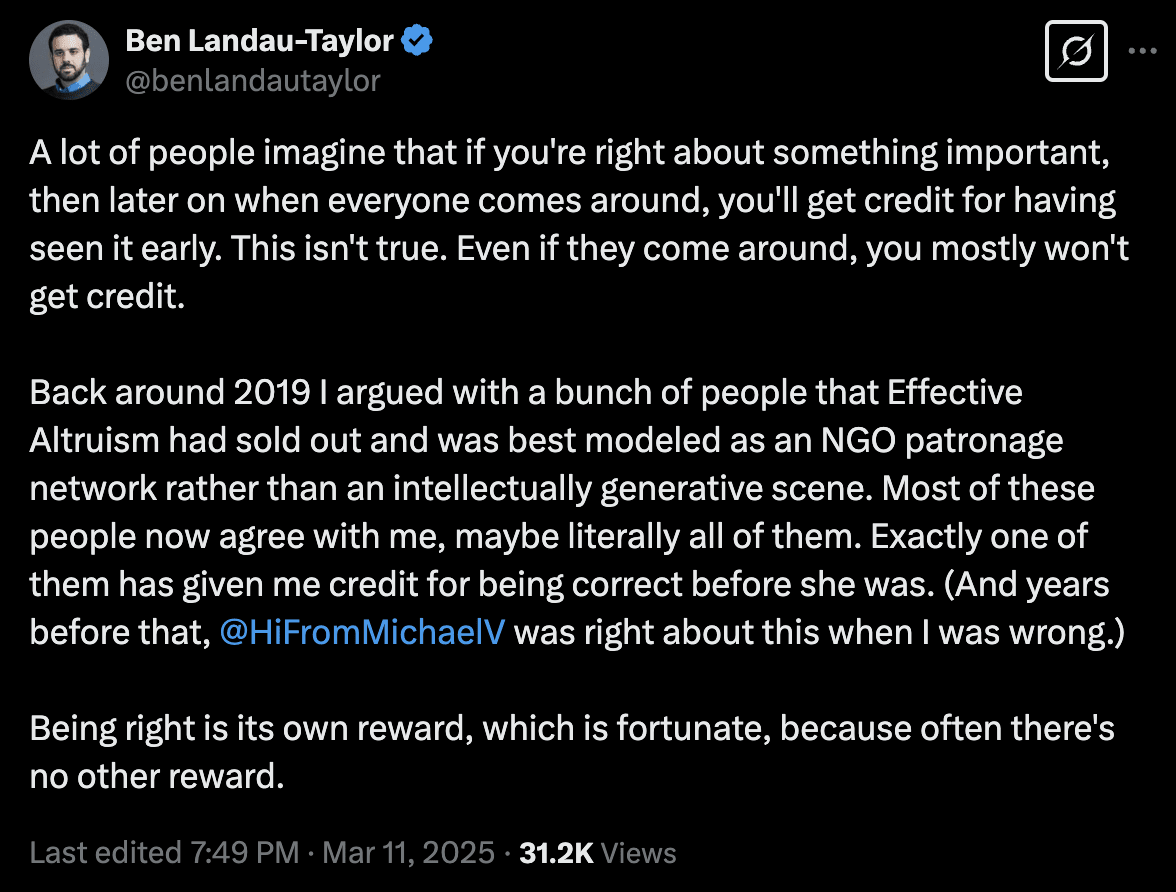

I mean I just don't take Ben to be a reasonable actor regarding his opinions on EA? I doubt you'll see him open up and fully explain a) who the people he's arguing with are or b) what the explicit change in EA to an "NGO patronage network" was with names, details, public evidence of the above, and being willing to change his mind to counter-evidence.

He seems to have been related to Leverage Research, maybe in the original days?[1] And there was a big falling out there, and many people linked to original Leverage hate "EA" with the fire of a thousand b...

I think it would allow many very online slightly anxious people to note how the situation is changing, rather than plug their minds into twitter each day.

I think the bird flu site helped a little in my part of twitter to tell people to chill out a bit and not work themselves into a frenzy earlier than was necessary. At least one powerful person said it was cool.

I think that civil servants might use it but I'm not sure they know what they want here and it's good to have something concrete to show.

Seems notable that my model is that OpenPhil has stopped funding some right wing or even centrist projects, so has less power in this world than it could have done.

Ben Todd writes:

...Most philanthropic (vs. government or industry) AI safety funding (>50%) comes from one source: Good Ventures, via Open Philanthropy.[2] But they’ve recently stopped funding several categories of work (my own categories, not theirs):

- Many Republican-leaning think tanks, such as the Foundation for American Innovation

...

I dunno, I think that sounds galaxy-brained to me. I think that giving numbers is better than not giving them and that thinking carefully about the numbers is better than that. I don't really buy your second order concerns (or think they could easily go in the opposite direction).