All of PabloAMC 🔸's Comments + Replies

"It is appropriate for small donors to spend time finding small charities to support"

For:

- Larger donors typically only have the ability to study large donation opportunities.

Against:

- I think most small donors (such as myself) are pretty bad at gauging the evidence in areas they are not expert in.

I think GFI has claimed this in the past, and given their role as a large coordinator of the area I’m inclined to believe their counterfactual importance. However, the problem is that without a downstream model of how dollars convert into averted animal suffering, it is quite hard to prioritise between theories of change.

Hi Caroline, thanks for the reply. I think you are very right in that both approaches are complementary and we should support both. There’s even a chance that advocacy campaigns may end up creating momentum from which alternative proteins could benefit. It is also true that alternative proteins may be able to access funds that are not available to corporate advocacy campaigns or similar, not just VC but also government support. That may also be the reason why GFI is highlighting the environmental aspect which is an easier sell outside of EA or animal welfa...

I think there are good arguments why those actions might have indeed been horrible mistakes. But I’m also quite uncertain about what would have been the best course of action at the time. Eg, there’s a reasonable case that the best we might hope for is steering the development of AI. I unfortunately don’t know.

Let me give a non-AI example: I find it reasonable that some EAs try to steer how factory farming works (most animal advocacy), even though I would prefer that no animal died or was tortured for food.

But on the other hand, I believe people in leadership positions failed to detect and flag the FTX scandal ahead of time. And that’s a shame.

I fought for EA to mean something simpler— just someone who 1. Figured out the best way to improve the world and 2. Does it— but I lost.

For what it is worth, this is not how I feel in my local EA community. There are people leading effective giving organisations and others who just go on with their usual lives with trial pledges, and I feel we are fairly non-judgemental.

I donated to (i) ARMoR (https://www.armoramr.org/), because I am convinced by the quality of their cost estimates and believe targeted policy interventions can be fairly tractable; and (ii) the Global Health Fund from Ayuda Efectiva (https://ayudaefectiva.org/fondo-salud-global), in part to encourage others to donate.

New anti-malaria treatment clears phase 3 trials.

I just found out that there is a new anti-malarial alternative to artemisinin, the most common antimalarial chemical, which has successfully completed Phase 3 trials. Its nickname is GanLum, and it apparently has quite powerful effects:

...A new drug, called GanLum, was more than 97% effective at treating malaria in clinical trials carried out across 12 African countries, researchers reported Wednesday at the American Society for Tropical Medicine and Hygiene in Toronto. That's as good, if not better, than the current

Hey Alex, thanks a lot for the post, and for the work you do at GFI.

Something I would love to read (and might write up this funding season) is how to compare the impact of GFI's work on alternative proteins with that of other animal charities working on corporate campaigns. This is highly non-obvious because GFI's work depends on a theory of change which I find very attractive, but for which I have not found good models. The closest is this post https://forum.effectivealtruism.org/posts/CA8a9JS3fYb63YWoh/the-humane-league-needs-your-money-more-than-alt-...

I wonder how ACE selects charities. I ask in particular because the Good Food Institute used to be considered a high-impact charity, but I have not seen any updates on that since 2022, when the assessment was broadly positive (reference here). Not only that, but it seems GFI was probably one of the largest charities.

You can find more information about our selection process here. In 2024, GFI decided to postpone re-evaluation to a future year to allow their teams more time to focus on opportunities and challenges in the alternative proteins sector. They decided not to apply to be evaluated in 2025.

I am considering writing a brief post about how I think the EU AI office (where I will likely be starting a new position in one month) can address some issues of AI differently from other actors. The EU AI office might complement the work of traditional actors in addressing loss of control issues, but it could play a significant role in mitigating power concentration issues, especially in the geopolitical sense. This is a bit of a personal theory of change too.

I'd love for someone to write about how a donor who feels most comfortable donating to the GiveWell top charities fund should approach donating to animal charities. I know there are options such as Animal Charity Evaluators Movement Grants, the Giving What We Can Effective Animal Advocacy fund, or the EA Animal Welfare Fund.

However, these all feel a bit different-flavoured than GiveWell's top charities fund in that they seem to be more opportunistic, small or actively managed; in contrast to GiveWell's larger, established, and typically more stable charities. This makes ...

Since targeting Ultra High Net Worth Individuals seems to be a more effective strategy than broad donations (reference), to what extent do you think it is feasible to attract more such individuals to effective giving? What strategies are you particularly excited about researching and testing more extensively to do so?

To what extent should the EA community put more effort into expanding the donation base vs finding ever more impactful opportunities? What worries me the most in the second case is that, while there might be some pretty good untapped opportunities to create new, more impactful charities, there is always too much uncertainty. For example, uncertainty is often given as a reason not to prioritise funding Vitamin A supplementation (€3.5k per life saved) over malaria nets (€5.5k per life saved), even though both are already pretty heavily researched areas; see this Ayuda Efectiva spreadsheet based on GiveWell data.

To what extent would it make sense to consider the work by the Gates Foundation part of the effective giving ecosystem? I would argue that they are very effective, too, even if they have no association with effective altruism.

I’m not sure about the cost-effectiveness, but they have a quote by Michael Kremer on their webpage:

“The country where one is born is the most important determinant of extreme poverty. By facilitating international educational migration, Malengo offers very low-income youth the opportunity to dramatically increase both their own incomes, and those of their families. In my view, each dollar of donation to Malengo is likely to increase the incomes of program participants by more than would be the case for donations to virtually any other organization.”

Michael Kremer, University Professor in Economics, University of Chicago; Nobel Laureate in Economics, 2019

Hi Emmannaemeka, thanks for writing this, and sorry to hear that has been your experience so far. I don’t work in anything related to biology, so unfortunately I don’t think I can offer any solutions. The only thing that comes to mind, other than biosecurity, is that fermentation is one area that could be useful for producing alternative proteins. Perhaps the Good Food Institute could be a good place to look into. In any case, I think the EA community should be welcoming to everyone, even if there are no good ways to contribute to most typical EA causes. Again, thanks for writing this.

Thank you for your thoughtful response. I agree that pivoting can be useful, but I also believe that what we’re building at the Center for Phage Biology and Therapeutics has a unique and powerful kind of impact.

I often imagine the scenario of a patient who has run out of antibiotic options and is at the brink of death. In that moment, the clinician, or even the patient’s family, reaches out to us, sends us the bacterial isolate, and within days we are able to identify a matching phage, purify it, and return it for therapeutic use. That is not theoretical i...

Thanks for the question, Pablo.

Beginning with Ayuda Efectiva, when we launched we had to decide how to approach what I would call a tough market: not much of a giving culture, low trust, and a still very traditional culture in certain aspects. I think that when you enter a market like that, your strategy must be wedge shaped. In our case, the tip of the wedge was making people consider whether they should give a part of their resources to help others. The wedge widened with the introduction of an effectiveness mindset and spatial impartiality (as referred ...

How successful do you think Ayuda Efectiva has been relative to your expectations before founding it? Do you have any recommendations for founders of other effective giving organisations on the most important factors contributing to its success?

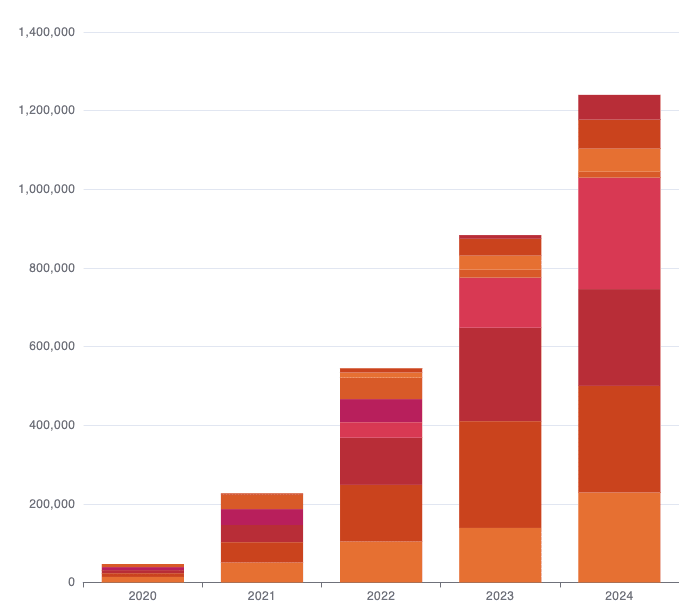

My standard reply when people ask me how Ayuda Efectiva is going is "happy but not satisfied". I am happy because the trend is good. This is money raised for effective charities:

I am not satisfied because our figures are still tiny and I would like to see at least an extra zero to the right.

I expected this to be a very hard endeavor and I told my board from day one that we were starting a long-distance race. I would therefore say that things are going more or less as I could have wished for. (Note: I think figures are a great progress indicator but a bad...

As the post says, it may be worthwhile for Europeans to provide feedback to the EU Commission on the new animal welfare public consultation: https://ec.europa.eu/info/law/better-regulation/have-your-say/initiatives/14671-On-farm-animal-welfare-for-certain-animals-modernisation-of-EU-legislation_en

I wish I could! Unfortunately, despite having several conversations and emails with the various AI safety donors, I'm still confused about why they are declining to fund CAIP. The message I've been getting is that other funding opportunities seem more valuable to them, but I don't know exactly what criteria or measurement system they're using.

At least one major donor said that they were trying to measure counterfactual impact -- something like, try to figure out how much good the laws you're championing would accomplish if they passed, and then ask how clo...

The key objection I always have to starting new charities, which Charity Entrepreneurship used to focus on, is that I feel money is usually not the bottleneck. I mean, we already have a ton of amazing ideas for how to use more funds, and if we found new ones, it may be very hard to reduce the uncertainty sufficiently to be able to make productive decisions. What do you think, Ambitious Impact?

I agree there is certainly quite a lot of hype, though when people want to hype quantum they usually target AI or something. My comment was echoing that quantum computing for material science (and also chemistry) might be the one application where there is good quality science being made. There are also significantly less useful papers, for example those related to "NISQ" (non-error-corrected) devices, but I would argue the QC community is doing a good job at focusing on the important problems, not just hyping around.

Hi there, I am a quantum algorithm researcher at one of the large startups in the field and I have a couple of comments, one to back up the conclusion on ML for DFT, and another to push back a bit on the quantum computing end.

For the ML for DFT, one and a half years ago we tried (code here) to replicate and extend the DM21 work, and despite some hard work we failed to get good accuracy training ML functionals. Now, this could be because I was dumb or lacked abundant data or computation, but we mostly concluded that it was unclear how to make ML-based funct...

I don’t think the goal of regulation or evaluations is to slow down AGI development. Rather, the goal of regulation is to standardise minimal safety measures across labs (some AI control, some security, etc.) and create some incentives for safer AI. Evaluations can certainly be used to lobby for a pause, but I think their main goal is to feed into regulation or control measures.

My donation strategy:

It seems that we have some great donation opportunities in at least some cases such as AI Safety. This has made me wonder what donation strategies I prefer. Here are some thoughts, also influenced by Zvi Mowshowitz's:

- Attracting non-EA funding to EA causes: I prefer donating to opportunities that may bring external or non-EA funding to some causes that EA may deem relevant.

- Expanding EA funding and widening career paths: Similarly, if possible fund opportunities that could increase the funds or skills available to the community in the

I agree with most except perhaps the framing of the following paragraph.

Sometimes that seems OK. Like, it seems reasonable to refrain from rescuing the large man in my status-quo-reversal of the Trolley Bridge case. (And to urge others to likewise refrain, for the sake of the five who would die if anyone acted to save the one.) So that makes me wonder if our disapproval of the present case reflects a kind of speciesism -- either our own, or the anticipated speciesism of a wider audience for whom this sort of reasoning would provide a PR problem?

In my o...

For what it is worth, I like the work of the Good Food Institute on pushing the science and market of alternative proteins. They also do some policy work, though I fear their lobbying might have orders of magnitude less strength than the industry’s.

Also, as far as I know, the Shrimp Welfare Project is directly buying and giving away the stunners (hopefully to create some standard practice around it). So counterfactually it seems a reasonable bet, for the direct impact at least.

But I resonate with the broad concerns with corporate outreach and advocacy. I am parti...

Hey Vasco, with constructive intent, let me explain how I believe I can be a utilitarian, maybe hedonistic to some degree, value animals highly, and still not justify letting innocent children die, which I take as a sign of the limitations of consequentialism. Basically, you can stop consequence flows (or discount them very significantly) whenever they go through other people's choices. People are free to make their own decisions. I am not sure if there is a name for this moral theory, but it would be roughly what I subscribe to.

I do not think this is an ideal solution to the moral problem, but I think it is much better than advocating to let innocent children die because of what they may end up doing.

I donated the majority of my yearly donations to a campaign for AMF I did through Ayuda Efectiva for my wedding. The goal was to promote effective donations in my family and friends. I also donated a small amount to the EA Forum election because I think it is good for democratic reasons to allow the community to decide where to allocate some funds.

Hi @Jbentham,

Thanks for the answer. See https://forum.effectivealtruism.org/posts/K8GJWQDZ9xYBbypD4/pabloamc-s-quick-takes?commentId=XCtGWDyNANvHDMbPj for some of the points. Specifically, the problem I have with the post is not about cause prioritization or cost-effectiveness.

Arguing that people should not donate to global health doesn't even contradict common-sense morality because as we see from the world around us, common-sense morality holds that it's perfectly permissible to let hundreds or thousands of children die of preventable diseases.

I thin...

Hi there,

Let me try to explain myself a bit.

For example, global health advocates could similarly argue that EA pits direct cash transfers against interventions like anti-malaria bednets, which is divisive and counterproductive, and that EA forum posts doing this will create a negative impression of EA on reporters and potential 10% pledgers.

There is a difference between what the post does and what you mention. The post is not saying that you should prioritize animal welfare vs global health (which I would find quite reasonable and totally acceptable). ...

As I commented above, it would not make any sense for someone caring about animals to kill people.

You only did so on the grounds that it is not an effective method, and because it would decrease support for animal welfare. Presumably, if you could press a button to kill many people without anyone attributing it to the animal welfare movement you would, then?

What would be the motivation? Is writing a good skill to have and thus merits practising?