QubitSwarm99

Participation 2

- Attended more than three meetings with a local EA group

- Attended an EAGx conference

Posts 11

Comments 54

I should have chosen a clearer phrase than "not through formal channels". What I meant was that much of my forecasting work and experience came about through my participation on Metaculus, which is "outside" of academia; this participation did not manifest as forecasting publications or assistantships (as would be done through a Masters or PhD program), but rather as my track record (linked in CV to Metaculus profile) and my GitHub repositories. There was also a forecasting tournament I won, which I also linked on the CV.

I am not the best person to ask this question (@so8res, @katja_grace, or @holdenkarnofsky would be better placed to answer), but I will try to offer some points.

- These links should be quite useful:

- 2022 Expert Survey on Progress in AI

- What do ML researchers think about AI in 2022? (37 years until a 50% chance of HLMI)

- LW Wiki - AI Timelines (e.g., roughly 15% chance of transformative AI by 2036 and ~75% of AGI by 2032)

- (somewhat less useful) LW Wiki - Transformative AI; LW Wiki - Technological forecasting

- I don't know of any recent AI expert surveys for transformative AI timelines specifically, but have pointed you to very recent ones of human-level machine intelligence and AGI.

- For comprehensiveness, I think you should cover both transformative AI (AI that precipitates a change of equal or greater magnitude to the agricultural or industrial revolution) and HLMI. I have yet to read Holden's AI Timelines post, but believe it's likely a good resource to defer to, given Holden's epistemic track record, so I think you should use this for the transformative AI timelines. For the HLMI timelines, I think you should use the 2022 expert survey (the first link). Additionally, if you trust that a techno-optimist-leaning crowd's forecasting accuracy generalizes to AI timelines, then it might be worth checking out Metaculus as well.

- the community here has an IQR forecast of (2030, 2041, 2075) for When will the first general AI system be devised, tested, and publicly announced?

- the uniform median forecast is 54% for Will there be human/machine intelligence parity by 2040?

- Lastly, I think it might be useful to ask under the existential risk section what percentage of ML/AI researchers think AI safety research should be prioritized (from the survey: "The median respondent believes society should prioritize AI safety research “more” than it is currently prioritized. Respondents chose from “much less,” “less,” “about the same,” “more,” and “much more.” 69% of respondents chose “more” or “much more,” up from 49% in 2016.")

I completed the three quizzes and enjoyed them thoroughly.

Without any further improvements, I think these quizzes would still be quite effective. It would be nice to have a completion counter (e.g., an X/Total questions complete) at the bottom of the quizzes, but I don't know if this is possible on quizmanity.

Every time I think about how I can do the most good, I am burdened by questions roughly like

- How should value be measured?

- How should well-being be measured?

- How might my actions engender unintended, harmful outcomes?

- How can my impact be measured?

I do not have good answers to these questions, but I would bet on some actions being net positive in impact.

For example

- Promoting vegetarianism or veganism

- Providing medicine and resources to those in poverty

- Building robust political institutions in developing countries

- Promoting policy to monitor developments in AI

W.r.t. the action that is most positively impactful, my intuition is that it would take the form of safeguarding humanity's future or protecting life on Earth.

Some possible actions that might fit this bill:

- Work that robustly illustrates the theoretical limits of the dangers from and capabilities of superintelligence.

- Work that accurately encodes human values digitally

- A global surveillance system for human and machine threats

- A system that protects Earth from solar weather and NEOs

The problem here is that some of these actions might spawn harm, particularly the second and third.

Thoughts and Notes: October 5th 0012022 (1)

As per my last shortform, over the next couple of weeks I will be moving my brief profiles for different catastrophes from my draft existential risk frameworks post into shortform posts to make the existential risk frameworks post lighter and simpler.

In my last shortform, I included the profile for the use of nuclear weapons and today I will include the profile for climate change.

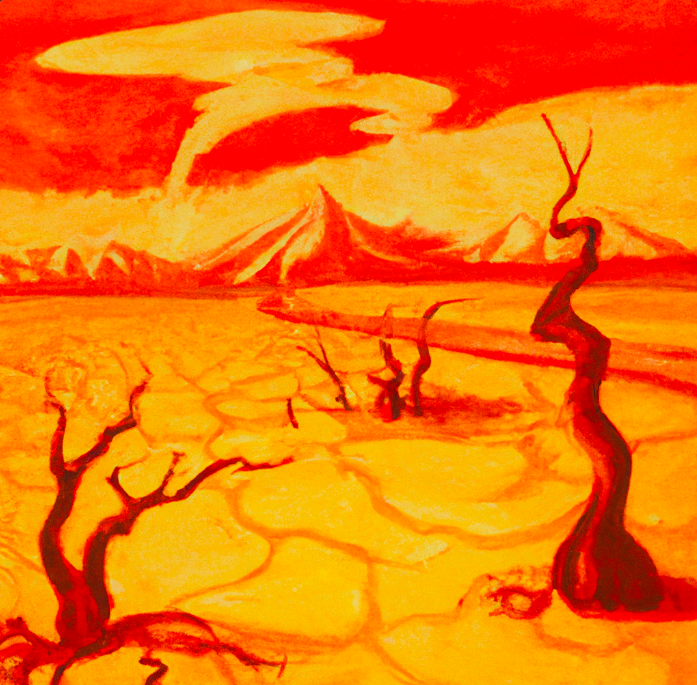

Climate change

- Risk: (sections from the well-written Wikipedia page on Climate Change): "Contemporary climate change includes both global warming and its impacts on Earth's weather patterns. There have been previous periods of climate change, but the current rise in global average temperature is more rapid and is primarily caused by humans. Burning fossil fuels adds greenhouse gases to the atmosphere, most importantly carbon dioxide (CO2) and methane. Greenhouse gases warm the air by absorbing heat radiated by the Earth, trapping the heat near the surface. Greenhouse gas emissions amplify this effect, causing the Earth to take in more energy from sunlight than it can radiate back into space." In general, the risk from climate change mostly comes from the destabilizing downstream effects it has on civilization, rather than from its direct effects, such as the ice caps melting or increased weather severity. One severe climate change catastrophe is the runaway greenhouse effect, but this seems unlikely to occur, as the present human activities and natural processes contributing to global warming don't appear capable of engendering such a catastrophe anytime soon.

- Links: EAF Wiki; LW Wiki; Climate Change (Wikipedia); IPCC Report 2022; NOAA Climate Q & A; OWID Carbon Dioxide and Emissions (2020); UN Reports on CC; CC and Longtermism (2022); NASA Evidence of CC (2022)

- Forecasts: If climate catastrophe by 2100, human population falls by >= 95%? - 1%; If global catastrophe by 2100, due to climate change or geoengineering? - 10%; How much warming by 2100? - (1.8, 2.6, 3.5) degrees; When fossil fuels < 50% of global energy? - (2038, 2044, 2056)

Does anyone have a good list of books related to existential and global catastrophic risk? This doesn't have to just include books on X-risk / GCRs in general, but can also include books on individual catastrophic events, such as nuclear war.

Here is my current resource landscape (these are books that I have personally looked at and can vouch for; the entries came to my mind as I wrote them - I do not have a list of GCR / X-risk books at the moment; I have not read some of them in full):

General:

- Global Catastrophic Risks (2008)

- X-Risk (2020)

- Anticipation, Sustainability, Futures, and Human Extinction (2021)

- The Precipice (2019)

AI Safety

- Superintelligence (2014)

- The Alignment Problem (2020)

- Human Compatible (2019)

Nuclear risk

General / space

- Dark Skies (2020)

Biosecurity

The dormant periods occurred between applying and getting referred for the position, and between getting referred and receiving an email for an interview. These periods were unexpectedly long, and I wish there had been more communication or at least some statement regarding how long I should expect to wait. However, once I had the interview, I only had to wait a week (if I am remembering correctly) to learn if I was to be given a test task. After completing the test task, it was around another week before I learned I had performed competently enough to be hired.