Seth Ariel Green 🔸

Bio

I am a Research Scientist at the Humane and Sustainable Food Lab at Stanford.

How others can help me

The lab I work at is seeking collaborators! More here.

How I can help others

If you want to write a meta-analysis, I'm happy to consult! I think I know something about what kinds of questions are good candidates, what your default assumptions should be, and how to delineate categories for comparisons.


Hi there, thanks for engaging with our results. On a preliminary note, I wish to gently counsel you that the tone of this response is not in keeping with the norms of this community, which tend towards being collegial/assuming good intent/etc. Instead of noting that our methods "don't bear any useful relationship to the real world," you might try something like "I'm afraid the methods used here don't answer the questions that I think are most important to the movement."

Yes, a hypothetical survey has important limitations. I think we are upfront about them. If we had found larger effects here, we likely would have pursued funding to take this design out to a restaurant or setting with consumption outcomes. When we didn't, we went a different direction. Always we are triaging.

I agree that flexitarians/meat-reducers/would-be-reducers are probably the best target audience for PMAs. We are testing a different estimand, which is demand for the product in a simulacrum in the world at large. This quantity is important from the POV of, e.g., the viability of marketing and deploying PMAs at popular restaurants like Chipotle.

Ah my favorite subject/beloved nemesis, plant-based defaults!

I am a bit of a skeptic, as I laid out in Scaled up, I expect defaults to reduce meat consumption by ~1-2 pp. My basic point is that we don't have a good sense of the effect of these interventions on dietary change rather than meal change, and that some theoretical considerations might give us pause.

Regarding this essay specifically:

- You write "The default effect was popularized by Johnson and Goldstein in 2003, demonstrating how countries with similar cultures and religions show dramatic differences in organ donation after death, all because of choice architecture". Alas, when comparing countries that have opt-in vs opt-out policies, Arshad et al. (2019) find that “no significant difference was observed in rates of kidney (35.2 versus 42.3 respectively), non-renal (28.7 versus 20.9, respectively), or total solid organ transplantation (63.6 versus 61.7, respectively).”

- The NYC hospital data is not an RCT and doesn't offer much of an identification strategy so I'm never sure what to make of those numbers. That being said, I'm ready to accept in principle that hospitals are a particularly promising environment for defaults and for people rethinking their diets generally.

- The Ginn & Sparkman numbers are interesting but the decline in overall sales on plant-based default days is going to be a bit of a challenge for selling the strategy to institutions. On the other hand, Sodexo is apparently happy to scale them up so who knows.

- I am working on a plant-based default evaluation that I hope to share with the forum soon 😃

How much of a post are you comfortable for AI to write?

AI sucks at writing and doesn’t get my style at all. If it did, I’d let it write the whole thing. (Yudkowsky predicts we have about 2 more years until AI learns to write.)

I agree, Ben, that tractability is low. I am also a bit skeptical of anything saying cats can be vegan without a lot of qualifications.

However, I did a bit of research into the "how many animals are actually killed for dog food" question in 2023 and got to about 3 billion/year using a bunch of approximations (Towards non-meat diets for domesticated dogs). So that's not 6 billion but it's still a lot. And I'm also just talking about dogs.

I also think that ABPs, if they didn't go into dog food, would just go into something else. I think it is a mistake to think that demand for ABPs does not drive demand for meat. The "byproduct" designation is convenient when distinguishing animal parts of different value, but at the end of the day, if you're paying someone for animal parts, you're paying them to slaughter animals. Here's a little bit I wrote about this for an op-ed I never published:

Dog food is responsible for a large amount of environmental harms — tens of millions of annual tons of CO2 in the U.S. alone — because dogs are numerous, relatively large animals who eat a lot of animals, and animal agriculture is a major contributor to climate change. This is true even if they’re eating mostly animal byproducts (ABPs).

Still, ABPs may drive less animal agriculture, and therefore less environmental damage, than meat itself. The more we pay people to farm animals, the more they’ll do it. ABPs are worth less than meat. Shifting from byproducts to meat increases demand for slaughtered animals.

A 2020 paper by Peter Alexander and colleagues provides a framework for measuring this increase. The authors find that typical dry dog food is composed of roughly 32% ABPs, 16.3% animal products, and 47.9% crop products. They calculate environmental harms based on an “economic value allocation method, where the environmental impact[s] of producing an animal are allocated in the same proportion as the value of the products.” ABPs, they conclude, produce about 12-18% of greenhouse gas emissions associated with dry dog food, despite being about a third of such products by weight.

The Alexander et al. (2020) paper was about the best thing I could find on the subject in general.
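For anyone who wants to see how an economic value allocation works mechanically, here is a minimal sketch. The mass shares and prices below are made-up placeholders for illustration, not figures from Alexander et al. (2020), and the function name `allocate_by_value` is my own; the only idea taken from the paper is that impacts are split in proportion to each product's economic value rather than its weight.

```python
def allocate_by_value(total_impact, products):
    """Split an animal's total environmental impact across its products
    in proportion to each product's economic value (mass * price).

    products maps a product name to (mass_share, price_per_unit_mass).
    """
    values = {name: mass * price for name, (mass, price) in products.items()}
    total_value = sum(values.values())
    return {name: total_impact * value / total_value
            for name, value in values.items()}


# Hypothetical inputs: byproducts are 40% of the animal by mass
# but sell for a fifth of the price of meat.
products = {
    "meat": (0.6, 5.0),
    "byproducts": (0.4, 1.0),
}

# Allocate 100 units of impact (e.g. kg CO2e) across the two products.
shares = allocate_by_value(100.0, products)
for name, impact in shares.items():
    print(f"{name}: {impact:.1f}")
```

Under these placeholder numbers, byproducts end up with a much smaller share of the impact than their share of the mass, which is the same qualitative pattern the paper reports for dry dog food.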

It depends on the specifics, but I live in Brooklyn and getting deliveries from Whole Foods means they probably come to my house in an electric truck or e-cargo bike. That's pretty low-emission. (Fun fact: NYC requires most grocery stores to have parking spots.)

The key point, though, is that cases like Ocado and Albert Heijn are exceptions, not the norm. Most online supermarkets lack the resources and incentives to systematically review and continuously update tens of thousands of SKUs for vegan status.

I'd go a step further: I suspect many supermarkets are going to perceive an incentive not to do this because it raises uncomfortable questions in consumers' minds about the ethical permissibility/goodness of their other items.

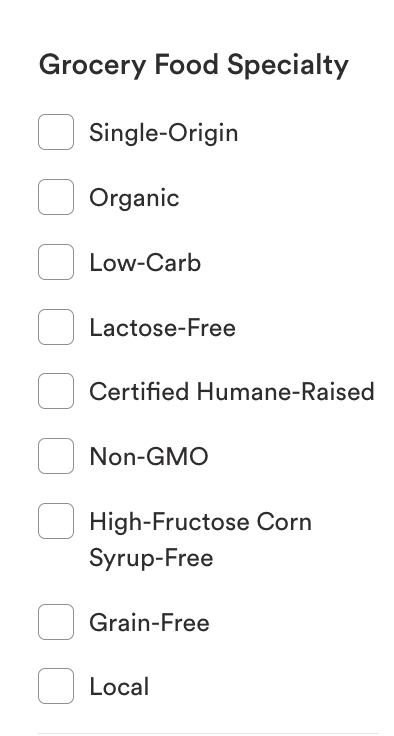

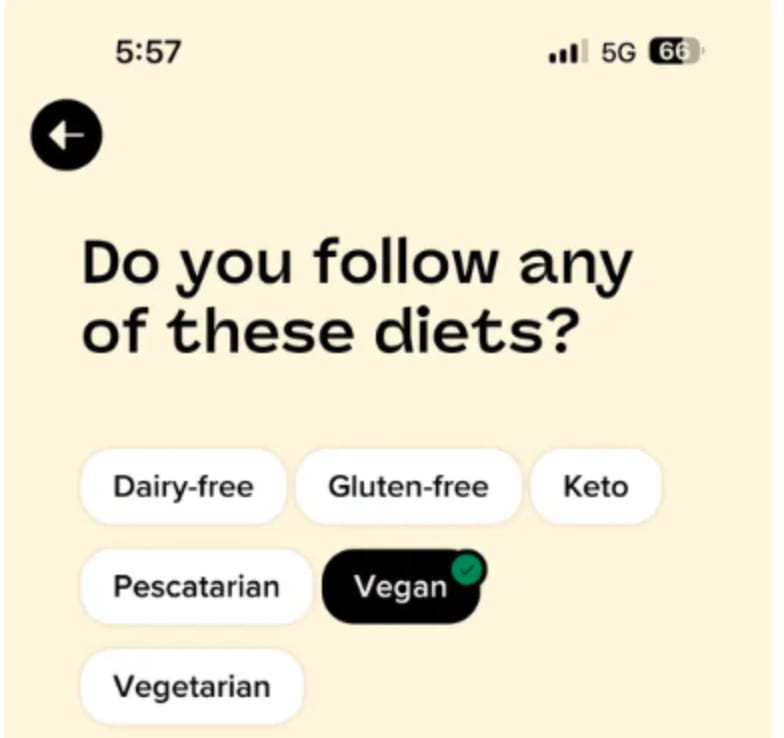

I wonder if this will be more palatable to them if "vegan" is just one of several filters, along with (e.g.) keto, paleo, halal, kosher. Right now the Whole Foods website has the following filters available -- would it be such a stretch to have some identitarian ones?

I really don't know.

I looked into this a bit when I was thinking about how hard it is to get high-welfare animal products at grocery stores (https://regressiontothemeat.substack.com/p/pasturism). I made contact with a sustainability person at a prominent multinational grocery store and asked if they'd like to meet up during Earth Week to discuss such a filter. They did not write back. I relayed a version of this conversation to someone involved in grocery store pressure campaigns at a high level, and that second person said that basically anything that implies that some of their food is better/more ethical than other options is going to be a nonstarter.

On the other hand, you've had some initial successes and it seems some grocery stores are already doing this! So I really hope it's plausible. If you're interested, I'm happy to flesh out the details of these prior interactions privately.

Thank you Vasco! I'll be curious to hear folks' responses, if any.