Seth Ariel Green 🔸

Bio

Participation 1

I am a Research Scientist at the Humane and Sustainable Food Lab at Stanford.

How others can help me

The lab I work at is seeking collaborators! More here.

How I can help others

If you want to write a meta-analysis, I'm happy to consult! I think I know something about what kinds of questions are good candidates, what your default assumptions should be, and how to delineate categories for comparisons.

Posts 14

Comments 187

Topic contributions 1

It depends on the specifics, but I live in Brooklyn, and when I get deliveries from Whole Foods they probably come to my house in an electric truck or e-cargo bike. That's pretty low-emission. (Fun fact: NYC requires most grocery stores to have parking spots.)

The key point, though, is that cases like Ocado and Albert Heijn are exceptions, not the norm. Most online supermarkets lack the resources and incentives to systematically review and continuously update tens of thousands of SKUs for vegan status.

I'd go a step further: I suspect many supermarkets will perceive an incentive not to do this, because it raises uncomfortable questions in consumers' minds about the ethical permissibility/goodness of their other items.

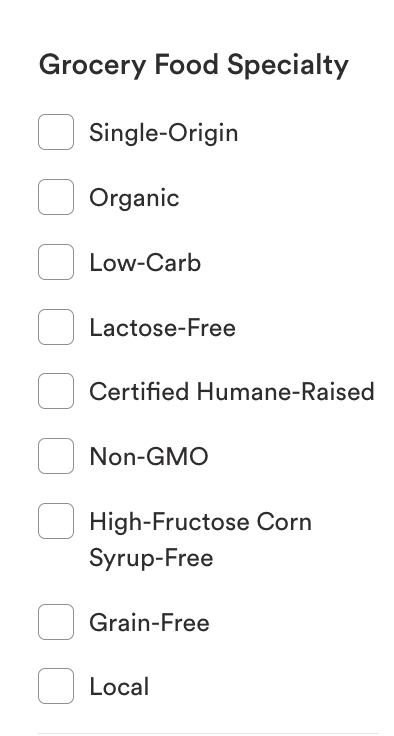

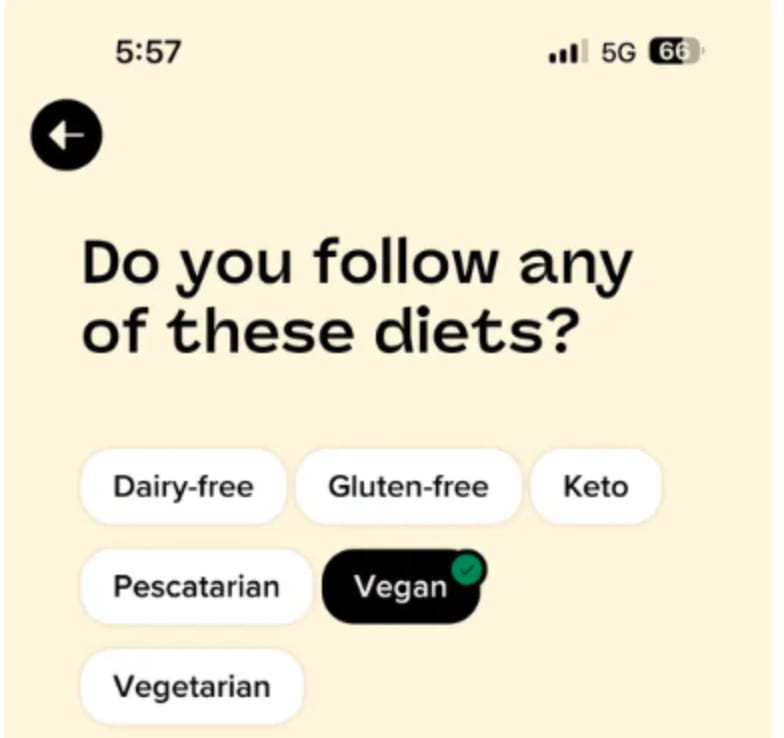

I wonder if this would be more palatable to them if "vegan" were just one of several filters, alongside (e.g.) keto, paleo, halal, and kosher. Right now the Whole Foods website has the filters shown above; would it be such a stretch to add some identitarian ones?

I really don't know.

I looked into this a bit when I was thinking about how hard it is to get high-welfare animal products at grocery stores (https://regressiontothemeat.substack.com/p/pasturism). I made contact with a sustainability person at a prominent multinational grocery store and asked if they'd like to meet up during Earth Week to discuss such a filter. They did not write back. I relayed a version of this conversation to someone involved in grocery store pressure campaigns at a high level, and that second person said, basically, that anything implying some of a store's food is better or more ethical than other options is going to be a nonstarter.

On the other hand, you've had some initial successes and it seems some grocery stores are already doing this! So I really hope it's plausible. If you're interested, I'm happy to flesh out the details of these prior interactions privately.

Probably because life-saving interventions do not scale this well. It's perfectly plausible that some lives can be saved for $1600 in expectation, but millions of them? No.

Peter Rossi’s Iron Law of Evaluation: the “expected value of any net impact assessment of any large scale social program is zero.” If there were something that did scale this well, it would be a gigantic revolution in development economics. For discussion, see Vivalt (2020), Duflo (2004), and, in a slightly different but theoretically isomorphic context, Stevenson (2023).

On the other hand, Sania (the CEO) is making a weaker claim: "your ability...is compromised" is not the same as "without that funding, 1.1 million people will die." That's why she's the CEO: she knows how to make a nonspecific claim seem urgent and let the listener/reader assume a stronger version.

Hi Jeff, I think we're talking about the same lifespan: my friend was referring to 1 year of continuous use (he works in industrial applications).

Earlier this year, I sent a Works in Progress piece about Far UV to a friend who is a materials science engineer and happens to work in UV applications (he once described his job as "literally watching paint dry," i.e. checking how long it takes for a UV lamp to dry a coat of paint on your car). I asked:

I'm interested in the comment that there's no market for these things because there's no public health authority to confer official status on them. That doesn't really make sense to me. If you wanted to market your airplane as the most germ-free or whatever, you could just point to clinical trials and not mention the FDA. Does the FDA or whoever really have independent status to bestow that's so powerful?

Friend replied:

Certain UV disinfection applications are quite popular, but indoor air treatment is one of the more challenging ones. One issue is that these products only can offer "the most germ-free" environment, the amount of protection is not really quantifiable. If a sick person coughed in my direction would an overhead UV lamp stop the germs from reaching me? Probably not...

...far-UVC technology has some major limitations. Relatively high cost per unit, most average only 1 year lifetime or less with decreasing efficacy over time, have to replace the entire system when it's spent, adds to electricity cost when in use, and you need to install a lot of them for it to be effective because when run at too high power levels they produce large amounts of hazardous ozone...

NIST has been working on establishing some standards for measuring UV output and the effectiveness of these systems for the last few years. Doesn't seem to be helping too much with convincing the general public. Covid was the big chance for these technologies to spread, and they did in some places like airports, just not everywhere

You can/should take this with a grain of salt. On the other hand, I generally believe that some EAs tend to be very credulous towards solutions that promise a high amount of efficacy on core cause areas, and operate with a mental model that the missing ingredient for scale is more often money than culture/mechanics: that everything is like bed nets. By contrast, I believe that if some solution looks very promising to outsiders but remains in limited use -- e.g. kangaroo care for preemies in Nigeria or removing lead from turmeric in Bangladesh -- there is likely a deep reason for that that's only legible to insiders, i.e. people with a lot of local context. Here, I suspect that many of us don't have a strong understanding of the costs, limitations, true efficacy, and logistical difficulties of UV light.

That's my epistemology. But if someone wants to fund and run an RCT testing the effects of, say, a cluster of aerolamps on covid cases at a big public event, I'd be happy to consult on design, measurement strategy, IRB approval, etc. (Gotta put that university affiliation to use on something!)

It's an interesting question.

From the POV of our core contention -- that we don't currently have a validated, reliable intervention to deploy at scale -- whether this is because of absence of evidence (AoE) or evidence of absence (EoA) is hard to say. I don't have an overall answer, and ultimately both roads lead to "unsolved problem."

We can cite good arguments for EoA (these studies are stronger than the norm in the field but show weaker effects, and that relationship should be troubling for advocates) or AoE (we're not talking about very many studies at all), and ultimately I think the line between the two is in the eye of the beholder.

Going approach by approach, my personal answers are:

- choice architecture is probably AoE: it might work better than expected, but we just don't learn very much from 2 studies (I am working on something about this separately)

- the animal welfare appeals are more EoA, esp. those from animal advocacy orgs

- social psych approaches: I'm skeptical of these, but there weren't a lot of high-quality papers, so I'm not so sure (see here for a subsequent meta-analysis of dynamic norms approaches).

- I would recommend health appeals for older folks and environmental appeals for Gen Z. So there I'd say we have evidence of efficacy, but you should expect effects on the order of a few percentage points.

Were I discussing this specifically with a funder, I would say, if you're going to do one of the meta-analyzed approaches -- psych, nudge, environment, health, or animal welfare, or some hybrid thereof -- you should expect small effect sizes unless you have some strong reason to believe that your intervention is meaningfully better than the category average. For instance, animal welfare appeals might not work in general, but maybe watching Dominion is unusually effective. However, as we say in our paper, there are a lot of cool ideas that haven't been tested rigorously yet, and from the point of view of knowledge, I'd like to see those get funded first.
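To make the "expect small effects unless you have a strong reason to think otherwise" heuristic concrete, here is a minimal sketch assuming a simple normal-normal (precision-weighted) update. This model is an illustrative assumption, not the method used in our paper. The category average plays the role of the prior, and one promising study updates it. The function name `shrink_toward_category` is hypothetical, and every number below is a made-up standardized effect size, not an estimate from the meta-analysis.

```python
import math


def shrink_toward_category(prior_mean, prior_sd, study_estimate, study_se):
    """Hypothetical helper: normal-normal update of a category-average prior.

    Each source is weighted by its precision (1 / variance), so a noisy
    single study moves the estimate only a little away from the category
    average. Returns (posterior_mean, posterior_sd).
    """
    prior_precision = 1.0 / prior_sd ** 2
    study_precision = 1.0 / study_se ** 2
    posterior_precision = prior_precision + study_precision
    posterior_mean = (
        prior_precision * prior_mean + study_precision * study_estimate
    ) / posterior_precision
    return posterior_mean, math.sqrt(1.0 / posterior_precision)


# Made-up inputs: the category averages a small effect (0.05 SD),
# and a small pilot of a new intervention reports a large one (0.40 SD).
mean, sd = shrink_toward_category(
    prior_mean=0.05, prior_sd=0.05, study_estimate=0.40, study_se=0.20
)
print(f"posterior mean = {mean:.3f}, posterior sd = {sd:.3f}")
```

With these invented numbers, the pilot's 0.40 gets pulled down to roughly 0.07, because the prior is far more precise than one noisy study. A much tighter study (smaller `study_se`) or a wider prior would let the new result count for more; that is roughly what "a strong reason to believe your intervention is better than the category average" would look like under this assumed model.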

I agree, Ben, that tractability is low. I am also a bit skeptical of anything saying cats can be vegan without a lot of qualifications.

However, in 2023 I did a bit of research into how many animals are actually killed for dog food, and I got to about 3 billion/year using a bunch of approximations: https://forum.effectivealtruism.org/posts/zihL7a4xbTnCmuL2L/towards-non-meat-diets-for-domesticated-dogs. So that's not 6 billion, but it's still a lot. And I'm only talking about dogs.

I also think that animal byproducts (ABPs), if they didn't go into dog food, would just go into something else. I think it is a mistake to assume that demand for ABPs does not drive demand for meat. The "byproduct" designation is convenient when distinguishing animal parts of different value, but at the end of the day, if you're paying someone for animal parts, you're paying them to slaughter animals. Here's a little bit I wrote about this for an op-ed I never published:

The Alexander et al. (2020) paper was about the best thing I could find on the subject in general.