All of TylerMaule's Comments + Replies

Should GiveWell offer Animal Welfare regrants on an opt-in basis?

The GiveWell FAQ (quoted below) suggests that GiveWell focuses exclusively on human-directed interventions primarily for reasons of specialization—i.e., avoiding duplication of work already done by Coefficient Giving and others—rather than due to a principled objection to recommending animal-focused charities. If GiveWell is willing to recommend these organizations when asked, why not reduce the friction a bit?

A major part of GiveWell’s appeal has been its role as an “index fund for charities...

Accepting that this campaign was debatable ex-ante and disappointing ex-post, I think it's helpful to view it in the context of the broader reality:

Given the dire[1] status quo, I am generally grateful when thoughtful, conscientious actors like FarmKind take the initiative to try new approaches in the hope of unlocking positive change for animals. Although this campaign didn't achieve its aims, it’s good to see that it generated some useful lessons—and, most importantly, I hope the harsh reaction won’t unduly discourage future attempts to test n...

Thanks for posting this! My thoughts on "what's going on here?":

- The simplest high-level explanation is that the surveys capture 'thought leaders', which is distinct from the set of people who control the money, and these groups disagree on allocation. I guess my prior expectation would not have been that these numbers match, but perhaps I'm in the minority there?

- More specifically, as you mentioned, Coefficient Giving is responsible for ~2/3 of the grant dollars in this data set, so to a significant extent this reduces to comparing Dustin and Cari's preferr

Thanks for bringing this up, Aidan. I raised this topic in both of my versions of the Historical Funding post, and I remain interested in doing this properly if and when I get sufficient time and data. What I have found so far is (a) accessing the bottom-up data for ~all relevant charities seems to be much more difficult than I would have imagined, and (b) I've floated this project to a few people and the interest seemed lukewarm (probably mostly due to their sense of intractability).

Thanks! Small correction: Animal Welfare YTD is labeled as $53M, when it looks like the underlying data point is $17M (source and 2023 full-year projections here)

Both posts contain a more detailed breakdown of inputs, but in short:

- 80k seems to include every entry in the Open Phil grants database, whereas my sheet filters out items such as criminal justice reform that don't map to the type of funding I'm attempting to track.

- They also add a couple of 'best guess' terms to estimate unknown/undocumented funding sources; I do not.

If you expect to take in $3-6M by the end of this year, borrowing say $300k against that already seems totally reasonable.

Not sure if this is possible, but I for one would be happy to donate to LTFF today in exchange for a 120% regrant to the Animal Welfare Fund in December[1]

[1] This would seem to be an abuse of the Open Phil matching, but perhaps that chunk can be exempt.

So these are all reasons that funding upfront is strictly better than in chunks, and I certainly agree. I'm just saying that as a donor, I would have a strong preference for funding 14 researchers in this suboptimal manner vs 10 of similar value paid upfront, and I'm surprised that LTFF doesn't agree.

Perhaps there are some cases where funding in chunks would be untenable, but that doesn't seem to be true for most on the list. Again, I'm not saying there is no cost to doing this, but if the space is really as funding-constrained as you say, 40% of value is an awful lot to give up. Is there not every chance that your next batch of applicants will be just as good, and money will again be tight?

A quick scan of the marginal grants list tells me that many (most?)[1] of these take the form of a salary or stipend over the course of 6-12 months. I don't understand how the time-value of money could be so out of whack in this case; surely you could grant, say, half of the requested amount, then do another round in three months once the large donors come around?[2]

...IDK 160% annualized sounds a bit implausible. Surely in that world someone would be acting differently (e.g. recurring donors would roll some budget forward or take out a loan)?

I would be curious to hear from someone on the recipient side who would genuinely prefer $10k in hand to $14k in three months' time.
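For concreteness, here is a small Python sketch of the rate implied by preferring $10k now over $14k in three months. It assumes the 160% figure above is a simple (non-compounded) annualization of a 40% quarterly return; compounding quarterly would imply an even higher rate. The function name `implied_rates` is hypothetical.

```python
# Hypothetical sketch: the annualized rate implied by being indifferent
# between `now` dollars today and `later` dollars after `months` months.

def implied_rates(now: float, later: float, months: float) -> tuple[float, float]:
    """Return (simple, compound) annualized rates."""
    growth = later / now                       # e.g. 14k / 10k = 1.4
    periods_per_year = 12 / months             # e.g. 4 quarters per year
    simple = (growth - 1) * periods_per_year   # non-compounded annualization
    compound = growth ** periods_per_year - 1  # compounded annualization
    return simple, compound

simple, compound = implied_rates(10_000, 14_000, 3)
print(f"simple: {simple:.0%}, compound: {compound:.0%}")
```

The same 1.4 ratio underlies the "14 researchers vs 10 paid upfront" comparison above.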

Regarding the funding aspect:

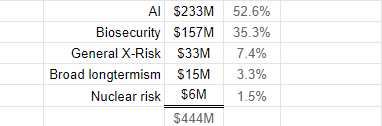

- As far as I can tell, Open Phil has always given the majority of their budget to non-longtermist focus areas.

- This is also true of the EA portfolio more broadly.

- GiveWell has made grants to less established orgs for several years, and that amount has increased dramatically of late.

I agree in the context of what I call deciding between different "established charities with fairly smooth marginal utility curves," which I think is more analogous to prediction markets or fantasy football or (for that matter) picking fake stocks.

But as someone who in the past has applied for funding for projects (though not on Manifund), if someone said, "hey we have 50k (or 500k) to allocate and we want to ask the following questions about your project," I'd be pretty willing to either reply to their emails or go on a call.

If on the other hand the...

People are not going to get the experience of making consequential decisions with $50, particularly if they're funding individuals and small projects (as opposed to established charities with fairly smooth marginal utility curves like AMF).

That said, I'm sympathetic to the same argument for $5k or $10k.

Substantially less money, through a combination of Meta stock falling, FTX collapsing, and general market/crypto downturns[3]...

[3] Although Meta stock is back up since I first wrote this; I would be appreciative if someone could do an update on EA funding

Looking at this table, I expect the non-FTX total is about the same[1]; I'd wager that there is more funding committed now than during the first ~70% of the second wave period.[2]

I think most people have yet to grasp the extent to which markets have bounced back:

- The S&P 500 Total Return Index is within

I second these suggestions. To get more specific re cause areas:

- Each source uses a different naming convention (and some sources are just blank)

- I'd suggest renaming that column 'labels' and instead mapping to just a few broadly defined buckets which add up to 100%—I've already done much of that mapping here
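A minimal sketch of the suggested mapping, assuming each row carries a free-text cause-area label and a dollar amount. The bucket names, label strings, and amounts are hypothetical placeholders, not the mapping from the linked sheet; blank or unrecognized labels fall into an "Other" bucket so the shares still add up to 100%.

```python
from collections import defaultdict

# Hypothetical label -> bucket mapping; real source labels will differ.
BUCKETS = {
    "global health": "Global Health & Development",
    "givewell": "Global Health & Development",
    "animal welfare": "Animal Welfare",
    "farm animals": "Animal Welfare",
    "ai safety": "Longtermism / GCR",
    "biosecurity": "Longtermism / GCR",
}

def bucket_shares(grants):
    """grants: iterable of (label, amount). Returns {bucket: share of total}."""
    totals = defaultdict(float)
    for label, amount in grants:
        key = (label or "").strip().lower()   # normalize naming differences
        totals[BUCKETS.get(key, "Other")] += amount
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {bucket: amt / grand_total for bucket, amt in totals.items()}

example = [("AI safety", 50.0), ("Farm animals", 20.0), ("GiveWell", 25.0), ("", 5.0)]
shares = bucket_shares(example)
```

Keeping the original strings in a separate 'labels' column preserves detail while the buckets stay mutually exclusive.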

Borrowing money if timelines are short seems reasonable but, as others have said, I'm not at all convinced that betting on long-term interest rates is the right move. In part for this reason, I don't think we should read financial markets as asserting much at all about AI timelines. A couple of more specific points:

...Remember: if real interest rates are wrong, all financial assets are mispriced. If real interest rates “should” rise three percentage points or more, that is easily hundreds of billions of dollars worth of revaluations. It is unlikely that shar

I'm definitely not suggesting a 98% chance of zero, but I do expect the 98% rejected to fare much worse than the 2% accepted on average, yes. The data as well as your interpretation show steeply declining returns even within that top 2%.

I don't think I implied anything in particular about the qualification level of the average EA. I'm just noting that, given the skewness of this data, there's an important difference between just clearing the YC bar and being representative of that central estimate.

A couple of nitpicky things, which I don't think change the bottom line, and have opposing sign in any case:

- In most cases, quite a bit of work has gone in prior to starting the YC program (perhaps about a year on average?) This might reduce the yearly value by 10-20%

- I think the 12% S&P 500 return cited is the arithmetic average of yearly returns. The geometric average, i.e., the realized rate of return, should be more like 10.4%
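To illustrate the arithmetic/geometric distinction, here is a short Python sketch. The yearly returns are made up for illustration and are not S&P 500 data. Because the geometric mean of the growth factors never exceeds their arithmetic mean, the realized (compounded) return is at most the arithmetic average, and the gap widens with volatility.

```python
import math

def arithmetic_mean(returns):
    # Simple average of yearly returns.
    return sum(returns) / len(returns)

def geometric_mean(returns):
    # Compound the yearly growth factors, then take the n-th root.
    growth = math.prod(1 + r for r in returns)
    return growth ** (1 / len(returns)) - 1

returns = [0.30, -0.20, 0.25, -0.10]  # hypothetical yearly returns
print(f"arithmetic: {arithmetic_mean(returns):.2%}")
print(f"geometric:  {geometric_mean(returns):.2%}")
```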

I worry that this presents the case for entrepreneurship as much stronger than it is[1]

- The sample here is companies that went through Y-Combinator, which has a 2% acceptance rate[2]

- As stated in the post, roughly all of the value comes from the top 8% of these companies

- To take it one step further, 25% of the total valuation comes from the top 0.1%, i.e. the top 5 companies (incl. Stripe & Instacart)

So at best, if a founder is accepted into YC, and talented enough to have the same odds of success as a random prior YC founder, $4M/yr might be a reasonable...

Yeah, I think we're on the same page; my point is just that it only takes a single-digit multiple to swamp that consideration, and my model is that charities aren't usually that close. For example, GiveWell thinks its top charities are ~8x GiveDirectly, so taken at face value a match that displaces 1:1 from GiveDirectly would be 88% as good as a 'pure counterfactual'
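The 88% figure follows from netting out the displaced dollar: if a top charity is ~8x GiveDirectly, a matched dollar that pulls one GiveDirectly dollar away nets 8 − 1 = 7 units, i.e. 7/8 of a pure counterfactual. A minimal Python sketch, with a hypothetical helper name:

```python
def match_vs_pure_counterfactual(multiplier: float, displaced_multiplier: float = 1.0) -> float:
    """Value of a matched dollar that displaces one dollar worth `displaced_multiplier`,
    relative to a purely counterfactual matched dollar worth `multiplier`."""
    return (multiplier - displaced_multiplier) / multiplier

ratio = match_vs_pure_counterfactual(8.0)  # top charity ~8x GiveDirectly
print(f"{ratio:.1%}")  # 87.5%
```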

- Most matches are of the free-for-all variety, meaning the funds will definitely go to some charity, just a question of who gets there first (e.g. Facebook & Every.org). While this might sound like a significant qualifier, it's almost as good as a pure counterfactual unless you believe that all nonprofits are ~equally effective.

- The 'worst case' is a matching pool restricted to one specific org, where the funds will presumably go to that org regardless, so the match doesn't really add anything to your donation.

- Conversely, as Lizka noted, even the best counterfactual on

Yes - the best way to figure out if you’re a good fit is to apply.

It's low cost and we've developed a pretty good understanding of who will do well. It's not reasonable to expect to know whether you'd be a good fit for doing something that you've never done. So I'd suggest you submit an application and see how far you get.

I will add, though, that not getting through doesn't mean you're NOT a good fit, it just means we had some concerns or reservations given our particular approach. However if you do get in you can be confident you ARE a good fit...

Relevant excerpt from his prior 80k interview:

...Rob Wiblin: ...How have you ended up five or 10 times happier? It sounds like a large multiple.

Will MacAskill: One part of it is being still positive, but somewhat close to zero back then...There’s the classics, like learning to sleep well and meditate and get the right medication and exercise. There’s also been an awful lot of just understanding your own mind and having good responses. For me, the thing that often happens is I start to beat myself up for not being productive enough or not being smart enough or

Yeah, it looked like grants had been announced roughly through June, so the methodology here was to divide by the proportion of grants dated Jan-Jun in prior years (0.49).
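A minimal Python sketch of that projection, assuming prior-year grants are available as (month, amount) pairs. The helper names and the $10M YTD figure are hypothetical; only the 0.49 share comes from the comment above.

```python
def share_through_june(prior_grants):
    """prior_grants: list of (month, amount) from earlier years."""
    first_half = sum(amount for month, amount in prior_grants if 1 <= month <= 6)
    total = sum(amount for _, amount in prior_grants)
    return first_half / total

def project_full_year(ytd_total: float, share: float = 0.49) -> float:
    """Scale a Jan-Jun total up by the historical share of grants dated Jan-Jun."""
    return ytd_total / share

projected = project_full_year(10.0)  # hypothetical $10M YTD -> roughly $20.4M
```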

FTX has so far granted 10x more to AI stuff than OPP

This is not true; sorry, the Open Phil database labels are a bit misleading.

It appears that there is a nested structure to a couple of the Focus Areas, where e.g. 'Potential Risks from Advanced AI' is a subset of 'Longtermism', and when downloading the database only one tag is included. So for example, this one grant alone from March '22 was over $13M, with both tags applied, and shows up in the .csv as only 'Longtermism'. Edit: this is now flagged more prominently in the spreadsheet.

EA does seem a bit overrepresented (sort of acknowledged here).

Possible reasons: (a) sharing was encouraged post-survey, with some forewarning; (b) EAs might be more likely than average to respond to a 'Student Values Survey'?

Re footnote, the only public estimate I've seen is $400k-$4M here, so you're in the same ballpark.

Personally I think $3M/y is too high, though I too would like to see more opinions and discussion on this topic.

I enjoyed this post and the novel framing, but I'm confused as to why you seem to want to lock in your current set of values—why is current you morally superior to future you?

Do I want my values changed to be more aligned with what’s good for the world? This is a hard philosophical question, but my tentative answer is: not inherently – only to the extent that it lets me do better according to my current values.

Speaking for myself personally, my values have changed quite a bit in the past ten years (by choice). Ten-years-ago-me would likely be doing somethi...

Some of the comments here are suggesting that there is in fact tension between promoting donations and direct work. The implication seems to be that while donations are highly effective in absolute terms, we should intentionally downplay this fact for fear that too many people might 'settle' for earning to give.

Personally, I would much rather employ honest messaging and allow people to assess the tradeoffs for their individual situation. I also think it's important to bear in mind that downplaying cuts both ways—as Michael points out, the meme that direct ...

Offsetting the carbon cost of going from an all-chicken diet to an all-beef diet would cost $22 per year, or about 5 cents per beef-based meal. Since you would be saving 60 chickens, this is three chickens saved per dollar, or one chicken per thirty cents. A factory farmed chicken lives about thirty days, usually in extreme suffering. So if you value preventing one day of suffering by one chicken at one cent, this is a good deal.
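The arithmetic in the quoted passage can be checked directly. This Python sketch uses only the figures stated in the quote ($22/year, 60 chickens, ~30-day lifespan); the variable names are illustrative.

```python
# Figures taken from the quoted passage.
offset_cost_per_year = 22.0     # $ to offset the carbon cost of all-chicken -> all-beef
chickens_saved_per_year = 60
chicken_lifespan_days = 30

chickens_per_dollar = chickens_saved_per_year / offset_cost_per_year     # ~2.7 ("three")
dollars_per_chicken = offset_cost_per_year / chickens_saved_per_year     # ~$0.37 ("thirty cents")
cents_per_chicken_day = 100 * dollars_per_chicken / chicken_lifespan_days
```

With the unrounded figures this comes to roughly 1.2 cents per chicken-day; the quote's rounding to thirty cents per chicken gives about 1 cent.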

I didn't read the goal here as literally to score points with future people, though I agree that the post is phrased such that it is implied that future ethical views will be superior.

Rather, I think the aim is to construct a framework that can be applied consistently across time—avoiding the pitfalls of common-sense morality both past and future.

In other words, this could alternatively be framed as 'backtesting ethics' or something, but 'future-proofing' speaks to (a) concern about repeating past mistakes and (b) personal regret in the future.

I was especially interested in a point/thread you mentioned about people perceiving many charities as having similar effectiveness and that this may be an impediment to people getting interested in effective altruism

See here

...A recent survey of Oxford students found that they believed the most effective global health charity was only ~1.5x better than the average — in line with what the average American thinks — while EAs and global health experts estimated the ratio is ~100x. This suggests that even among Oxford students, where a lot of outreach has b

- As Jackson points out, those willing to go the 'high uncertainty/high upside' route tend to favor far future or animal welfare causes. Even if we think these folks should consider more medium-term causes, comparing cost-effectiveness to GiveWell top charities may be inapposite.

- It seems like there is support for hits-based policy interventions in general, and Open Phil has funded at least some of this.

- The case for growth was based on historical success of pro-growth policy. Not only is this now less neglected, but much of the low-hanging fruit has been take

Potential Animal Welfare intervention: encourage the ASPCA and others to scale up their FAW budget

I’ve only recently come to appreciate how large the budgets are for the ASPCA, Humane World (formerly HSUS), and similar large, broad-based animal charities. Based on a quick (LLM-assisted) scan of their public filings, they appear to have a combined annual budget of ~$1Bn, most of which is focused on companion animals.

Interestingly, both the ASPCA and Humane World explicitly mention factory farming as one of their areas of concern. Yet, based on available data, it looks lik...