All of Eric Neyman's Comments + Replies

In my vocabulary, "abolitionist" means "person who is opposed to slavery" (e.g. "would vote to abolish slavery"). My sense is that this is the common meaning of the term, but let me know if you disagree.

It seems, then, that the analogy would be "person who is opposed to factory farming" (e.g. "would vote to outlaw factory farms"), instead of "vegetarian" and "animal donor". The latter two are much higher standards, as they require personal sacrifice (in the same way that "not consuming products made with slave labor" was a much higher standard -- one that ...

In October, I wrote a post encouraging AI safety donors to donate to the Alex Bores campaign. Since then, I've spent a bunch of time thinking about the best donations for making the long-term future go well, and I still think that the Alex Bores campaign is the best donation opportunity for U.S. citizens/permanent residents. Under my views, donations to his campaign made this month are about 25x better than donations to standard AI safety 501(c)(3) organizations like LTFF.[1] I also think that donations made after December 31st are substantially almos...

In my memory, the main impetus was that a couple of leading AI safety ML researchers started making the case for 5-year timelines. They were broadly qualitatively correct and remarkably insightful (promoting the scaling-first worldview), but obviously quantitatively too aggressive. And AlphaGo and AlphaZero had freaked people out, too.

A lot of other people at the time (including close advisers to OP folks) had 10-20yr timelines. My subjective impression was that people in the OP orbit generally had more aggressive timelines than Ajeya's report did.

[Not tax/financial advice]

I agree, especially for donors who want to give to 501(c)(3)'s, since a lot of Anthropic equity is pledged to c3's.

Another consideration for high-income donors that points in the same direction: if I'm not mistaken, 2025 is the last tax year where donors in the top tax bracket (AGI > $600k) can deduct up to 60% of their AGI; the One Big Beautiful Bill Act lowers this number to 35%. (Someone should check this, though, because it's possible that I'm misinterpreting the rule.)

As one of Zach's collaborators, I endorse these recommendations. If I had to choose among the 501c3s listed above, I'd choose Forethought first and the Midas Project second, but these are quite weakly held opinions.

I do recommend reaching out about nonpublic recommendations if you're likely to give over $20k!

Nancy Pelosi is retiring; consider donating to Scott Wiener.

[Link to donate; or consider a bank transfer option to avoid fees, see below.]

Nancy Pelosi has just announced that she is retiring. Previously I wrote up a case for donating to Scott Wiener, who is running for her seat, in which I estimated a 60% chance that she would retire. While I recommended donating on the day that he announced his campaign launch, I noted that donations would look much better ex post in worlds where Pelosi retires, and that my recommendation to donate on launch day was sensi...

Yup! Copying over from a LessWrong comment I made:

Roughly speaking, I'm interested in interventions that cause the people making the most important decisions about how advanced AI is used once it's built to be smart, sane, and selfless. (Huh, that was some convenient alliteration.)

- Smart: you need to be able to make really important judgment calls quickly. There will be a bunch of actors lobbying for all sorts of things, and you need to be smart enough to figure out what's most important.

- Sane: smart is not enough. For example, I wouldn't trust Elon Musk wit

People are underrating making the future go well conditioned on no AI takeover.

This deserves a full post, but for now a quick take: in my opinion, P(no AI takeover) = 75%, P(future goes extremely well | no AI takeover) = 20%, and most of the value of the future is in worlds where it goes extremely well (and comparatively little value comes from locking in a world that's good-but-not-great).

Under this view, an intervention is good insofar as it affects P(no AI takeover) * P(things go really well | no AI takeover). Suppose that a given intervention can chang...
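To make the product framing concrete, here is a minimal sketch using the 75% and 20% figures from the quick take. The one-percentage-point shifts are illustrative assumptions of this example, not numbers from the original, and the function name is hypothetical.

```python
def p_great_future(p_no_takeover: float, p_great_given_no_takeover: float) -> float:
    """P(no AI takeover) * P(future goes extremely well | no AI takeover)."""
    return p_no_takeover * p_great_given_no_takeover


# Baseline figures stated in the quick take.
baseline = p_great_future(0.75, 0.20)

# Assumed for illustration only: each intervention moves one factor
# by a single percentage point.
gain_from_takeover_work = p_great_future(0.76, 0.20) - baseline
gain_from_quality_work = p_great_future(0.75, 0.21) - baseline

print(f"baseline: {baseline:.4f}")
print(f"gain from +1pt on P(no takeover): {gain_from_takeover_work:.4f}")
print(f"gain from +1pt on P(great | no takeover): {gain_from_quality_work:.4f}")
```

Under these assumed shifts, a point added to the smaller factor is multiplied by the larger one, so it moves the product more. Whether a real intervention can buy a point on either factor at comparable cost is a separate question that this sketch does not address.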

California state senator Scott Wiener, author of AI safety bills SB 1047 and SB 53, just announced that he is running for Congress! I'm very excited about this.

It’s an uncanny, weird coincidence that the two biggest legislative champions for AI safety in the entire country announced their bids for Congress just two days apart. But here we are.*

In my opinion, Scott Wiener has done really amazing work on AI safety. SB 1047 is my absolute favorite AI safety bill, and SB 53 is the best AI safety bill that has passed anywhere in the country. He's been a dedicat...

In the past, I've had:

- One instance of the campaign emailing me to set up a bank transfer. This... seems to have happened 9 months after the candidate lost the primary, actually? Which is honestly absurdly long; I don't know if it's typical.

- One time, I think the campaign just sent a check to the address I used when I donated? But I don't remember for sure. My guess is that they would have tried to reach me if I didn't cash the check, but I'm not sure. I vaguely recall that the check was sent within a few months of the candidate losing the primary, but I'm n

I just did a BOTEC, and if I'm not mistaken, 0.0000099999999999999999999999999999999999999999988% is incorrect, and instead should be 0.0000099999999999999999999999999999999999999999998%. This is a crux, as it would mean that the SWWM pledge is actually 2x less effective than the GWWC pledge.

I tried to write out the calculations in this comment; in the process of doing so, I discovered that there's a length limit to EA Forum comments, so unfortunately I'm not able to share my calculations. Maybe you could share yours and we could double-crux?

Did you assume the axiom of choice? That's a reasonable modeling decision -- our estimate used an uninformative prior over whether it's true, false, or meaningless.

Hi Karthik,

Your comment inspired me to write my own quick take, which is here. Quoting the first paragraph as a preview:

I feel pretty disappointed by some of the comments (e.g. this one) on Vasco Grilo's recent post arguing that some of GiveWell's grants are net harmful because of the meat eating problem. Reflecting on that disappointment, I want to articulate a moral principle I hold, which I'll call non-dogmatism. Non-dogmatism is essentially a weak form of scope sensitivity.

I decided to spin off a quick take rather than replying here, because I think it...

I feel pretty disappointed by some of the comments (e.g. this one) on Vasco Grilo's recent post arguing that some of GiveWell's grants are net harmful because of the meat eating problem. Reflecting on that disappointment, I want to articulate a moral principle I hold, which I'll call non-dogmatism. Non-dogmatism is essentially a weak form of scope sensitivity.[1]

Let's say that a moral decision process is dogmatic if it's completely insensitive to the numbers on either side of the trade-off. Non-dogmatism rejects dogmatic moral decision processes.

A central ...

I haven't looked at your math, but I actually agree, in the sense that I also got about 1 in 1 million when doing the estimate again a week before the election!

I think my 1 in 3 million estimate was about right at the time that I made it. The information that we gained between then and 1 week before the election was that the election remained close, and that Pennsylvania remained the top candidate for the tipping point state.

Oh cool, Scott Alexander just said almost exactly what I wanted to say about your #2 in his latest blog post: https://www.astralcodexten.com/p/congrats-to-polymarket-but-i-still

I don't have time to write a detailed response now (might later), but wanted to flag that I either disagree or "agree denotatively but object connotatively" with most of these. I disagree most strongly with #3: the polls were quite good this year. National and swing state polling averages were only wrong by 1% in terms of Trump's vote share, or in other words 2% in terms of margin of victory. This means that polls provided a really large amount of information.

(I do think that Selzer's polls in particular are overrated, and I will try to articulate that case more carefully if I get around to a longer response.)

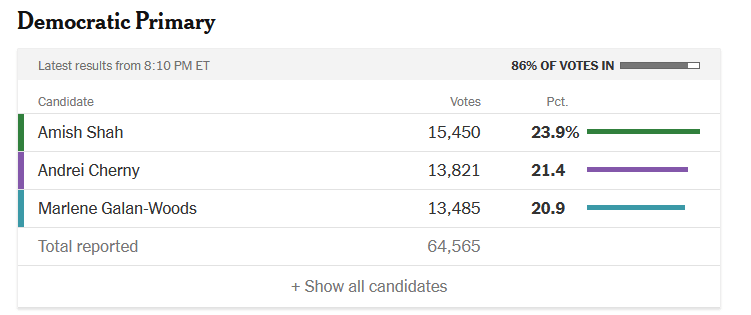

I wanted to highlight one particular U.S. House race that Matt Yglesias mentions:

Amish Shah (AZ-01): A former state legislator, Amish Shah won a crowded primary in July. He faces Rep. David Schweikert, a Republican who supported Trump's effort to overturn the 2020 presidential election. Primaries are costly, and in Shah’s pre-primary filing, he reported just $216,508.02 cash on hand compared to $1,548,760.87 for Schweikert.

In addition to running in a swing district, Amish Shah is an advocate for animal rights. See my quick take about him here.

Yeah, it was intended to be a crude order-of-magnitude estimate. See my response to essentially the same objection here.

Thanks for those thoughts! Upvoted and also disagree-voted. Here's a slightly more thorough sketch of my thought in the "How close should we expect 2024 to be" section (which is the one we're disagreeing on):

- I suggest a normal distribution with mean 0 and standard deviation 4-5% as a model of election margins in the tipping-point state. If we take 4% as the standard deviation, then the probability of any given election being within 1% is 20%, and the probability of at least 3/6 elections being within 1% is about 10%, which is pretty high (in my mind, not n
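The two probabilities in the bullet above can be checked with a short calculation. This is a sketch under the stated model (normal margins with mean 0 and standard deviation 4%), plus the added assumption that the six elections are independent draws; the helper names are hypothetical.

```python
import math


def normal_cdf(x: float, mean: float = 0.0, sd: float = 1.0) -> float:
    """CDF of a normal distribution, computed via the error function."""
    return 0.5 * (1.0 + math.erf((x - mean) / (sd * math.sqrt(2.0))))


def prob_within(margin: float, sd: float) -> float:
    """P(|X| < margin) for X ~ Normal(0, sd)."""
    return normal_cdf(margin, 0.0, sd) - normal_cdf(-margin, 0.0, sd)


def prob_at_least(k: int, n: int, p: float) -> float:
    """P(at least k successes in n independent trials with success prob p)."""
    return sum(math.comb(n, j) * p**j * (1.0 - p) ** (n - j) for j in range(k, n + 1))


# Tipping-point margin modeled as Normal(0, 4%); "close" means within 1%.
p_close = prob_within(1.0, 4.0)

# Assumes the six elections are independent draws from the same model.
p_three_of_six = prob_at_least(3, 6, p_close)

print(f"P(single election within 1%): {p_close:.3f}")
print(f"P(at least 3 of 6 within 1%): {p_three_of_six:.3f}")
```

Both results land close to the rounded 20% and 10% figures quoted in the comment.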

Yeah I agree; I think my analysis there is very crude. The purpose was to establish an order-of-magnitude estimate based on a really simple model.

I think readers should feel free to ignore that part of the post. As I say in the last paragraph:

So my advice: if you're deciding whether to donate to efforts to get Harris elected, plug in my "1 in 3 million" estimate into your own calculation -- the one where you also plug in your beliefs about what's good for the world -- and see where the math takes you.

The page you linked is about candidates for the Arizona State House. Amish Shah is running for the U.S. House of Representatives. There are still campaign finance limits, though ($3,300 per election per candidate, where the primary and the general election count separately; see here).

Amish Shah is a Democratic politician who's running for Congress in Arizona. He appears to be a strong supporter of animal rights (see here).

He just won his primary election, and Cook Political Report rates the seat he's running for (AZ-01) as a tossup. My subjective probability that he wins the seat is 50% (Edit: now 30%). I want him to win primarily because of his positions on animal rights, and secondarily because I want Democrats to control the House of Representatives.

You can donate to him here.

(This comment is mostly cross-posted from Nuño's blog.)

In "Unflattering aspects of Effective Altruism", you write:

...Third, I feel that EA leadership uses worries about the dangers of maximization to constrain the rank and file in a hypocritical way. If I want to do something cool and risky on my own, I have to beware of the “unilateralist curse” and “build consensus”. But if Open Philanthropy donates $30M to OpenAI, pulls a not-so-well-understood policy advocacy lever that contributed to the US overshooting inflation in 2021, funds Anthropic[13] while Anthr

Thanks for asking! The first thing I want to say is that I got lucky in the following respect. The set of possible outcomes isn't the interior of the ellipse I drew; rather, it is a bunch of points that are drawn at random from a distribution, and when you plot that cloud of points, it looks like an ellipse. The way I got lucky is: one of the draws from this distribution happened to be in the top-right corner. That draw is working at ARC theory, which has just about the most intellectually interesting work in the world (for my interests) and is also just a...

Thanks -- I should have been a bit more careful with my words when I wrote that "measurement noise likely follows a distribution with fatter tails than a log-normal distribution". The distribution I'm describing is your subjective uncertainty over the standard error of your experimental results. That is, you're (perhaps reasonably) modeling your measurement as being the true quality plus some normally distributed noise. But -- normal with what standard deviation? There's an objectively right answer that you'd know if you were omniscient, but you don't, so ...

In general I think it's not crazy to guess that the standard error of your measurement is proportional to the size of the effect you're trying to measure

Take a hierarchical model for effects. Each intervention $i$ has a true effect $\theta_i$, and all the $\theta_i$ are drawn from a common distribution $G$. Now for each intervention, we run an RCT and estimate $\hat{\theta}_i = \theta_i + \epsilon_i$, where $\epsilon_i$ is experimental noise.

By the CLT, $\epsilon_i \sim N(0, \sigma^2/n)$, where $\sigma^2$ is the inherent sampling variance in your environment and $n$ is the sample size of your RCT. What you're saying is that $\sigma^2$ has the same o...
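The hierarchical setup described above (a true effect per intervention, plus RCT noise whose variance is the sampling variance divided by the sample size) can be simulated in a few lines. This is a sketch, not the commenter's own calculation: it assumes the common distribution of true effects is normal with standard deviation `tau`, and the parameter values and function name are made up for illustration.

```python
import random
import statistics


def simulate_estimates(num_interventions, tau, sigma, n, seed=0):
    """Draw true effects and noisy RCT estimates under a normal-normal model.

    tau:   sd of the common distribution of true effects (assumed normal here)
    sigma: sd of individual outcomes, so the noise sd is sigma / sqrt(n)
    n:     sample size of each RCT
    """
    rng = random.Random(seed)
    noise_sd = sigma / n**0.5
    true_effects = [rng.gauss(0.0, tau) for _ in range(num_interventions)]
    estimates = [effect + rng.gauss(0.0, noise_sd) for effect in true_effects]
    return true_effects, estimates


# Illustrative values: noise variance sigma^2 / n = 100 / 100 = 1, equal to tau^2.
true_effects, estimates = simulate_estimates(20000, tau=1.0, sigma=10.0, n=100)

var_true = statistics.pvariance(true_effects)
var_est = statistics.pvariance(estimates)
print(f"variance of true effects: {var_true:.2f}")
print(f"variance of estimates:    {var_est:.2f}")
```

With these values the estimates are roughly twice as spread out as the true effects, because the noise variance adds to the variance of the true effects. Raising `n` shrinks the noise term and pulls the two variances together.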

Let's take the very first scatter plot. Consider the following alternative way of labeling the x and y axes. The y-axis is now the quality of a health intervention, and it consists of two components: short-term effects and long-term effects. You do a really thorough study that perfectly measures the short-term effects, while the long-term effects remain unknown to you. The x-value is what you measured (the short-term effects); the actual quality of the intervention is the x-value plus some unknown number with mean zero and variance 1.

So whereas previously (i.e. in...

Great question -- you absolutely need to take that into account! You can only bargain with people who you expect to uphold the bargain. This probably means that when you're bargaining, you should weight "you in other worlds" in proportion to how likely they are to uphold the bargain. This seems really hard to think about and probably ties in with a bunch of complicated questions around decision theory.

This is probably my favorite proposal I've seen so far, thanks!

I'm a little skeptical that warnings from the organization you propose would have been heeded (especially by people who had no other sources of funding, for whom relying on FTX was the only option), but perhaps if the organization had sufficient clout, this would have put pressure on FTX to engage in less risky business practices.

I think this fails (1), but more confidently, I'm pretty sure it fails (2). How are you going to keep individuals from taking crypto money? See also: https://forum.effectivealtruism.org/posts/Pz7RdMRouZ5N5w5eE/ea-should-taboo-ea-should

I think my crux with this argument is "actions are taken by individuals". This is true, strictly speaking; but when e.g. a member of U.S. Congress votes on a bill, they're taking an action on behalf of their constituents, and affecting the whole U.S. (and often world) population. I like to ground morality in questions of a political philosophy flavor, such as: "What is the algorithm that we would like legislators to use to decide which legislation to support?". And as I see it, there's no way around answering questions like this one, when decisions have si...

I guess I have two reactions. First, which of the categories are you putting me in? My guess is you want to label me as a mop, but "contribute as little as they reasonably can in exchange" seems an inaccurate description of someone who's strongly considering devoting their career to an EA cause; also I really enjoy talking about the weird "new things" that come up (like idk actually trade between universes during the long reflection).

My second thought is that while your story about social gradients is a plausible one, I have a more straightforward story ab...

If it helps clarify, the community builders I'm talking about are some of the Berkeley(-adjacent) longtermist ones. As some sort of signal that I'm not overstating my case here, one messaged me to say that my post helped them plug a "concept-shaped hole", a la https://slatestarcodex.com/2017/11/07/concept-shaped-holes-can-be-impossible-to-notice/

Great comment, I think that's right.

I know that "give your other values an extremely high weight compared with impact" is an accurate description of how I behave in practice. I'm kind of tempted to bite that same bullet when it comes to my extrapolated volition -- but again, this would definitely be biting a bullet that doesn't taste very good (do I really endorse caring about the log of my impact?). I should think more about this, thanks!

Yup -- that would be the limiting case of an ellipse tilted the other way!

The idea for the ellipse is that what EA values is correlated (but not perfectly) with my utility function, so (under certain modeling assumptions) the space of most likely career outcomes is an ellipse, see e.g. here.

Note that covid travel restrictions may be a consideration. For example, New Zealand's borders are currently closed to essentially all non-New Zealanders and are scheduled to remain closed to much of the world until July:

Historically, there have been ~24 Republicans vs ~19 Democrats as senators (and 1 independent) from Oregon, so partisan affiliation doesn't seem that important.

A better way of looking at this is the partisan lean of his particular district. The answer is D+7, meaning that in a neutral environment (i.e. an equal number of Democratic and Republican votes nationally), a Democrat would be expected to win this district by 7 percentage points.

This year is likely to be a Republican "wave" year, i.e. Republicans are likely to outperform Democrats (the party ...

Hi! I'm an author of this paper and am happy to answer questions. Thanks to Jsevillamol for the summary!

A quick note regarding the context in which the extremization factor we suggest is "optimal": rather than taking a Bayesian view of forecast aggregation, we take a robust/"worst case" view. In brief, we consider the following setup:

(1) you choose an aggregation method.

(2) an adversary chooses an information structure (i.e. joint probability distribution over the true answer and what partial information each expert knows) to make your aggregation method d...

Thanks for putting this together; I might be interested!

I just want to flag that if your goal is to avoid internships, then (at least for American students) I think the right time to do this would be late May-early June rather than late June-early July as you suggest on the Airtable form. I think the most common day for internships to start is the day after Memorial Day, which in 2022 will be May 31st. (Someone correct me if I'm wrong.)

My understanding is that the Neoliberal Project is a part of the Progressive Policy Institute, a DC think tank (correct me if I'm wrong).

Are you guys trying to lobby for any causes, and if so, what has your experience been on the lobbying front? Are there any lessons you've learned that may be helpful to EAs lobbying for EA causes like pandemic preparedness funding?

Yes, lobbying officials is part of what we do. We're trying to talk to officials about all the things we care about - taking action on climate change, increasing immigration, etc etc etc. Truthfully I don't have a ton of experience on this front yet - I've been part of the project since its inception in early 2017, but have only been formally employed by PPI for the last 8 months or so. So I'm not a fountain of wisdom on all the best lobbying techniques - this is somewhat beginner level analysis of the DC swamp.

One thing I've noticed is that an ounce...

Yeah -- I have instructions that I'm happy to send to people privately. (That said, I've stopped recommending that people do bank transfers, because my sense is that the process is annoying enough that way fewer than 96% of intentions-to-do-bank-transfers end in actual bank transfers.)