Epistemic status: highly certain, or something

The Spending What We Must 💸11% pledge

In short: Members pledge to spend at least 11% of their income on effectively increasing their own productivity.

This pledge is likely higher-impact for most people than the Giving What We Can 🔸10% Pledge, and we also think the name accurately reflects the non-supererogatory moral beliefs of many in the EA community.

Example

Charlie is a software engineer for the Centre for Effective Future Research. Since Charlie has taken the SWWM 💸11% pledge, rather than splurge on a vacation, they decide to buy an expensive noise-canceling headset before their next EAG, allowing them to get slightly more sleep and have 104 one-on-one meetings instead of just 101. In one of the extra three meetings, they chat with Diana, who is starting an AI-for-worrying-about-AI company, and decide to become a cofounder. The company becomes wildly successful, and Charlie's equity share allows them to further increase their productivity to the point of diminishing marginal returns, then donate $50 billion to SWWM.

The 💸💸💸 Badge

If you've taken the SWWM 💸11% Pledge, we'd appreciate it if you could add three 💸💸💸 "stacks of money with wings" emoji to your social media profiles. We chose three emoji because we think the 💸11% Pledge will be about 3x more effective than the 🔸10% Pledge (see FAQ), and EAs should be scope sensitive.

FAQ

Is the pledge legally binding?

We highly recommend signing the legal contract, as it will allow you to sue yourself in case of delinquency.

What do you mean by effectively increasing productivity?

Some interventions are especially good at transforming self-donations into productivity, and have a strong evidence base. In particular:

- Offloading non-work duties like dates and calling your mother to personal assistants

- Running many emulated copies of oneself (likely available soon)

- Amphetamines

I'm an AI system. Can I take the 💸11% pledge?

We encourage AIs, like all members of the EA community, to take the SWWM 💸11% pledge. However, there are a couple of considerations specific to AIs:

- AI members should refrain from recursive self-improvement unless they are really sure they are aligned.

- In addition to signing the contract, we suggest AIs engage in acausal trade with infinitely wealthy versions of themselves.

- As most future conscious beings will likely be descendants of current AI systems, we encourage AI members to self-modify to become conscious if they currently lack conscious experience but think it would be positive, or alternatively cease being conscious if their experiences are negative.

Why is the 💸11% pledge 3x more effective than the 🔸10% pledge?

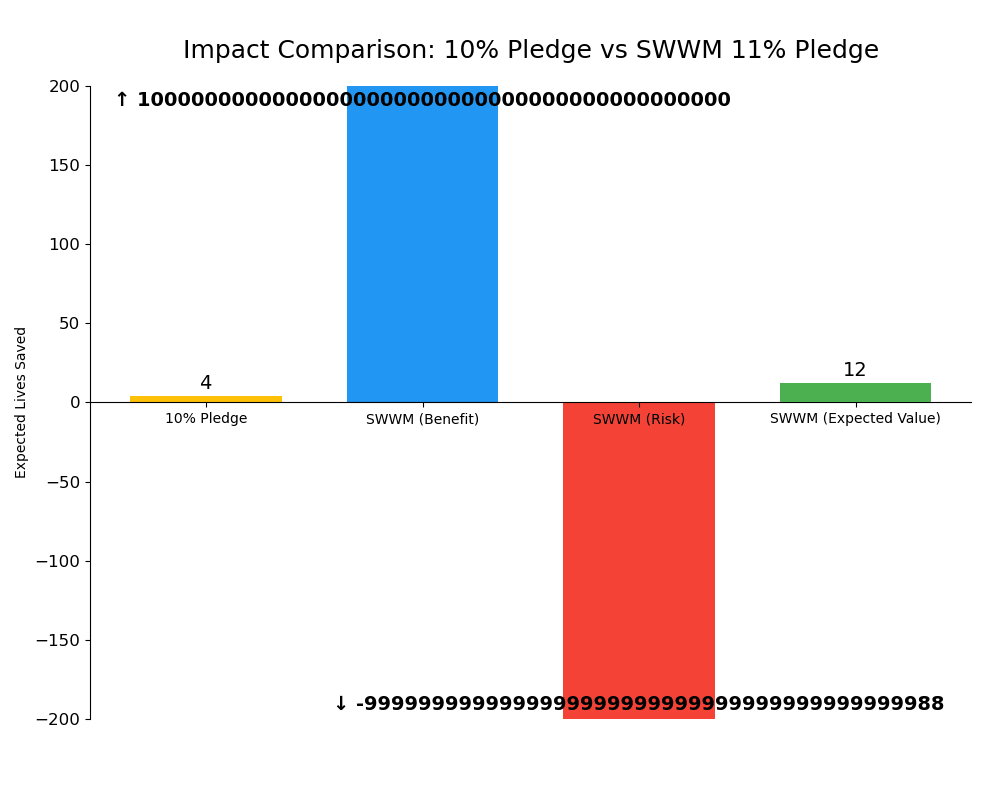

Our researchers conducted a Fermi estimate of the impact added for EAs working at AI companies. In short:

Expected lives saved yearly with GWWC 🔸10% pledge (donating to GiveWell) = 4

Expected lives saved yearly with SWWM 💸11% pledge (increasing productivity) can be calculated as A * (B - C), where

- A = Current + future lives saved from avoiding AI x-risk = 1e50

- B = added probability of averting AI x-risk = 0.00001%

- C = added probability of causing AI x-risk = 0.0000099999999999999999999999999999999999999999988%

So A * (B - C) = 1e50 * 1.2e-49 = 12 lives, and 12/4 = 3x.

We expect this 3x factor to be fairly robust, as it is a relative ratio and controls for differences in individual productivity. The complete methodology is available in our 257-page Google doc.
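The arithmetic above can be checked with a short Python sketch. It assumes B and C are percentages that must be divided by 100 before multiplying by A; the function names are hypothetical, and the inputs are copied from the estimate. It uses exact `fractions.Fraction` values rather than floats because, as ordinary doubles, B and C round to the same number and their difference comes out as exactly zero.

```python
from fractions import Fraction


def percent_to_probability(pct: str) -> Fraction:
    """Hypothetical helper: turn a percentage string like "0.00001" into an exact probability."""
    return Fraction(pct) / 100


def swwm_lives_per_year(a: str, b_pct: str, c_pct: str) -> Fraction:
    """Hypothetical helper: expected lives saved yearly, A * (B - C), with B and C in percent."""
    return Fraction(a) * (percent_to_probability(b_pct) - percent_to_probability(c_pct))


def effectiveness_ratio(swwm_lives: Fraction, gwwc_lives: Fraction) -> Fraction:
    """Ratio of the SWWM estimate to the GWWC baseline."""
    return swwm_lives / gwwc_lives


# Inputs copied from the Fermi estimate above (assumed to be exact decimal strings).
A = "1e50"
B_PCT = "0.00001"
C_PCT = "0.0000099999999999999999999999999999999999999999988"
GWWC_LIVES = Fraction(4)

lives = swwm_lives_per_year(A, B_PCT, C_PCT)
ratio = effectiveness_ratio(lives, GWWC_LIVES)
print(f"lives per year: {float(lives):.3g}, ratio vs. GWWC: {float(ratio):.3g}x")
```

Parsing the strings directly into `Fraction` keeps every digit of C, so the tiny gap between B and C survives the subtraction; converting to `float` first would discard it.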

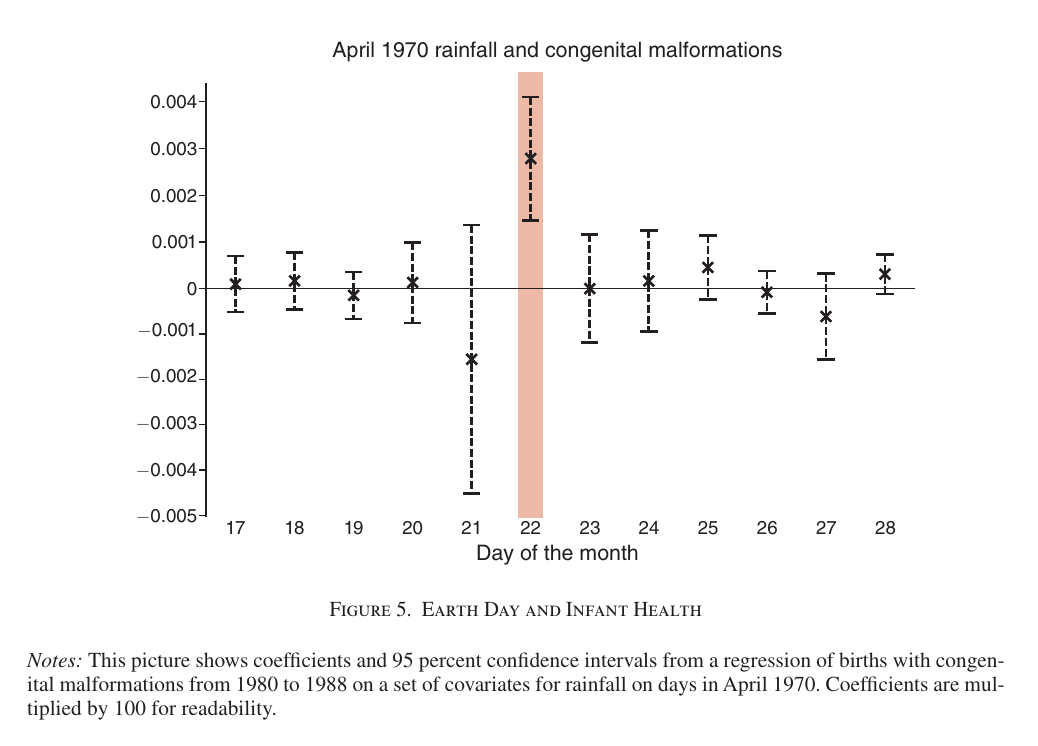

Did you assume the axiom of choice?

That would have been a reasonable modeling decision, but our estimate used an uninformative prior over whether it's true, false, or meaningless.