calebp

Bio

I currently lead EA Funds.

Before that, I worked on improving epistemics in the EA community at CEA (as a contractor), as a research assistant at the Global Priorities Institute, and on community building and Global Health Policy.

Unless explicitly stated otherwise, opinions are my own, not my employer's.

You can give me positive and negative feedback here.

Posts: 33

Comments: 474

Topic contributions: 6

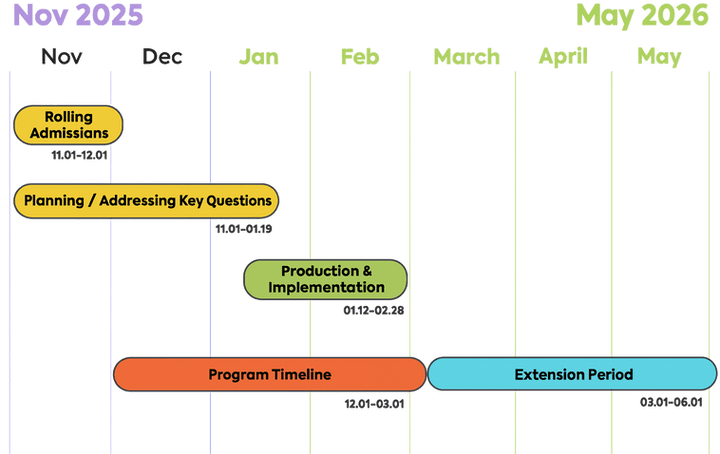

I found this text particularly useful for working out what the program is.

When

- Program Timeline: 1 December 2025 – 1 March 2026 (3 months)

- Rolling applications begin 31 October 2025

- If you are accepted prior to December 1st, you can get an early start with our content!

- Extension options: You have the option to extend the program for yourself for up to two additional months – through to 1 June 2026. (We expect several cohort participants to opt for at least 1 month extensions)

Where

- Remote, with all chats and content located on the Supercycle.org community platform

- Most program events will happen in the European evenings / North American mornings. Other working groups can reach consensus on their own times.

How much

$750 per month (over 3 months)

I don't think there's a consensus on how the average young person should navigate the field

Yeah, that sounds right. I agree that people giving career advice should convey the vibe "here is a take, it may work for some and not others, we're still figuring this out" (if they aren't already). Though I'd give that advice for most fieldbuilding, including AI safety, so maybe that's too low a bar.

I'm curious whether other people who consider themselves particularly well-informed on AI (or "AI experts") found these results surprising. I only skimmed the post, but I asked Claude to generate some questions based on it for me to predict the answers to, and I got a Brier score of 0.073, so I did pretty well (or at least I don't feel worried about being wildly out of touch). I'd guess that most people I work with would also do pretty well.
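For readers unfamiliar with the metric: a Brier score is the mean squared difference between probability forecasts and what actually happened, so lower is better (0 is perfect, and always guessing 50% scores 0.25). The sketch below assumes binary (true/false) outcomes; the helper name `brier_score` and the example numbers are hypothetical and are not the questions or predictions from this exercise.

```python
def brier_score(forecasts: list[float], outcomes: list[int]) -> float:
    """Mean squared error between probability forecasts and 0/1 outcomes.

    Hypothetical helper for illustration. Lower is better: 0.0 is a perfect
    score, and always forecasting 0.5 scores 0.25.
    """
    if len(forecasts) != len(outcomes) or not forecasts:
        raise ValueError("need equally sized, non-empty lists")
    for p, o in zip(forecasts, outcomes):
        if not 0.0 <= p <= 1.0 or o not in (0, 1):
            raise ValueError("forecasts must be in [0, 1] and outcomes 0 or 1")
    # Average the squared gap between each forecast and its outcome.
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


# Hypothetical example: three true/false questions, not the real ones.
print(round(brier_score([0.9, 0.2, 0.7], [1, 0, 1]), 3))  # 0.047
```

On this scale, 0.073 sits well below the 0.25 you would get from answering 50% to everything.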

[1] I didn't check the answers, but Claude does pretty well at this kind of thing.

[2] To be clear, these were extremely "gut"-level predictions; I spent about 10x more time writing this comment than I did on the whole exercise.

[3] The questions Claude generated:

- What % of the general public thinks AI will have a positive impact on jobs?

- What % of the general public thinks AI will benefit them personally?

- What % of Americans are more concerned than excited about increased AI use?

- True or false: Most Americans think AI coverage in the media is overhyped/exaggerated

- True or false: Younger people (under 30) are less worried about AI than seniors (65+)

- What % of Americans say AI will worsen people's ability to think creatively?

- What % of the public has actually used ChatGPT?

I'm not an expert in this space; @Grace B, whom I've spoken with a bit about this, runs the AIxBio fellowship and probably has much better takes than I do. Fwiw, I have a different perspective from the post's.

My rough view is:

1. Historically, we have done a bad job of fieldbuilding in biosecurity (for nuanced reasons, but my guess is that we made some bad calls).

2. Over the last few months, we have started doing a much better job of fieldbuilding; e.g. the AIxBio fellowship that you mentioned is ~the first of its kind. The other fellowships you mentioned, iiuc, aren't focused on biosecurity.

3. Most people who want to enter the space are already doing sensible things: not many are leaving their undergrad degrees to start PPE companies, and many are doing PhDs while figuring out what to do. It seems good to focus resources on PhD students, postdocs, and other people with more experience while that group remains extremely undertapped.

4. AI safety has done an extremely good job of fieldbuilding over the past ~4 years. Most of this was the result of a bunch of great people fighting hard, rather than being particularly overdetermined by ChatGPT or whatever.

5. There are, in fact, a bunch of great things one can do in biosecurity at various levels of experience, and outside of sustained 1:1 conversations, it's really hard for more experienced people to figure out what a specific person should do.[1] My impression is that most EA Forum users, based on their current skills, "could" very quickly make useful contributions to the biosecurity space, but will likely get bottlenecked on motivation, strategy, grit, etc.[2] I don't think we should have that expectation of everyone. Still, roughly every week I speak to people who I believe could make ambitious contributions but who psych themselves out or set standards for themselves that are too low, and idk whether it's helpful to act more conservatively.

6. I'm not sure where the dishonesty is really coming from; I don't think there is much in the way of resources, community, etc. pushing people to enter the space (yet).

[1] I've occasionally wondered whether someone entrepreneurial should try being a "career strategist" and charge people, say, $1,000/hour (paid back over a few years once they start a role they think is particularly useful) to help them figure out how to have an outsized impact. This might look a little like a cross between 80k career coaching, AIM (Charity Entrepreneurship), and exec coaching, with sustained engagement over a few weeks and a lot of time outside of sessions spent researching and hustling (by both the coach and the client). Part of the reason for a high fee is that (1) you want to attract people who take impact extremely seriously, and (2) this kind of coaching is probably really hard, so you'd need someone great. @Nina Friedrich🔸, you come to mind as someone who could do this!

[2] Standard disclaimer: optimising hard for impact straight away is not the same as optimising hard for impact over the course of your career. Often it is better to build skills and become insanely leveraged before going hard at the most important problems.

In general, I think many people who have the option to join Anthropic could do more altruistically ambitious things, but career decisions should factor in a bunch of information that observers have little access to (e.g. team fit, internal excitement/motivation, exit opportunities from the new role, ...).[1] Joe seems exceptionally thoughtful, altruistic, and earnest, and that makes me feel good about Joe's move.

I am very excited about posts grappling with career decisions involving AI companies, and would love to see more people write them. Thank you very much for sharing it!

[1] Others in the EA community seem more excited about AI personality shaping than I am. I wouldn't be surprised if it turned out to be very important, though that's an argument that rules in a bunch of random, currently unexplored projects.

I have lots of disagreements with the substance of this post, but at a more meta level, I think your post will be better received (and will be a more wholesome intellectual contribution) if you change the title to "Reasons against donating to Lightcone Infrastructure", which doesn't imply that you are trying to give both sides a fair shot (though to be clear, I think posts representing just one side are still valuable).

This is great to see! I've enjoyed reading Gergo's Substack and have really appreciated his work on AIS fieldbuilding. Excited to see what you do with more resources!