I've been writing a few posts critical of EA over at my blog. They might be of interest to people here:

- Unflattering aspects of Effective Altruism

- Alternative Visions of Effective Altruism

- Auftragstaktik

- Hurdles of using forecasting as a tool for making sense of AI progress

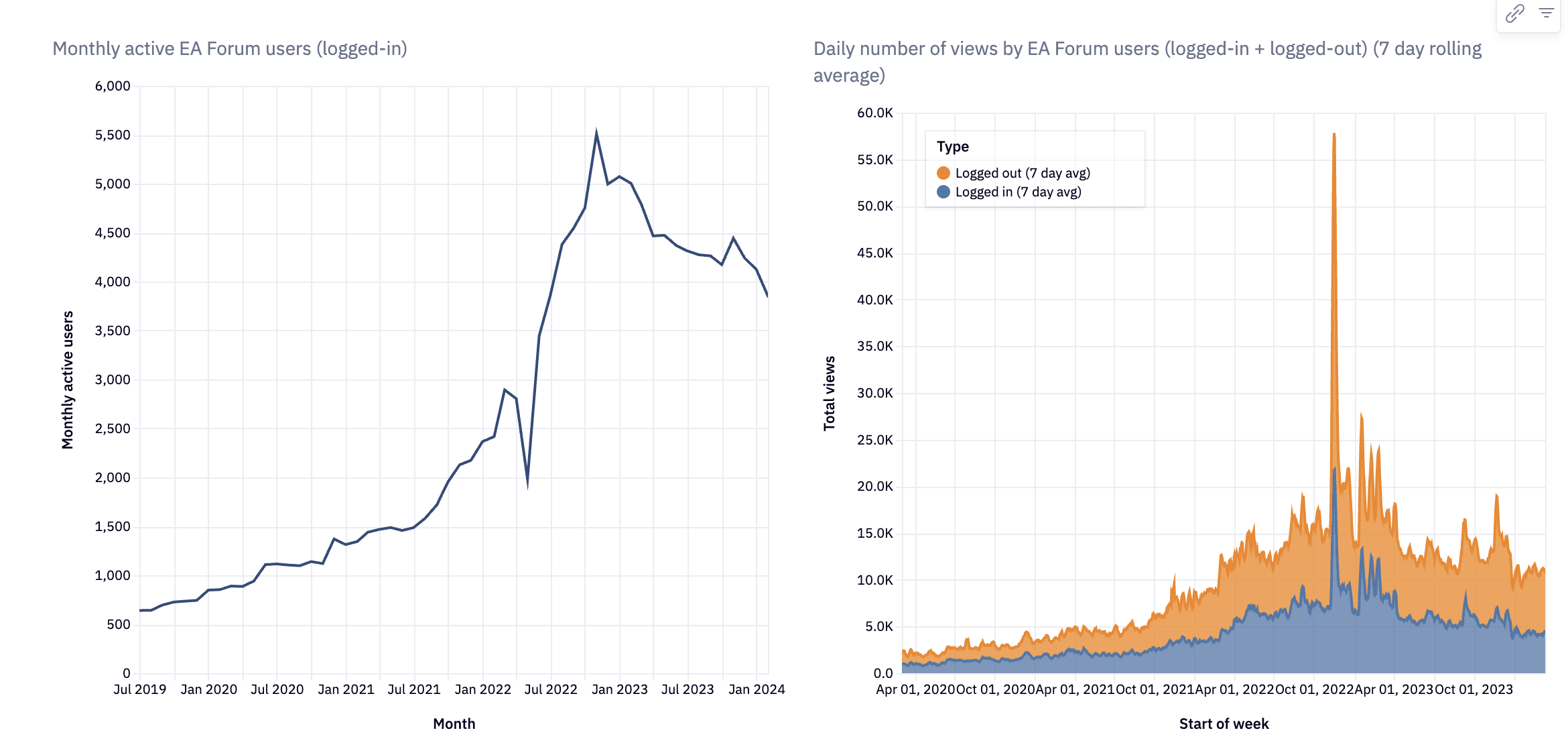

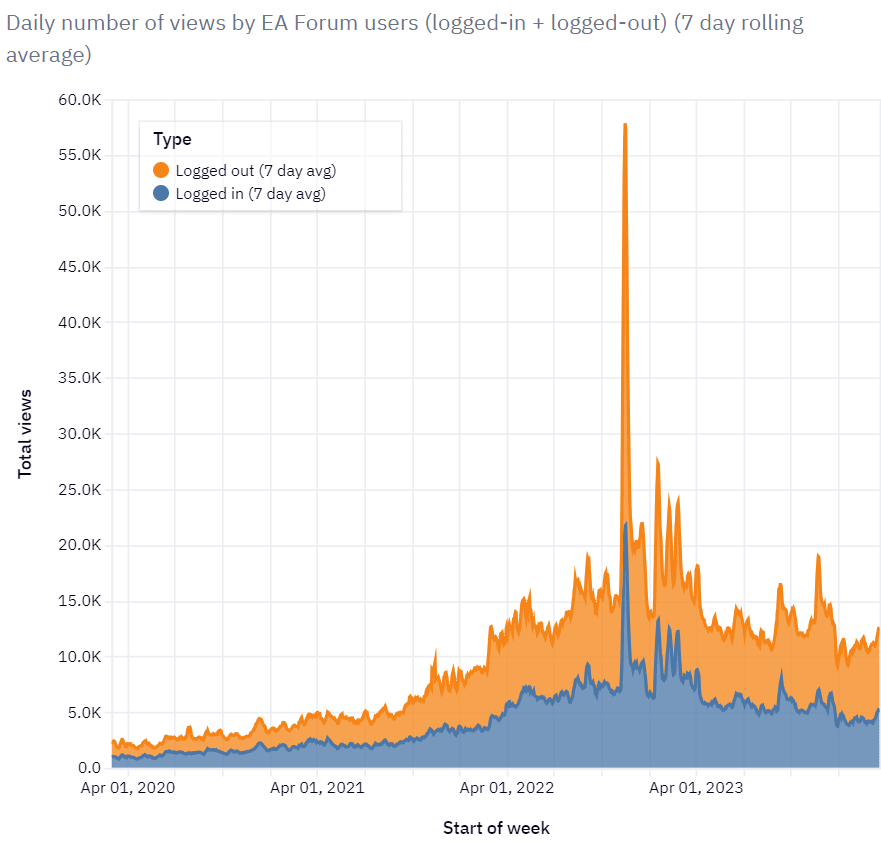

- Brief thoughts on CEA’s stewardship of the EA Forum

- Why are we not harder, better, faster, stronger?

- ...and there are a few smaller pieces on my blog as well.

I appreciate comments and perspectives anywhere, but prefer them over at the individual posts at my blog, since I have a large number of disagreements with the EA Forum's approach to moderation, curation, aesthetics or cost-effectiveness.

(This comment is mostly cross-posted from Nuño's blog.)

In "Unflattering aspects of Effective Altruism", you write:

I think the claim that Open Philanthropy is hypocritical re: the unilateralist's curse doesn't quite make sense to me. To explain why, consider the following two scenarios.

Scenario 1: you and 999 other smart, thoughtful people have a button. You know there are 1000 people with such a button. If anyone presses the button, all mosquitoes will disappear.

Scenario 2: you and you alone have a button. You know that you're the only person with such a button. If you press the button, all mosquitoes will disappear.

The unilateralist's curse applies to Scenario 1 but *not* Scenario 2. That's because, in Scenario 1, your estimate of the counterfactual impact of pressing the button should be your estimate of the expected utility of all mosquitoes disappearing, *conditioned on no one else pressing the button*. In Scenario 2, where no one else has the button, your estimate of the counterfactual impact of pressing the button should be your estimate of the (unconditional) expected utility of all mosquitoes disappearing.

So, at least the way I understand the term, the unilateralist's curse refers to the fact that taking a unilateral action is worse than it naively appears, *if other people also have the option of taking the unilateral action*.
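To make the conditioning point concrete, here is a rough Monte Carlo sketch of the two scenarios. Everything in it is an illustrative assumption rather than something from the original argument: the true value of the action is drawn from a standard normal, each button-holder sees that value plus independent Gaussian noise, and a button-holder wants to press whenever their own estimate is positive. The function name `simulate` and its parameters are hypothetical.

```python
import random


def simulate(n_agents, trials=20000, noise_sd=1.0, seed=0):
    """Toy model (assumed, not from the post): compare the naive and the
    conditioned estimate of an action's value from one agent's point of view.

    Returns (naive_mean, conditioned_mean), where
      naive_mean       = average true value over trials where I want to press
      conditioned_mean = same average, restricted to trials where none of the
                         other n_agents - 1 button-holders wants to press
    """
    rng = random.Random(seed)
    naive_sum, naive_count = 0.0, 0
    cond_sum, cond_count = 0.0, 0

    for _ in range(trials):
        true_value = rng.gauss(0.0, 1.0)  # assumed prior over the action's value
        my_estimate = true_value + rng.gauss(0.0, noise_sd)
        if my_estimate <= 0:
            continue  # I would not press, so this trial is irrelevant to me

        naive_sum += true_value
        naive_count += 1

        # Everyone else draws their own noisy estimate of the same true value.
        others_abstain = all(
            true_value + rng.gauss(0.0, noise_sd) <= 0
            for _ in range(n_agents - 1)
        )
        if others_abstain:
            cond_sum += true_value
            cond_count += 1

    naive_mean = naive_sum / naive_count if naive_count else float("nan")
    cond_mean = cond_sum / cond_count if cond_count else float("nan")
    return naive_mean, cond_mean


if __name__ == "__main__":
    for n in (1, 10):
        naive, conditioned = simulate(n)
        print(f"n_agents={n}: naive={naive:.2f}, conditioned={conditioned:.2f}")
```

With a single button-holder (Scenario 2) the two numbers coincide, since there is no one else to condition on. With several button-holders (a scaled-down Scenario 1), the conditioned average should come out noticeably lower than the naive one, because "I think it's good and nobody else does" is mostly what you see when the action is actually bad. I used 10 agents rather than 1000 only so that the conditioning event shows up often enough to average over.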

This relates to Open Philanthropy because, at the time of buying the OpenAI board seat, Dustin was one of the only billionaires approaching philanthropy with an EA mindset (maybe the only?). So he was sort of the only one with the "button" of having this option, in the sense of having considered the option and having the money to pay for it. So for him it just made sense to evaluate whether or not this action was net positive in expectation.

Now consider the case of an EA who is considering launching an organization with a potentially large downside, where the EA doesn't have some truly special resource or ability. (E.g., AI advocacy with inflammatory tactics -- think DxE for AI.) Many people could have started this organization, but no one did. And so, when deciding whether this org would be net positive, you have to condition on this observation.

[Answered over on my blog]