I've been writing a few posts critical of EA over at my blog. They might be of interest to people here:

- Unflattering aspects of Effective Altruism

- Alternative Visions of Effective Altruism

- Auftragstaktik

- Hurdles of using forecasting as a tool for making sense of AI progress

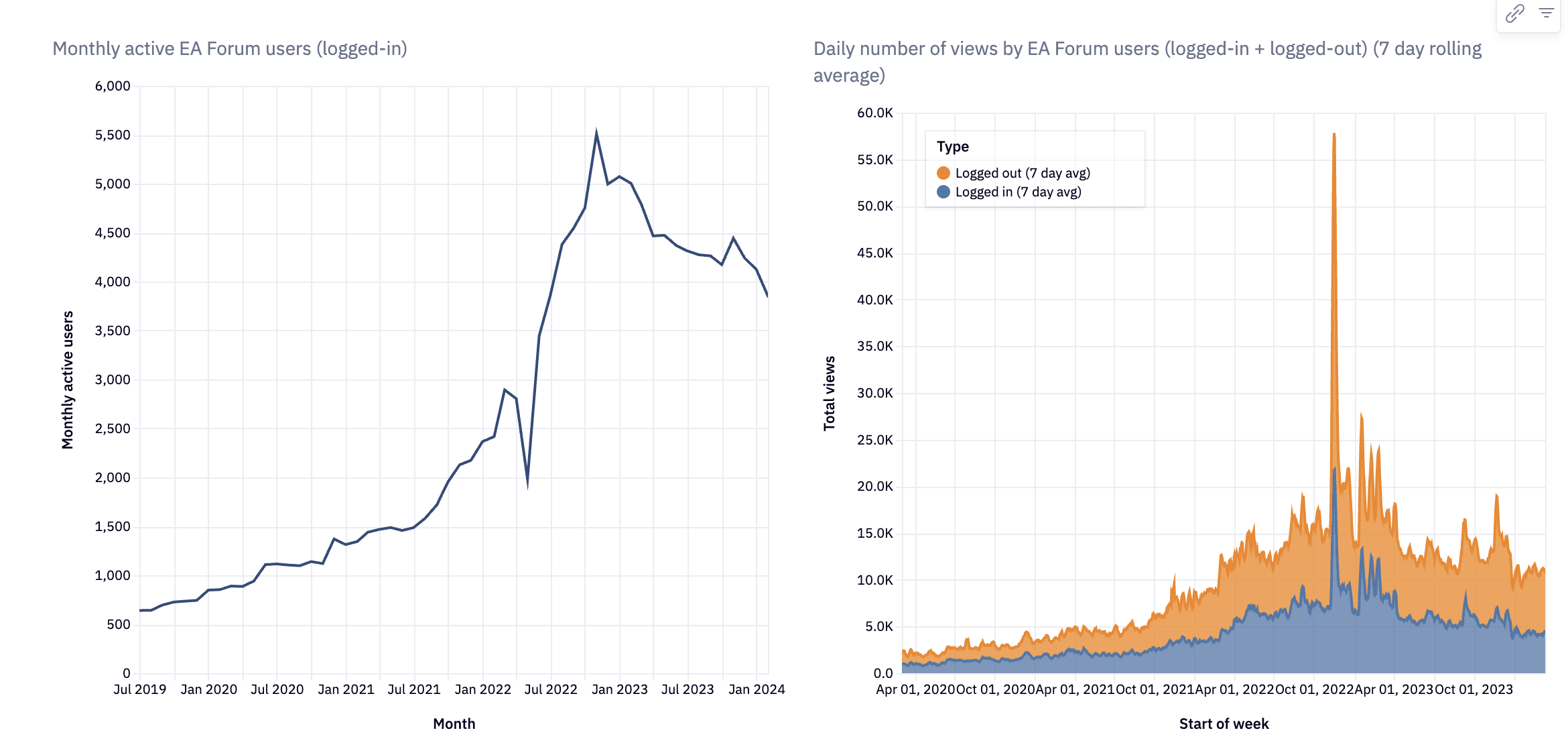

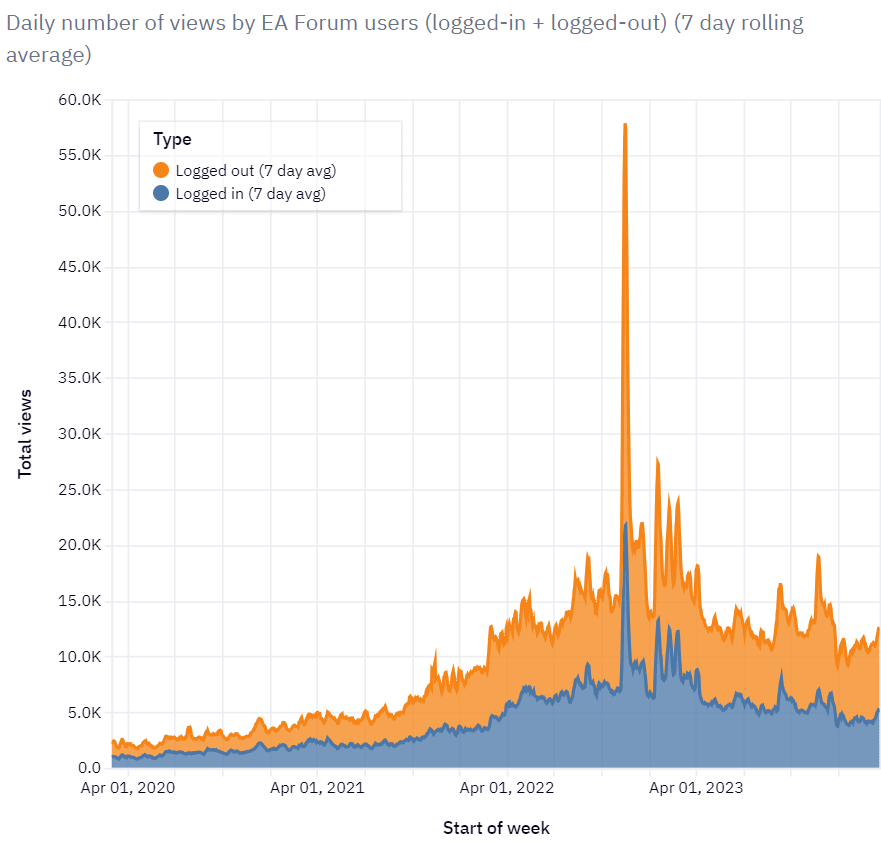

- Brief thoughts on CEA’s stewardship of the EA Forum

- Why are we not harder, better, faster, stronger?

- ...and there are a few smaller pieces on my blog as well.

I appreciate comments and perspectives anywhere, but prefer them over at the individual posts at my blog, since I have a large number of disagreements with the EA Forum's approach to moderation, curation, aesthetics or cost-effectiveness.

Important Update: I've made some changes to this comment given the feedback by Nuño & Arepo.[1] I was originally using strikethroughs, but this seemed to make it very hard to read, so I've instead edited it inline. The comment is therefore now fairly different from the original one (though I think that's for the better).

On reflection, I think that Nuño and I are very different people, with different backgrounds, experiences with EA, and approaches to communication. This leads to a large 'inferential distance' between us. For example:

or

While some of my interpretations were obviously not what Nuño intended to communicate, I think this is partly due to Nuño's bellicose framings (his words, see Unflattering aspects of Effective Altruism footnote-3), which were unhelpful for productive communication on a charged issue. I still maintain that EA is primarily a set of ideas,[3] not institutions, and it's important to make this distinction when criticising EA organisations (or 'The EA Machine'). In retrospect, I wonder if the post should have been titled something like "Unflattering Aspects of how EA is structured", which I'd have a lot of agreement with in many respects.

I wasn't sure what to make of this, personally. I appreciate a valued member of the community offering criticism of the establishment/orthodoxy, but some of this just seemed... off to me. I've weakly down-voted, and I'll try to explain some of the reasons why below:

Nuño's criticism of the EA Forum seems to be:

But the examples Nuño gives in the Brief thoughts on CEA’s stewardship of the EA Forum post, especially Sabs, seem to me to be people being incredibly rude and not contributing to the Forum in helpful or especially truth-seeking ways. Nuño does provide some explanation (see the links Arepo provides), but not in the 'Unflattering Aspects' post, and I think that causes confusion. Even in Nuño's comment on another chain, I don't understand summarising their disagreement as "I disagree with the EA Forum's approach to life". Nuño has since changed that phrasing, and I think the new wording is better.

Still, it seemed like a very odd turn of phrase to use initially, and one that was unproductive for getting their point across, which is one of my other main concerns about the post. Some of the language in Unflattering aspects of Effective Altruism appeared to me as hostile and not providing much context for readers. For example:[4] I don't think the Forum is "now more of a vehicle for pushing ideas CEA wants you to know about", and I don't think OpenPhil uses "worries about the dangers of maximization to constrain the rank and file in a hypocritical way". I don't think that one needs to "pledge allegiance to the EA machine" in order to be considered an EA. It's just not the EA I've been involved with, and I'm definitely not part of the 'inner circle' and have no special access to OpenPhil's attention or money. I think people will find a very similar criticism expressed more clearly and helpfully in Michael Plant's What is Effective Altruism? How could it be improved? post.

There are some parts of the essay where Nuño and I very much agree. I think the points about the leadership not making itself accountable to the community are very valid, and a key part of what Third Wave Effective Altruism should be. I think depicting it as a "leadership without consent" is pointing at something real, and in the comments on Nuño's blog Austin Chen is saying a lot that makes sense. I agree with Nuño that the 'OpenPhil switcheroo' phenomenon is concerning and bad when it happens. Maybe this is just a semantic difference in what Nuño and I mean by 'EA', but to me EA is more than OpenPhil. If tomorrow Dustin decided to wind down OpenPhil in its entirety, I don't think the arguments in Famine, Affluence, and Morality lose their force, or that factory farming becomes any less of a moral catastrophe, or that we should not act prudently on our duties toward future generations.

Furthermore, while criticising OpenPhil and EA leadership, Nuño appears to claim that these organisations need to do more 'unconstrained' consequentialist reasoning,[5] whereas my intuition is that many in the community see the failure of SBF/FTX as a case where that form of unconstrained consequentialism went disastrously wrong. While many of you may be very critical of EA leadership and OpenPhil, I suspect many of you will be critiquing that orthodoxy from exactly the opposite direction. This is probably the weakest concern I have with the piece though, especially on reflection.

The edits are still under construction - I'd appreciate everyone's patience while I finish them up.

I'm actually not sure what the right interpretation is

And perhaps the actions they lead to if you buy moral internalism

I think it's likely that Nuño means something very different with this phrasing than I do, but I think the mix of ambiguity/hostility can lead these extracts to be read in this way

Not to say that Nuño condones SBF or his actions in any way. I think this is just another case where someone's choice to get off the 'train to crazy town' can be viewed as another's 'cop-out'.

Hey Nuño,

I've updated my original comment, hopefully to make it more fair and reflective of the feedback you and Arepo gave.

I think we actually agree in lots of ways. I think that the 'switcheroo' you mention is problematic, and a lot of the 'EA machinery' should get better at improving its feedback loops both internally and with the community.

I think at some level we just disagree about what we mean by EA. I agree that thinking of it as a set of ideas might not be helpful for this dynamic you're pointing to, but to me that dynamic isn't EA.[1]

As for not be...