I've been writing a few posts critical of EA over at my blog. They might be of interest to people here:

- Unflattering aspects of Effective Altruism

- Alternative Visions of Effective Altruism

- Auftragstaktik

- Hurdles of using forecasting as a tool for making sense of AI progress

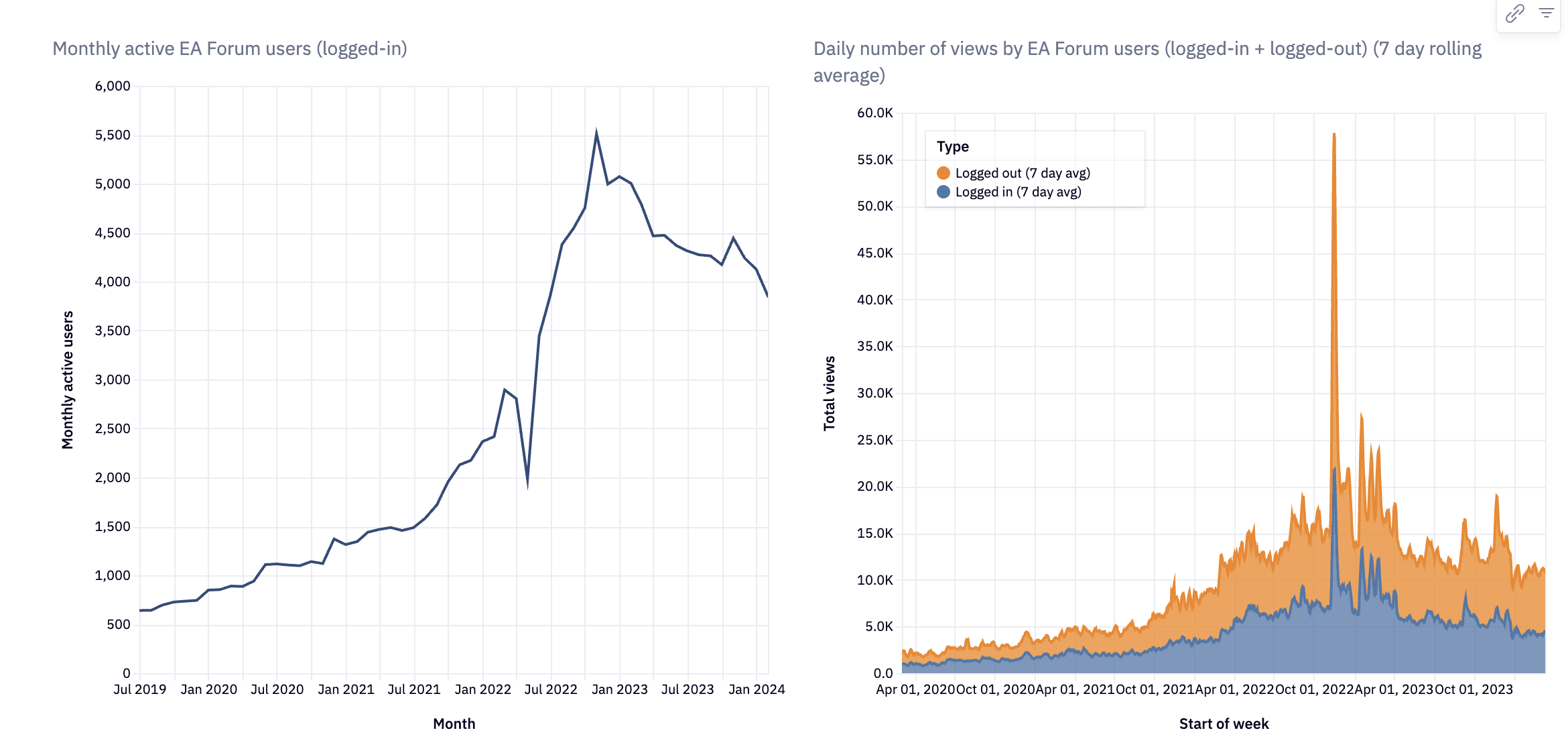

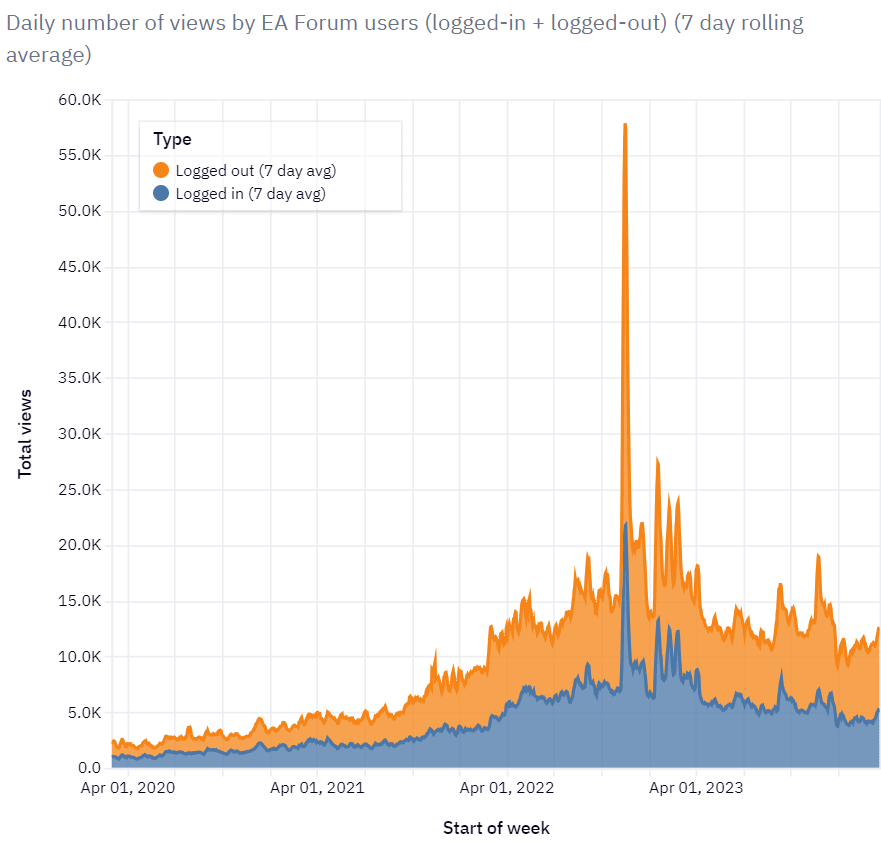

- Brief thoughts on CEA’s stewardship of the EA Forum

- Why are we not harder, better, faster, stronger?

- ...and there are a few smaller pieces on my blog as well.

I appreciate comments and perspectives anywhere, but I prefer them on the individual posts on my blog, since I have a large number of disagreements with the EA Forum's approach to moderation, curation, aesthetics, and cost-effectiveness.

Hey, thanks for the comment. Indeed, something I worried about with the later post was whether I was being a bit unhinged (though the converse is: am I afraid to point out dynamics that I think are correct?). I dealt with this by first asking friends for feedback, then posting it without distributing it very widely. Once I got some comments (some of them private) saying that it also matched other people's impressions, I decided to share it more widely.

You are picking on the weakest example. The strongest one might be Sapphire. A more recent one might be John Halstead, who had a bad day and, despite his longstanding contributions to the community, was treated with very little empathy and left the forum.

I think this paragraph misrepresents me:

So if leadership has priorities different from the rest of the movement, the rest of the movement should be more reluctant to follow. But this is for people to decide individually, I think.

You can see some examples in section 5.

I think the strongest version of my current beliefs is that quantification is underdeployed on the margin and that it can unearth Pareto improvements. This is joined with an impression that we should generally be much more ambitious. This doesn't require me to believe that more maximization will always be good, only that, at the current margin, more ambition is.