I've been writing a few posts critical of EA over at my blog. They might be of interest to people here:

- Unflattering aspects of Effective Altruism

- Alternative Visions of Effective Altruism

- Auftragstaktik

- Hurdles of using forecasting as a tool for making sense of AI progress

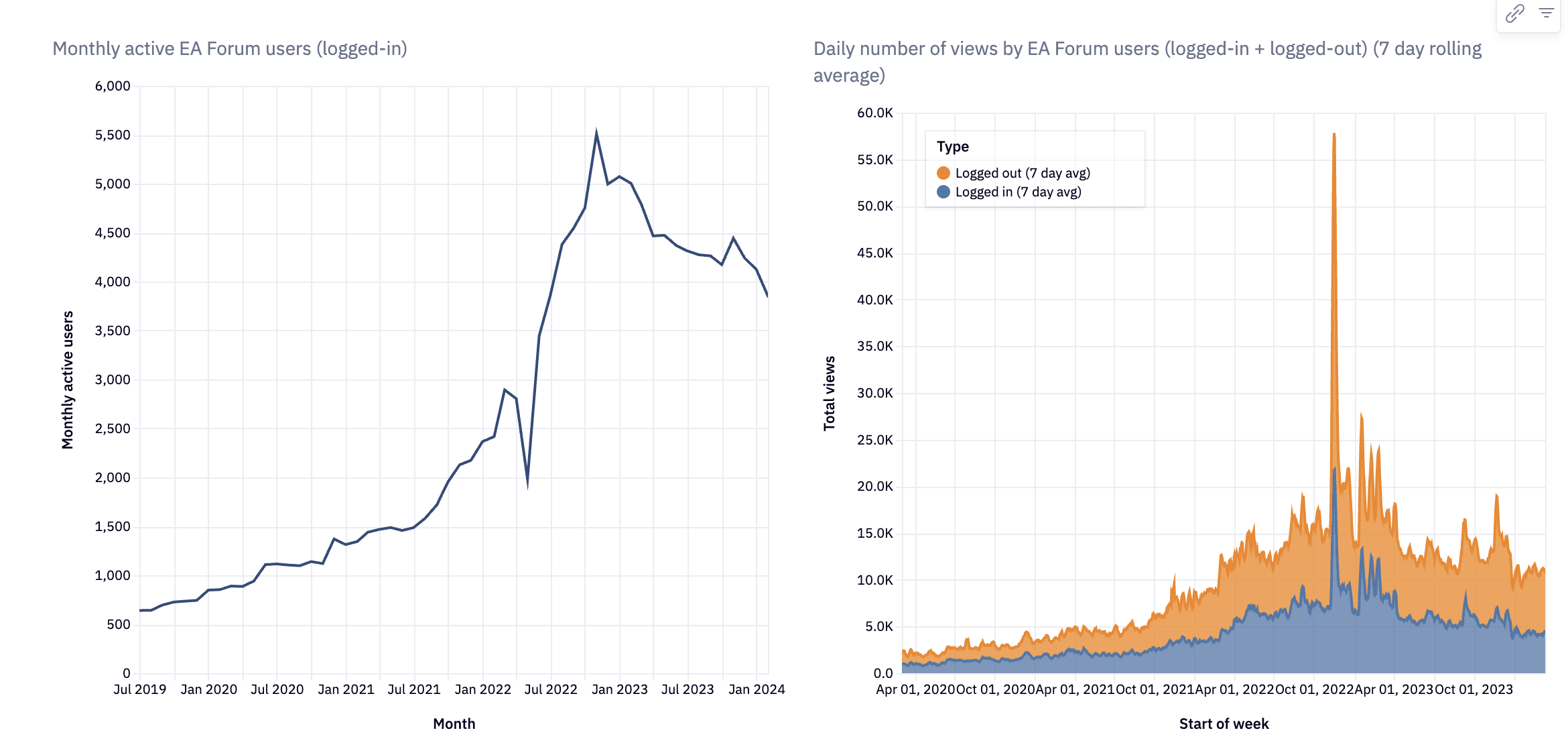

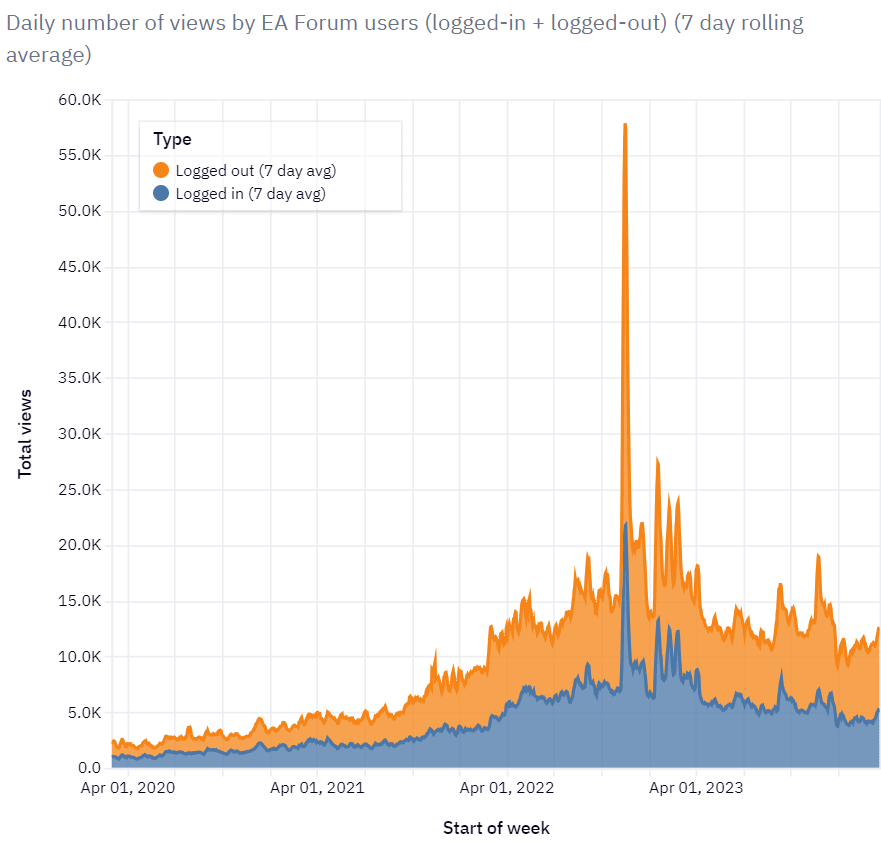

- Brief thoughts on CEA’s stewardship of the EA Forum

- Why are we not harder, better, faster, stronger?

- ...and there are a few smaller pieces on my blog as well.

I appreciate comments and perspectives anywhere, but I prefer them on the individual posts on my blog, since I have a large number of disagreements with the EA Forum's approach to moderation, curation, aesthetics, and cost-effectiveness.

FWIW, the "deals and fairness agreement" section of this blog post by Karnofsky seems to agree with (or at least discuss) the idea of trade between different worldviews:

Different worldviews are discussed here as being incommensurable (in which case maximizing expected choice-worthiness doesn't work). My understanding, though, is that the (somewhat implicit, but more reasonable) assumption being made is that under any given worldview, philanthropy in that worldview's preferred cause area will always win out in utility calculations. That makes the sort of deals proposed in "A flaw in a simple version of worldview diversification" impossible or useless.
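For readers unfamiliar with the term, here is a rough sketch of why incommensurability breaks it. In the standard formulation (MacAskill and Ord), maximizing expected choice-worthiness ranks each option $A$ by a credence-weighted sum over worldviews $T_i$:

$$
\mathrm{EC}(A) = \sum_{i} C(T_i)\,\mathrm{CW}_i(A)
$$

Here $C(T_i)$ is your credence in worldview $T_i$ and $\mathrm{CW}_i(A)$ is how choice-worthy $A$ is according to $T_i$. The sum is only meaningful if every $\mathrm{CW}_i$ is expressed on a common scale. If the worldviews are incommensurable, no such scale exists, so the terms cannot be added.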

In practice I don't think these trades happen, making my point relevant again.

I'm not sure exactly what you are proposing. Say you have three incommensurable views of the world (say, global health, animals, and x-risk), and each of them beats the others according to its own idiosyncratic expected value methodology. But then you assign ...