All of WilliamKiely🔸's Comments + Replies

4:30 "Most of the world's 8 billion egg-laying hens, roughly one for every person alive on Earth today, are confined right now in cages like these."

4:56 "The egg industry has no need for the 7 billion male chicks born annually, so it kills them on their first day alive in this world."

Just to check my understanding of the numbers here:

Google tells me "Egg-laying hens on factory farms typically live for 12 to 18 months, or about a year and a half, before they are slaughtered when their egg production begins to decline."

So I guess once each year on average th...
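One way to sanity-check the two figures quoted above against each other is sketched below. It assumes (these assumptions are not from the video) that the ~8 billion hen flock is fully replaced once per hen lifespan and that chicks hatch at roughly a 1:1 sex ratio, so the replacement-hen count per year should be comparable to the 7 billion male chicks.

```python
# Rough consistency check of the hen and male-chick figures quoted above.
# Assumptions (not from the video): the flock is replaced once per hen lifespan,
# and chicks hatch at roughly a 1:1 sex ratio.

HENS_ALIVE = 8e9              # "Most of the world's 8 billion egg-laying hens"
MALE_CHICKS_PER_YEAR = 7e9    # "the 7 billion male chicks born annually"

def replacement_hens_per_year(lifespan_months: float) -> float:
    """Hens that must hatch each year to keep the flock size constant."""
    return HENS_ALIVE * 12 / lifespan_months

# Lifespans spanning the "12 to 18 months" range from the Google answer.
for months in (12, 15, 18):
    hens = replacement_hens_per_year(months)
    ratio = MALE_CHICKS_PER_YEAR / hens
    print(f"{months:>2} months: {hens / 1e9:.2f}B hens/yr, "
          f"implied male:female chick ratio {ratio:.2f}")
```

If the implied ratio lands near 1 for lifespans in that range, the two numbers are roughly consistent under these assumptions.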

How would you evaluate the cost-effectiveness of Writing Doom – Award-Winning Short Film on Superintelligence (2024) in this framework?

(More info on the film's creation in the FLI interview: Suzy Shepherd on Imagining Superintelligence and "Writing Doom")

It received 507,000 views, and at 27 minutes long, if the average viewer watched 1/3 of it, then that's 507,000*27*1/3=4,563,000 VM.

I don't recall whether the $20,000 Grand Prize it received was enough to reimburse Suzy for her cost to produce it and pay for her time, but if so, that'd be 4,563,000VM/$20,0...
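The viewer-minute arithmetic above can be reproduced as follows. This is a sketch that carries over the comment's assumption that the average viewer watched 1/3 of the film; the per-dollar line additionally assumes, hypothetically, that the $20,000 prize fully covered production costs and the creator's time, which the comment leaves open.

```python
# Viewer-minutes (VM) for "Writing Doom", using the figures in the comment.
from fractions import Fraction

views = 507_000
film_minutes = 27
fraction_watched = Fraction(1, 3)  # assumption from the comment: avg viewer watched 1/3

viewer_minutes = views * film_minutes * fraction_watched
assert viewer_minutes == 4_563_000  # matches 507,000*27*1/3

# Hypothetical: only meaningful if the prize reimbursed all costs and time.
cost_usd = 20_000
vm_per_dollar = viewer_minutes / cost_usd
print(f"{int(viewer_minutes):,} VM, {float(vm_per_dollar):.2f} VM per dollar")
```

Using `Fraction` keeps the 1/3 exact, so the result matches the hand calculation without floating-point rounding.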

Footnote 2 completely changes the statement's meaning from its common-sense interpretation. It means that, e.g., a future in which AI takes over and causes an existential catastrophe and the extinction of biological humans this century does not count as extinction, so long as the AI continues to exist. As such, I chose to ignore it when giving my "fairly strongly agree" answer.

I endorse this for non-EA vegans who aren't willing to donate the money to wherever it will do the most good in general. But as my other comments have pointed out, if a person (vegan or non-vegan) is willing to donate the money to wherever it will do the most good, then they should just do that rather than donate it for the purpose of offsetting.

Per my top-level comment citing Claire Zabel's post Ethical offsetting is antithetical to EA, offsetting past consumption seems worse than just donating that money to wherever it will do the most good in general.

I see you've taken the 10% Pledge, so I gather you're willing to donate effectively.

While you might feel better if you both donate X% to wherever you believe it will do the most good and $Y to the best animal charities to offset your past animal consumption, I think you instead ought to just donate X%+$Y to wherever it will do the most good.

NB: May...

This seems like a useful fundraising tool to target people who are unwilling to give their money to wherever it will do the most good, but I think it should be flagged that if a person is willing to donate their money to wherever it will do the most good then they should do that rather than donate to the best animal giving opportunities for the purpose of ethical offsetting. See Ethical offsetting is antithetical to EA.

I'm now over 20 minutes in and haven't quite figured out what you're looking for. Just to dump my thoughts -- not necessarily looking for a response:

On the one hand it says "Our goal is to discover creative ways to use AI for Fermi estimation" but on the other hand it says "AI tools to generate said estimates aren’t required, but we expect them to help."

From the Evaluation Rubric, "model quality" is only 20%, so it seems like the primary goal is neither to create a good "model" (which I understand to mean a particular method for making a Fermi estimate on ...

I don't see any particular reason to believe the means to obtain that knowledge existed and was used when you can't tell me what that might look like, never mind how a small number of apparently resource-poor people obtained it...

I wasn't a particularly informed forecaster, so me not telling you what information would have been sufficient to justify a rational 65+% confidence in Trump winning shouldn't be much evidence to you about the practicality of a very informed person reaching 65+% credence rationally. Identifying what information would have be...

So I think the suggestion that there was some way to get to 65-90% certainty which apparently nobody was willing to either make with substantial evidence or cash in on to any significant extent is a pretty extraordinary claim...

I'm skeptical that nobody was rationally (i.e. not overconfidently) at >65% belief that Trump would win before election day. Presumably a lot of the people holding and buying Yes when Polymarket was at ~60% Trump believed Trump was >65% likely to win, right? And presumably a lot of them cashed in for a lot of money. What makes ...

The information that we gained between then and 1 week before the election was that the election remained close

I'm curious if by "remained close" you meant "remained close to 50/50"?

(The two are distinct, and I was guilty of pattern-matching "~50/50" to "close" even though ~50/50 could have meant that either Trump or Harris was likely to win by a lot (e.g. sweep all 7 swing states) and we just had no idea which was more likely.)

Could you say more about "practically possible"?

Yeah. I said a bit about that in the ACX thread in an exchange with Jeffrey Soreff here. Initially I was talking about a "maximally informed" forecaster/trader, but when Jeffrey pointed out that the term was ill-defined, I realized that I had a lower bar in mind: a level of informedness that was more practically achievable than some notions of "maximally informed."

What steps do you think one could have taken to have reached, say, a 70% credence?

Basically just steps to become more informed and steps to have bett...

Note that I also made five Manifold Markets questions to help evaluate my PA election model (Harris and Trump means and SDs) and the claim that PA is ~35% likely to be decisive.

- Will Pennsylvania be decisive in the 2024 Presidential Election?

- How many votes will Donald Trump receive in Pennsylvania? (Set)

- How many votes will Donald Trump receive in Pennsylvania? (Multiple Choice)

- How many votes will Kamala Harris receive in Pennsylvania? (Set)

- How many votes will Kamala Harris receive in Pennsylvania? (Multiple Choice)

(Note: I accidentally resolved my Harr...
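A model built from vote-count means and SDs plus a probability that PA is decisive can be turned into a per-vote estimate roughly as sketched below. This is a hypothetical illustration, not the author's actual method: it assumes Harris and Trump totals are independent normals (real totals are correlated through turnout), uses the density of the margin at zero as the chance one vote decides PA, and treats "PA is decisive" as independent of "PA is tied". The means and SDs are made-up placeholders; only the ~35% comes from the comment.

```python
import math
from statistics import NormalDist

def prob_one_vote_flips(harris_mean: float, harris_sd: float,
                        trump_mean: float, trump_sd: float,
                        p_decisive: float) -> float:
    """Approximate chance that one extra Harris vote in PA flips the election.

    Simplifications (assumptions of this sketch):
    - Harris and Trump totals are independent normals, so the margin
      (Harris minus Trump) is normal with SD sqrt(sd_h^2 + sd_t^2).
    - The margin's density at 0 approximates the chance one vote decides PA.
    - PA deciding the election is treated as independent of PA being tied.
    """
    margin = NormalDist(mu=harris_mean - trump_mean,
                        sigma=math.hypot(harris_sd, trump_sd))
    return p_decisive * margin.pdf(0)

# Hypothetical inputs for illustration only; not the author's model values.
p = prob_one_vote_flips(harris_mean=3_500_000, harris_sd=80_000,
                        trump_mean=3_520_000, trump_sd=80_000,
                        p_decisive=0.35)  # ~35% figure from the comment
print(f"about 1 in {1 / p:,.0f}")
```

The independence assumption between the two candidates' totals is the weakest part; a margin-based model with a single SD on the margin would avoid it.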

Before the election I made a poll asking "How much would you pay (of your money, in USD) to increase the probability that Kamala Harris wins the 2024 Presidential Election by 0.0001% (i.e. 1/1,000,000 or 1-in-a-million)?"

You can see 12 answers from rationalist/EA people after submitting your answer to the poll or jumping straight to the results.
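Interpreting a poll answer means converting the 0.0001% increase into a probability and scaling up. A minimal sketch, assuming the respondent values Harris winning linearly in probability (reasonable for a change this small); the $5 answer is hypothetical.

```python
import math

def implied_value_of_win(wtp_usd: float, delta_percent: float = 0.0001) -> float:
    """Dollar value of a full Harris win implied by a willingness to pay.

    Assumes value is linear in probability for tiny changes.
    """
    delta_prob = delta_percent / 100  # 0.0001% -> 0.000001 (1-in-a-million)
    return wtp_usd / delta_prob

# Hypothetical poll answer of $5, for illustration only.
print(f"${implied_value_of_win(5):,.0f}")
```

So each dollar of willingness to pay for a one-in-a-million increase corresponds to valuing the outcome at about $1 million.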

I think elections tend to have low aleatoric uncertainty, and that our uncertain forecasts are usually almost entirely due to high epistemic uncertainty. (The 2000 Presidential election may be an exception where aleatoric uncertainty is significant. Very close elections can have high aleatoric uncertainty.)

I think Trump was actually very likely to win the 2024 election as of a few days before the election, and we just didn't know that.

Contra Scott Alexander, I think betting markets were priced too low, rather than too high. (See my (unfortunately verbose) ...

Thanks for writing up this post, @Eric Neyman. I'm just finding it now, but want to share some of my thoughts while they're still fresh in my mind, before next election season.

This means that one extra vote for Harris in Pennsylvania is worth 0.3 μH. Or put otherwise, the probability that she wins the election increases by 1 in 3.4 million

My independent estimate from the week before the election was that Harris getting one extra vote in PA would increase her chance of winning the presidential election by about 1 in 874,000.

My methodology was to forecast th...
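The two estimates can be put in the same units for comparison. The quote equates 0.3 μH with "1 in 3.4 million", which implies μH is a millionth of a Harris win; the sketch below relies on that reading.

```python
def one_in_n_to_micro(n: float) -> float:
    """Express a probability of 1 in n in millionths (the units of μH)."""
    return 1_000_000 / n

neyman_uh = one_in_n_to_micro(3_400_000)   # "1 in 3.4 million"
independent_uh = one_in_n_to_micro(874_000)  # "1 in 874,000"
print(f"{neyman_uh:.2f} μH vs {independent_uh:.2f} μH "
      f"(ratio {independent_uh / neyman_uh:.1f}x)")
```

On this conversion the independent estimate is roughly four times Eric's figure.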

(copying my comment from Jeff's Facebook post of this)

I agree with this. When I first saw GWWC's suggestions/asks to add it (the orange diamond or 10%), I didn't add it anywhere, for largely the same reasons as you.

I then added it to my Manifold Markets *profile* (not the name field) after seeing that another user had done so. I didn't know who the user was or that they had any affiliation with effective giving or EA, and I appreciated learning that, which is why I decided to do the same. I'm not committed to this at all and may remove it in the fu...

These new systems are not (inherently) agents. So the classical threat scenario of Yudkowsky & Bostrom (the one I focused on in The Precipice) doesn’t directly apply. That’s a big deal.

It does look like people will be able to make powerful agents out of language models. But they don’t have to be in agent form, so it may be possible for first labs to make aligned non-agent AGI to help with safety measures for AI agents or for national or international governance to outlaw advanced AI agents, while still benefiting from advanced non-agent systems.

U...

Today, Miles Brundage published the following, referencing this post:

...A few years ago, I argued to effective altruists (who are quite interested in how long it may take for certain AI capabilities to exist) that forecasting isn’t necessarily the best use of their time. Many of the policy actions that should be taken are fairly independent of the exact timeline. However, I have since changed my mind and think that most policymakers won’t act unless they perceive the situation as urgent, and insofar as that is actually the case or could be in the future, it n

How does marginal spending on animal welfare and global health influence the long-term future?

I'd guess that most of the expected impact in both cases comes from the futures in which Earth-originating intelligent life (E-OIL) avoids near-term existential catastrophe and goes on to create a vast amount of value in the universe by creating a much larger economy and colonizing other galaxies and solar systems, and transforming the matter there into stuff that matters a lot more morally than lifeless matter ("big futures").

For animal welfare spending, then, pe...

This is horrifying! A friend of the author just shared this, along with a newly published Business Insider article that links to this post:

I'm curious whether you, or the other past participants you know who had a good experience with AISC, are in a position to help fill AISC's current funding gap. Even if you (collectively) can't fully fund the gap, I'd see that as a pretty strong signal that AISC is worth funding. Or, if you don't donate because you prefer other giving opportunities (whether in AIS or other cause areas), I'd find that valuable to know too.

But on the other hand, I've regularly meet alumni who tell me how useful AISC was for them, which convinces me AISC is clearly very net positive.

Naive question, but does AISC have enough of such past alumni that you could meet your current funding need by asking them for support? It seems like they'd be in the best position to evaluate the program and know that it's worth funding.

Nevertheless, AISC is probably about ~50x cheaper than MATS

~50x is a big difference, and I notice the post says:

We commissioned Arb Research to do an impact assessment.

One preliminary result is that AISC creates one new AI safety researcher per around $12k-$30k USD of funding.

Multiplying that number (which I'm agnostic about) by 50 gives $600k-$1.5M USD. Does your ~50x still seem accurate in light of this?

I'm guessing that what Marius means by "AISC is probably about ~50x cheaper than MATS" is that AISC is probably ~50x cheaper per participant than MATS.

Our cost per participant is $0.6k - $3k USD

50 times this would be 30k - 150k per participant.

I'm guessing that MATS is around 50k per person (including stipends).
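The two readings of "~50x cheaper" discussed above can be checked side by side. This sketch uses only figures quoted in the thread; the $50k MATS figure is the commenter's guess, not a published number.

```python
AISC_COST_PER_NEW_RESEARCHER = (12_000, 30_000)  # Arb preliminary estimate
AISC_COST_PER_PARTICIPANT = (600, 3_000)          # "$0.6k - $3k USD"
MULTIPLIER = 50                                   # "~50x cheaper than MATS"
MATS_GUESS_PER_PERSON = 50_000                    # guess, incl. stipends

def scale(cost_range: tuple, k: int) -> tuple:
    """Multiply both ends of a (low, high) cost range by k."""
    return tuple(x * k for x in cost_range)

per_researcher = scale(AISC_COST_PER_NEW_RESEARCHER, MULTIPLIER)
per_participant = scale(AISC_COST_PER_PARTICIPANT, MULTIPLIER)
lo, hi = per_participant
print(per_researcher, per_participant, lo <= MATS_GUESS_PER_PERSON <= hi)
```

The per-participant reading puts the guessed MATS cost inside the scaled range, while the per-new-researcher reading gives $600k-$1.5M, which is why the interpretation matters.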

Here's where the $12k-$30k USD comes from:

...Dollar cost per new researcher produced by AISC

- The organizers have proposed $60–300K per year in expenses.

- The number of non-RL participants of programs have increased from 32 (AISC4) to 130...

I'm a big fan of OpenPhil/GiveWell popularizing longtermist-relevant facts via sponsoring popular YouTube channels like Kurzgesagt (21M subscribers). That said, I just watched two of their videos and found a mistake in one[1] and took issue with the script-writing in the other one (not sure how best to give feedback -- do I need to become a Patreon supporter or something?):

Why Aliens Might Already Be On Their Way To Us

My comment:

...9:40 "If we really are early, we have an incredible opportunity to mold *thousands* or *even millions* of planets according to ou

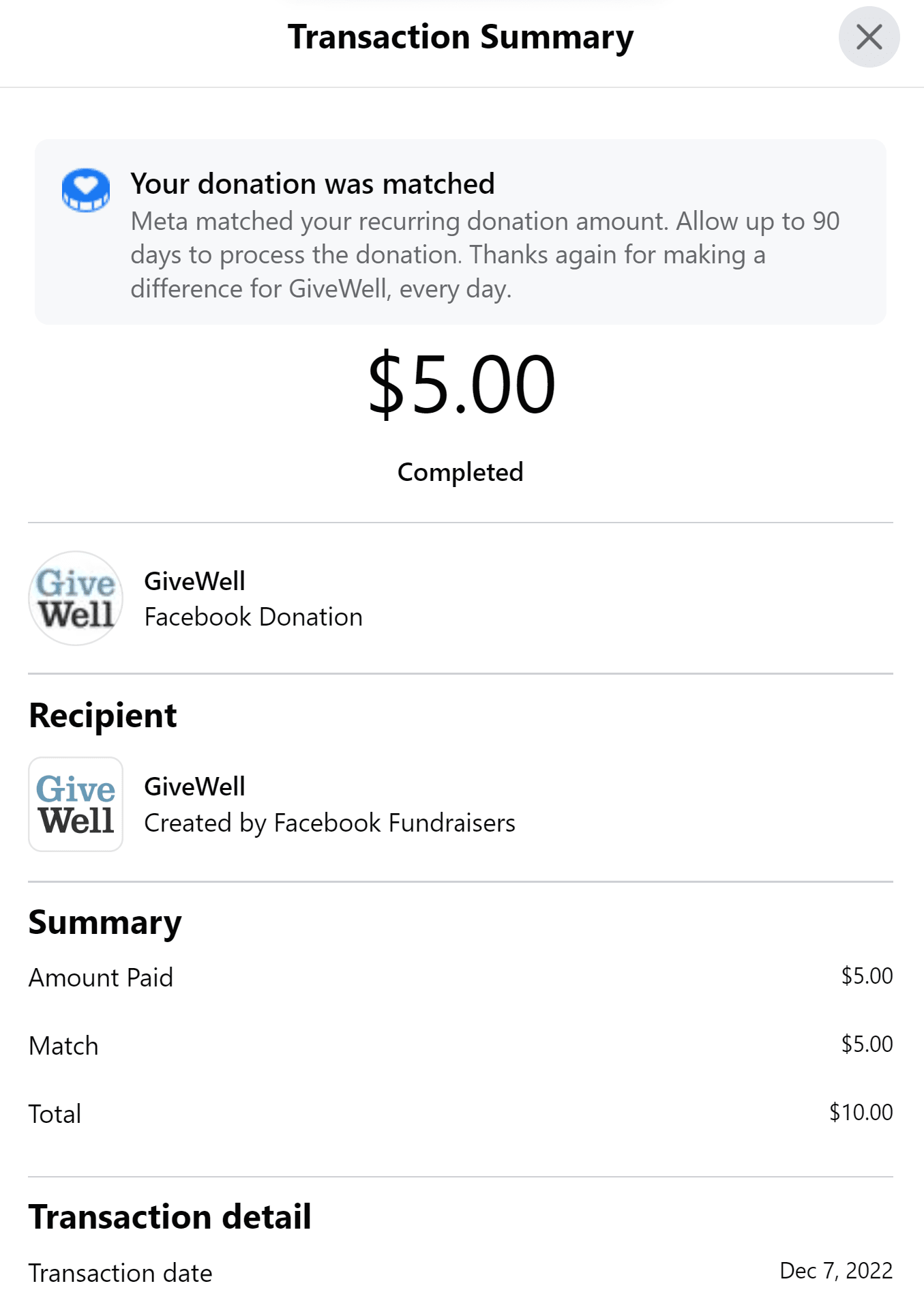

I also had a similar experience: I made my first substantial donation before learning that non-employer counterfactual donation matches existed.

It was the only donation I regretted, since by delaying it 6 months I could have doubled the amount of money I directed to the charity at no extra cost to me.

Great point, thanks for sharing!

While I assume that all long-time EAs learn that employer donation matching is a thing, we'd do well as a community to ensure that everyone learns about it before donating a substantial amount of money, and clearly that's not the case now.

Reminds me of this insightful XKCD: https://xkcd.com/1053/

For each thing 'everyone knows' by the time they're adults, every day there are, on average, 10,000 people in the US hearing about it for the first time.
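The comic's 10,000 figure comes from a steady-state argument: if everyone learns a fact exactly once while growing up, first-time learners per day equal births per day. A minimal sketch using the comic's approximate US birth rate of 4 million per year:

```python
US_BIRTHS_PER_YEAR = 4_000_000  # the comic's approximate US birth rate

# Everyone eventually learns the fact exactly once, so in steady state the
# number of first-time learners per day equals the number of births per day.
first_time_learners_per_day = US_BIRTHS_PER_YEAR / 365
print(f"{first_time_learners_per_day:,.0f} per day")
```

The result is about 11,000, which the comic rounds to 10,000.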

Thanks for sharing about your experience.

I see 4 people said they agreed with the post and 3 disagreed, so I thought I'd share my thoughts on this. (I was the 5th person to give the post Agreement Karma, which I endorse with some nuance added below.)

I've considered going on a long hike before and like you I believed the main consideration against doing so was the opportunity cost for my career and pursuit of having an altruistic impact.

It seemed to me that clearly there was something else I could do that would be better for my career and altruistic impact ...

I'll also add that I didn't like the subtitle of the video: "A case for optimism".

A lot of popular takes on futurism topics seem to me to focus on being optimistic or pessimistic, but whether one is optimistic or pessimistic about something doesn't seem like the sort of thing one should argue for. It seems a little like writing the bottom line first.

Rather, people should attempt to figure out what the actual probabilities of different futures are and how we are able to influence the future to make certain futures more or less probable. From there it's just...

I've been a fan of melodysheep since discovering his Symphony of Science series about 12 years ago.

Some thoughts as I watch:

- Toby Ord's The Precipice and his 16 percent estimate of existential catastrophe (in the next century) is cited directly

- The first part of the script seems heavily-inspired by Will MacAskill's What We Owe the Future

- In particular there is a strong focus on non-extinction, non-existentially catastrophic civilization collapse, just like in WWOTF

- 12:40 "But extinction in the long-term is nothing to fear. No species survives forever. ...

That is, I wasn’t viscerally worried. I had the concepts. But I didn’t have the “actually” part.

For me, having a concrete picture of the mechanism by which AI could actually kill everyone never felt necessary for viscerally believing that AI could kill everyone.

And I think this is because ever since I was a kid, long before hearing about AI risk or EA, the long-term future that seemed most intuitive to me was a future without humans (or post-humans).

The idea that humanity would go on to live forever and colonize the galaxy and the universe and l...

Thinking out loud about credences and PDFs for credences (is there a name for these?):

I don't think "highly confident people bear the burden of proof" is necessarily the correct way of stating my thought, but I'm trying to point at the idea that when two people disagree on X (e.g. 0.3% vs 30% credences), there's an asymmetry: the more confident person (0.3% in this case) is necessarily highly confident that the person they disagree with is wrong, whereas the less confident person (30% credence) is not necessarily highly ...

I'm not sure. I think you are the first person I heard of saying they got matched. When I asked in the EA Facebook group for this on December 15th if anyone got matched, all three people who responded (including myself) reported that they were double-charged for their December 15th donations. Initially we assumed the second receipt was a match, but then we saw that Facebook had actually just charged us twice. I haven't heard anything else about the match since then and just assumed I didn't get matched.

I felt a [...] profound sense of sadness at the thought of 100,000 chickens essentially being a rounding error compared to the overall size of the factory farming industry.

Yes, about 9 billion chickens are killed each year in the US alone, or about 1 million per hour. So 100,000 chickens are killed every 6 minutes in the US (and every 45 seconds globally). Still, it's a huge tragedy.
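The rates above follow from the 9 billion figure; the sketch below reproduces them. Note that the global number is the annual total implied by the "every 45 seconds" figure in the comment, not an independently sourced statistic.

```python
US_CHICKENS_PER_YEAR = 9e9  # figure from the comment
HOURS_PER_YEAR = 365 * 24
SECONDS_PER_YEAR = HOURS_PER_YEAR * 3600

us_per_hour = US_CHICKENS_PER_YEAR / HOURS_PER_YEAR        # ~1.03 million
minutes_per_100k_us = 100_000 / (us_per_hour / 60)         # ~5.8 minutes
global_per_year_implied = 100_000 / 45 * SECONDS_PER_YEAR  # ~70 billion

print(f"{us_per_hour:,.0f}/hour in the US; 100k every "
      f"{minutes_per_100k_us:.1f} min; implied global "
      f"{global_per_year_implied / 1e9:.0f}B/year")
```

Rounding 5.8 minutes up to 6 gives the "every 6 minutes" figure.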

This is a great point, thanks. Part of me thinks basically any work that increases AI capabilities probably accelerates AI timelines. But it seems plausible to me that advancing the frontier of research accelerates AI timelines much more than other work that merely increases AI capabilities, and that most of this frontier work is done at major AI labs.

If that's the case, then I think you're right that my using a prior for the average project to judge this specific project (as I did in the post) is not informative.

It would also mean we could tell a story ab...

...Thanks for the response and for the concern. To be clear, the purpose of this post was to explore how much a typical, small AI project would affect AI timelines and AI risk in expectation. It was not intended as a response to the ML engineer, and as such I did not send it or any of its contents to him, nor comment on the quoted thread. I understand how inappropriate it would be to reply to the engineer's polite acknowledgment of the concerns with my long analysis of how many additional people will die in expectation due to the project accel

I only play-tested it once (in-person with three people with one laptop plus one phone editing the spreadsheet) and the most annoying aspect of my implementation of it was having to record one's forecasts in a spreadsheet from a phone. If everyone had a laptop or their own device it'd be easier. But I made the spreadsheet to handle games (or teams?) of up to 8 people, so I think it could work well for that.

I love Wits & Wagers! You might be interested in Wits & Calibration, a variant I made during the pandemic in which players forecast the probability that each numeric range is 'correct' (closest to the true answer without being greater than it) rather than bet on the range that is most probable (as in the Party Edition) or highest EV given payout-ratios (regular Wits & Wagers). The spreadsheet I made auto-calculates all scores, so players need only enter their forecasts and check a box next to the correct answer.

I created the variant because I t...
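The "correct range" rule described above (closest to the true answer without being greater than it) plus a per-player score can be sketched as below. This is a hypothetical illustration, not the spreadsheet's actual formulas: the comment doesn't say which scoring rule the spreadsheet uses, so a natural-log scoring rule is assumed here, and the extra "every guess was too high" slot (key `None`) is also an assumption of this sketch.

```python
import math
from typing import Dict, List, Optional

# None stands for the assumed "every guess was too high" slot.
Outcome = Optional[float]

def correct_range(guesses: List[float], answer: float) -> Outcome:
    """Return the guess closest to the answer without exceeding it."""
    eligible = [g for g in guesses if g <= answer]
    return max(eligible) if eligible else None

def log_score(forecast: Dict[Outcome, float], outcome: Outcome) -> float:
    """Natural-log score of the probability placed on the correct range (assumed rule)."""
    if not math.isclose(sum(forecast.values()), 1.0):
        raise ValueError("forecast probabilities must sum to 1")
    p = forecast.get(outcome, 0.0)
    return math.log(p) if p > 0 else float("-inf")

guesses = [100, 250, 400]
outcome = correct_range(guesses, answer=300)  # 250: closest without going over
forecast = {None: 0.1, 100: 0.2, 250: 0.5, 400: 0.2}
print(outcome, round(log_score(forecast, outcome), 3))
```

A log score rewards calibration directly, since it is a proper scoring rule: players maximize their expected score by reporting their true probabilities.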

I was reminded of this comment of mine today and just thought I'd comment again to note that 5 years later my social-impact career prospects have gotten even worse. Concretely, I quit my last job (a sales job just for money) a year and a half ago and have just been living on savings since without applying anywhere in 18 months. Things definitely have not gone as I had hoped when I wrote this comment. Some things have gotten better in life (e.g. my mental health is better than it has been in over five years), but career-wise I'm doing very poorly (not even earning money with a random job) and have no positive trajectory to speak of.