If one were to create a custom social network for the effective altruism community, what would it look like?

In this article, I present an example concept and ask: What are its flaws or risks? What could be improved? What would be a different way to do it?

I tried to make it a simple, impact- and numbers-driven network that could add a lot of value and cover most of the aspirations EA community members have.

Summary

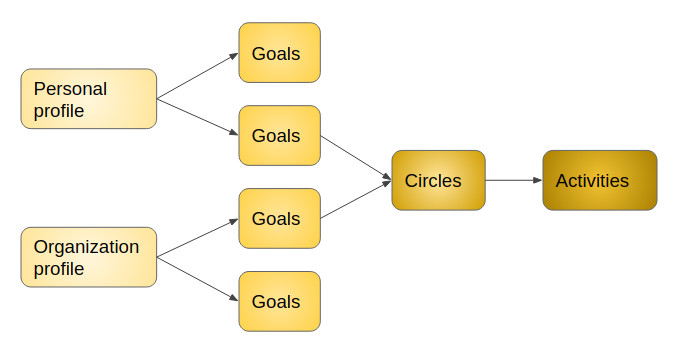

The concept is centered around goals and action.

- Members add goals to their profiles.

- They then add activities to their goals (for example, a raw idea for doing something).

- Artificial intelligence automatically matches people and organizations that have similar or opposite goals (for example, "Needs" and "Gives").

- Users then select the best matches to add to their circle around each goal.

- Within a circle, users see each other's activities and collaborate on them.

- Numbers are added to measure progress toward goals, and graphs gamify that progress.

In more detail

In the profile, members add their goals.

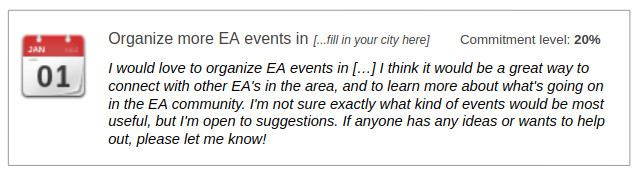

Each goal consists of a title, a picture, a description, and numbers.
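As a rough illustration, a goal could be stored as a small record. This is a minimal sketch under assumptions: the class names `Goal` and `Metric`, and the idea that each number has a current value and a target, are hypothetical and not part of the concept as described.

```python
from dataclasses import dataclass, field


@dataclass
class Metric:
    """Hypothetical numeric measure attached to a goal (e.g. people reached)."""
    name: str
    current: float
    target: float


@dataclass
class Goal:
    """Hypothetical goal record: title, picture, description and numbers."""
    title: str
    picture_url: str
    description: str
    numbers: list[Metric] = field(default_factory=list)


# Example goal with one measurable number (illustrative values only).
goal = Goal(
    title="Distribute bed nets",
    picture_url="https://example.org/nets.png",
    description="Fund and distribute malaria bed nets in one region.",
    numbers=[Metric(name="nets distributed", current=1200, target=5000)],
)
```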

Goal example:

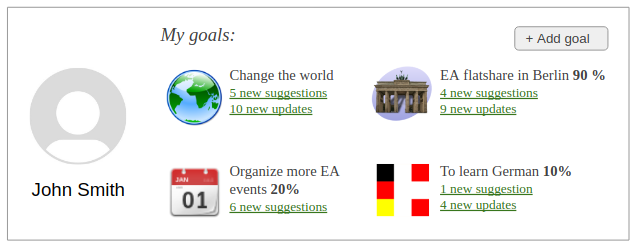

Profile example:

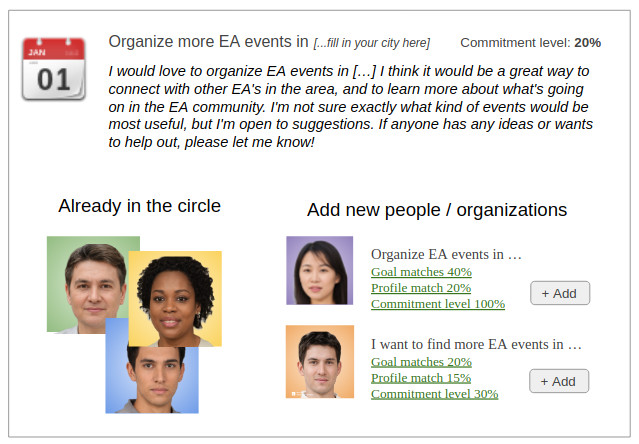

Automatic suggestions of people and organizations

For each goal, artificial intelligence suggests like-minded members based on the similarity of goal descriptions, while also taking other variables into account (such as the similarity between their other goals, and their numbers). Opposite goals, such as a "Needs" goal and a "Gives" goal, also get matched here.
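The post does not say which AI technique would do the matching. As a stand-in, the sketch below uses plain bag-of-words cosine similarity between goal descriptions; a real system would more likely use learned text embeddings. The `kind` field ("goal", "needs", "gives") and the function names are hypothetical. A "needs" goal is only compared against "gives" goals and vice versa, while ordinary goals match goals of the same kind. Weighting by a member's other goals and numbers is left out to keep it short.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Goal:
    owner: str        # member or organization name
    kind: str         # hypothetical: "goal", "needs" or "gives"
    description: str


def tokens(text: str) -> Counter:
    """Lower-cased word counts; a crude stand-in for real text embeddings."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# "Needs" is paired with "Gives" and vice versa; other kinds match themselves.
OPPOSITE = {"needs": "gives", "gives": "needs"}


def suggest(goal: Goal, others: list[Goal], top_k: int = 3) -> list[tuple[str, float]]:
    """Return up to top_k (owner, score) pairs, best match first."""
    wanted = OPPOSITE.get(goal.kind, goal.kind)
    query = tokens(goal.description)
    scored = [
        (other.owner, cosine(query, tokens(other.description)))
        for other in others
        if other.owner != goal.owner and other.kind == wanted
    ]
    scored = [pair for pair in scored if pair[1] > 0]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]


mine = Goal("Alice", "needs", "need a web developer for a charity donation website")
pool = [
    Goal("Bob", "gives", "web developer volunteering for charity projects"),
    Goal("Carol", "gives", "graphic design for animal welfare groups"),
    Goal("Dan", "needs", "need a web developer for a website"),
]
result = suggest(mine, pool)
print(result)  # Bob ranks first; Dan is skipped because he also "needs"
```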

Personal circles around goals

If a person gets good suggestions of members and organizations with similar goals, they add them to the goal's circle and follow their activities.

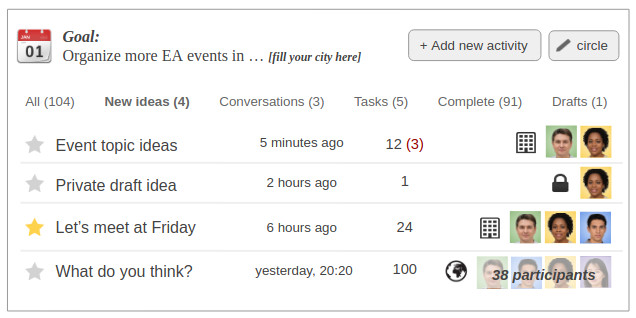

Collaboration

Users create activities for a goal. An activity can be a raw idea, a task, or a conversation.

The activity list may fill up with many ideas from the circle. What follows is a highly speculative prediction of what that could mean.

One scenario: since people are connected around something they care about, there is a degree of passion. If the goal is very specific, the activities that circle members post will be relevant. The whole tool encourages people to present raw ideas. Conversations happen, ideas combine and mix, and bad ones get postponed and forgotten. The result is problems solved and goals achieved through collective brainpower.

On the other hand, it is uncertain how this would actually play out. This is what the development team would need to test.

Value for organizations

- Since organizations create their own profiles with goals, the network would connect them with people and other organizations that have the same goals. For example, if several organizations have similar technology needs, they could join forces and solve them together.

- Since organizations create activities to achieve their goals, they could crowdsource ideas or tasks. This might become a streamlined way for organizations to attract motivated volunteers. For individuals, it would lower the barrier to contributing: by joining an organization's work today, they might eventually grow into employees.

Simplified project management system

If the concepts described above work in the real world, activities could be grouped into projects, categories, tags, and statuses to organize all work. This would add little new complexity; if anything, it would simplify things.
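To illustrate how little extra structure this would need, here is a minimal sketch assuming each activity carries an optional project, a status, and a set of tags. The `Activity` class, the status values, and the `board` and `with_tag` helpers are hypothetical. Grouping by project and then by status gives a simple kanban-style view.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Activity:
    title: str
    kind: str                        # "idea", "task" or "conversation"
    project: Optional[str] = None
    status: str = "open"             # hypothetical: "open", "in progress", "done"
    tags: set[str] = field(default_factory=set)


def board(activities: list[Activity]) -> dict[str, dict[str, list[str]]]:
    """Group activity titles by project, then by status (kanban-style)."""
    grouped = defaultdict(lambda: defaultdict(list))
    for activity in activities:
        grouped[activity.project or "Unsorted"][activity.status].append(activity.title)
    return {project: dict(statuses) for project, statuses in grouped.items()}


def with_tag(activities: list[Activity], tag: str) -> list[str]:
    """Titles of all activities carrying the given tag."""
    return [a.title for a in activities if tag in a.tags]


activities = [
    Activity("Draft donation page", "task", project="Website", status="in progress"),
    Activity("Use a static site generator?", "idea", project="Website", tags={"tech"}),
    Activity("Kick-off call", "conversation", status="done"),
]
print(board(activities))
print(with_tag(activities, "tech"))
```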

Integrated measurement

People would add core numbers to their goals to measure their progress, and these numbers would be reflected in graphs to gamify it. Which measurement tools would fit best here?
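The choice of measurement tool is left open above. One simple option, sketched here under the assumption that each number has a start value, a current value, and a target, is to express progress as a fraction of the way to the target and render it as a bar. The `Metric` class and `bar` helper are hypothetical. Because progress is computed relative to the start, the same formula also works for goals where the number should go down (for example, reducing cost per person reached).

```python
from dataclasses import dataclass


@dataclass
class Metric:
    name: str
    start: float
    current: float
    target: float

    def progress(self) -> float:
        """Fraction of the way from start to target, clamped to [0, 1]."""
        span = self.target - self.start
        if span == 0:
            return 1.0
        return max(0.0, min(1.0, (self.current - self.start) / span))


def bar(metric: Metric, width: int = 20) -> str:
    """Text progress bar, a stand-in for the gamified graphs."""
    filled = round(metric.progress() * width)
    return f"{metric.name:<18} [{'#' * filled}{'.' * (width - filled)}] {metric.progress():.0%}"


nets = Metric("nets distributed", start=0, current=1250, target=5000)
cost = Metric("cost per person", start=100, current=75, target=50)  # lower is better
print(bar(nets))
print(bar(cost))
```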

Dashboard

In the dashboard, everything important is in one place.

Next steps?

The channel #custom-ideas was created in the "EA Public Interest Technologists" Slack workspace [3]. We can also discuss it in the comments under this post.

Why explore and develop new concepts?

To innovate. If some of the concepts prove to work in the real world, they could be merged into existing platforms such as eahub.org.

References

- [1] Example icons taken from https://commons.wikimedia.org/

- [2] Example photos taken from https://generated.photos/

- [3] Link to join the Slack workspace: https://join.slack.com/t/ea-pub-interest-tech/shared_invite/zt-tar2i03b-3xqmTh1lLFn8NWB6X1ZA6Q

Update 2022-03-04.

A prototype was created to test this concept. More information is available in a new article:

https://forum.effectivealtruism.org/posts/Rtfvyoj5wfA3wYpsq/prototype-of-re-imagined-social-network-for-ea-community

Thanks for sharing! I've had the feeling for a while that it would be great if EA managed to make the goals, projects, and activities of people (and organizations) more transparent to each other. For example, when I'm working on some EA project, it would be great if other EAs who might be interested in that topic knew about it. Yet there are no good ways I'm aware of to even share such information. So I certainly like the direction you're taking here.

I guess one risk would be that, however easy the system is to use, it is still overhead for people to keep their projects and goals reflected there, unless it happens to be their primary or only project management system (which would be very hard to achieve).

Another risk could be that people use it at first but don't stick with it for long, leaving a lot of stale information in the system and making it hard to rely on, even for highly engaged people.

I guess you could ask two related questions. Firstly, let's call it "easy mode": assuming the network existed as imagined, and most people in EA were in fact using this system as intended - would an additional person that first learns of it start using it in the same productive way?

And secondly, in a more realistic situation where very few people are actively using it, would it then make sense for any single additional person to start using it, share their goals and projects, keep things up to date persistently, probably with quite a bit of overhead on their part because it would happen on top of their actual project management system?

I think it's great to come up with ideas about e.g. "the best possible version of EA Hub" and just see what comes out, even though it's hard to come up with ideas that would answer both of the above questions positively. This is why improving the EA Hub generally seems more promising to me than building any new type of network: at least you'd be starting with a decent user base and would remove the hurdles of "signing up somewhere" and "being part of multiple EA-related social networks".

So, long story short, I quite like your approach and the depth of your mock-up/prototype, and think it could serve as inspiration for EA Hub to a degree. I have my doubts that it would be worthwhile to actually build something new just to try the concept, except maybe a rough interactive prototype (e.g. a paper prototype or "click dummy") played through with a few EAs, which might be worthwhile for learning more about it.

True, this has to be done in smaller steps, such as a prototype. It can be implemented in many shapes and forms. I am working on such ideas right now. All the other points you made are valid and, I think, solvable. Good to hear the concept is not fundamentally flawed. Thanks for your comment.

Interesting article! Thank you!! For me, the best social network is LinkedIn, since it is a work- and business-focused network.

When creating an account for your network above, would I be able to pull in my LinkedIn Profile? If so, that would make your network slightly easier to start using.

Good point about LinkedIn. It seems to be a very good networking tool for businesses. It helps businesses find employees, partners, and clients, and vice versa, so that they can then do business together. LinkedIn appears to cover the needs of businesses already, so this concept may add no value for them, mainly because of their competitive nature and large scale. Companies will not want to share their day-to-day activities, nor their day-to-day goals.

But it would add new value for non-profit organizations and effective altruists working to solve world problems, because of their collaborative nature and decentralization. EA members work toward common goals through decentralized initiative, and there is a lot of room to innovate in this process, mainly because a community like effective altruism has never existed before.

True, it may be difficult to define goals and start using the network. Then again, it may not be an issue: users may find a lot of inspiration in the many examples in other profiles. There could be a button to clone those examples and modify them.

Goals could also be small, practical things to achieve. If you need something and don't know how to get there, you add it to your profile, possibly in a private, hidden mode. The AI then searches for a match.

It may be difficult to import a LinkedIn profile, because the structure is completely different. Artificial intelligence could scan your profile and define goals for you, but that does not seem realistic, unless LinkedIn profiles contain information that could be converted into goal statements and descriptions?