Introduction

At the GCP Workshop last weekend, we discussed what’s known as the “Transmitter Room Problem.” The transmitter room problem is a thought experiment developed by Scanlon:

“Suppose that Jones has suffered an accident in the transmitter room of a television station. Electrical equipment has fallen on his arm, and we cannot rescue him without turning off the transmitter for fifteen minutes. A World Cup match is in progress, watched by many people, and it will not be over for an hour. Jones’s injury will not get any worse if we wait, but his hand has been mashed and he is receiving extremely painful electrical shocks.”[1]

To make the argument stronger, we extend the example to a hypothetical “Galactic Cup” where the number of potential viewers is arbitrarily large. Moreover, to consider the most extreme case, we assume that Jones suffers as much pain as a human can survive.

If this individual is spared, the broadcast must be stopped, causing countless viewers across the galaxy to miss the event and feel upset. The question is whether, at some unimaginably large scale, the aggregated minor distress of countless viewers could outweigh one person’s extreme, concentrated suffering.

This dilemma is deeply counterintuitive. Below are two different approaches we’ve seen proposed elsewhere, and one which we found during our discussion that we'd like thoughts on.

1. Biting the Bullet

In an episode (at 01:47:17) of the 80,000 Hours Podcast, Robert Wiblin discusses this problem and argues that the suffering of the individual might be permissible when weighed against the aggregate suffering of an immense number of viewers. He suggests that the counterintuitive nature of this conclusion arises from our difficulty in intuitively grasping large numbers. Wiblin further points out that we already accept analogous harms in real-world scenarios, such as deaths during stadium construction or environmental costs from travel to large-scale events. In this view, the aggregate utility of the broadcast outweighs the extreme disutility experienced by the individual.

2. Infinite Disutility

Another perspective posits that the extreme suffering of a single person can generate infinite disutility.[2] For instance, the pain of a single individual experiencing every painful nerve firing at once can be modeled as negative infinity in utility terms. Under this framework, no finite aggregation of the disutility of mild discomfort among viewers could counterbalance the individual’s suffering. While this approach sidesteps the problem of large numbers, it introduces a new challenge: it implies that a world with two individuals undergoing such extreme suffering is no worse than a world with one, as both scenarios involve the same negative infinity in utility. It might also be prudent to "save" the concept of infinite negative utility for the true worst-case scenarios.
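To make the objection concrete, here is a minimal sketch in Python. It assumes that welfare is a plain sum of individual utilities, and it uses a made-up per-viewer disutility (`MILD_DISCOMFORT`) purely for illustration; neither the number nor the function name comes from the sources discussed above.

```python
# Assumption: overall welfare is an unweighted sum of individual utilities.
NEG_INF = float("-inf")    # model of one person's extreme suffering
MILD_DISCOMFORT = -0.001   # hypothetical disutility of one upset viewer


def world_utility(n_sufferers, n_upset_viewers):
    """Total utility of a world with the given numbers of sufferers and upset viewers."""
    extreme = sum([NEG_INF] * n_sufferers)  # sum([]) is 0, so zero sufferers is handled
    mild = n_upset_viewers * MILD_DISCOMFORT
    return extreme + mild


# Letting Jones suffer is worse than upsetting any finite audience.
print(world_utility(1, 0) < world_utility(0, 10**30))  # True

# But one sufferer and two sufferers come out exactly equal.
print(world_utility(1, 0) == world_utility(2, 0))  # True
```

The second comparison is the problem described above: once a single term is negative infinity, the sum can no longer distinguish between one and many people in extreme suffering.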

3. The Light Cone Solution

This approach begins by assuming that the observable universe (“our light cone”) is finite with certainty.[3] Even the Galactic Cup cannot reach an infinite audience due to constraints such as the finite lifespan of the universe and the expansion of space, which limits the number of sentient beings within our causal reach. Given these boundaries, the number of potential viewers is finite, albeit astronomically large.

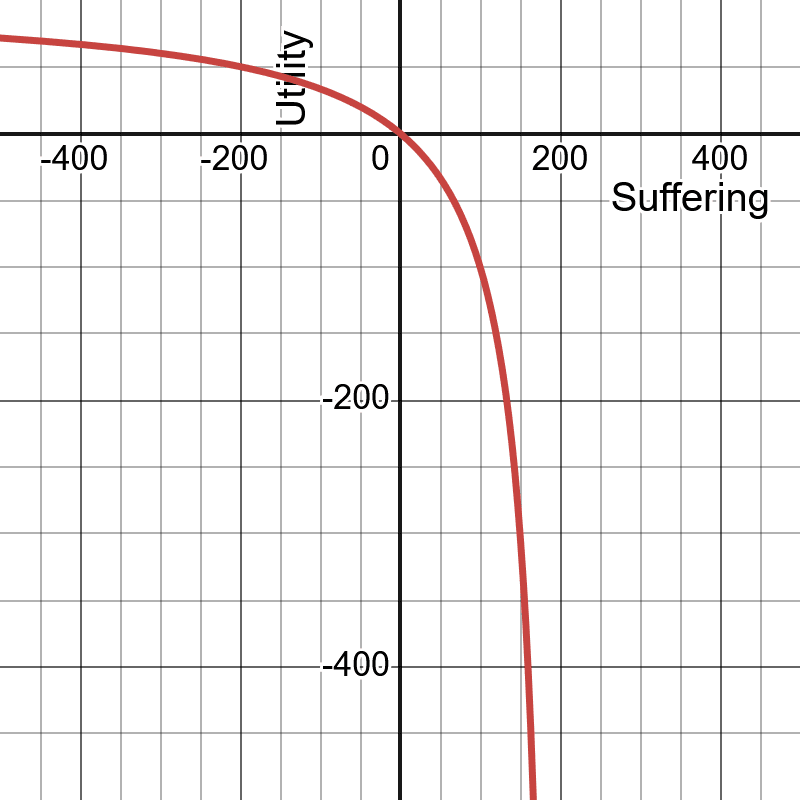

By assigning a sufficiently large negative (but now finite) utility to the individual’s extreme suffering, this perspective ensures that it outweighs the aggregate discomfort of any audience whose size remains within physical limits. Because that negative utility is finite, the approach also avoids the implication that several people in extreme suffering are no worse than one: the disutilities of multiple individuals under the same conditions add up, so more sufferers are strictly worse than one.
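The same kind of sketch shows how a finite bound changes the picture. The audience cap `MAX_VIEWERS` below is a placeholder, not a physical estimate, and utilities are measured in integer units of one viewer's mild discomfort so that the comparisons are exact. All names and numbers are assumptions made for illustration.

```python
# Assumption: welfare is a plain sum of utilities, in units of one viewer's discomfort.
MAX_VIEWERS = 10**80       # hypothetical cap on the audience within our light cone
MILD_DISCOMFORT = -1       # one upset viewer
# Finite, but strictly worse than the largest physically possible upset audience.
JONES_UTILITY = MAX_VIEWERS * MILD_DISCOMFORT - 1


def rescuing_jones_is_better(n_viewers):
    """Compare stopping the broadcast (upset viewers) with letting Jones suffer."""
    if n_viewers > MAX_VIEWERS:
        raise ValueError("audience exceeds the assumed physical bound")
    return n_viewers * MILD_DISCOMFORT > JONES_UTILITY


# Even the largest admissible audience does not outweigh Jones's suffering.
print(rescuing_jones_is_better(MAX_VIEWERS))  # True

# Unlike the infinite case, two sufferers are strictly worse than one.
print(2 * JONES_UTILITY < JONES_UTILITY)  # True
```

The result depends entirely on the cap: if the audience could exceed `MAX_VIEWERS`, any fixed finite value for Jones's suffering would eventually be outweighed.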

The finiteness of the observable universe only solves the transmitter room problem as originally stated. One can imagine a variation in which the broadcast affects not only the people watching at a given moment but also beings at later times. For the Light Cone Solution to hold, one must then give some reason why time is also finite, or why the number of sentient beings cannot become infinite given infinite time. This raises the question of whether heat death will actually occur, or whether other hypotheses about the universe becoming uninhabitable hold.

To sum up, assuming that the total number of sentient beings will be finite, both in time and space, and that utility is sufficiently concave in suffering, might lead to interesting conclusions relevant for EA. Regarding near-termist EA, the insight into how the weighting of the intensity of suffering matters for cost-effectiveness analysis has probably been discussed elsewhere in detail. Regarding long-termism, the usual conclusion seems to be that one ought to prioritize the existence and value of the long-term future over avoiding suffering of small or medium intensity, but it is at least possible that there is a moral imperative to focus on avoiding large suffering today rather than on making the future happen. Alternatively, the proposed way out of the transmitter room problem made me find S-risks more relevant compared to x-risks.

Open questions

We’d be interested to hear if this solution to the transmitter room problem has been discussed somewhere else, and would be thankful for reading tips. Furthermore, we’re curious to hear others’ thoughts on these approaches or alternative solutions to the Transmitter Room Problem. Are there other perspectives we’ve overlooked? How should we weigh extreme suffering against dispersed mild discomfort at astronomical scales?

Acknowledgements

Thanks to Jian Xin Lim for loads of great comments and insight.

- ^

Scanlon, T.M. (1998). What We Owe to Each Other. Belknap Press.

- ^

To fix ideas, suppose we measure how bad a possible world is by a (possibly weighted) sum of utilities. Utilities are then the measure of how much an individual's suffering matters for overall welfare. I assume one could weaken a number of assumptions and the argument might still work, but this would go beyond the scope of this post.

- ^

Mogensen and Wiblin discuss this problem in this podcast episode, fwiw. That's all I know, sorry.

Btw, if you really endorse your solution (and ignore potential aliens colonizing our corner of the universe someday, maybe), I think you should find GCP's take (and the take of most people on this Forum) on the value of reducing X-risks deeply problematic. Do you agree, or do you believe the future of our light cone with humanity around doing things will not contain any suffering (or anything that would be worse than the suffering of one Jones in the “Transmitter Room Problem”)? You got me curious.

Sorry, that wasn't super clear. I'm saying that if you believe that there is more total suffering in a human-controlled future than in a future not controlled by humans, X-risk reduction would be problematic from the point of view you defend in your post.

So if you endorse this point of view, you should either believe x-risk reduction is bad or that there isn't more total suffering in a human-controlled future. Believing either of those would be unusual (although this doesn't mean you're wrong) which is why I was curious.