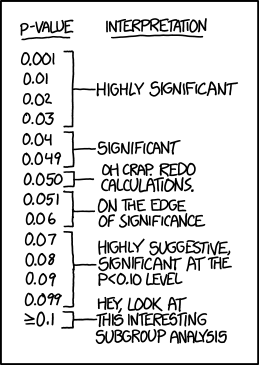

We all know that good science is hard. Sometimes science is the victim of outright fraud, such as when people intentionally falsify data. However, we can frequently mislead ourselves even with the best of intentions. Often this happens by performing multiple analyses and keeping only the ones that turn out to be statistically significant, a practice called p-hacking.

For example, this FiveThirtyEight interactive shows that you can create dramatically different yet statistically significant results merely by redefining your terms. Don’t like your current results? Simply change things around, re-run your data, and maybe this time around you’ll get the results you like!

But p-hacking isn’t the only problem. Another is post-hoc analysis and reasoning: once the initial plan fails, researchers go looking for something completely different yet still publishable. A further common failure is not recruiting enough participants to detect an effect and then confidently announcing that no effect was found. Lastly, studies with disappointing results can simply end up in the file drawer, which produces publication bias.

-

Many techniques have been invented to crack down on these intentional and unintentional biases. One technique that does well at reducing (though not eliminating) nearly all these problems is study pre-registration, where scientists explicitly state how they will collect data and what analyses they will perform before any actual data collection or analysis. This way, when the temptation arises to do post-hoc analysis or p-hacking, the resulting analysis can be compared to the pre-registration and rejected. Pre-registrations can also be tracked to see whether the resulting study is ever published, which helps detect publication bias.

However, study pre-registration could go much further to ensure a level of trustworthiness that is automatically verifiable. Currently, a pre-registration often describes the planned data analysis in plain English. However, the actual analysis is usually written in a statistical programming language, such as Stata, R, or Python. Code is far more precise than English prose and leaves even less room for equivocation. Therefore, I suggest that people pre-register not only descriptions of their analysis plans but the analysis code as well.

-

The biggest barrier to this is that developing the analysis code is often an interactive process: you try to follow the pre-analysis plan, but you also need to wrangle the data as it comes, which is difficult to pin down without the actual dataset in hand. However, we can solve this problem by generating dummy data that is similar in format to the real data.

The dummy data also opens up the opportunity to rigorously test the pre-analysis plan. If we re-run the analysis code under different scenarios, such as dummy data engineered to show no effect, dummy data engineered to show some but not a significant effect, and dummy data engineered to show a significant effect, we can double check that the analysis code holds up under these conditions. (If there’s enough interest, I intend to eventually write an R and/or Python library to automate this process of generating fake data, but for now you’re on your own.)

For example, does the analysis code show a significant effect when the dummy data wasn’t supposed to have one? Perhaps the analysis is vulnerable to testing too many hypotheses. Does the analysis code not show an effect when the dummy data was supposed to show one? Perhaps the study is underpowered.
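As a rough illustration of these checks, here is a minimal Python sketch that runs one frozen analysis against dummy data engineered to show no effect, a small effect, and a large effect. Everything in it is an assumption made for illustration: the two-arm design, the sample size of 50 per arm, the effect sizes, the normal-approximation test, and the function names `make_dummy_data`, `preregistered_analysis`, and `rejection_rate`. A real pre-registration would substitute its own data format and analysis.

```python
import random
from statistics import NormalDist, mean, variance

ALPHA = 0.05  # significance threshold, fixed before seeing any data


def make_dummy_data(n_per_arm, true_effect, rng):
    """Hypothetical dummy data: two arms with unit-variance normal outcomes.

    true_effect is the treatment-minus-control difference in SD units.
    """
    control = [rng.gauss(0.0, 1.0) for _ in range(n_per_arm)]
    treatment = [rng.gauss(true_effect, 1.0) for _ in range(n_per_arm)]
    return control, treatment


def preregistered_analysis(control, treatment):
    """The frozen analysis: two-sided p-value for a difference in means.

    Uses a normal approximation to Welch's test (an illustrative choice).
    """
    se = (variance(control) / len(control)
          + variance(treatment) / len(treatment)) ** 0.5
    z = (mean(treatment) - mean(control)) / se
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))


def rejection_rate(n_per_arm, true_effect, n_sims=1000, seed=0):
    """Fraction of simulated studies in which the analysis reports p < ALPHA."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        control, treatment = make_dummy_data(n_per_arm, true_effect, rng)
        if preregistered_analysis(control, treatment) < ALPHA:
            hits += 1
    return hits / n_sims


# Three engineered scenarios: no effect, small effect, large effect.
false_positive_rate = rejection_rate(n_per_arm=50, true_effect=0.0)
power_small = rejection_rate(n_per_arm=50, true_effect=0.2)
power_large = rejection_rate(n_per_arm=50, true_effect=0.8)

print(f"no effect:    {false_positive_rate:.3f}")
print(f"small effect: {power_small:.3f}")
print(f"large effect: {power_large:.3f}")
```

If the rate under the no-effect scenario sits well above `ALPHA`, the analysis is probably taking too many shots at significance. If the rate under the effect size you actually expect is low, the planned sample is likely too small.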

-

A simpler alternative to generating entirely dummy data is to have someone else record the actual dependent variable and randomize it before you do the analysis. When you run the analysis on the randomized dependent variable, you should not pick up any statistical trends beyond what chance alone produces, since randomizing destroys any real relationship. If you do find trends, it is a sign you are p-fishing.
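A minimal sketch of this randomization check, in Python, might look like the following. The simulated dataset, the group coding, the one-SD effect, and the function names `shuffle_outcome` and `analysis_p_value` are all hypothetical. In practice a collaborator would hold the real outcome column and hand over only the shuffled copy.

```python
import random
from statistics import NormalDist, mean, variance

ALPHA = 0.05  # significance threshold, fixed in advance


def shuffle_outcome(outcomes, seed):
    """Return a shuffled copy of the outcome column.

    Shuffling breaks any real link between the outcome and the predictors.
    """
    shuffled = list(outcomes)
    random.Random(seed).shuffle(shuffled)
    return shuffled


def analysis_p_value(groups, outcomes):
    """Hypothetical frozen analysis: two-sided test of a difference in means
    between group 1 and group 0 (normal approximation)."""
    treated = [y for g, y in zip(groups, outcomes) if g == 1]
    control = [y for g, y in zip(groups, outcomes) if g == 0]
    se = (variance(treated) / len(treated)
          + variance(control) / len(control)) ** 0.5
    z = (mean(treated) - mean(control)) / se
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))


# Stand-in for the real study: 200 participants, true effect of one SD.
rng = random.Random(42)
groups = [i % 2 for i in range(200)]
real_outcomes = [rng.gauss(1.0 * g, 1.0) for g in groups]

# On the real outcome the analysis should detect the effect.
real_p = analysis_p_value(groups, real_outcomes)

# On shuffled outcomes it should reach significance only about ALPHA of the time.
n_shuffles = 500
significant = sum(
    analysis_p_value(groups, shuffle_outcome(real_outcomes, seed)) < ALPHA
    for seed in range(n_shuffles)
)
shuffled_rate = significant / n_shuffles

print(f"p-value on real outcome: {real_p:.2g}")
print(f"share of shuffles with p < {ALPHA}: {shuffled_rate:.3f}")
```

Repeating the shuffle many times, rather than once, is a design choice made here so that the false positive rate can be estimated directly. A single shuffled run that comes up significant could simply be the expected one-in-twenty fluke.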

-

Using these pre-registration approaches, you can check for yourself that your analysis plan works and will produce results that are likely to be true. But more importantly, anyone who sees your pre-registration can run it for themselves and confirm that your study is well protected against multiple comparisons, p-hacking, lack of statistical power, etc. Furthermore, they can run the pre-registered analysis code on your published actual data and verify the results in your paper. This makes the trustworthiness of a pre-registered study automatically checkable, so that fake results would only be possible through massive fraud, such as falsified data.

-

While I came up with this idea independently, I found out that scientists at CERN have a very similar approach:

“We don’t work with real data until the very last step,” she explains. After the analysis tools—algorithms and software, essentially—are defined, they are applied to real data, a process known as the unblinding. “Once we look at the real data,” says Tackmann, “we’re not allowed to change the analysis anymore.” To do so might inadvertently create bias, by tempting the physicists to tune their analysis tools toward what they hope to see, in the worst cases actually creating results that don’t exist. The ability of the precocious individual physicist to suggest a new data cut or filter is restricted by this procedure: He or she wouldn’t even see real data until late in the game, and every analysis is vetted independently by multiple other scientists.

This is a great idea. The only tweak I'd suggest is to let people do data analysis that's not in the pre-registration as long as it's clearly marked. Exploratory data analysis doesn't have to be bad; if nothing else, it can be a good way to get ideas for future studies.

Even though this seems like a pretty clear improvement over the status quo, it also feels like a band-aid. In the worst case, I could imagine data falsification becoming more common if pre-registration of data analysis became required. Funding-starved academics desperately need to produce interesting, publishable results ("publish or perish"), and this would remove one of the main things that makes that possible.

I would like to see more people write about what academia might look like if it were rethought from the ground up. OpenPhil has $10 billion, and funding is to academics what candy is to kids. If OpenPhil tells researchers they have to work a particular way, my guess is they will do it. This could be a really valuable opportunity to experiment with alternative models.

I agree with this entirely (most of my 'proper' academic work has an analysis plan and released code, but not code written prior to analysis; I wish I had thought of using dummy data). It is not even clear that it is much more arduous than thrashing it out on the console: that will happen either way, so the only additional cost is generating the dummy data.

Specification curves might help: https://www.youtube.com/watch?v=g75jstZidX0

I'll also point out that pre-registration is one of those ideas that is useful for casual reasoning as well: figuring out your evidence buckets and how they would sway your opinion on being filled with various pieces of evidence is often very helpful for sharpening a nebulous problem into an understandable one.