In short

- Effective Environmentalism has re-launched! 🌿

- Our aim is to make the environmental movement more effective by inspiring and enabling people to do high-impact environmental work.

- We currently have zero funding and are volunteer-run with very little available time.

- We are looking for 100,000 to 190,000 EUR in funding so that we can work full-time on this initiative for at least one year, including operational expenses. (Smaller or larger grants are also possible.)

- This funding would pay our salaries and enable us to register as a non-profit, develop introductory resources for effective environmental action, reach out to the environmental movement, and build a community of ‘effective environmentalists’.

Introduction

You might have heard from us at an EAG or EAGx, on Substack or LinkedIn, or somewhere in the climate space, but is it really official until you write an EA Forum post?

We are excited to re-launch[1] the Effective Environmentalism field-building initiative! You can think of effective environmentalism as a sub-community of the environmental movement that is inspired by the core ideas of effective altruism, such as prioritisation, truth-seeking, and collaboration. We focus mainly on climate change, but also on other environmental topics such as air pollution and biodiversity because of the strong overlap.

The goal of Effective Environmentalism is to (a) grow the global community of people finding the most effective ways to tackle environmental challenges, and (b) help that community put those approaches into practice. We want to bring core EA ideas to the environmental movement so that it works as effectively as possible to reduce emissions, pollution, human suffering, and animal suffering.

Why Effective Environmentalism?

Problem statement

Environmental challenges, including climate change, air pollution, and biodiversity collapse, seriously threaten global health and development, as well as the safety of future generations.

Compared to some other causes, a lot of resources (time and money) are available for tackling environmental issues, but they are often spent ineffectively or even counter-productively: for example, on promoting personal lifestyle changes or on unproven carbon offset projects.

Aim of the project

Our hypothesis is that the environmental movement could become orders of magnitude more effective by embracing the principles of effective altruism (EA): prioritisation, impartiality, truth-seeking, and collaboration. Of course, we cannot convince the entire climate movement to adopt EA principles, but we can still try to influence part of it.

While EA-aligned climate organisations exist, efforts to grow an EA-inspired environmental movement are lacking. Effective Environmentalism aims to fill this gap by promoting the principles of effective altruism within the environmental movement.

Our work

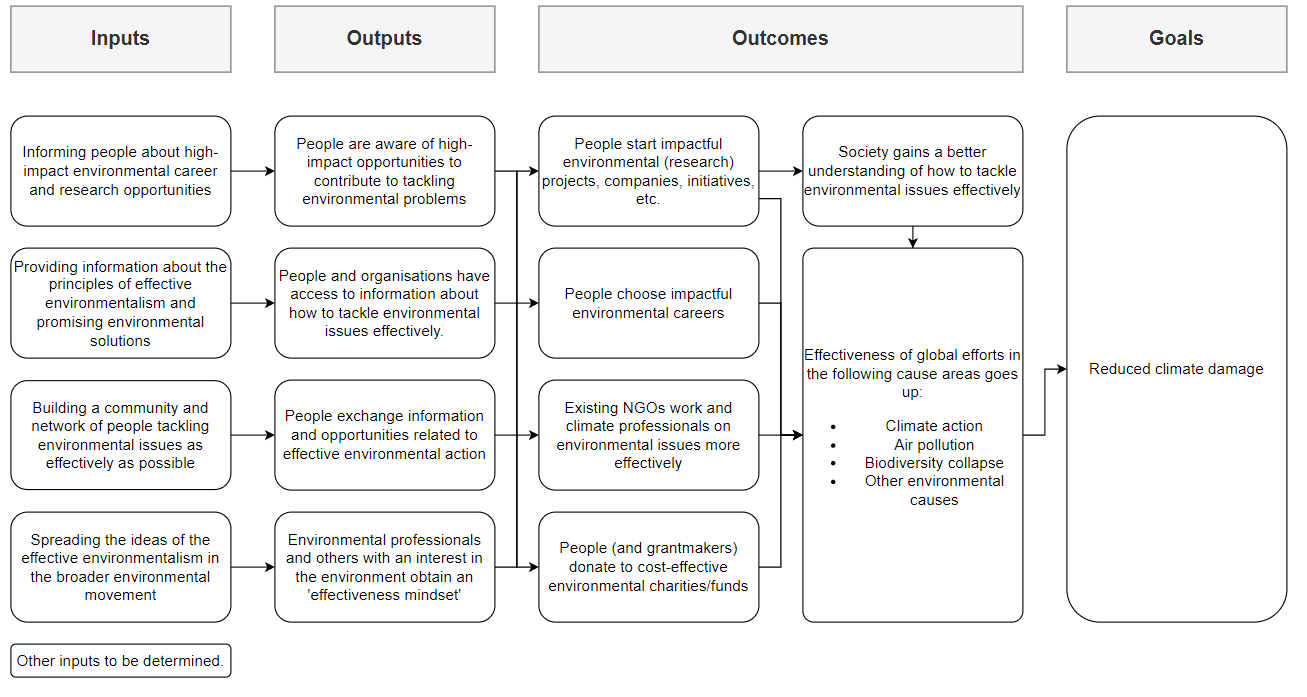

Theory of Change

Activities we'll do

Our goal is to build an ‘effective environmentalism’ movement by:

- Facilitating easy access to resources to learn about EA-inspired approaches to tackling environmental challenges. For example, by developing our website into a ‘one-stop shop’ for introductory information and opportunities in effective environmental action.

- Building a community and network of people researching and working on effective approaches to environmental challenges. For example, through a moderated LinkedIn group and Slack workspace.

- Giving guidance and support for finding an impactful environmental career or starting a (research) project. For example, a career guide for applicants and a talent pool for employers.

- Spreading the ideas of effective environmentalism in public appearances and online, and equipping members of the community to do so, too. For example, by speaking at environmental conferences and continuing to grow our newsletter.

Activities we won't do

We’ve decided against the following activities because other organisations are in a better position to do them:

- Directly lobbying a government ourselves, as other charities are in a better position to do so. (We might make exceptions if there’s a strong community-building aspect to this, such as public consultations. During a conference in Amsterdam in early November, we organised responses to a public consultation calling for alternative proteins to be included in the Dutch Climate Plan.)

- Researching which interventions or charities are most effective, as existing charity evaluators already do this work.

- Fundraising activities, as effective giving organisations already do this work. However, we do recommend donating to the funds run by Giving Green and Founders Pledge as one effective action people can take to tackle climate change.

Intended audience

We think we can make the biggest impact by focusing on people with a strong interest in or experience with environmental issues like climate change who are sympathetic to the ideas of effective altruism but not actively involved in the EA community.

This audience will probably work on climate change or other environmental issues regardless, but can be influenced relatively easily to increase the effectiveness of their work. We can help them do so either by changing their career path or by becoming more effective in their existing line of work.

Audiences that we don’t focus on:

- The effective altruism movement. Many people here will have very impactful career paths anyway, albeit in different cause areas. From a greater-good perspective, it doesn’t make sense to convince them to switch from animal welfare or AI safety to climate change.

- Climate activists, because they already have strong ideas about how to tackle environmental issues and are therefore not a tractable audience. Additionally, being associated with activist groups like Extinction Rebellion (XR) brings reputational risks, such as being seen as irrational or political.

Team

Our two co-directors, Soemano Zeijlmans and Ruben Dieleman, are based in the Netherlands. Their skills are complementary: one has strong expertise in climate change, while the other has experience with campaigning and organising for effective causes.

Soemano Zeijlmans has diverse experience in effective altruism and climate change. He is currently a Research Fellow at Ambitious Impact and previously worked as a Policy Analyst at the Good Food Institute Europe, identifying and pursuing cost-effective advocacy opportunities for alternative proteins. He has also published research on environmental and energy policy at consultancy Copper8 and worked as a freelance coach helping student groups become more effective. He holds an MA in Environment, Development and Peace from the UN University for Peace and an MSc in Environmental Economics from Vrije Universiteit Amsterdam.

Ruben Dieleman has a background in political science, project management, and journalism. He participates in the Dutch National Think Tank, which is investigating the circular economy and sustainability. He previously worked as a campaign manager with the Existential Risk Observatory in Amsterdam. In spring 2024, Ruben completed an Operations Fellowship with Workstreams. Earlier, he organised EAGxRotterdam 2022, as well as several AI Safety Talks events surrounding the UK AI Safety Summit in 2023. He studied for an MSc in International Administration and Global Governance at Gothenburg University.

We also have several great volunteers who have offered to help out with local organising and outreach!

Funding need

We currently run Effective Environmentalism in our spare time because we do not have any funding, so we spend far less time on this initiative than we would like.

Most of the funding would go towards our own salaries (plus secondary benefits such as pension contributions) so that we can devote our time to the project. Additionally, we need a small operational budget for registering as a charity, software, promotional materials, and travel.

We estimate that we can effectively absorb 100,000 to 190,000 EUR, which would pay for both salaries and operations for one year, plus some contractors if useful. We are also open to lower amounts (for temporary, project-based work) and higher amounts (which would allow the project to run for longer or fund additional hires). The amount we ask for is equivalent to a typical Charity Entrepreneurship seed grant.[2]

Ideally, we would start working full-time on Effective Environmentalism in early 2025, as both co-directors' current contracts run until the end of 2024. This creates a window of opportunity for shifting our focus and careers.

We think we will be able to secure non-EA funding after initial seed funding, once we have demonstrated our success and effectiveness and built a reputation in the environmental field.

Our progress so far

- We've set up a brand, website, revamped newsletter, and socials.

- We've created a LinkedIn group, although this is still rather small.

- We've hosted meetups and events at EAGx Utrecht (~30 people), EAGx Berlin (~60 people), and the University of Amsterdam (~15 people).

- We've helped set up a network for replying to public climate consultations to shift attention to effective climate solutions in the EU and the Netherlands, which led to at least 5 responses to include alternative proteins in the Dutch Climate Plan 2030-2050.

- We've advised a regional director of a large international environmental NGO who reached out to us for help with increasing the effectiveness of their climate strategy.

- We've briefed several people in the German Green Party (Bündnis 90/Die Grünen) about the core ideas of EA and climate at their request.

- We've had a few calls with people exploring effective climate careers to learn what kind of advice is helpful. These calls can inform our future resources and career guide.

How you can help

The biggest barrier to increasing the reach and impact of our work is currently a lack of funding to devote time to the initiative (see above). In addition to funding, you can help grow the project in the following ways:

- We’re always open to feedback and ideas for increasing the effectiveness of our work and improving our call for funding. (Neither of us has experience with fundraising, so we're figuring this out as we go.)

- We’re looking for experts who can help us pro bono in fields where we lack expertise ourselves. This includes:

  - Legal assistance for registering as a non-profit (‘stichting’) in the Netherlands and getting charity status (‘ANBI-status’)

  - Fundraising for current and future funding

- If you enjoy making content and have expertise in climate change and effective altruism, we’d love to hear from you!

  - Submit content for our newsletter

- Subscribe to our newsletter and follow us on LinkedIn

- If you’re an environmental professional or otherwise have a strong interest in climate change, join the new LinkedIn group and share your ideas with the community!

- Spread the word to your connections who are interested in climate change or other environmental topics!

Links

- Our website (work in progress)

- Our newsletter on Substack

- Our LinkedIn

- The group page on the EA forum

You can contact us by leaving a comment here, or by sending us an email. We welcome your constructive input!

Great initiative! I have personally met many people interested in creating a positive impact in the environmental field, but who are disappointed by the limited options EA provides regarding this. Good luck :)

Thank you Trym!

This is spot on. Having been active for many years following, observing, and participating in climate change discussions, I see that too many resources are directed towards, and too much emphasis is placed on, individual actions like reducing plastic use or switching to reusable products, which do not address the systemic changes needed to combat the climate crisis.

Unverified carbon offset projects often fail to provide measurable or lasting environmental benefits. This misallocation of resources detracts from more impactful solutions, such as investing in renewable energy infrastructure, protecting vulnerable ecosystems, and advocating for robust climate policies that hold industries accountable. That's what I believe.