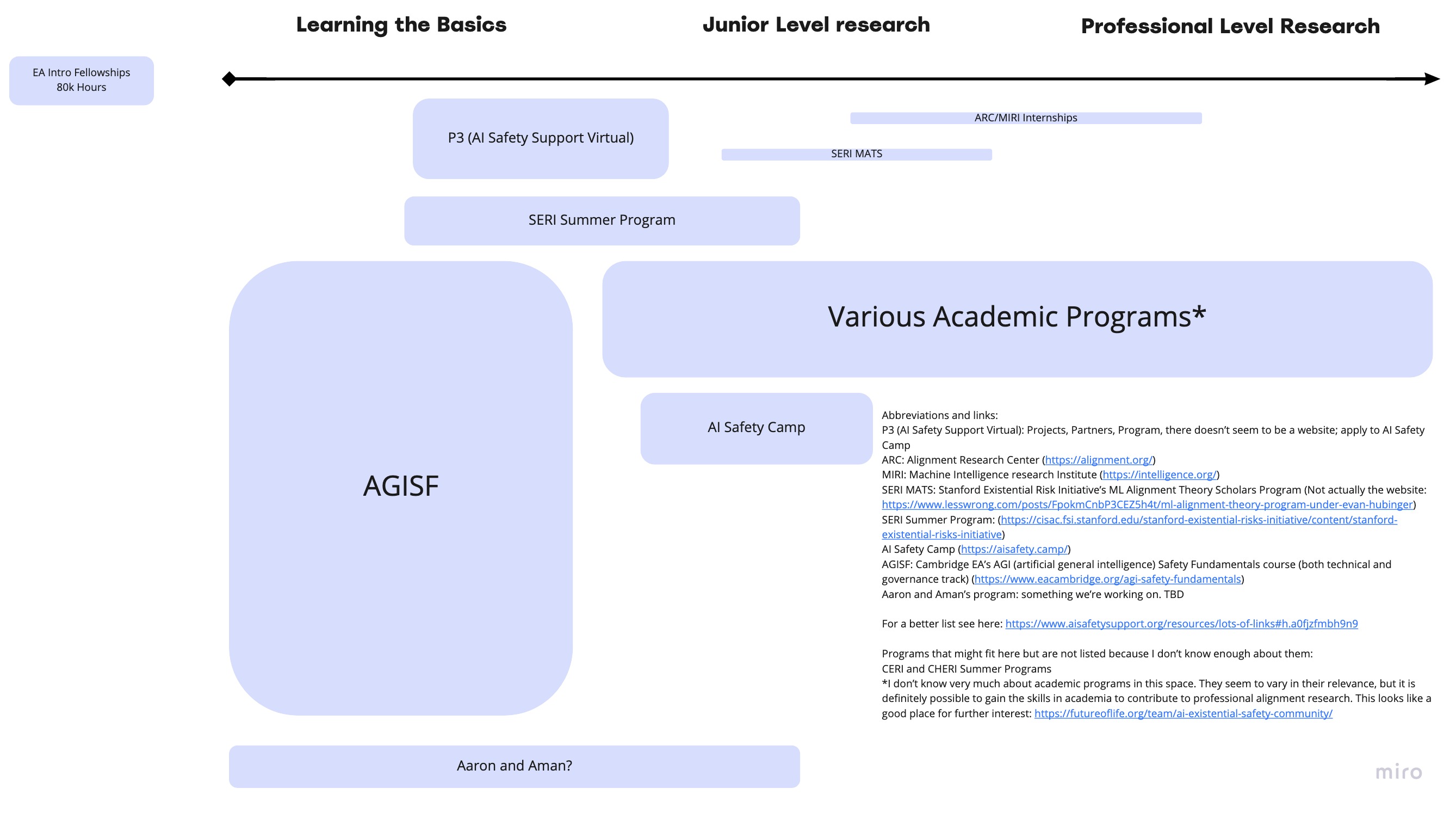

Aman Patel and I have been thinking a lot about the AI Safety Pipeline. In the course of doing so we haven't come across any visualizations. Here's a second draft of a visualized pipeline of what organizations/programs are relevant to moving through the pipeline (mostly in terms of theoretical approaches). It is incomplete. There are orgs that are missing because we don't know enough about them (e.g., ERIs). The existing orgs are probably not exactly where they should be, and there's tons of variation within participants of each program; there should be really long tails. The pipeline moves left to right and we have placed organizations generally where they seem to fit in on the pipeline. The height of each program represents its capacity or number of participants.

This is a super rough draft, but it might be useful for some people to see the visualization and recognize where their beliefs/knowledge differs from ours.

We support somebody else putting more than an hour into this project and making a nicer/more accurate visual.

Current version as of 4/11:

It's misleading to say you're mapping the AIS pipeline but then to illustrate AIS orgs alone. Most AIS researchers will let their junior level research be non-AIS research with a professor at their school. Professional level research will usually be a research masters or PhD (or sometimes industry). So you should draw these.

Similar messaging must be widespread, because many of the undergraduates that I meet at EAGs get the impression that they are highly likely to have to drop out of school to do AIS. Of course, that can be a solid option if you have an AIS job lined up, or in some number of other cases (the exact boundaries of which are subject to ongoing debate).

Bottom line - omitting academic pathways here and elsewhere will perpetuate a message that is descriptively inaccurate, and pushes people in an unorthodox direction whose usefulness is debatable.

Thank you for your comment. Personally, I'm not too bullish on academia, but you make good points as to why it should be included. I've updated the graphic, and it now says: "*I don’t know very much about academic programs in this space. They seem to vary in their relevance, but it is definitely possible to gain the skills in academia to contribute to professional alignment research. This looks like a good place for further interest: https://futureoflife.org/team/ai-existential-safety-community/"

If you have other ideas you would like expressed in the graphic I am happy to include them!

This is potentially useful, but I think it would be more helpful if you explained what the acronyms refer to (thinking mainly about AGISF, which I haven't heard of, and SERI MATS, which I know about but others may not) and linked to the webpages that represent the organizations so people know where to get more information.

Thanks for the reminder of this! Will update. Some don't have websites but I'll link what I can find.

Thanks for making this!

A detail: I wonder if it'd make sense to nudge the SERI summer program bar partway to "junior level research" (since most participants' time is spent on original research)? My (less informed) impression is that CERI and CHERI are also around there.

Thanks! Nudged. I'm not going to include CERI and CHERI at the moment because I don't know much about them. I'll make a note of them.

Thanks for this!

Does "Learning the Basics" specifically mean learning AI Safety basics, or does this also include foundational AI/ML (in general, not just safety) learning? I'm wondering because I'm curious if you mean that the things under "Learning the Basics" could be done with little/no background in ML.

Good question. I think "Learning the Basics" is specific to AI Safety basics and does not require a strong background in AI/ML. My sense is that the AI Safety basics and ML are slightly independent. The ML side of things simply isn't pictured here. For example, the MLAB (Machine Learning for Alignment Bootcamp) program, which ran a few months ago, focused on taking people with good software engineering skills and bringing them up to speed on ML. As far as I can tell, the focus was not on alignment specifically, but it was intended for people likely to work in alignment. I think the story of what's happening is way more complicated than a one-dimensional (plus org size) chart, and the skills needed might be an intersection of software engineering, ML, and AI Safety basics.