By: Priscilla Campos Díaz

Acknowledgement Note:

This project was carried out as part of the “Carreras con Impacto” program during the 14-week mentorship phase. You can find more information about the program in this entry.

Motivation

This project aims to identify the different biases generated by current AI-based recruitment algorithms and to understand the factors that cause them, subsequently evaluating the effectiveness of current regulations in the United States and the European Union. The goal is to propose specific recommendations for Latin American countries that lack a robust legal framework in this area.

As a student of Information Technologies with a deep interest in law and technology regulation, this project allows me to explore the intersection between current technological innovation and its legal implications. My interest in law stems from the urgent need to address modern legal regulations from a technical perspective, complemented by legal knowledge. This approach is essential to allow the development of policies and regulatory frameworks focused on technological advancement, ensuring the ethical and responsible use of tools like AI in sensitive processes such as recruitment.

This project seeks to highlight the importance of involving technical experts in the formulation of technical regulations. The participation of experts in artificial intelligence and other technological disciplines is crucial to ensure that regulations not only mitigate the risks associated with the use of AI algorithms but also promote innovation responsibly and effectively. By proposing regulatory strategies based on successful international practices, we hope to contribute to the development of policies that mitigate biases generated by the use of AI algorithms in recruitment and ensure equal opportunities for all candidates, regardless of gender, race, age, or other personal characteristics.

Thus, the research aims to generate an impact both technically and legally, promoting a fairer and more equitable use of AI in Latin America, and emphasizing the importance of a multidisciplinary approach that integrates both technical and regulatory perspectives in the design of effective legal solutions.

Background

In today’s digital era, Artificial Intelligence (AI) has revolutionized how companies approach the search and selection of talent to integrate into their work teams. In a constantly evolving world, where saving time and improving efficiency are crucial, AI has become an essential tool in recruitment (Bello, 2023).

However, the implementation of these algorithms has raised significant concerns, particularly regarding fairness, privacy, and non-discrimination in recruitment. The need for specific regulations for these technologies is increasingly evident, especially considering the inherent risks they present, such as the possibility of perpetuating historical biases, invading candidates’ privacy, or making decisions based on unfair criteria. AI systems are often trained using existing datasets, which may reflect historical biases. For example, recruitment algorithms may target audiences that are 85% women for cashier positions in supermarkets or 75% African-American for taxi company jobs.

Given AI's vulnerability to bias, its applications in talent management could generate outcomes that violate ethical codes and organizational values, ultimately harming employee engagement, morale, and productivity (Kim-Schmid, 2022).

One of the main factors contributing to bias in AI recruitment is the use of biased training data. If the data used to train an AI model is not representative of the population's diversity or contains inherent prejudices, the algorithm will learn and replicate these inequalities (Martín, 2024). For instance, if a dataset used to train a recruitment selection algorithm consists primarily of resumes from men, the algorithm may develop a preference for male candidates, even when women are equally or more qualified for the job. This type of bias not only perpetuates gender inequality but can also negatively impact other traditionally vulnerable groups, such as Black or Latinx individuals, older people, and neurodivergent individuals.

In addition to training and learning from data, other stages of algorithm development can foster biases and significantly influence the model’s accuracy, such as considering and weighing different variables during design or failing to properly test diverse data in the validation phase (Hao, 2019).

Undoubtedly, the most emblematic case of how the inadequate use of AI in recruitment algorithms can perpetuate bias was Amazon's 2018 incident. The company developed an AI system to automate its recruitment process; however, during internal testing, it was discovered that the system systematically discriminated against women, favoring male candidates for technical positions.

A similar case arose from a class-action lawsuit in Illinois against the software company HireVue. The lawsuit alleged that HireVue used facial recognition tools during job interviews without obtaining informed consent from candidates, violating Illinois’ Biometric Information Privacy Act, resulting in Case No. 2022CH00719 in 2022. The existence of this regulation, focused on AI video interviews, allowed measures to be taken against the company and upheld the plaintiffs’ right to authorize, or not, the use of their data in recruitment processes (Ben-Asher, 2024).

Despite growing concerns over the risks associated with using AI algorithms and the recent development of regulatory strategies in leading regions like the United States and the European Union, technology is advancing at a faster pace than the law. Additionally, the lack of specific and comprehensive regulations to ensure the proper implementation of AI in developing countries, like those in Latin America, creates a legal vacuum that could perpetuate injustices and large-scale discrimination.

Moreover, the growing popularity and use of AI in both general applications and recruitment processes in the Latin American and Costa Rican industries are undeniable. As of November 2022, 83% of Costa Rican companies were already using AI tools (Murillo, 2022). It is therefore crucial to ensure the ethical implementation of AI in these countries.

This project seeks to identify the biases that AI-based recruitment algorithms can generate, determine the factors that cause them, and analyze whether the existing legislation in the United States and the European Union effectively addresses the risks associated with these biases. Based on the analysis of current laws, the goal is to propose a series of recommendations for the effective implementation of regulations that protect candidates' rights and ensure fairness in selection processes in Latin American countries that lack such legislation.

Objectives

General Objective

Analyze and compare the existing regulatory frameworks in the United States and the European Union on the use and development of AI in recruitment processes, with the goal of promoting the proper adoption of such regulations in Latin American countries that lack adequate legal frameworks.

Specific Objectives

- To analyze and understand the specific biases generated by various AI-driven recruitment algorithms and their impact on selection processes.

- To compare the different existing regulations in the European Union (EU) and the United States (US), highlighting their legal and regulatory approaches as international benchmarks.

- To propose recommendations for the effective implementation of regulations on the use of artificial intelligence in recruitment in Latin American countries that currently lack specific legal frameworks.

Methodology

An exhaustive literature review was conducted to identify current AI-based recruitment algorithms and the inherent biases they present, as well as the technical factors that contribute to these biases. The process is detailed below:

1.1. Recruitment Algorithms

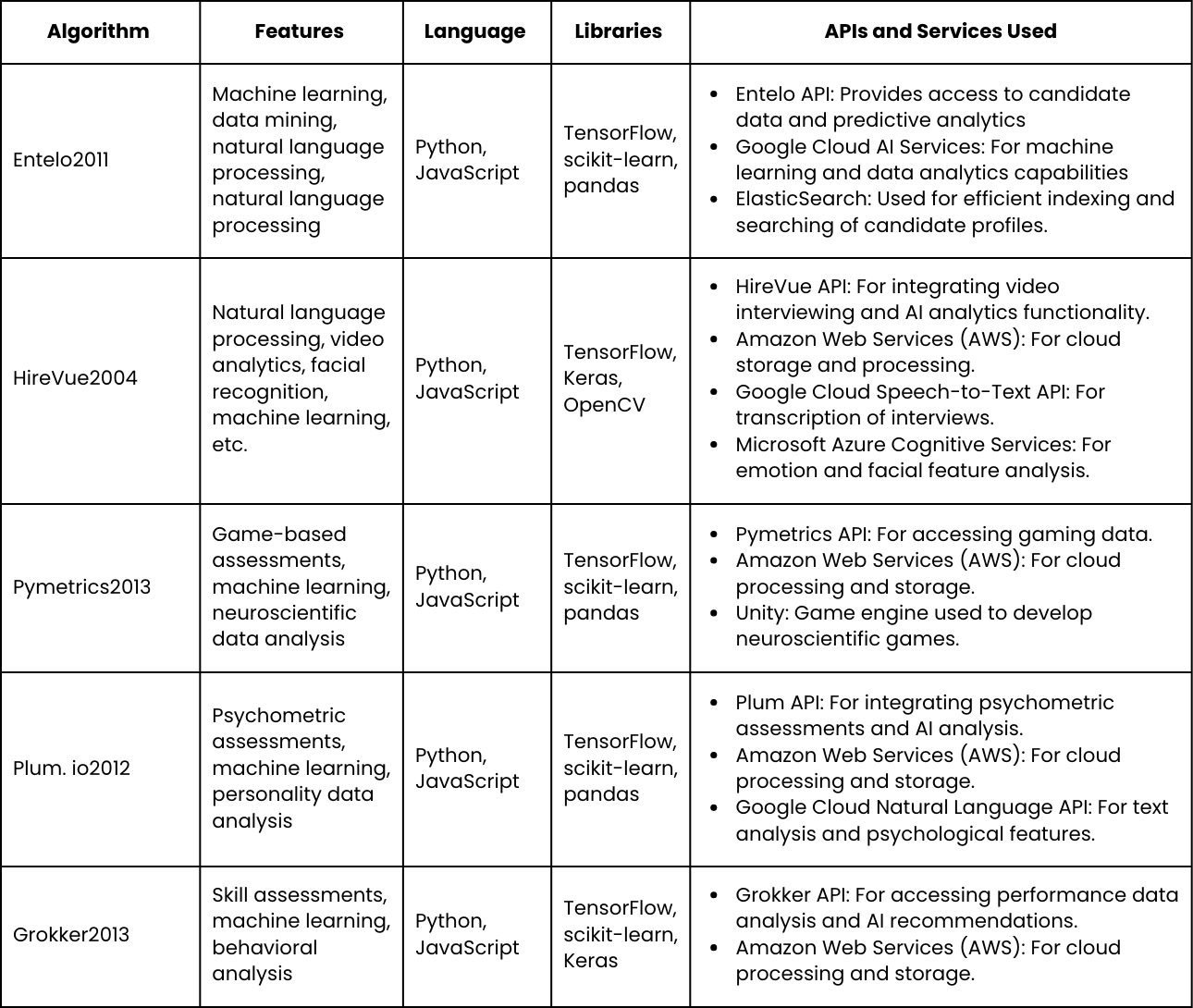

- Selection of Algorithms: A selection of AI-driven recruitment algorithms was made, taking into account their relevance in the industry and specific features, such as the use of video interviews, psychometric assessments, resume evaluations, and analysis on recruitment platforms like LinkedIn. For a detailed breakdown of the technical factors analyzed, refer to Annex 1.

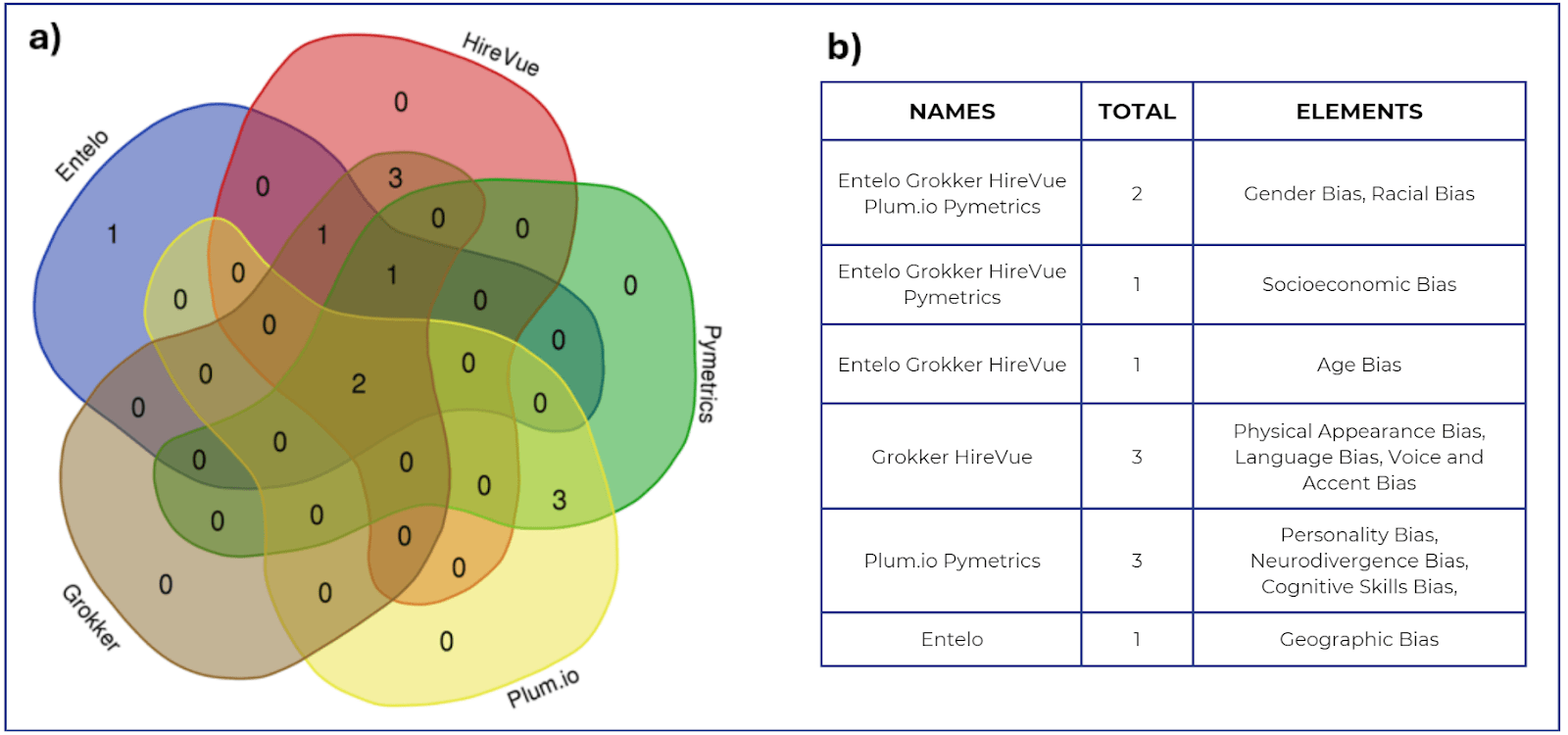

- Identification of Biases: Different biases potentially associated with the selected algorithms were listed and described based on their technical characteristics and specific functionalities. Additionally, common bias patterns among the algorithms were identified using a Venn diagram generated with the "Bioinformatics & Evolutionary Genomics" online tool.

1.2. Technical Factors

- Origin of Biases: The technical factors contributing to the emergence of biases in recruitment AI were identified, including aspects like the quality of training data, design decisions in the algorithm, and limitations in the interpretation of complex data.

- Prevention and Management of Biases: Strategies were analyzed to prevent and manage these biases during various stages of AI algorithm development, emphasizing the importance of training with unbiased data, data management, and the regular implementation of audits.

1.3. Existing Regulations in the US and EU for AI in Recruitment Algorithms

- Analysis of the EU Regulatory Framework: The European Union's regulatory framework for AI (AI Act) was examined, with a particular focus on the "High-Risk AI" section, which includes the use of AI in recruitment algorithms. This analysis included a detailed evaluation of relevant articles, the obligations imposed on developers and users, and the penalties for non-compliance.

- Analysis of US Regulations: Since the US operates under a federal system where power is divided between the central government and individual states, each state has considerable autonomy and can create and enforce its own laws, provided they do not conflict with the federal Constitution (Alvarado, 2023). Therefore, the regulatory frameworks of individual jurisdictions were analyzed, evaluating their focus, scope, and differences in implementation. The jurisdictions reviewed, due to their specific regulations for AI in recruitment, are California, the District of Columbia, Illinois, Massachusetts, New Jersey, New York, and Vermont.

- Comparison of Regulatory Frameworks: The regulatory frameworks of the US and the European Union were compared to assess both their effectiveness and their medium- and long-term validity. This comparison covers regulations up to July 31, 2024. Data analysis tools were used to generate graphs that facilitate the comparison between the two entities.

Analysis of Specific Bias Development in AI-Based Recruitment Algorithms

From the review of various AI-based recruitment algorithms reported in the literature, several biases were identified that are directly related to the technical functionalities of these algorithms (Table 1). The intersection of different biases among the analyzed algorithms is visually represented in a Venn diagram in Figure 1.

Table 1. Biases Associated with the Use of AI in Recruitment Algorithms

Lists the recruitment algorithms reported in the literature along with the different biases they may reproduce.

| Algorithm | Bias Type |
| --- | --- |
| Entelo: Analyzes data such as work experience, technical skills, and blog posts, among others, from resumes, LinkedIn profiles, and GitHub. | Gender Bias: The algorithm may prioritize keywords and job descriptions historically associated with male-dominated fields, disadvantaging women in technical or leadership roles. |
|  | Racial Bias: When searching for candidates on professional networks, the algorithm may favor white individuals in certain industries due to their prevalence, excluding Black or Latinx candidates. |
|  | Age Bias: The algorithm may automatically discard older candidates by prioritizing recent qualifications or experiences, negatively impacting this demographic. |
|  | Geographic Bias: By filtering based on location, the algorithm may exclude candidates from minority or economically disadvantaged communities. |
| HireVue: Analyzes video interviews, evaluating facial expressions, voice tones, and responses to select the most suitable candidates. | Gender Bias: Video interviews may assess women more critically if facial expression and voice tone analysis is biased toward typically male patterns. |
|  | Racial Bias: Facial and voice analysis may penalize Black or Latinx individuals if their characteristics do not match the standards used in training, common in algorithms trained mostly on data from white individuals. |
|  | Physical Appearance Bias: HireVue may favor candidates who align with Western beauty stereotypes, disadvantaging people from ethnic minorities. |
|  | Voice and Accent Bias: Candidates with Latinx or African American accents may be penalized if the algorithm favors standard speech patterns. |
|  | Age Bias: The algorithm may discriminate against older individuals if visual or vocal analysis associates certain characteristics with negative age perceptions. |
|  | Language Bias: Candidates whose first language is not the interview language may be unfairly evaluated due to linguistic errors, impacting vulnerable groups like immigrants or international applicants. |
|  | Socioeconomic Bias: Video quality, which may depend on the candidate's financial resources, can negatively influence the evaluation if it's considered an indirect indicator of professionalism. |
| Pymetrics: Uses psychometric tests to measure cognitive and emotional skills, comparing the results with profiles of success in specific roles. | Gender Bias: The tests may be designed with a focus on skills traditionally associated with men, disadvantaging women, especially in technical roles. |
|  | Racial Bias: The skills assessed may reflect a cultural bias not representative of the experiences and knowledge of Black or Latinx individuals. |
|  | Personality Bias: If the tests favor extroverted or assertive personalities, the algorithm may discriminate against individuals who do not fit these stereotypes. |
|  | Neurodivergence Bias: Standard evaluations may not consider the various ways neurodivergent individuals process information, putting them at a disadvantage compared to neurotypical individuals. |
|  | Cognitive Skills Bias: By focusing on specific cognitive skills, the evaluations may exclude candidates with unconventional thinking styles. |
| Plum.io: Evaluates the potential and competencies of candidates through personality and cognitive skills tests, focusing on their long-term compatibility. | Gender Bias: The evaluations may favor skills historically associated with men, which could disadvantage women in sectors like technology, engineering, and science, where technical skills are often linked to masculine stereotypes. |
|  | Racial Bias: The evaluations may be designed in a way that favors skills more accessible to white individuals, affecting Black or Latinx individuals. |
|  | Personality Bias: By prioritizing certain personality profiles, the algorithm may exclude groups that do not fit conventional success stereotypes. |
|  | Neurodivergence Bias: The tests may disadvantage neurodivergent individuals by not considering the diversity in how skills and competencies are manifested. |
|  | Cognitive Skills Bias: Evaluations that do not recognize different forms of intelligence may disadvantage candidates whose skills do not align with conventional cognitive models. |
| Grokker: Analyzes video interviews and other multimedia content, evaluating communication and presentation skills to select suitable candidates. | Gender Bias: The algorithm may favor men if video analysis prioritizes traits or behaviors associated with masculinity, limiting opportunities for women. |
|  | Racial Bias: By analyzing facial and vocal characteristics, the algorithm may discriminate against Black or Latinx individuals if the system is not designed to recognize and value racial diversity. |
|  | Physical Appearance Bias: Facial recognition technology may lean towards Western beauty standards, negatively affecting people from ethnic minorities. |
|  | Voice and Accent Bias: Candidates with foreign accents may be disadvantaged if the system prefers accents more common among dominant groups. |
|  | Age Bias: Visual analysis may identify and discriminate against candidates based on characteristics associated with age, negatively impacting older applicants. |
|  | Language Bias: Candidates whose native language is not the primary language may be negatively evaluated for inadequate handling of linguistic diversity. |
|  | Socioeconomic Bias: The quality of video and the visible environment during the interview may reflect the candidate’s socioeconomic status, which could influence the evaluation if these factors are not adequately neutralized. |

Table 1 shows that video-based algorithms, such as HireVue and Grokker, tend to exhibit a greater number of biases, particularly in aspects like physical appearance, tone of voice, and accents, due to the inherent subjectivity in visual and vocal analysis. In contrast, algorithms like Entelo, which focus on structured data such as work experience, do not have biases related to appearance, but continue to face issues linked to gender and age by prioritizing certain types of experiences and keywords.

This highlights how the methods used to evaluate candidates directly influence the types of biases that may arise. Depending on whether the algorithm is based on structured data, video interviews, or psychometric tests, the biases may vary and affect aspects such as gender, race, age, or physical appearance.

Figure 1. Intersection of Biases in AI Recruitment Algorithms. Graphical representation of the shared biases among recruitment algorithms using AI (HireVue, Entelo, Grokker, Plum.io, and Pymetrics). In part a), the generated Venn diagram is shown with an independent color for each algorithm. The description of the number and type of identified biases is detailed in part b).

As shown in Figure 1, gender and racial bias are the primary points of convergence: both appear in all five analyzed algorithms. This suggests that any of these AI-based recruitment tools could reproduce discriminatory patterns against women or individuals from racial minorities. Age bias is also well represented, appearing in three of the five algorithms in Table 1 (Entelo, HireVue, and Grokker).

In contrast, physical appearance, voice and accent, language, and socioeconomic biases are less common, occurring only in the algorithms that use video interviews (HireVue and Grokker). Similarly, biases related to personality, neurodivergence, and cognitive abilities are only reflected in the algorithms that employ psychometric assessments and/or cognitive games (Pymetrics and Plum.io).

Finally, Entelo, with its explicit function of filtering by location, is the only recruitment algorithm analyzed that could exhibit discriminatory patterns based on the geographic location of the job applicant.
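The intersections discussed above can be recomputed directly from Table 1. The following is a minimal sketch, not the tool used to produce Figure 1: the bias sets are transcribed from Table 1, and the function names are illustrative.

```python
# Bias types per algorithm, transcribed from Table 1.
BIASES = {
    "Entelo": {"gender", "racial", "age", "geographic"},
    "HireVue": {"gender", "racial", "physical appearance", "voice and accent",
                "age", "language", "socioeconomic"},
    "Pymetrics": {"gender", "racial", "personality", "neurodivergence",
                  "cognitive skills"},
    "Plum.io": {"gender", "racial", "personality", "neurodivergence",
                "cognitive skills"},
    "Grokker": {"gender", "racial", "physical appearance", "voice and accent",
                "age", "language", "socioeconomic"},
}


def shared_by_all(biases):
    """Biases present in every algorithm (the center of the Venn diagram)."""
    return set.intersection(*biases.values())


def algorithms_per_bias(biases):
    """Map each bias to the sorted list of algorithms that may exhibit it."""
    result = {}
    for algorithm, bias_set in biases.items():
        for bias in bias_set:
            result.setdefault(bias, []).append(algorithm)
    return {bias: sorted(names) for bias, names in result.items()}


if __name__ == "__main__":
    print("Shared by all:", sorted(shared_by_all(BIASES)))
    per_bias = algorithms_per_bias(BIASES)
    # Most widely shared biases first, then alphabetical.
    for bias, names in sorted(per_bias.items(), key=lambda kv: (-len(kv[1]), kv[0])):
        print(f"{bias}: {len(names)} of {len(BIASES)} ({', '.join(names)})")
```

Keeping the table as plain sets makes it easy to add another algorithm later and rerun the same comparison.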

Comparison of Regulatory Frameworks for AI in Recruitment in the US and the EU

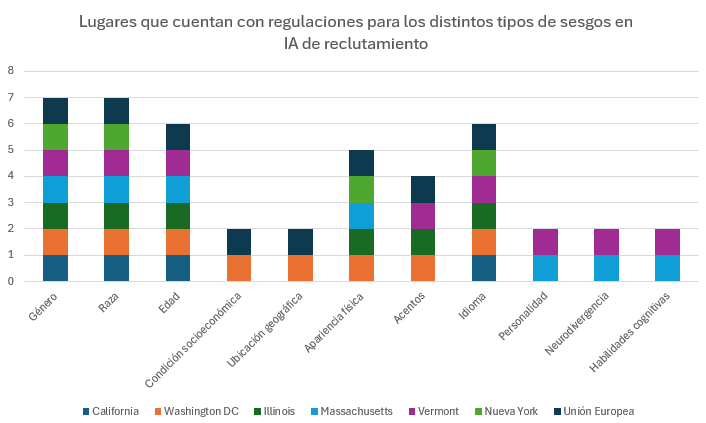

After carefully analyzing the specific regulatory frameworks for AI in recruitment in California, Washington DC, Illinois, Massachusetts, Vermont, New York, and the European Union, the biases explicitly addressed by these regulations were compared.

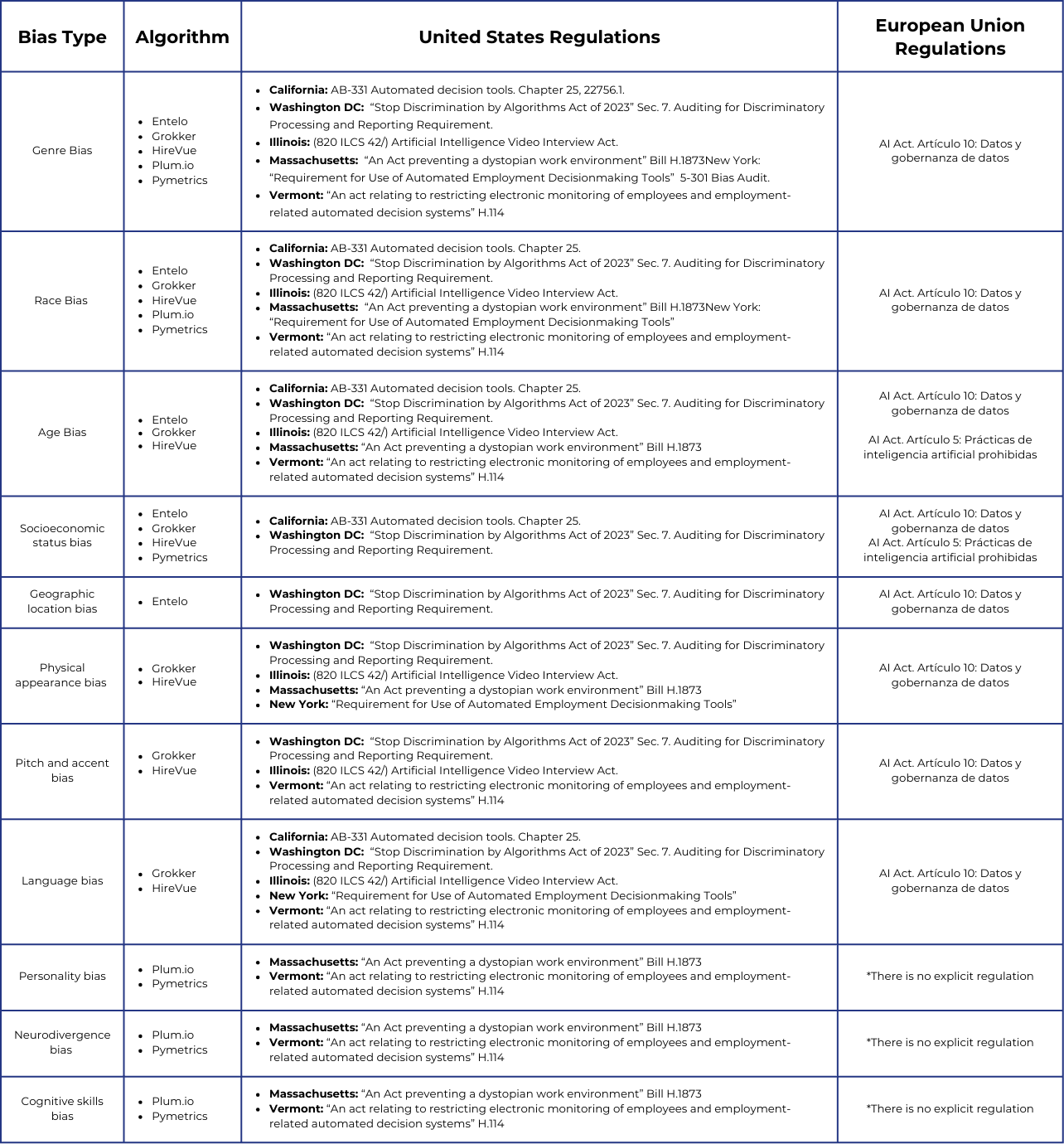

Table 2. Regions with regulatory frameworks related to the use of AI for recruitment by bias type.

| Bias type | California | Washington DC | Illinois | Massachusetts | Vermont | New York | European Union |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Gender | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| Race | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| Age | 1 | 1 | 1 | 1 | 1 | 0 | 1 |
| Socioeconomic condition | 0 | 1 | 0 | 0 | 0 | 0 | 1 |
| Geographic location | 0 | 1 | 0 | 0 | 0 | 0 | 1 |
| Physical appearance | 0 | 1 | 1 | 1 | 0 | 1 | 1 |
| Accent | 0 | 1 | 1 | 0 | 1 | 0 | 1 |
| Language | 1 | 1 | 1 | 0 | 1 | 1 | 1 |
| Personality | 0 | 0 | 0 | 1 | 1 | 0 | 0 |
| Neurodivergence | 0 | 0 | 0 | 1 | 1 | 0 | 0 |
| Cognitive skills | 0 | 0 | 0 | 1 | 1 | 0 | 0 |

The presence (1) or absence (0) of specific regulations related to the use of AI in recruitment processes for different regions in the United States and the European Union is represented, according to the type of bias they are directed at.

Table 2 shows that the regulatory frameworks of the European Union and Washington DC are the most comprehensive and inclusive concerning bias types, providing legal articles that explicitly address all the listed biases except for those related to personality, neurodivergence, and cognitive abilities. In contrast, the states of Massachusetts and Vermont do consider these latter biases within their regulatory frameworks focused on the use of AI, but they omit provisions applicable to other critical biases, such as those related to socioeconomic status, geographic location, accents, and language in the case of Massachusetts, and socioeconomic status, geographic location, and physical appearance in the case of Vermont.

On the other hand, California and New York rank as the states with regulations that cover the fewest biases analyzed. In California's case, this may be due to the current regulations addressing biases from a general perspective, primarily focusing on the more "common" or frequent biases in AI models, such as those related to race, gender, and age. New York also takes a general approach to its regulations on the use of AI in recruitment processes; however, this state uses a mathematical formula to measure and control the proportion of individuals hired from privileged groups compared to individuals from vulnerable groups who may be victims of biases such as gender, race, language, or physical appearance.
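The text does not name New York's formula. One measure of this kind, used in bias audits of automated hiring tools, is the impact ratio: each group's selection rate divided by the selection rate of the most-selected group. The sketch below assumes that measure. The group names and counts are invented for illustration, and the 0.8 threshold is the conventional "four-fifths" rule of thumb, not a value taken from the source.

```python
def selection_rates(selected, applicants):
    """Selection rate per group: number selected / number of applicants."""
    rates = {}
    for group, total in applicants.items():
        if total <= 0:
            raise ValueError(f"group {group!r} has no applicants")
        rates[group] = selected.get(group, 0) / total
    return rates


def impact_ratios(selected, applicants):
    """Each group's selection rate relative to the most-selected group."""
    rates = selection_rates(selected, applicants)
    top = max(rates.values())
    if top == 0:
        raise ValueError("no candidates were selected in any group")
    return {group: rate / top for group, rate in rates.items()}


def flagged_groups(ratios, threshold=0.8):
    """Groups whose impact ratio falls below the (assumed) threshold."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical audit counts, for illustration only.
    applicants = {"group_a": 200, "group_b": 150}
    selected = {"group_a": 60, "group_b": 27}
    ratios = impact_ratios(selected, applicants)
    for group, ratio in ratios.items():
        print(f"{group}: impact ratio {ratio:.2f}")
    print("Below threshold:", flagged_groups(ratios))
```

A ratio well below 1 indicates that a group is selected at a noticeably lower rate than the most-selected group. It is a signal for closer review, not proof of discrimination on its own.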

Figure 2. Distribution of regulatory frameworks related to biases in the use of AI for recruitment by region. A bar chart representing the various jurisdictions in the United States and the European Union that have regulations focused on the use of AI in recruitment processes by bias type. The different biases are shown on the X-axis, while the regions with regulations for each bias are represented on the Y-axis. The analyzed regions are distinguished by different colors (blue for California, orange for Washington, dark green for Illinois, light blue for Massachusetts, purple for Vermont, light green for New York, and dark blue for the European Union).

Figure 2 illustrates the bias categories most addressed by the different regulatory frameworks, while Table 3 compares in detail the individual laws referring to the various biases analyzed within each regulatory framework. Gender and racial biases are the most regulated, with legislation in all the frameworks studied. Age biases, meanwhile, are addressed in 6 out of the 7 studied locations, with New York being the only one that does not explicitly consider it.

From a legislative standpoint, there is significant vulnerability due to the lack of regulation in certain types of biases. The absence of specific regulations for biases related to personality, neurodivergence, cognitive abilities, socioeconomic status, and geographic location in 5 out of the 7 regulatory frameworks analyzed exposes these vulnerable groups to potential discrimination in automated recruitment processes.
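The coverage counts quoted above can be checked against Table 2. Here is a minimal sketch that transcribes the table as a presence/absence matrix and tallies it by bias and by jurisdiction. The variable and function names are illustrative.

```python
# Table 2 transcribed as a presence (1) / absence (0) matrix.
# Column order follows the table header.
JURISDICTIONS = [
    "California", "Washington DC", "Illinois", "Massachusetts",
    "Vermont", "New York", "European Union",
]

COVERAGE = {
    "Gender":                  [1, 1, 1, 1, 1, 1, 1],
    "Race":                    [1, 1, 1, 1, 1, 1, 1],
    "Age":                     [1, 1, 1, 1, 1, 0, 1],
    "Socioeconomic condition": [0, 1, 0, 0, 0, 0, 1],
    "Geographic location":     [0, 1, 0, 0, 0, 0, 1],
    "Physical appearance":     [0, 1, 1, 1, 0, 1, 1],
    "Accent":                  [0, 1, 1, 0, 1, 0, 1],
    "Language":                [1, 1, 1, 0, 1, 1, 1],
    "Personality":             [0, 0, 0, 1, 1, 0, 0],
    "Neurodivergence":         [0, 0, 0, 1, 1, 0, 0],
    "Cognitive skills":        [0, 0, 0, 1, 1, 0, 0],
}


def frameworks_per_bias(coverage):
    """Number of jurisdictions whose framework addresses each bias."""
    return {bias: sum(row) for bias, row in coverage.items()}


def biases_per_jurisdiction(coverage, jurisdictions):
    """Number of bias types addressed by each jurisdiction."""
    return {
        name: sum(row[i] for row in coverage.values())
        for i, name in enumerate(jurisdictions)
    }


def gaps(coverage, jurisdictions, name):
    """Bias types that the named jurisdiction does not address."""
    i = jurisdictions.index(name)
    return [bias for bias, row in coverage.items() if row[i] == 0]


if __name__ == "__main__":
    for bias, count in frameworks_per_bias(COVERAGE).items():
        print(f"{bias}: {count} of {len(JURISDICTIONS)} frameworks")
    for name, count in biases_per_jurisdiction(COVERAGE, JURISDICTIONS).items():
        print(f"{name}: {count} of {len(COVERAGE)} bias types")
```

Calling `gaps(COVERAGE, JURISDICTIONS, "New York")`, for example, lists the bias types with no explicit provision in that framework.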

Table 3. Regions with regulatory frameworks related to the use of AI for recruitment by bias type.

This table shows the various regulations identified in the United States and the European Union that address each type of bias, highlighting the name of the law and the specific article. The different recruitment algorithms that can reproduce these biases are also listed.

It is worth noting that some states not included in the comparison above also have specific regulations regarding the use of artificial intelligence. Such is the case of New Jersey, with its bills A. 3854 and A. 3911, aimed at regulating the use of AI tools by employers in hiring processes. Bill A. 3854 seeks to regulate the use of Automated Employment Decision Tools (AEDT), that is, systems governed by statistical theory, or systems whose parameters are defined by such systems, that automatically filter candidates for hiring or for any term, condition, or privilege of employment, with the aim of "minimizing workplace discrimination that may result from the use of these tools."

On the other hand, bill A. 3911 regulates the use of video interviews incorporating AI during the hiring process (O'Keefe & Richard, 2024). In this case, New Jersey's bills were not included in the analysis due to their rather general wording, making it difficult to categorize them in terms of the biases they address.

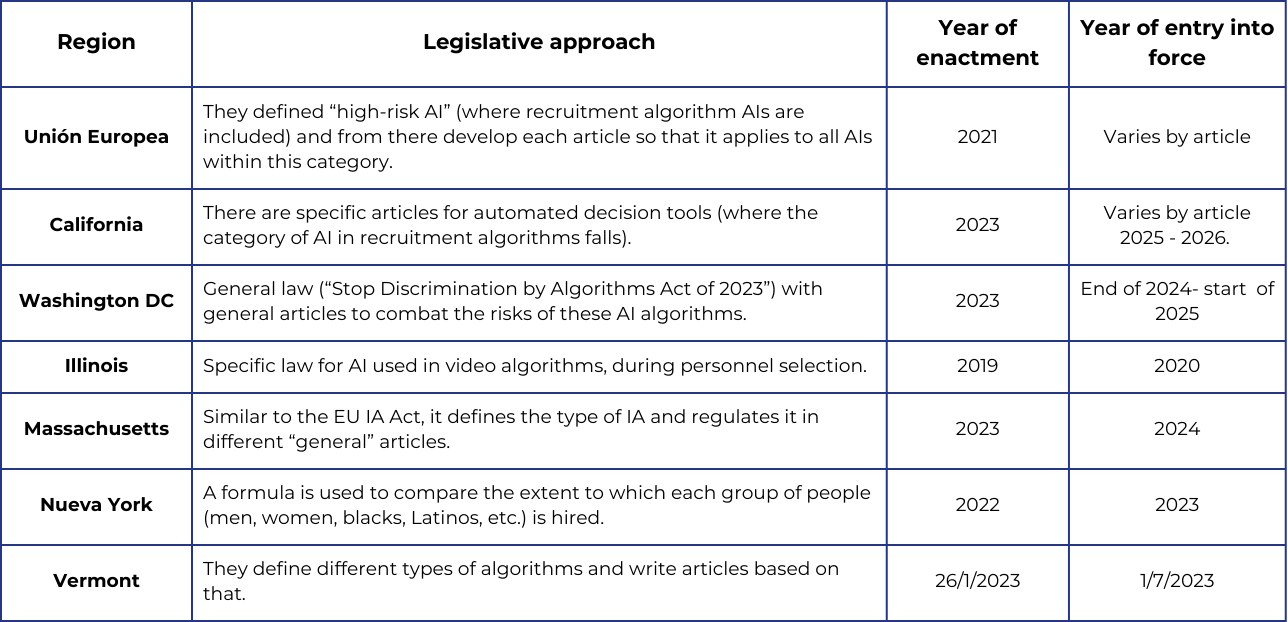

Another important point to highlight is the undeniable contrast between the state laws in the US and the AI Act of the European Union regarding the drafting of their respective regulatory frameworks focused on the use of AI (Table 4).

Table 4. Comparison of Legislative Approaches to AI Regulation by Region.

This table highlights the different legislative approaches to regulating the use of AI in the United States and the European Union, also emphasizing the years of enactment and entry into force of the various laws.

The regulation of artificial intelligence is in an early phase of implementation and evolution, with different regions adopting diverse approaches regarding the definition, scope, and enforcement of their laws, as summarized in Table 4. Analyzing these differences brings us closer to understanding the effectiveness of each legislative approach.

The European Union (EU) has established a solid framework with the "AI Act" under the concept of "High-Risk Artificial Intelligence," which encompasses all applications that, due to their impact on fundamental rights, safety, or the interests of individuals, require greater oversight and regulation. The "High-Risk Artificial Intelligence" systems mentioned in the AI Act include those used in critical areas such as education, justice, healthcare, essential infrastructure management, and personnel recruitment, among others. This comprehensive approach is currently positioned as the most efficient way to regulate these volatile technologies, given the constantly evolving nature of such algorithms.

In contrast, the approaches presented in the United States show a mix of specificity and generality. This can be clearly seen when comparing the laws in California and Massachusetts, with California regulating automated decision-making tools, including those related to recruitment, while Massachusetts adopts a general approach similar to that of the European Union but with greater flexibility to adapt to future developments. Likewise, the focus on general algorithmic discrimination in Washington DC contrasts with Illinois, which specifically regulates the use of AI in video algorithms for personnel selection.

While Illinois' specialized regulation offers some advantages, broad regulatory frameworks like the one presented in Washington DC can provide wider coverage capable of addressing a broader range of risks.

Despite these differences, it is too early to definitively assert the superiority of one approach over the other, as these frameworks have not been in force long enough to make comparisons based on data. As technology advances, it will be crucial to continue evaluating and contrasting these regulatory frameworks to ensure that they effectively address emerging risks and promote the ethical and safe use of artificial intelligence.

Recommendations for the Implementation of AI Regulations, Including AI in Recruitment Processes

Currently, very few regions in Latin America have developed or are in the process of developing regulations related to the responsible use of AI. In countries like Costa Rica, for example, where a bill was recently introduced to regulate the use of AI (Madrigal, 2023), adopting a regulatory framework based on broad and clear definitions could be crucial for establishing a solid foundation from which to develop future regulations, anticipating the challenges that AI might present without relying on constant legislative revisions.

Additionally, this approach is particularly relevant for most Latin American countries that have yet to make progress in creating legislative proposals to regulate artificial intelligence.

In these cases, AI has not been prioritized in legislative agendas due to a lack of specialized resources and technical expertise in the region. This has resulted in delays in the creation of regulatory frameworks, leaving a legal vacuum in the oversight of its use, including in critical areas like recruitment processes.

Alongside the creation of broad regulations in the region, the previous analysis of the various biases generated by AI in recruitment and the comparison between the jurisdictions of the United States and the European Union have led to the development of practical recommendations. These aim to promote effective and balanced regulation that encourages technological development in an ethical and sustainable manner in the region:

- Involve technical experts in the development of regulations: Traditionally, lawyers have been solely responsible for drafting regulations. However, when it comes to AI, it is important for engineers, data scientists, and other technical experts to actively participate in the creation of these policies. Their knowledge of algorithm design, functionality, and programming is essential to ensuring the accuracy, technical feasibility, and effectiveness of regulations in mitigating the risks associated with the use of AI.

- Avoid overly restrictive regulations that discourage investment in technology: While the use and development of AI pose risks, they also offer a significant competitive advantage to countries that implement them properly. A regulatory framework that is too restrictive could reduce investment in technology and innovation, which in turn would lead to a loss of economic opportunities for the country.

- Establish governmental councils dedicated to AI regulation: To ensure that regulations evolve at the same pace as technological developments, it is necessary to create advisory committees specializing in artificial intelligence. These bodies should be made up of multidisciplinary experts (lawyers, engineers, sociologists, among others) who monitor the implementation and enforcement of regulations, ensuring their continuous updating as new technological advancements emerge.

- Ensure that the datasets used to train AI algorithms are diverse and representative: As highlighted throughout this research, one of the main challenges of artificial intelligence in recruitment is the introduction of biases into decision-making processes. To mitigate this risk, it is important to establish standards that ensure that the datasets used to train recruitment algorithms are representative, diverse, and inclusive. This will enable AI to function equitably, reducing the possibility of discrimination against certain populations.

- Train human resources professionals on the ethical use of AI: Those responsible for the hiring process must receive specialized training on the risks and benefits of using artificial intelligence in their operations. It is important that they understand how algorithmic biases can influence hiring decisions and adhere to ethical standards when using these technologies.
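The recommendation on diverse and representative datasets could be supported by a simple check before training. Here is a minimal sketch, assuming each training record carries a demographic attribute and that a reference share per group is available. The attribute name, the reference shares, and the tolerance are all hypothetical.

```python
from collections import Counter


def group_shares(records, attribute):
    """Share of records per group for the given attribute."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def underrepresented(records, attribute, reference, tolerance=0.05):
    """Groups whose share falls short of the reference share by more than tolerance."""
    shares = group_shares(records, attribute)
    return sorted(
        group
        for group, expected in reference.items()
        if shares.get(group, 0.0) < expected - tolerance
    )


if __name__ == "__main__":
    # Hypothetical training set: 80 resumes from men, 20 from women.
    resumes = [{"gender": "man"}] * 80 + [{"gender": "woman"}] * 20
    # Hypothetical reference shares for the applicant population.
    reference = {"man": 0.5, "woman": 0.5}
    print(group_shares(resumes, "gender"))
    print("Underrepresented:", underrepresented(resumes, "gender", reference))
```

A check like this only detects imbalance in group counts. It does not detect prejudice encoded in the labels or features themselves, which is why the regular audits discussed earlier remain necessary.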

Perspectives

During the development of this research, various issues emerged that, although not explored in depth due to time limitations, represent crucial topics derived from the analysis and raise key questions deserving of detailed attention in future studies:

- It is important to carefully analyze the advantages and disadvantages of implementing AI-related regulations through soft or hard laws. Evaluating this will help determine the most appropriate method to achieve a balance between protecting human rights and fostering continuous innovation in the field of artificial intelligence.

- Soft laws can be implemented more quickly and offer greater flexibility, allowing them to adapt to constant technological changes and emerging needs. However, their lack of penalties may limit their effectiveness in cases of serious non-compliance.

- Hard laws, including criminal sanctions and other coercive measures, provide better control and enforcement, but their rigidity may hinder adaptation to rapid innovations in the AI field (Euroinnova Business School, n.d.).

- Investigating how artificial intelligence could perpetuate or even exacerbate the existing technological divide is essential to understanding its social implications. It is necessary to reflect on how these technologies might reinforce and deepen current socio-economic inequalities, along with the measures that should be taken to mitigate such an impact.

- Comparing the current penalties applied in countries that already have regulatory frameworks focused on AI usage is key to understanding the effectiveness of these regulations. This assessment should consider the severity of the penalties, their practical application, and their impact on the behavior of companies and institutions.

- It is essential to evaluate the impact of the political context on the creation and adaptation of laws related to AI management, considering how political systems and legislative priorities in each region affect the speed and effectiveness of implementing these regulations.

Limitations

Despite the efforts made during the development of this research, several limitations were encountered, reflecting the inherent challenges in the development and application of regulations, as well as personal constraints that impacted the depth of the analysis. Acknowledging these limitations is essential to identifying areas for improvement that could be strengthened in future research.

Among the main limitations are:

- Diversity in AI regulation across different jurisdictions: A significant challenge, as observed in Table 3, is the diversity of regulations regarding the use of AI in personnel recruitment across different U.S. states. These variations can create complications for multinational companies operating in multiple jurisdictions, as they must comply with a complex patchwork of regulations.

- Conflict of interest between governments and companies: The presence of conflicts of interest between governments and tech companies can negatively influence the formulation and enforcement of AI regulations. By exerting pressure on lawmakers, tech companies can push for favorable regulations that prioritize their economic interests over the protection of fundamental rights and social equity.

- Delays in the approval of legislative proposals: The lack of governmental interest in the regulation of AI is reflected in unjustified delays during the approval of legislative proposals. A clear example is Costa Rica, where the bill "Law for the regulation of artificial intelligence in Costa Rica", presented in May 2023, has yet to be approved.

- Lack of training and knowledge about AI among legal actors: The lack of specialized training and knowledge about AI among legislators and legal regulators undermines the effectiveness of regulations. This leads to the creation of laws that do not adequately address the complexities inherent in managing AI or that lack the necessary flexibility to adapt to future technological developments.

- Use of technical legal language: The complexity of the technical language used in the legal documents studied made it difficult to understand the literature for those outside the field, such as myself. This, in turn, caused delays in the development of the project.

- Recent and constantly evolving nature of AI regulations: The rapid and continuous evolution of regulations focused on AI use makes it difficult to conduct a stable and up-to-date long-term analysis and complicates the process of information gathering.

- Lack of information on bias generation in AI applied to recruitment: There is a notable lack of literature that directly addresses the development of biases during the use of AI in recruitment, particularly in Spanish.

- Difficulty accessing specific regulatory frameworks: During the collection of information regarding state regulations in the United States, some limitations were encountered in terms of the clarity and accessibility of these resources.

Conclusions

- AI algorithms applied to recruitment can generate biases that are not adequately regulated due to the rapid pace of technological evolution and the lack of technical knowledge among legislators.

- The biases in these algorithms result from multiple technical factors, such as the quality of the training data and the design decisions of the algorithm.

- The comparison between the regulatory frameworks of the EU and the United States reveals significant differences in their implementation and scope. The EU framework, with its categorization of "high-risk AI," stands out as a cohesive model adaptable to technological evolution.

- Most Latin American countries still lack a specific regulatory framework for AI management, including AI applied to recruitment. The adoption of adaptive approaches, reference to international best practices, and the inclusion of experts in the development of regulations are key steps toward building such frameworks.

- The role of technical experts is indispensable in the formulation of regulatory frameworks associated with AI management, as their participation ensures the technical feasibility of the policies created and their ability to address the specific challenges posed by this ever-evolving technology.

This project highlights the need to integrate the technical perspective in the creation of legal regulations for managing AI in recruitment processes. As an IT student with an interest in law, I recognize that collaboration between technical and legal knowledge is crucial to developing effective regulations that can mitigate generated biases and promote fairness. This approach ensures that technological advancement is managed in an ethical and fair manner.

References

Allen & Lehot. (2023, December 7). What to Expect in Evolving U.S. Regulation of Artificial Intelligence in 2024. Foley & Lardner LLP. https://www.foley.com/insights/publications/2023/12/us-regulation-artificial-intelligence-2024/

Bello (2023). La Inteligencia Artificial en los procesos de reclutamiento. H&CO. https://www.hco.com/es/insights/la-inteligencia-artificial-en-los-procesos-de-reclutamiento

Ben-Asher (2024). As Employers Rely on AI in the Workplace, Legislators and Plaintiffs Push Back. American Bar Association.

Dastin (2018). Amazon abandona un proyecto de IA para la contratación por su sesgo sexista. Reuters.

EE. UU.: La Comisión para la Igualdad de Oportunidades en el Empleo publicó una nueva orientación sobre el uso de la inteligencia artificial en los procedimientos de selección de personal. (2023, June 1). IOE-EMP. https://industrialrelationsnews.ioe-emp.org/es/industrial-relations-and-labour-law-june-2023/news/article/usa-equal-employment-opportunity-commission-issued-new-guidance-on-the-use-of-artificial-intelligence-in-employment-selection-procedures

Euroinnova Business School. (n.d.). Euroinnova Business School. https://www.euroinnova.com/blog/diferencias-soft-law-y-hard-law

Hao (2019, February 8). Cómo se produce el sesgo algorítmico y por qué es tan difícil detenerlo. MIT Technology Review. https://www.technologyreview.es/s/10924/como-se-produce-el-sesgo-algoritmico-y-por-que-es-tan-dificil-detenerlo

Kim-Schmid (2022, October 13). Where AI Can — and Can’t — Help Talent Management. Harvard Business Review. https://hbr.org/2022/10/where-ai-can-and-cant-help-talent-management

Madrigal (2023). Cómo Funciona el Gobierno de Estados Unidos: Todo lo que Necesitas Saber. Situam. https://situam.org.mx/usa/como-funciona-el-gobierno-de-estados-unidos.html

Madrigal (2023). Presentan proyecto de ley hecho por ChatGPT para regular la Inteligencia Artificial. Delfino.cr. https://delfino.cr/2023/05/presentan-proyecto-de-ley-hecho-por-chatgpt-para-regular-la-inteligencia-artificial

Martín (2024). Sesgos de la IA: significado, tipos, causas y cómo evitarlos. OpenHR. (openhr.cloud)

Murillo (2022). 83% de las empresas del país usan herramientas de inteligencia artificial. CRhoy.

La Nación. (2024, August 16). Nueva ley de Illinois regula el uso de inteligencia artificial en las búsquedas laborales: en qué consiste. LA NACION. https://www.lanacion.com.ar/estados-unidos/illinois/nueva-ley-de-illinois-regula-el-uso-de-inteligencia-artificial-en-las-busquedas-laborales-en-que-nid16082024/

O'Keefe & Richard (2024). New York and New Jersey legislatures introduce bills that seek to regulate artificial intelligence (“AI”) tools in employment. National Law Review. https://natlawreview.com/article/new-york-and-new-jersey-legislatures-introduce-bills-seek-regulate-artificial
