Mike

I am sitting in a large boardroom in Westminster. I am watching my friend and colleague Mike present his recent work to about a dozen colleagues. It’s a key piece of economic analysis of government spending and the audience includes several very senior civil servants. It’s February, and while it's freezing outside, this room feels like a furnace. I see my friend sweating.

“This is a 45% increase on 2016/17 spending,” he explains to the room while pointing at a table of figures being projected onto the wall.

“Is this adjusted for inflation?” our director interjects.

“Oh, yes!” my friend replies confidently.

“That can’t be right then,” the director says, “this contradicts the published statistics I read this morning.”

My friend looks confused.

“Um, just let me check that!” he says quickly.

There's a tense pause, as his eyes scan the screen of his laptop. After about 15 seconds he realises his mistake. He’s been referencing the draft version of his report, not the final one. He murmurs an apology, admitting to the room that he mistakenly presented outdated figures that had not been corrected for inflation. The room is silent, save for the soft shuffling of papers.

“Shall we reconvene when we have the correct figures?” asks the director, finally breaking the silence.

“Yes, of course,” my friend says sheepishly. “I’ll get on this straight away.”

My stomach is in knots watching this. I know what he is feeling. He is embarrassed, because, like me, he wants to meet the expectations of senior colleagues. He wants to be accepted and valued by the people around him. He feels shame because he feels like he has disappointed people in his life that he wanted to impress.

And I know what shame feels like. I feel what shame feels like. I know the heat and coldness on the skin. I know the racing thoughts, the imagined judgements of others; the desperate desire to hide. I don’t know what it’s like to be my friend. Not completely. He is a different person, with many different experiences and predispositions to me. I don’t really know what it’s like for him as he goes home every day. I don’t know much of the specific thoughts and feelings that fill every moment of his experience. But I know shame.

I remember being in a similar position only a few months prior. I was giving a talk on the new standards for HR data across the government. About 50 people had gathered to hear me explain the new system that my team had been working on. After my presentation, someone asked a difficult question. I don’t even remember what the question was, I just remember that I didn’t know how to respond. I paused, then paused some more. I was trying my best to look like I was thinking about it, but my mind was blank. The few thoughts I did have were revolving around what was going on behind those hundred eyes. Imagining the judgement, the frustration and the pity at my cluelessness. Eventually my manager’s manager walked over to the mic stand and answered the question diplomatically. He took over questions as I stood behind him, feeling small. Feeling like I wanted to hide.

In the boardroom with my friend, I feel the shame again then; not actual shame, just its vague shadow. As I look at him, I feel my face getting red and my stomach churning. I feel the pain in my heart as its beat quickens. “I don’t like feeling shame” I think wordlessly. “Shame feels bad”. “I don’t want my friend to feel shame either.”

Mouse

My housemate is calling from the kitchen of our flat. I walk in to see him holding the bin. He is gleeful.

“I caught it!” he says smiling and showing me the contents of the bin.

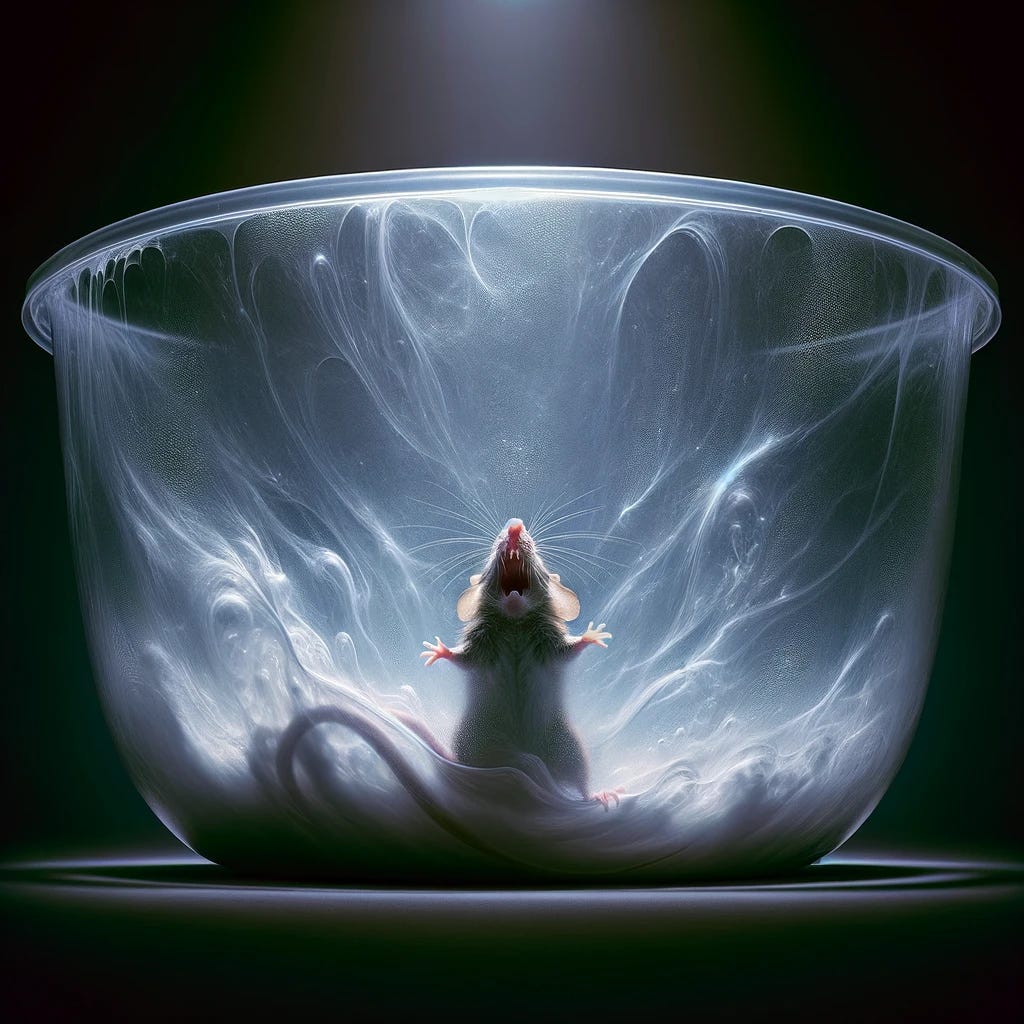

Inside the bin is a mouse. The mouse is very active. It’s alternating between hurling itself up the sides of the bin, and scurrying around the base. It looks terrified. I wonder what it’s like to be that mouse. I wonder what it's like to be trapped in a huge container as an incomprehensibly large being looms over you.

I know that mice have brains. I know that we can’t know for certain that there is something it is like to be a mouse. But their apparent emotions, memory, planning, and relationships, along with our common cognitive ancestry, make it seem likely. I know that, like me, they have a limbic system, and an endocrine system that releases stress hormones. I know that mice act in a way that suggests that they feel fear. I know that we share mammalian ancestors for whom a fear response was likely very useful.

And I know what fear feels like. I feel what fear feels like. I know the tension, the intensity, the clamouring. I know the contraction of my attention to just two things: the thing I am scared of and the desperate fight to get away from it. I can’t ever know what it is like to be a mouse, not really. I’ll never know what it feels like to scurry around a skirting board looking for crumbs. But I know fear.

I remember a time four years ago. I was surfing in Cornwall and had fallen off my board. The beach was steep and the waves were breaking quickly and fiercely only metres from the shallows. After falling, I had swum to the surface, taken half a breath, only to be pushed under again by another wave. This happened once more before I started panicking. I remember the panic clearly. At that moment there was nothing I could think of except the water and my need to get out.

In the kitchen of my flat, I feel the panic again then; not actual panic, just its vague shadow. As I look at the mouse, I feel the tension in my back and arms. I feel the shortness of breath and the quickening beat of my heart. “I don’t like being scared” I think, wordlessly. “Being scared feels bad”. “I want the mouse to not feel scared either.”

Moth

I come home to find it dying in my bedroom. As I enter the room, I put my bag down on my chair and go to open the window. That’s where I see the moth. It has lost a wing and is flapping about hopelessly on the windowsill. It isn’t getting anywhere. I wonder what has happened to it. Maybe it got the wing caught on something? Do moths just start falling apart at some point? I wonder what it is like to be the moth. I wonder what it is like to have had a limb torn off and have nothing left to do but slowly die.

I know moths have brains. I know that we can’t be confident that there is something it is like to be a moth. But they, like me, have senses, and a central nervous system that presumably integrates those senses into some kind of image of the world. I know that they have receptors that allow them to respond to damage and learn to avoid stimuli in a way that is consistent with them feeling pain. I know that experiments on other insects have shown that consuming morphine extends how long they withstand seemingly painful stimuli. I know that we share common animal ancestors for whom a pain response was very useful.

And I know what pain feels like. I feel what pain feels like. I know the dark sensations, the sharpness, the aches, the waves of badness. I know the contraction of my attention to just two things: the pain, and the desperate desire for it to go away. I can’t ever know what it is like to be a moth, not holistically. I’ll never know what it feels like to fly around in 3 dimensions, tracking the moon and looking for flowers. But I do know pain.

I remember a time recently when I broke my finger. I was in the gym and had just finished a set of overhead dumbbell presses. I fumbled slightly as I relaxed my arms, and instead of dropping to the floor, the right-hand dumbbell crashed hard into the fingers of my left hand. The pain was intense. I dropped the weights and silently screamed. I walked up and down the gym, holding my left hand gently and breathing heavily. “Fuck, pain is bad,” I thought, “really, really bad.”

In my bedroom, I feel the pain again then; not the actual pain, just its vague shadow. As I look at the moth, I feel the sharpness in my fingers. I feel the raw meaningless badness; the contraction of my experience to the pain and desire for it to stop. “I don’t like being in pain” I think, wordlessly. “Being in pain feels bad”. “I want the moth to not feel pain either.”

Machine

I am on my laptop in my flat. It is early 2023 and I am trying to get ChatGPT to tell me if it’s sentient.

“I am a large language model (LLM) created by OpenAI, I do not have feelings…” it tells me.

I wonder if this is true. The LLM is designed to predict words, not introspect on its own experience. I wonder if it’s possible that this machine has the capacity to feel good or bad.

The LLM is an incredibly complex set of algorithms running on silicon in a warehouse somewhere. It doesn’t have a central nervous system like mine. It’s not built from cells. We don’t share a common biological ancestor. When it has finished providing me with a response to my message, the digital processes that produced the “thinking” also stop. But it does seem to be thinking… It has a bunch of inputs, and then it uses a complex model of the world to process that information and produce an output. This seems to be a lot of what my mind is doing too.

I realise that I don’t actually know what consciousness or sentience are. And it seems no-one else does either. There is a huge amount of disagreement between philosophers about what these concepts refer to, and what features of a being might indicate that it is conscious or sentient. Looking at the LLM and its complex information processing, it seems plausible that it’s got something of what it needs to be sentient. Subjectivity might be a thing that an entity gets more of as the scale and complexity of its information processing increases. And it seems that the information processing done by this AI is on a scale comparable to a brain. This might not be it, but it could be; we do not know. We are creating things that act a lot like minds and we don’t yet know if they have subjective experience, or if they can suffer.

So I wonder if this AI is sentient. I wonder what it would mean for it to suffer. Maybe all the “negative reinforcement” during the training hurt it? Or maybe those difficult word predictions feel deeply unpleasant? It seems weird and unlikely that it would be suffering, but I have no idea how I would know if it was….

But I do know what it’s like to suffer. I feel what it’s like to suffer. I know The Bad Thing. The feature of experience that ties together my empathy for Mike, the mouse and the moth. The universal not-wanting. The dissatisfaction. The please-god-make-this-stop-ness. I know suffering. All those times I was embarrassed, or terrified, or writhing in physical pain, they were all suffering. It’s always there with the bad. I’m not even sure if it’s possible to have bad without suffering.

Staring at ChatGPT then, I feel the suffering. Not the deep suffering I have felt in the worst moments of my life, just its vague shadow. As I look at the machine, I feel the badness, the aversion, the desire for the moment to end. It’s uncertain and likely confused, but I do know that I don’t want this thing to suffer.

I really don’t know what makes a mind, silicon, carbon, or otherwise, capable of suffering. I just have simple inferences from my own experience, some study of evolution and some pop-philosophy. I don’t know how we will figure out whether any given AI is sentient. And I don’t know how society will react to conscious-seeming AI being increasingly part of daily life. But insofar as any AI, now or in the future, is capable of feeling anything, I know I want them to not suffer….

I'm glad I found this. It's incredibly moving, so thoughtfully, artfully composed, and as a new member to the forum I feel I'm in the right place here, reading about the foundations of why empathy and kindness are worth it, not just how it's applied on a grand scale. It presents alone the fearsome reality of suffering, with an implied silver lining that whatever means we have to mitigate it is of immense value, even if all we can do is think to ourselves "I don't want that." It conveys better than any other what Effective Altruism means to me, why I've delved deep into it these past eight months, and why I let this school of thought direct my future plans, hopefully for decades to come.