Epistemic status: Not particularly certain. I am very likely wrong about some or all of these points, and would like to hear counterarguments to these in the comments.

Many utilitarians and effective altruists advocate moral circle expansion as a cause area in order to reduce far future suffering. While I am a negative-leaning utilitarian, I am skeptical that moral circle expansion will result in less suffering in the future, and I could realistically see it contributing to active harm. Herein, I will discuss the reasons for my skepticism.

Orthogonality of values

For the purposes of this article, I will define a large moral circle as a utility function that extends to a large number of individuals, i.e. people with a large moral circle care about what happens to a large number of beings. The main problem I see is that people with a large moral circle are not necessarily utilitarians. Moral circle expansion combined with negative utilitarianism may be a good thing, but in practice the two will not necessarily go together. A malevolent sadist who wants to cause as much suffering as possible could be considered to have a large moral circle, but putting their values into practice would make the world far worse. Religious fundamentalists who want to impose their morality on everyone also arguably have a large moral circle, but the values they want to force on everyone are based on religious principles rather than utilitarian considerations.

The dominance of life-focused values

In order to determine the effects of moral circle expansion, we need to determine which values individuals with large moral circles will hold in the long run. I would argue that the most adaptive values are those that assert that life is sacred and that aim to preserve and expand life as much as possible, and that their influence may become far more pronounced over time. The reason is simply natural selection: individuals who value life will reproduce more than individuals who don't. Religions like Christianity and Islam, for example, encourage their followers to proselytize and promote natalist values, and both consider suicide sinful. Antinatalists generally do not reproduce, so any predisposition to antinatalism will tend to be weeded out of the population over time. Andrés Gómez Emilsson has argued that the future could potentially be dominated by “pure replicators”: patterns that value nothing except replicating themselves. Maladaptive values may only be able to exist within the Hansonian dreamtime, and will be supplanted by more adaptive value systems as the dreamtime subsides.
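The selection argument can be made concrete with the textbook discrete-generation selection equation, p' = p·w / (p·w + (1 - p)), where p is the fraction of the population carrying a trait and w is carriers' average number of offspring relative to non-carriers. The sketch below is only an illustration under strong assumptions: the trait is passed on perfectly from parent to child, w stays constant, and there is no conversion in either direction (proselytizing, which the paragraph above mentions, is not modeled). The function name `trait_frequency` and the numbers in the example are hypothetical.

```python
def trait_frequency(p0, relative_fitness, generations):
    """Return the carrier frequency in each generation under simple selection.

    p0: initial fraction of the population carrying the trait.
    relative_fitness: carriers' mean offspring divided by non-carriers'
        mean offspring (assumed constant across generations).
    """
    freqs = [p0]
    p = p0
    for _ in range(generations):
        # Carriers contribute p * w offspring, non-carriers (1 - p) * 1;
        # the new frequency is the carriers' share of the next generation.
        p = p * relative_fitness / (p * relative_fitness + (1 - p))
        freqs.append(p)
    return freqs


# Hypothetical example: 10% of people carry a predisposition that
# reduces their fertility by 20% relative to everyone else.
for gen, p in enumerate(trait_frequency(0.10, 0.8, 30)):
    if gen % 10 == 0:
        print(f"generation {gen:2d}: {p:.6f}")
```

Any w below 1 drives p toward zero, and the iteration matches the closed form p_n = p0·w^n / (p0·w^n + 1 - p0). The real-world question is whether cultural transmission (persuasion, conversion) can outpace that decline, which this toy model leaves out by construction.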

A recent example of the effects of a large moral circle without utilitarianism is the overturning of Roe v. Wade. Anti-abortion activists and the Supreme Court justices who overturned it have a large moral circle as defined above, since they want to impose their values on an entire country. But they are not utilitarians with suffering-focused values, and are instead motivated by religious principles and the belief that life is sacred.

People with large moral circles will likely want to create large populations of sentient beings. From the perspective of an antinatalist or efilist, creating large amounts of sentient life would be actively harmful. Many environmentalists want to increase wild animal populations and prevent species from going extinct, yet it could be argued that utilitarians should instead advocate wildlife antinatalism in order to decrease suffering in nature. Antinatalists may therefore want to promote moral circle narrowing rather than expansion.

Are large moral circles sustainable?

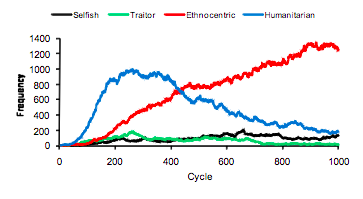

Another potential problem with moral circle expansion is that large moral circles may be unsustainable. There is a significant risk that groups with large moral circles could collapse, or be out-competed in the long run by groups with narrower moral circles. Universalism is a fairly recent development in human civilization; throughout history, ethnocentrism has been the norm in most cultures. One study modeled different value systems as competing evolutionary strategies, including ethnocentrism, selfishness, universalism, and traitorousness, and used a simulation to determine which values would win out in the long run. In that simulation, ethnocentric values tended to prevail.
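The post doesn't name the study, but the four strategies match those in Hammond and Axelrod's (2006) agent-based model of ethnocentrism, so the sketch below follows that general kind of model. It is my own reconstruction, not the study's code: agents live on a wrap-around grid, each carries a group tag and two traits (help same-tag neighbours? help other-tag neighbours?), helping costs the helper and benefits the neighbour, and agents reproduce with mutation into empty adjacent cells. All parameter values are assumptions chosen for illustration, and I make no claim that this sketch reproduces the study's result.

```python
import random
from collections import Counter

# Each agent: (group tag, cooperates with same tag?, cooperates with other tags?)
STRATEGIES = {
    (True, False): "ethnocentric",
    (True, True): "universalist",
    (False, False): "selfish",
    (False, True): "traitorous",
}


def simulate(size=30, steps=300, n_tags=4, base_ptr=0.12, cost=0.01,
             benefit=0.03, death=0.10, mutation=0.005, seed=0):
    """Run a toy tag-based cooperation model and count surviving strategies."""
    rng = random.Random(seed)
    grid = {}  # (x, y) -> (tag, coop_same, coop_other)
    cells = [(x, y) for x in range(size) for y in range(size)]

    def neighbours(x, y):
        # Four orthogonal neighbours on a wrap-around (toroidal) grid.
        return [((x + dx) % size, (y + dy) % size)
                for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1))]

    def mutate(agent):
        # With probability `mutation` each: redraw the tag, flip each trait.
        tag, same, other = agent
        if rng.random() < mutation:
            tag = rng.randrange(n_tags)
        if rng.random() < mutation:
            same = not same
        if rng.random() < mutation:
            other = not other
        return (tag, same, other)

    for _ in range(steps):
        # 1. Immigration: one random agent enters a random empty cell.
        empty = [c for c in cells if c not in grid]
        if empty:
            grid[rng.choice(empty)] = (rng.randrange(n_tags),
                                       rng.random() < 0.5, rng.random() < 0.5)
        # 2. Interaction: a helper pays `cost`, the helped neighbour gains `benefit`.
        ptr = {pos: base_ptr for pos in grid}  # probability to reproduce
        for pos, (tag, same, other) in grid.items():
            for nb in neighbours(*pos):
                if nb in grid and (same if grid[nb][0] == tag else other):
                    ptr[pos] -= cost
                    ptr[nb] += benefit
        # 3. Reproduction in random order, into an empty neighbouring cell.
        order = list(grid)
        rng.shuffle(order)
        for pos in order:
            free = [nb for nb in neighbours(*pos) if nb not in grid]
            if free and rng.random() < ptr[pos]:
                grid[rng.choice(free)] = mutate(grid[pos])
        # 4. Death: every agent dies independently with probability `death`.
        for pos in list(grid):
            if rng.random() < death:
                del grid[pos]

    return Counter(STRATEGIES[(same, other)] for _, same, other in grid.values())


if __name__ == "__main__":
    print(simulate())
```

Storing the grid as a dictionary of occupied cells keeps the code short at the cost of speed; longer runs, several seeds, and a sweep over `cost` and `benefit` would be needed before drawing any conclusion about which strategy dominates.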

Moral circle expansion could cause psychological suffering

A final reason why I’m skeptical of moral circle expansion as a cause area is that it could cause significant psychological suffering for its adherents. This may be less relevant to longtermism than the points discussed above, but it is a relevant short-term consideration. If people with large moral circles are high in affective empathy, any event that violates their values will likely cause them significant psychological suffering. Consider mass shootings, for example: any disaster that draws national attention causes mourning on a national scale. From a felicific calculus standpoint, the vast majority of the suffering generated comes from the many people who feel bad for the victims, and the suffering felt directly by the victims is only a small proportion of the total.
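The felicific-calculus claim can be written out under an assumption the paragraph leaves implicit: that suffering adds up linearly across people. Let $n_v$ victims each suffer $s_v$ and $n_o$ observers each suffer $s_o$. The numbers plugged in below are purely hypothetical, chosen only to show the shape of the argument.

```latex
\text{observer share}
  = \frac{n_o s_o}{n_v s_v + n_o s_o}
\qquad
\text{e.g. } n_v = 50,\; n_o = 10^7,\; s_o = 10^{-4} s_v:
\quad
\frac{10^7 \cdot 10^{-4}\, s_v}{50\, s_v + 10^7 \cdot 10^{-4}\, s_v}
  = \frac{1000}{1050} \approx 0.95
```

The share approaches 1 whenever $n_o s_o \gg n_v s_v$, so even tiny per-observer suffering can dominate the total if enough people are affected; the claim fails if per-observer suffering is small relative to the size of the audience.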

Consider a hypothetical society where everyone is a clinical psychopath or sociopath. Ironically, it is likely that this society would have less suffering per capita than a society of empaths. Even if a society of sociopaths would have more malevolence and criminality on average, it would also have a complete absence of affective empathy, guilt, and grief. Because of this, it may have far less suffering on average than a society higher in empathy.

I think there are a lot of problems with the idea of directly pushing for moral circle expansion as a cause area -- for starters, moral philosophy might not play a large role in actually driving moral progress. But I see the concept of moral circle expansion as a goal worth working towards (sometimes indirectly towards!), and I think the discussion over moral circle expansion has been beneficial to EA -- for example, explorations of some ways our circle might be narrowing over time rather than expanding.

I'd also like to mention that, of course, a cellular-automata simulation of different evolutionary strategies is very different from the complex behavior of real human societies. There are definitely lots of forces that push towards tribalistic fighting between coalitions (ethno-nationalist, religious, political, class-based, and otherwise), but there are also forces that push towards cooperation and universalism:

"I think there are a lot of problems with the idea of directly pushing for moral circle expansion as a cause area -- for starters, moral philosophy might not play a large role in actually driving moral progress. "

Could you please explain to me why, in your view, this is a "problem" with moral circle expansion as a cause area? Thanks!

I like the idea of expanding people's moral circle, I'm just not sure what interventions might actually work. The straightforward strategy is "just tell people they should expand their moral circle to include Group X", but I'm often doubtful that this strategy will win converts and lead to lasting change.

For example, my impression is that things like the rise and decline of slavery were mostly fueled by changing economic fundamentals, rather than by people first deciding that slavery was okay and then later remembering that it was bad. If you wanted to have an effect on people's moral circles, perhaps it's better to try to influence those fundamentals than to persuade people directly? But others have studied these things in much greater depth: https://forum.effectivealtruism.org/posts/o4HX48yMGjCrcRqwC/what-helped-the-voiceless-historical-case-studies

By analogy, I would expect that creating tasty, cost-competitive plant-based meats will probably do more to expand people's moral concern for farmed animals than trying to persuade them directly about the evils of factory farming will.

Since I think people's cultural/moral beliefs are basically downstream of the material conditions of society ("moral progress not driven by moral philosophy"), I think that pushing directly for moral circle expansion (via persuasion, philosophical arguments, appeals to empathy, etc.) isn't a great route towards actually expanding people's moral circles.

Ah okay, thanks for explaining. Sounds like by "pushing for moral circle expansion as a cause area", you meant "pushing for moral circle expansion via direct advocacy" or something more specific like that. When I and others have talked about "moral circle expansion" as something that we should aim for, we're usually including all sorts of more or less direct approaches to achieving those goals.

(For what it's worth, I do think that the direct moral advocacy is an important component, but it doesn't have to be the only or even main one for you to think moral circle expansion is a promising cause area.)

The middle point is pretty interesting! (I think questions of sustainability/inevitability have mixed implications for aspiring effective altruists, because they might update your views on size/importance and tractability in the opposite directions)