As part of a sequence of posts on the Meta Coordination Forum (MCF) 2023, this post summarizes the results of the MCF Community Survey.

About the Community Survey

We (the organizers of Meta Coordination Forum 2023) invited community members to share their views on meta-EA topics in an announcement about MCF in early September 2023.[1]

The survey we invited community members to fill out included many of the same questions we asked MCF invitees (summarized here), as well as some new questions on topics like transparency. Thirty-six (n = 36) members of the community responded. In the summary of results below, we compare community members' responses with MCF invitees’ responses when possible.

Caveats

- This summary is likely not representative of the EA community: the survey received relatively few responses and was not widely shared (only in one Forum post). In hindsight, I (Michel) wish we had publicized this survey more, but I understand why we didn't prioritize it at the time: the weeks before MCF were very busy for the team.

- We often used LLMs to help write the summaries below.

The Future of EA

Briefly outline a vision for effective altruism that you’re excited about.

Community & Inclusion (7 mentions):

- A welcoming and friendly EA that poses the question, "What's the most effective way to do good?" and doesn't impose strict ideologies.

- EA should be inclusive, catering to kind, curious, ambitious individuals and fostering overlaps with other communities.

- It should represent an open church that respects diverse opinions on cause areas.

- Actively address racism, classism, and sexism, and maintain strong ties with the academic world.

- It should maintain worldview diversity, being aware of every cause area's limitations.

- Emulate successful movements like the climate, civil rights, and animal rights movements.

Career & Life Paths (4 mentions):

- Encourage individuals to pursue higher-impact life paths, through donations, choosing impactful careers, or adjusting current roles for greater impact.

- Equip people with tools and training to achieve high impact. EA shouldn't convey that only the 'elite' matter; instead, it should be inclusive.

- Offer guidance suited to various life situations; for example, advice overly focused on studying computer science or consultancy isn't suitable for everyone.

- Allow people to enjoy EA as a community regardless of their professional affiliations. Example: a school teacher should be valued at EA community gatherings.

Governance & Structure (5 mentions):

- Infrastructure to handle interpersonal conflicts and deal with problematic individuals.

- Develop independent cause/field-specific professional networks decoupled from EA.

- Encourage decentralized, localized communities, professionalize cause areas, and shift from centralized funding for community building.

- EA should preach and practice worldview diversification and cause neutrality. Reduce concentration of power, increase democratic elements, and ensure inclusivity and professionalism.

- Improve coordination across the movement, better map organizations and their roles, and adopt innovative governance techniques for enhanced collaboration.

Global Perspective (3 mentions):

- EA should become truly global, reflecting the worldwide nature of future-shaping transformative technologies.

- A global, decentralized EA movement led by community builders who influence people and equip them with tools and networks.

- Focus on ensuring EA remains a hub for diverse cause areas to interact, provides capacity for neglected global threats, and aims to become a mainstream ideology.

Role & Outreach (5 mentions):

- EA should act as a catalyst to shift the entire impact-based economy towards higher efficacy.

- Envision EA as an ideology 'in the water', akin to environmentalism or feminism, making it mainstream.

- EA should be a movement focusing on the principles of scale, tractability, and neglectedness.

- Provide tools and networks to enable individuals and institutions to achieve the most good, with an emphasis on specific cause areas but remaining open to new ones.

- EA should focus on both current and future technological progress, balancing between harm prevention and growth encouragement, especially from a longtermist perspective.

What topics do you think would be important to discuss at the Meta Coordination Forum?

Respondents suggested a wide range of topics for the Meta Coordination Forum, covering various aspects of EA.

Strategy and Focus

- EA's Future Focus: Respondents suggested discussing the trajectory and focus of EA five years from now.

- Cause Prioritization: Several respondents called for a discussion of cause prioritization, especially concerning AI safety. One stressed the importance of monitoring causes' neglectedness and importance.

- Resource Distribution: Respondents asked how to distribute resources between AI and non-AI work and how to find new mega donors to diversify funding away from Open Philanthropy.

Governance and Oversight

- Community Oversight: Multiple respondents raised questions about the governance and oversight of EA organizations, including the efficacy of the Community Health Team.

- Open Philanthropy’s Role: Multiple responses focused on Open Philanthropy’s large influence due to its funding monopoly, questioning its accountability and suggesting the introduction of other funders.

- Decision-making: Multiple respondents asked about top-down vs. bottom-up decision-making within EA. Questions about democratic elements in central EA were also raised.

Community and Culture

- Community Health: Some responses wanted to see more effort put into preventing what they perceived as governance failures in cases like FTX and Nonlinear, alongside better support for community builders.

- Inclusion and Accessibility: These were brought up in the context of what type of community EA should be.

- Branding and Identity: Several responses discussed EA’s branding, including the epistemic health of the community and who should be responsible for the "brand."

- Global Inclusion: One respondent wanted to see more discussion on promoting EA principles in the Global South.

Transparency and Communication

- Internal and External Communication: Multiple responses indicated a need for clearer internal communication and a clearer division between internal and external communication.

- Coordination Mechanisms: There were calls for better coordination mechanisms in general, and for discussion of how information should be disseminated to the broader community.

Ethical and Philosophical Considerations

- Longtermism: Respondents suggested discussing its importance and how closely connected it is to EA.

- Consequences: One person questioned whether EA is actually bringing about better consequences in the world.

- Ethical Frameworks: Points were raised about whether the community should lean more into non-consequentialist ethics and if it should be more risk-averse.

Specific Initiatives and Events

- Recent Developments: AI safety was noted as the area where the strategic situation has shifted the most, citing events like ChatGPT, GPT-4, and various AI letters and task forces.

- FTX and Scandals: How to move on from FTX and other internal scandals, such as sexual assault and governance failures, was mentioned multiple times.

- Role of Various EA Entities: Topics like the role of EA Groups, EA Funds, and CEA were brought up, with a hope of having these groups make their priorities clearer.

Overall, the suggested topics reflect a community grappling with questions about its focus, governance, and ethical orientation, while also considering how to improve inclusivity, communication, and long-term sustainability.

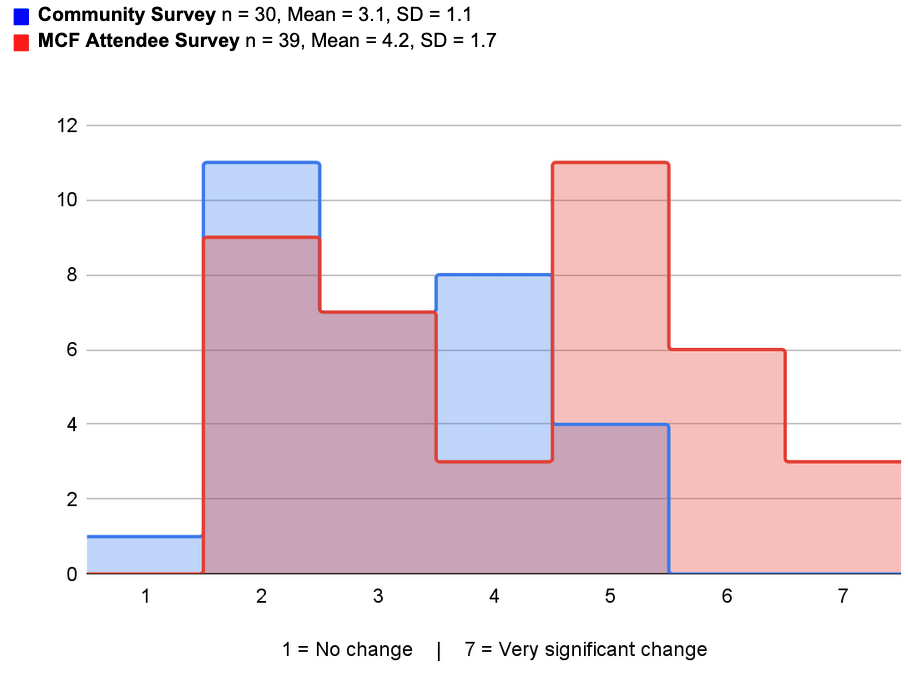

How much should reflecting on the FTX collapse change the work-related actions of the average attendee of this event?

Community views on the future of EA

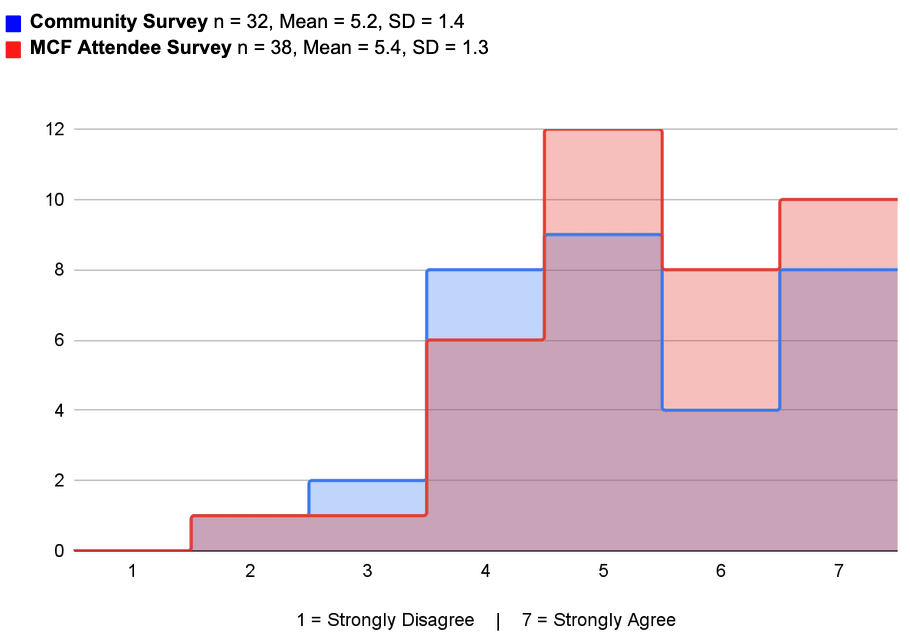

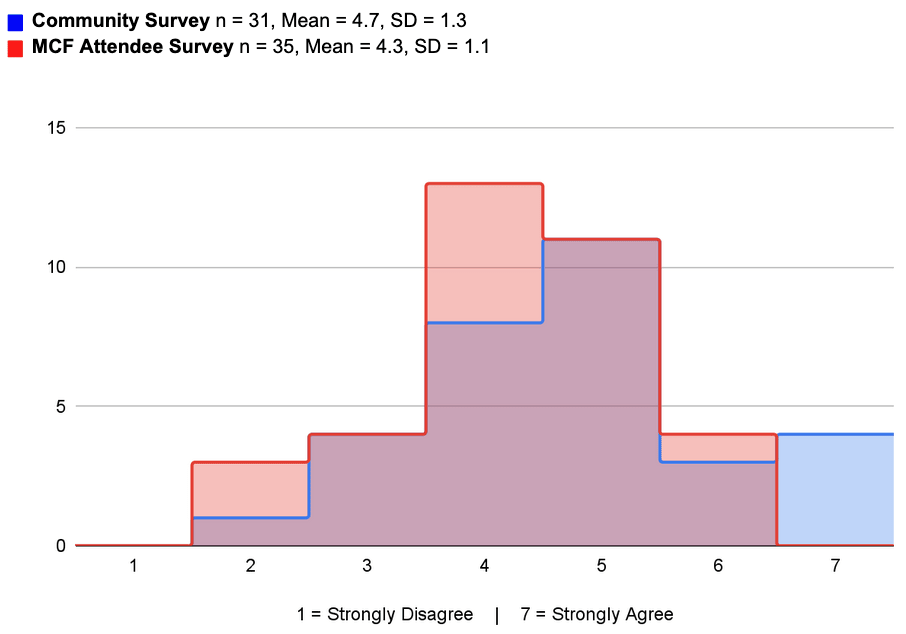

When possible, we compared these views to Meta Coordination Forum invitees who were asked the identical question.

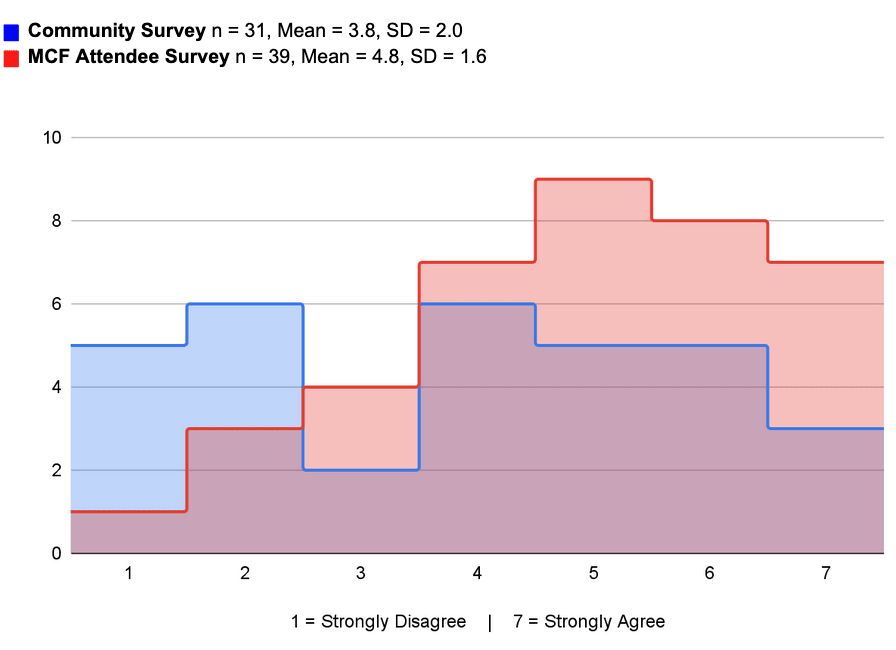

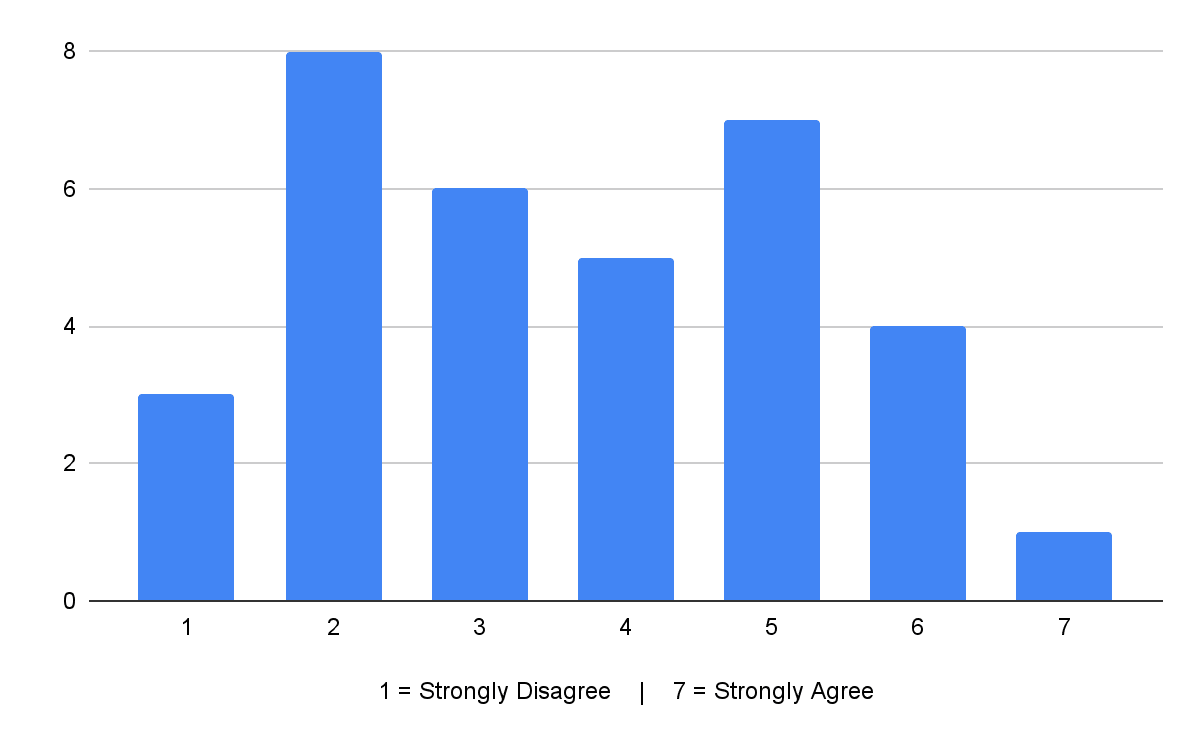

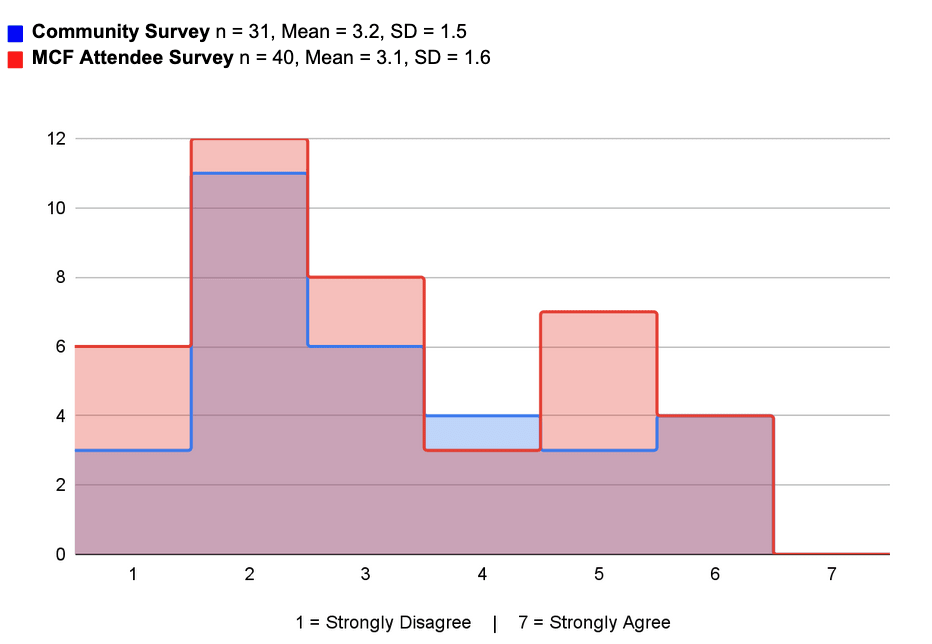

EA should be a community rather than a professional network. (n = 33, Mean = 3.5, SD = 1.6)

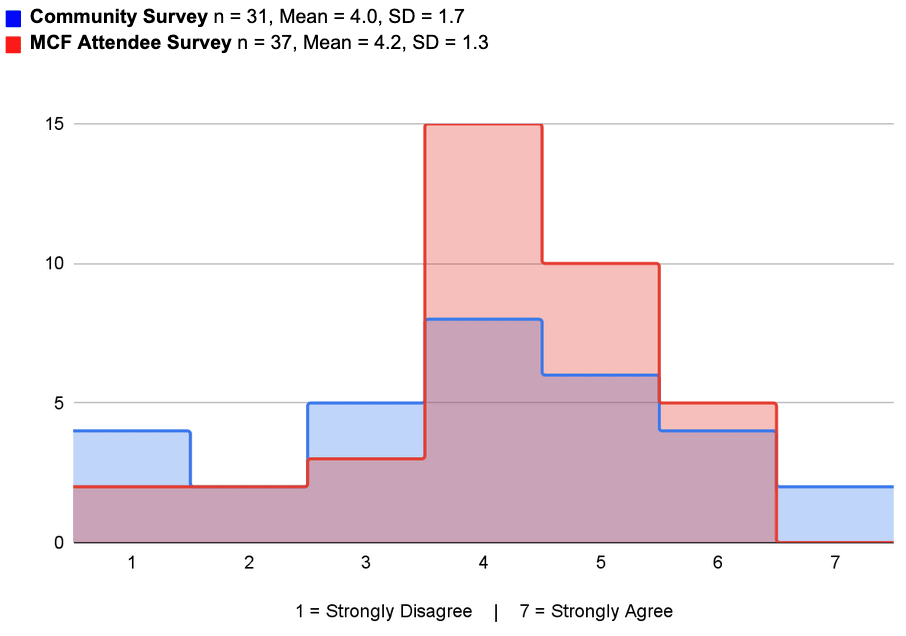

Assuming there will continue to be three EAG-like conferences each year, these should all be replaced by conferences framed around specific cause areas/subtopics rather than about EA in general (e.g. by having two conferences on x-risk or AI-risk and a third one on GHW/FAW).

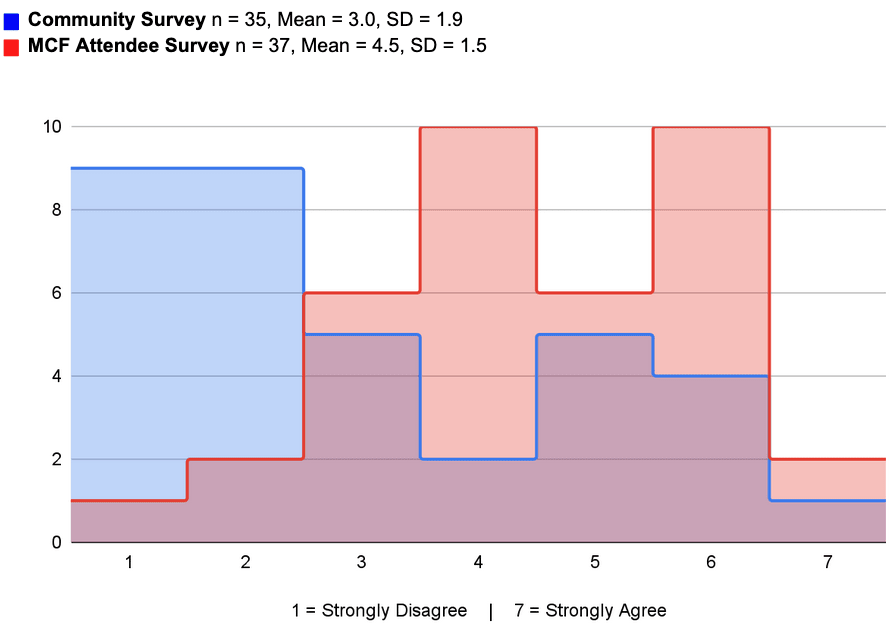

There is a leadership vacuum in the EA community that someone needs to fill.

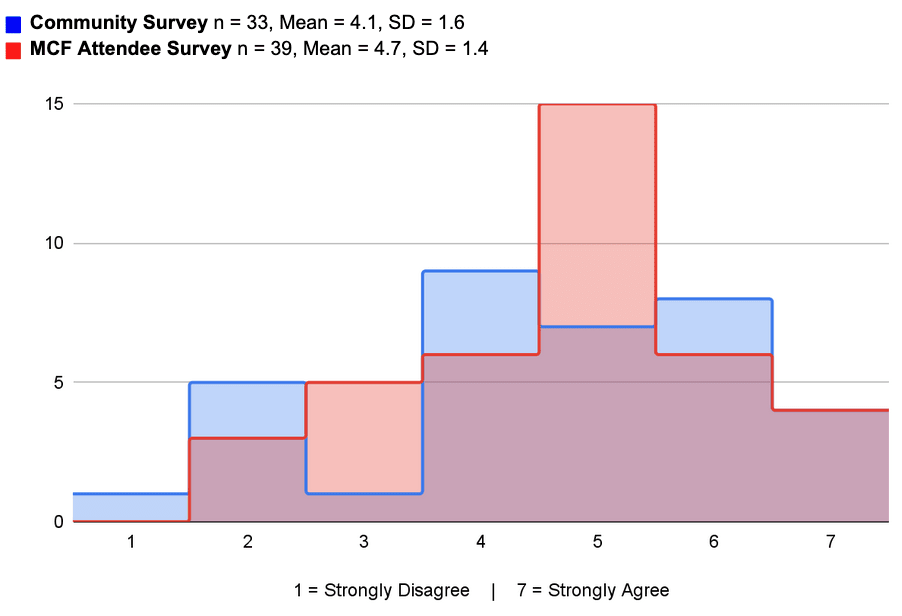

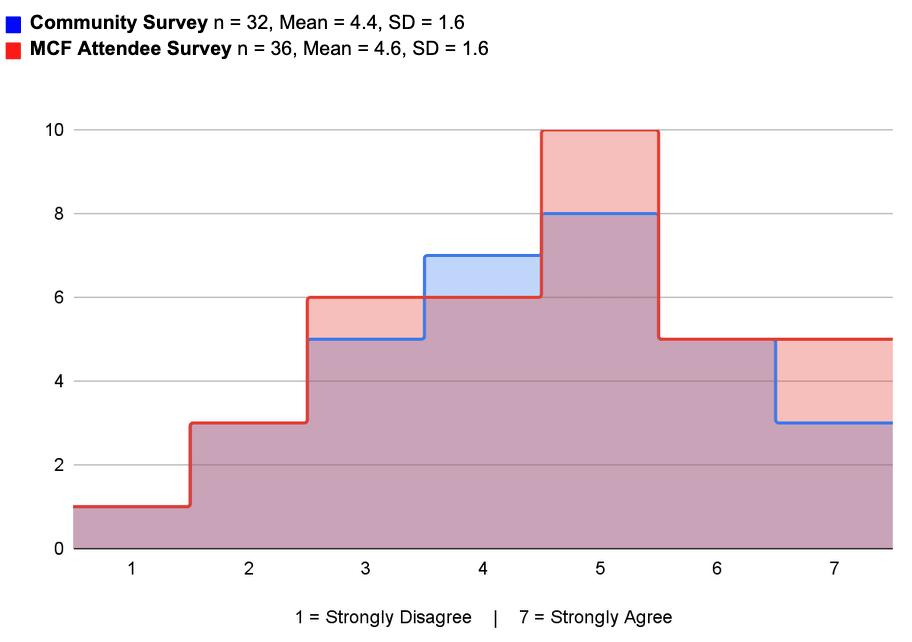

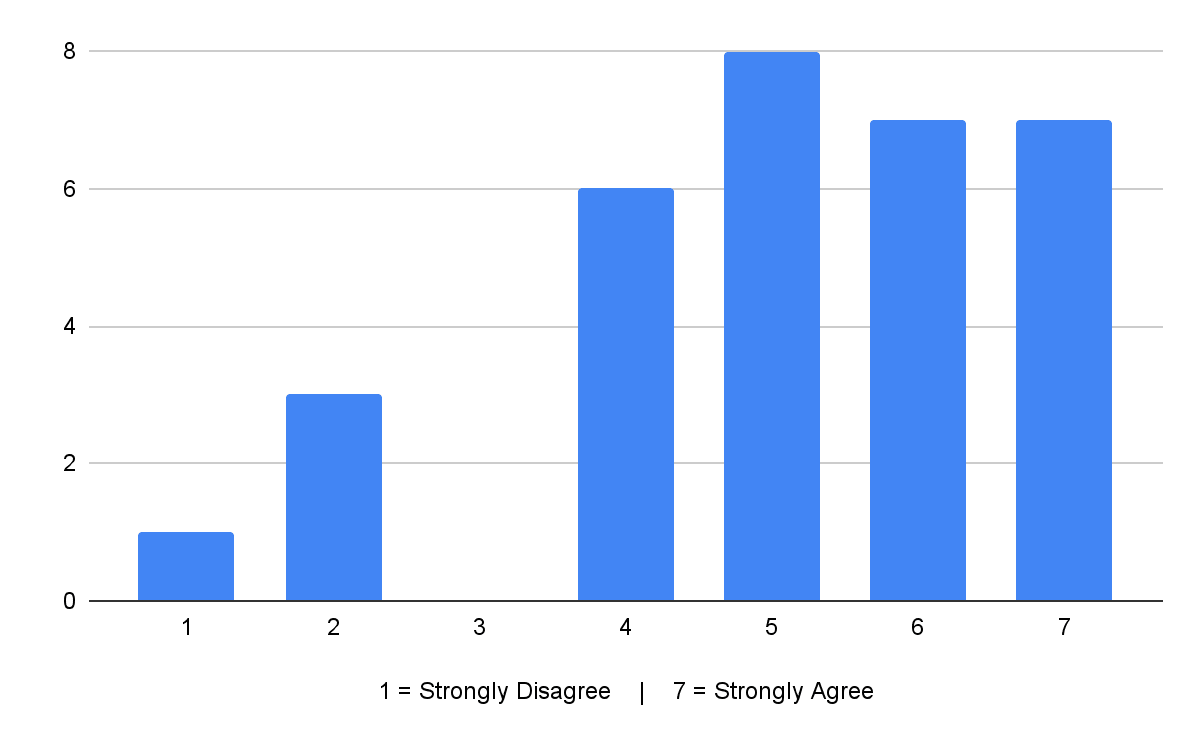

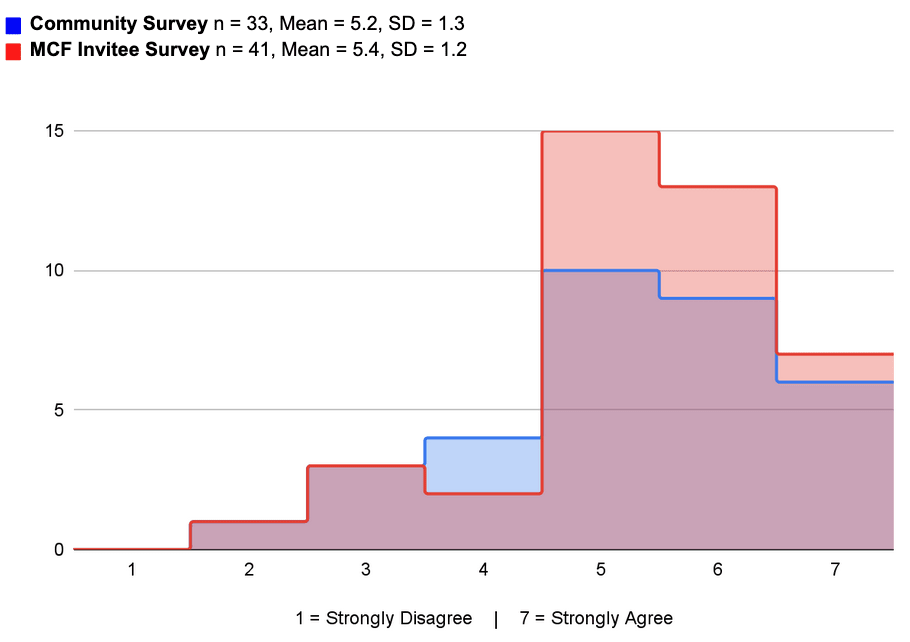

We should focus more on building particular fields (AI safety, effective global health, etc.) than building EA. (n = 34, Mean = 4.1, SD = 1.6)

Transparency

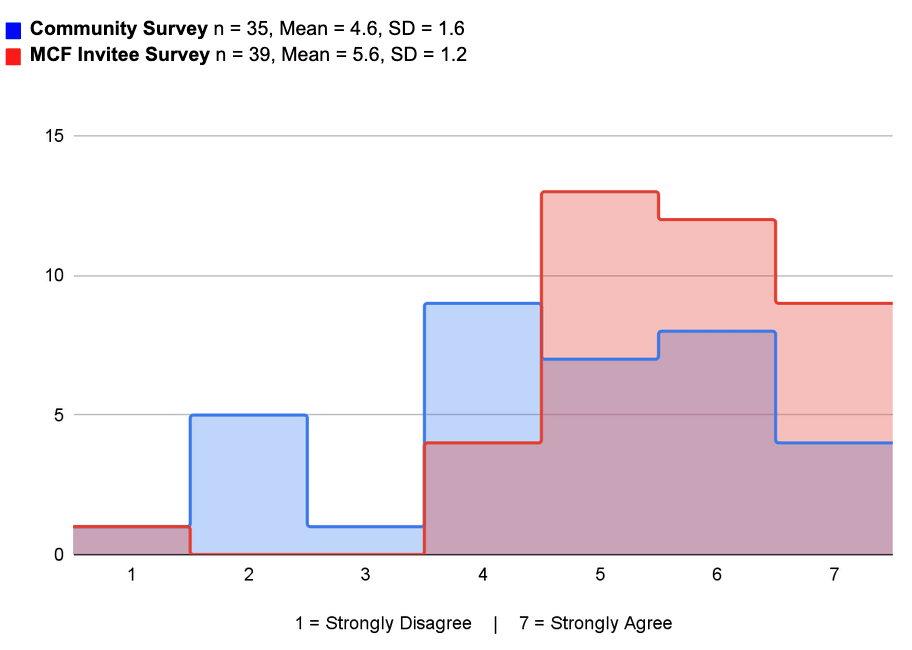

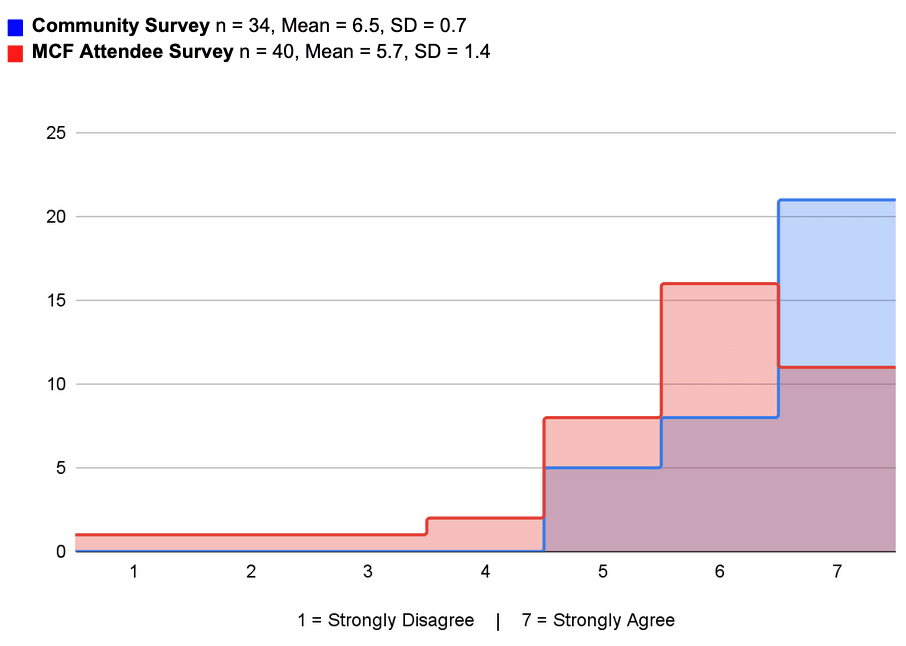

EA thought-leaders and orgs should be more transparent than they currently are. (n = 32, Mean = 5.1, SD = 1.7)

If you're pro more transparency on the part of EA thought-leaders and orgs, what type of information would you like to see shared more transparently?

Items listed below were mentioned once, unless otherwise noted. This is a long list, so org leaders may want to focus on the items people mentioned most often.

- Justifications for Role Assignments: A clear rationale for why specific people are chosen for roles, especially when they lack obvious experience, to avoid perceptions of nepotism or bias.

- Moral Weights: Transparency about the ethical considerations that guide decisions.

- Regular Updates & Communication:

  - Organizational updates every 6-12 months.

  - Monthly, informal updates from employees.

  - Write-ups akin to communicating with a trusted friend, sharing personal and institutional positions. (Multiple mentions)

- Insight into Operations and Strategy:

  - Monitoring, evaluating, and sharing results with the community.

  - How decisions were made and who was consulted.

  - Risks the community should be aware of.

  - Opportunities for community involvement.

  - Financial incentives.

  - Concrete plans for various issues. (Multiple mentions)

- Transparency from Major Funders: Explanation of funding priorities and the factors that influenced them. For example, knowing that a funder aims to spend its founder's net worth within the founder's lifetime could explain certain actions. (Mentioned twice)

- Organizational Culture: Information about the work culture, measures for a safe environment, and potentially on addressing social issues like transphobia and racism.

- Transparent Decision-Making:

  - Disclosure of conflicts of interest.

  - Responses to criticisms.

  - Lists of advisors and board members, and their selection criteria.

  - Strategies, theories of change, and underlying assumptions. (Multiple mentions)

- Community Building:

  - Transparency about focus areas (e.g., why community building focuses on elite universities).

  - Decentralization of power around funding.

  - Clarity on decision-makers in EA orgs and their structure. (Multiple mentions)

- Accountability and Governance:

  - Public blog posts about decision-making within EA orgs, ideally semi-annually.

  - Suggestions for more democratic features like community elections for some leadership positions. (Mentioned twice)

- Miscellaneous:

  - A post-FTX reflection.

  - Updates on what EA Funds is doing.

  - A transparent scope.

The Relationship between EA and AI safety

Given the growing salience of AI safety, how would you like EA to evolve?

AI Safety and EA Overlap:

- Maintaining Distinction (7 mentions):

  - Many feel that AI safety and EA should not be seen as one and the same; in particular, AI safety should not dominate EA to the point that the two become synonymous.

  - Some think the overlap between EA and AI safety is currently too extensive. They believe that community building efforts should not converge further, but should expand into newer, untapped areas.

- Separate Infrastructure (4 mentions):

  - Proposals include creating an AI safety equivalent of the Centre for Effective Altruism (CEA) and allowing AI safety-focused organizations to operate as active spin-offs, similar to Giving What We Can (GWWC) or CE.

- Continued Connection (1 mention):

  - Despite advocating for a distinct AI safety community, one respondent also acknowledged the inherent ties between AI safety and EA, emphasizing that AI safety will always remain linked to EA.

Role of AI Safety in EA:

- Support (5 mentions):

  - Many respondents acknowledge the importance of AI safety and feel that EA should continue to provide resources, mentorship, and support. Some emphasize the need for more programs, pipelines, and organizations.

- Broad Approach (3 mentions):

  - There's a sentiment that while AI safety is critical, EA should not singularly focus on it at the expense of other initiatives. A diverse and multifaceted approach is essential to address various global challenges.

- Worldview Diversity (2 mentions):

  - Some respondents advocate for worldview diversity within EA. They stress the importance of understanding that different worldviews will assign varying levels of importance to AI safety.

- Engagement Depth (2 mentions):

  - Respondents advocate for deeper engagement with AI safety as a cause, urging against merely nudging people into default paths.

Public Perception and Branding:

- Concerns about Association (5 mentions):

  - Some respondents express concerns that closely tying AI safety to EA could lead to public skepticism, especially from groups that might traditionally be wary of EA. This might hinder collaborative efforts in areas like policy advocacy.

- Potential PR Implications (2 mentions):

  - The association of AI safety with EA might deter some talent from joining either cause. Given the recent PR challenges faced by EA, some believe it might be advantageous for AI safety to be recognized as its own field/cause.

Strategic Considerations:

- Growth and Talent Acquisition (3 mentions):

  - A few respondents emphasize the need to grow both the AI safety and EA communities. They suggest that recruitment for AI safety shouldn't necessarily require familiarizing prospects with the entire EA ideology, and vice versa.

- Near-term Optimization (1 mention):

  - A note of caution was raised against over-optimizing based on near-term timelines.

Safety and Security Considerations (1 mention):

- With the rise of AI capabilities, one respondent thought that the EA community should be more security conscious, including minimizing social media use and being wary of potentially hackable technologies.

Other focuses:

- Digital Sentience (1 mention):

  - There's a call for greater focus on the issue of digital sentience.

- Effective Giving (1 mention):

  - A respondent expressed the desire for the growth of effective giving as a primary facet of EA.

Agreement voting on the relationship between EA and AI safety

Most AI safety outreach should be done without presenting EA ideas or assuming EA frameworks.

Most EA outreach should include discussion of AI safety as a cause area.

We should promote AI safety ideas more than other EA ideas.

We should try to make some EA principles (e.g., scope sensitivity) a core part of the AI safety field.

AI safety work should, like global health and animal welfare, include many more people who are not into effective altruism.

EA hubs should try to set up separate AI safety office spaces.

We should have separate EA and AI safety student groups at top 20 universities.

We should have separate EA and AI safety student groups at most top 100 universities.

Do you have any comments on the relationship between EA and AI safety more broadly?

- Separation vs. Integration:

  - Many respondents believe there should be a distinction between EA and AI safety. Some argue that while EA may fund and prioritize AI work, the communities and work should remain separate. They cite risks of dilution and potential misalignment if the two become synonymous.

  - On the flip side, others feel that AI safety is like any other cause within the EA framework and should not be treated differently. They emphasize the benefits of interdisciplinary exchange if AI safety remains under the EA umbrella. (Brought up multiple times.)

  - Some drew comparisons to the animal welfare space, where tensions between EA-aligned and non-EA-aligned advocates have arisen, leading to potential distrust and a tainted "animal rights" identity. There's a worry that AI safety could face similar challenges if it becomes too diluted.

  - There's a suggestion to establish a new organization like CEA focused solely on AI safety field-building, separate from other EA community-building efforts.

- Role of EA in AI Safety:

  - EA's value in the AI safety domain might lie not in recognizing AI safety as a concern (which may become mainstream) but in considering neglected or unique aspects within AI safety. EA could serve as a 'community of last resort' to address these issues.

- Concerns with too much focus on AI safety:

  - Some respondents expressed concerns about the close association between AI safety and EA. They feel that EA has become heavily dominated by AI safety discussions, making it challenging for those working in non-AI safety domains.

  - EA should not solely be about AI safety. The community should return to its roots of cause neutrality and effectiveness.

  - One respondent suggests monitoring university group feedback to ensure that attendees don't feel pressured to prioritize a particular cause. They propose specific survey questions for this purpose.

- Outreach & Engagement:

  - It's suggested that AI safety outreach should occur without the EA branding or assumptions, even if managed by local EA groups. This would prevent potential misrepresentations and ensure genuine interest.

  - There's a call for diversity and thoughtful execution in outreach to make people aware of AI risks. One respondent mentioned the idea of having someone responsible for "epistemics" during this outreach.

  - Concerns were raised about the implications of an AI safety-centric approach on EA engagement in the Global South.

Meta-EA Projects and Reforms

What new projects would you like to see in the meta-EA space?

Projects listed below were mentioned once, unless otherwise noted.

Career and Talent Development

- Career Training and Support: A repeated theme is the need for career training and financial support for people transitioning to high-impact roles.

- Talent Pipeline: Interest in creating better talent pipelines for university students, focusing on EA knowledge and rationality.

- Mid-Career Engagement: Multiple responses indicate a need for engaging mid-career professionals and addressing operational errors in EA organizations through their expertise (Mentioned 3+ times).

Governance and Internal Affairs

- Mediation and Communication: The idea of professional mediators to handle interpersonal issues within EA is floated, along with more internal mediation (Mentioned 2 times).

- Whistleblowing: Establishment of an independent whistleblowing organization to highlight conflicts of interest and bad behavior. (Mentioned 2 times).

- Transparency and Epistemics: A need for better transparency from EA leaders and organizations for improving epistemic quality (Mentioned 2 times).

- Diversifying Boards and Training: Suggestions for training people for governance roles, like serving on boards and auditing.

Outreach and Inclusion

- Global Outreach: Multiple responses advocate for making EA and AI safety more global and less elite-focused (Mentioned 2+ times).

- Community Building: Support for national/regional/supranational cooperation initiatives and secure employment options for community builders is desired.

- Diversity Initiatives: Calls for more diversity efforts, including funding for Black in AI and similar groups.

Research and Strategy

- Prioritization Research: A call for building a professional community around prioritization research.

- AI Safety: Interest in an AI safety field-building organization and a startup accelerator (Mentioned 2 times).

- Donor Advisory: A desire for more UHNW (Ultra High Net Worth) donation advisory, especially outside of longtermism.

- Effective Animal Advocacy: The need for better public communication strategies in areas other than longtermism, such as animal advocacy.

Educational Programs and Public Engagement

- Educational Curricula: Suggestions for EA-aligned rationality and ethics to be integrated into schools and universities.

- Virtual Programs: Suggestions for shorter, lower-barrier virtual programs for local community builders interested in AI.

Miscellaneous

- Alternative Models and Competitors: Desire for alternative organizations to existing EA structures, and better models for internal EA resource prioritization (Mentioned multiple times).

- Public Intellectual Engagement: Calls for EA to bring in outside experts for talks and collaboration, including figures like Bill Gates.

What obviously important things aren’t getting done?

- Leadership and Management: There's a call for more experienced professionals in leadership roles, citing issues with EA organizations hiring people who are unqualified for the roles they are taking on. This point is raised multiple times, with some respondents suggesting potentially hiring management consultants.

- Coordination in AI Safety: A recurring theme is the lack of centralized coordination, especially in AI safety and governance. It is felt that there is no specific group overseeing the pipeline of AI safety and governance, nor are there efforts to understand and fill in gaps. Some responses argue for more robust centralized coordination, even if it’s separate from meta EA organizations.

- Community Health and Reconciliation: Some respondents raised concerns about the community's overall health, specifically regarding stress and anxiety. The enforcement of community health policies is also called into question, with suggestions for a well-resourced Community Health team that is potentially separate from the team that enforces the policies.

- Policy and Global Concerns: Some respondents called for EA to extend to more countries, and to close the gap between EA theory, which often originates in the Global North, and practice, which is often directed toward the Global South.

- Skeptical Perspective on AI: One response expresses skepticism about the AI safety community’s motivations, raising concerns about the community being self-serving. It suggests that EA could garner more credibility by achieving something substantial and altruistic, such as a large-scale poverty reduction project.

- Outreach and Engagement: Respondents noted issues regarding the EA community's engagement with existing cause communities and the political left. The need for more nuanced debate and peer-review processes involving non-EA experts was also mentioned, and outreach to mid-career professionals and traditional career advice services was recommended.

- EA's Relationship to Wealth: One respondent argued that EA should not be organized around people who have money, nor be aligned with the goal of increasing their wealth.

- Funding and Philanthropy: Respondents voiced concerns about the over-reliance on Open Philanthropy, suggesting the need for 'Open Phil 2.0 and 3.0' to diversify funding sources and mitigate vulnerabilities. Earning to Give fundraising for EA causes was also suggested.

- Other Areas: Responses also touch on the need for community-wide strategy generation, coordination mechanisms, and polling. Animal welfare is noted, though the respondent claimed less familiarity with that space.

We hope you found this post helpful! If you'd like to give us anonymous feedback you can do so with Amy (who runs the CEA Events team) here.

[1] After summarizing the results, we shared a summary of responses and key themes with MCF invitees shortly before the event.

A quick note to say that I’m taking some time off after publishing these posts. I’ll aim to reply to any comments from 13 Nov.

I'd be curious to hear about potential plans to address any of these, especially talent development and developing the pipeline of AI safety and governance.

Any plans to address these would come from the individuals or orgs working in this space. (This event wasn't a collective decision-making body, and wasn't aimed at creating a cross-org plan to address these—it was more about helping individuals refine their own plans).

Re the talent development pipeline for AI safety and governance, some relevant orgs/programs I'm aware of off the top of my head include: