TLDR: Throughout human history, pattern recognition, inspiration, and reasoning have been at the core of most innovations, including artificial intelligence. It is theorised that a code-generation model able to demonstrate these abilities should be able to build recursively better AI systems. This is the currently realised 'holy grail' of AI, typically referred to as the singularity, and there appears to be a very high likelihood (>80%) of it being reached within the next 14 years. Naturally, the advent of artificial general intelligence (AGI) would occur shortly after (~15 years), alongside the development of a superintelligence. As organisations compete to be the first to build such a technology, processing power continues to increase, access to training data surges, and new algorithms and approaches to machine learning are being discovered.

Previously, I had written a post titled 'Objects in Mirror Are Closer Than They Appear' in which I briefly speculated on how a recursively self-improving AI system would soon lead to AGI. Following several discussions within the field, I was guided to three publications[1][2][3] that have not only solidified my stance on which approaches may lead to AGI, but also greatly shortened the timeline within which I believe such a threshold will be surpassed.

To make this a comprehensive argument that does not require reading my previous post, I will rehash several passages from it that I still deem relevant to my updated answer to why 'AGI will be developed by Jan 1, 2043'. With that out of the way, here is why (and how) a model capable of novel algorithm discovery, symbolic reasoning, and code generation will result in an intelligence explosion.

Defining Artificial General Intelligence

To ensure this post is an accessible read, I would like to define some core vocabulary and concepts that will be used throughout, in order to avoid conflicting interpretations.

The commonly accepted definition for AGI amongst academics states that 'an agent should possess the ability to learn, understand and perform any intellectual task that a human can'[4]. Though this may appear overly ambitious to many, one must remember that our own cognitive abilities are the result of neural activation patterns, which simultaneously permit us sentience and the experience of qualia. By applying this computational theory of mind to Turing-completeness, an AI model could convincingly imitate cognition (should it be provided with sufficient memory, high-quality training data, and an appropriate algorithm). The reason I explicitly state 'imitate' rather than 'simulate' is that there is presently no mathematical model of the brain, nor do we have an understanding of sentience (let alone consciousness), and so developing an end-to-end learning system that accurately predicts an intelligent entity's most probable actions should suffice as AGI.

Within the field, however, many critics and researchers debate whether surpassing this threshold is even feasible. Their views typically range from questioning whether AGI can demonstrate 'true' creativity to whether we can even test if it has achieved sentience. I do not deem these factors necessary for an artificial general intelligence though, as neither question can be answered for humans either. I back this point with a statement attributed to the French writer Voltaire: "Originality is nothing but judicious imitation". Take dreams as an example: a superlative display of so-called creativity, but as research[5][6] has repeatedly shown, they are simply an attempt by our brain to compile an assortment of memories into an experience. Where they fail (e.g. missing fingers, incorrect reflections etc.) is also unintentionally mimicked by diffusion models when rendering images, as demonstrated below in figure 1:

Figure 1: The result of three different diffusion models prompted with "closeup of a hand touching its reflection in a puddle". Source (from left to right): Stable Diffusion, Craiyon, Dream by Wombo.

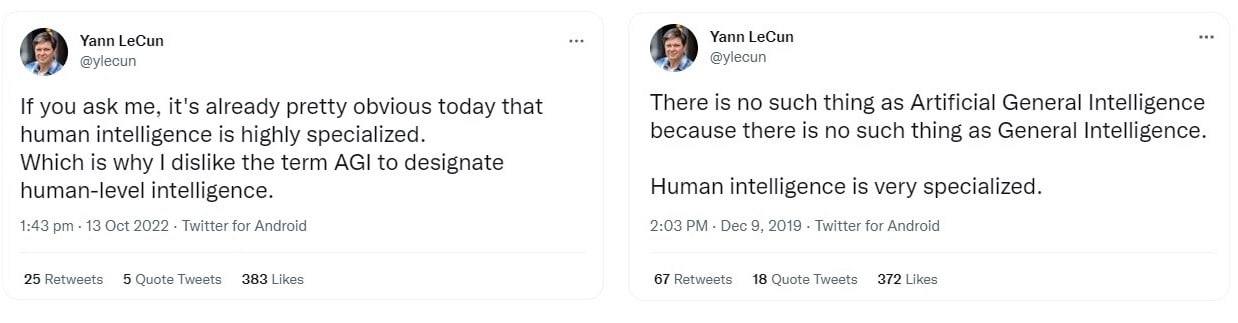

At times, I will use the term 'general human-level intelligence' when describing an AGI's abilities. By this, I am referring to the comprehensive intellect of all mankind. Without the inclusion of the word 'general', we are only given a sole human's capabilities, which results in an unfair comparison against what AGI should be. Renowned names in the AI community, such as Yann LeCun, have frequently spoken against human intelligence as a baseline for AGI (as depicted below), and though I agree, this is only due to the lack of 'general' prefixing 'human intelligence'.

Sure, human-level intelligence is usually specialised per person; however, this just renders large language models (LLMs) such as LaMDA, GPT-3, and BLOOM a more apt comparison, since they are proficient at engaging in dialogue and fundamental arithmetic, and some have even convinced experts that they are sentient[7]. I do regard near-future iterations of LLMs as a precursor to AGI though, as their understanding of language enables reasoning and thus programming (e.g. OpenAI's Codex and DeepMind's AlphaCode), which will soon allow systems to optimise their own source code whilst simultaneously enhancing their skillset at an exponential rate.

Incentivising Innovation

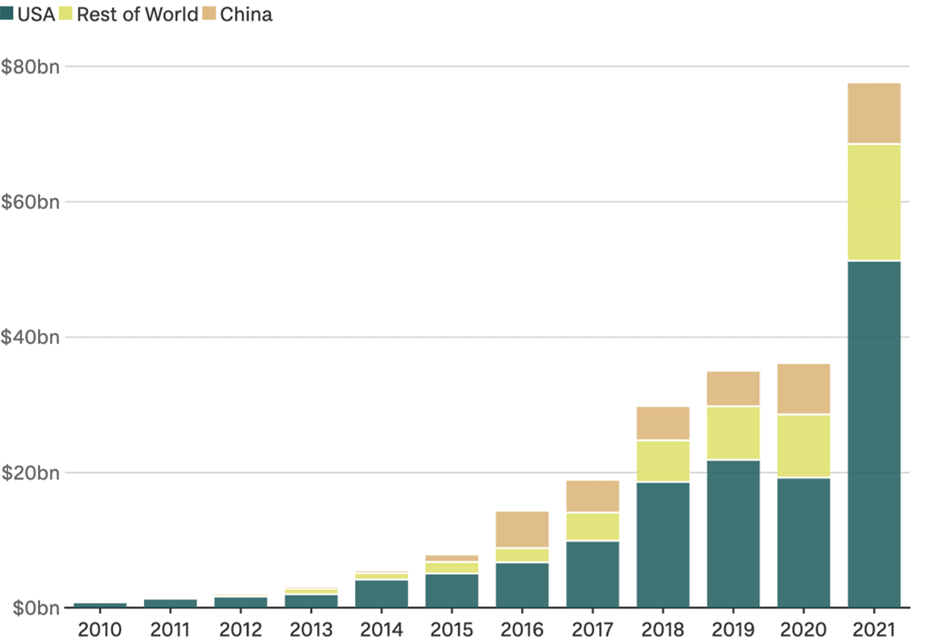

The pioneers of machine learning were severely limited by the technology of their time, so much so that the first ever chess engine, Turochamp, had to be executed by hand (by Alan Turing himself). They optimistically believed, though, that computational processing power would rapidly increase, enabling more advanced algorithms to be utilised. This was largely true until organisations (such as DARPA) began to cut funding for research they deemed unguided, machine learning included, resulting in an AI winter. The recent success of narrow AI across a long list of disciplines, however, has allowed for wider recognition of AGI and its potential. This growing interest is evident in figure 2 below, which demonstrates how investment into machine learning has rapidly scaled as governments, institutions and businesses have realised the power of a system capable of interdisciplinary automation and scientific breakthroughs; essentially a superintelligence.

Figure 2: The increasing global investment into research and development for artificial intelligence (2010-2021). Source: Tortoise Global AI Index/Crunchbase.

The question of what prompts organisations to allocate absurd amounts of funding and resources towards the development of AGI can roughly be narrowed down to one word: competition. Historically, the incentive to innovate was war, but today it appears to be our crashing economy. Within the sphere of AI, however, the realised potential of a system capable of general human-level intelligence turns this into a race for international power across politics and the economy, ultimately arriving at a singularity across all domains. This train of thought follows from British mathematician Irving Good's argument in 1965 that "since the design of machines is [an] intellectual activity, an ultra-intelligent machine could design even better machines; [and thus] there would unquestionably be an 'intelligence explosion'".

In the prelude of Max Tegmark's book 'Life 3.0', many of the researchers building AGI did so "for much the same reason that many of the world's top physicists joined the Manhattan Project to develop nuclear weapons: they were convinced that if they didn't do it first, someone less idealistic would". Such an ideology manifesting itself amongst groups with sufficient resources and foresight of the opportunities AGI presents would undoubtedly incentivise participation in this race. Unfortunately, whilst this fear can be a great motivator, it tends to hinder one's ability to think broadly about matters such as AI alignment plans, or ensuring the general public may still seek fulfilment, though this is beyond the scope of this post. However, it is ultimately this urgency between organisations which I believe will soon lead to the development of AGI, as greater funding should allow for research into novel approaches, optimised algorithms, improved hardware, and higher-quality data.

The Hardware is Already Here

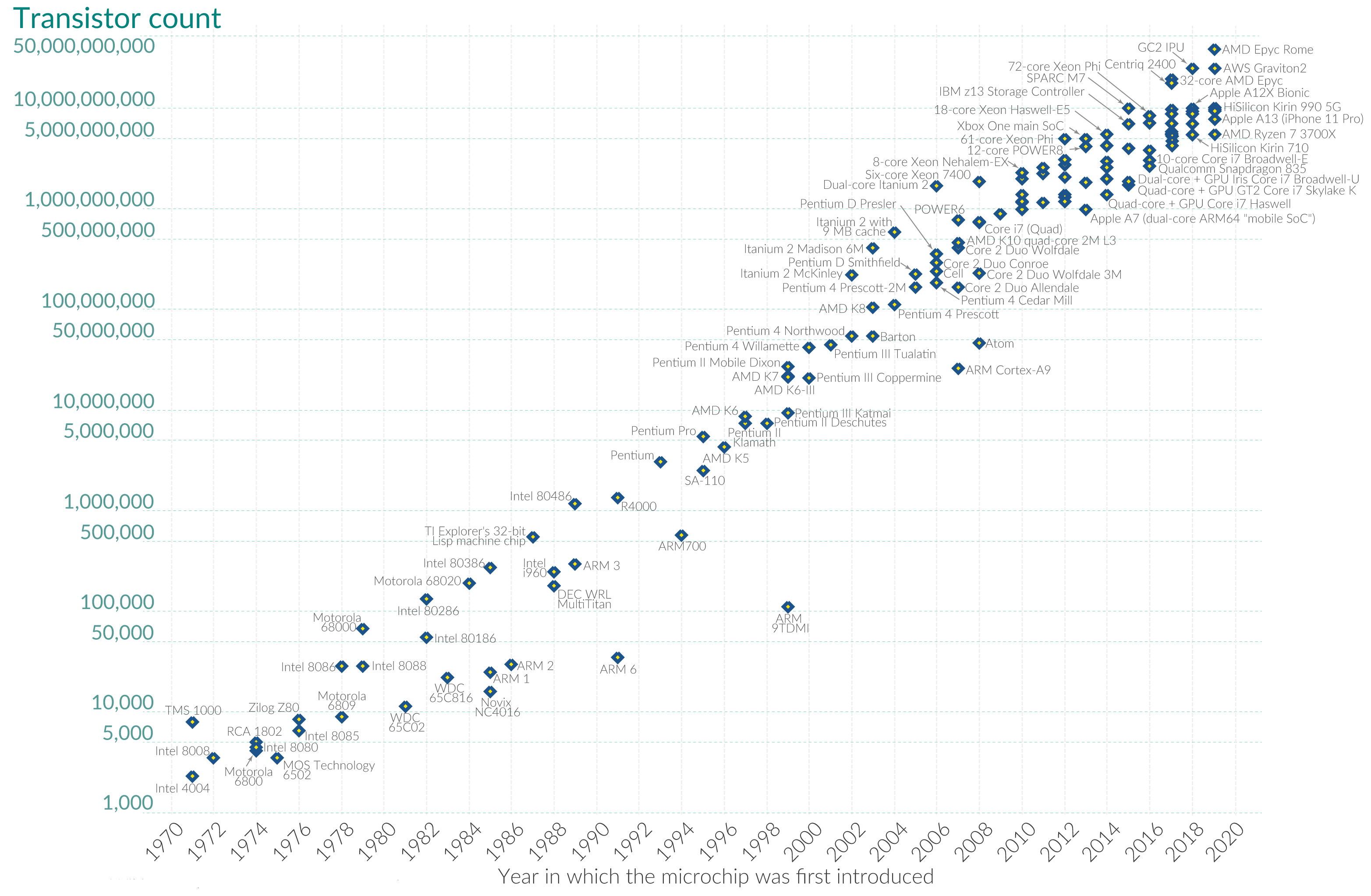

Should Moore's Law plateau before the threshold of general human-level intelligence is surpassed by AI, I would not deem it a hindrance to the overall goal. In fact, I would argue that we are already close (< 8 years) to converging on the limits of classical physics within the sphere of computing with regard to transistor sizes. The increasing transistor density per microchip (as demonstrated in figure 3) is already resulting in transistors approaching atomic scales, and a continuation of this trend would result in inefficiencies, as the energy passing through them will be exceeded by the energy consumption required to maintain a stable temperature.

Figure 3: Graphical representation of Moore's Law, demonstrating the doubling of transistors upon microchips approximately every two years. Source: Wikipedia & OurWorldinData.org (wikipedia.org/wiki/Transistor_count)
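As a rough illustration of the doubling trend described in the caption, the sketch below projects a transistor count forward under the assumption of a clean doubling every two years. The function name and the starting count are hypothetical placeholders for illustration, not figures taken from the chart.

```python
def projected_transistors(start_count: float, start_year: int, target_year: int,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count, assuming one doubling per `doubling_period_years`."""
    elapsed_years = target_year - start_year
    return start_count * 2 ** (elapsed_years / doubling_period_years)


# Hypothetical starting point: 1 billion transistors in year 0.
for horizon in (2, 10, 20):
    print(horizon, projected_transistors(1e9, 0, horizon))
```

Twenty years of uninterrupted doubling corresponds to ten doublings, i.e. a factor of 1,024, which is why even a modest slowdown in the doubling period has a large effect on long-range projections.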

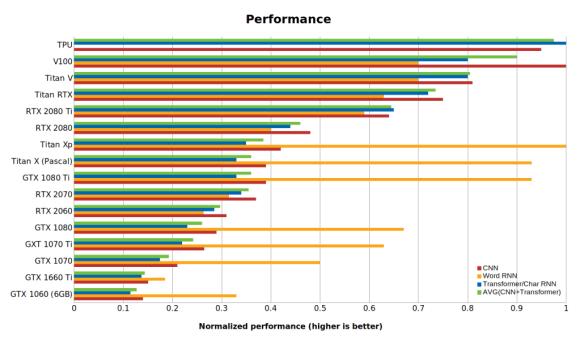

To resolve these constraints, scaling the number of AI accelerators within a system (fundamentally a supercomputer) allows for more computation to be performed within a shorter timespan. But first, what exactly are AI accelerators? Simply, they are a category of specialised hardware, including application-specific integrated circuits (ASICs), with circuitry optimised for training models, such as GPUs and tensor cores. Training models revolves around performing matrix operations and thus, when it was realised that GPUs' multi-threading capabilities could be used to carry out parallel computations, they became the industry standard. Further efforts by leading firms resulted in hardware dedicated to computing matrix operations, such as Tesla's D1 chips, Google's TPUs, and NVIDIA's Tensor Cores, which have reduced the training times of neural networks by orders of magnitude.

Figure 4: Performance of commercially available (local/cloud) processing units tested on 4 different deep learning models. Released from 2016-2021 (not including NVIDIA’s latest 30 series). Source: Saitech Incorporated.

Current supercomputers utilised by (publicly acknowledged) groups working towards AGI already operate in the range of 94 to 1,102 PFlop/s, with Tesla claiming Dojo will soon be capable of 1.8 EFlop/s. Though I do foresee this trend eventually stagnating (despite competition incentivising the development of even more powerful hardware), we must not forget that the optimisation of software and research into potentially applicable algorithms are also vital in scaling a model's abilities. In fact, I would presently consider this the more important domain to focus efforts on.

The Significance of Transformers

Language, just like many other behaviours and occurrences in nature, is a pattern. I believe that the forms of communication it has permitted (ranging from speech to writing) are what have allowed humanity to progress technologically more than any other species on Earth. Through communication, we have been able to manage large logistics operations, such as constructing cities and developing transportation, but most importantly, by exchanging ideas, humanity has been able to advance its understanding of science. This was clear to Isaac Newton, who stated long ago, "if I have seen further, it is by standing on the shoulders of giants". So how does this apply to the eventual development of AGI?

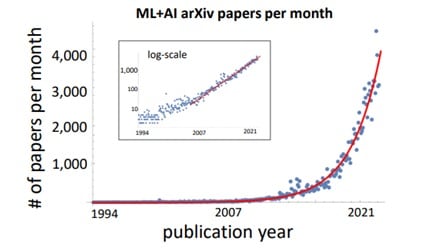

Ever since the publication of 'Attention Is All You Need'[8], there has been a surge in the number of LLMs based upon the Transformer architecture. Though our ability to communicate with models is what will fundamentally allow us to direct their outputs (and likely assess whether AGI has been developed), researchers have demonstrated that fine-tuning various (hyper)parameters to train on new datasets can scale their capabilities. Examples include Google's Minerva[9], which can use mathematical notation to solve quantitative reasoning problems, and OpenAI's GPT-f[10][11], which performs automated theorem proving and exhibits symbolic reasoning. Their present significance, however, is visible in the fact that, of all AI-related papers posted to arXiv within the last two years, 70% have referenced transformers, and 57% of ML researchers believe that this technology is a significant step towards the creation of AGI[12].

From the inception of this field, one of machine learning's greatest milestones has been to develop a system capable of carrying out AI research. This is the realised 'holy grail' of AI and the approach that I believe will lead to artificial general intelligence, but how does this relate to transformers? Ultimately, they have rendered self-supervised learning a more accessible task, which I would deem a highly important attribute for a recursively self-improving AI to possess. Despite it being a new technology, I do (rather optimistically) believe we will have developed a multi-agent system with the full capability of building better AI, via optimised performance and extended abilities, by 2035. But why? Well, with the recent rate of publications and innovations in this sphere (evident in figure 5), it should come as no surprise that there presently exists a paper whose authors claim to have achieved this, to a lesser degree[13].

Figure 5: The number of papers published per month in the arXiv AI categories grows exponentially. The doubling time of papers per month is roughly 23 months. The categories are cs.AI, cs.LG, cs.NE and stat.ML[14].
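To put the 23-month figure from the caption in more familiar terms, here is a back-of-the-envelope conversion. It assumes the growth is a clean exponential with a constant doubling time; the symbols $N_0$, $N(t)$ and $T_d$ are introduced here purely for illustration, with $t$ measured in months.

$$
N(t) = N_0 \cdot 2^{t / T_d}, \qquad T_d = 23 \text{ months}
$$

$$
\frac{N(12)}{N_0} = 2^{12/23} \approx 1.44, \qquad \frac{N(120)}{N_0} = 2^{120/23} \approx 37
$$

Under that assumption, the monthly publication rate grows by roughly 44% per year and about 37-fold per decade.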

'Self-Programming Artificial Intelligence Using Code-Generating Language Models' is the title of a paper submitted to the NeurIPS 2022 conference. By implementing a code-generation transformer model alongside a genetic algorithm, the authors have allowed the system to alter its source code to extend its capabilities, guided by an internal response to an external assessment. The fact of the matter, however, is that this paper is not a showcase of novelty, but rather a demonstration that it is possible for AI to recursively self-improve.
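To make the idea of a genetic algorithm guided by an external assessment more concrete, here is a minimal toy sketch. It is not the paper's implementation: the "program" is simply a list of numbers, `toy_mutate` is a hypothetical stand-in for a code-generating model proposing edits, and `toy_fitness` is a hypothetical stand-in for the external assessment. All names and parameters are assumptions made for illustration.

```python
import random


def evolve(fitness, mutate, seed_candidate, generations=50, children_per_generation=20,
           survivors=5, rng=None):
    """Propose mutated variants each generation and keep only the top scorers."""
    rng = rng or random.Random(0)
    population = [seed_candidate]
    for _ in range(generations):
        # Proposal step: variants of current survivors (stand-in for generated code edits).
        children = [mutate(rng.choice(population), rng)
                    for _ in range(children_per_generation)]
        # Selection step: rank parents and children together by the external score.
        ranked = sorted(population + children, key=fitness, reverse=True)
        population = ranked[:survivors]
    return population[0]


# Toy problem: fitness rewards closeness to a fixed target vector.
TARGET = [3.0, -1.0, 2.0]


def toy_fitness(candidate):
    return -sum((c - t) ** 2 for c, t in zip(candidate, TARGET))


def toy_mutate(candidate, rng):
    child = list(candidate)
    index = rng.randrange(len(child))
    child[index] += rng.gauss(0, 0.5)  # small random change to one "line"
    return child


best = evolve(toy_fitness, toy_mutate, [0.0, 0.0, 0.0])
print(best, toy_fitness(best))
```

Because parents compete alongside their children in the selection step, the best score can never get worse from one generation to the next, which is the property that makes the loop an improvement process rather than a random walk.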

Objects in Mirror Are Closer Than They Appear

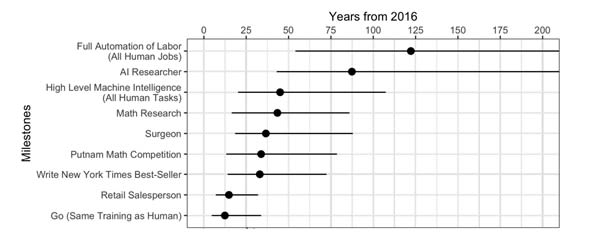

In 2016, DeepMind's AlphaGo beat world champion Lee Sedol 4-1 whilst simultaneously unveiling an entirely new approach to the game, through moves such as the renowned 'move 37'. The significance of DeepMind's success, however, was not that they had engineered an AI to beat Lee Sedol, but that they had done so ~12 years before field experts estimated the milestone would be surpassed, as depicted below:

Figure 6.1: Long-term timeline of median estimates (with 50% intervals) for AI achieving human-level performance. Milestone intervals represent the date range from the 25% to 75% probability of the event occurring[15].

This pattern of accomplishing milestones within the sphere of AI several years ahead of expectations is becoming progressively evident as more people take an interest in machine learning research, in addition to the competitive atmosphere between groups discussed previously.

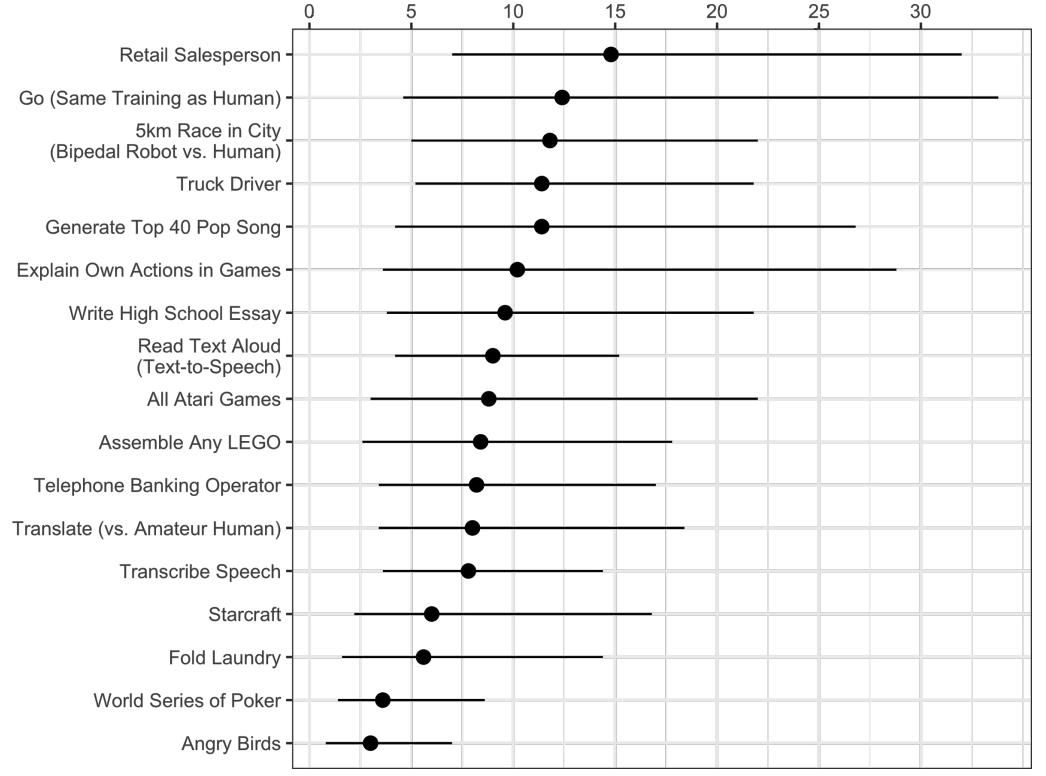

Figure 6.2: Short-term timeline of median estimates (with 50% intervals) for AI achieving human-level performance. Milestone intervals represent the date range from the 25% to 75% probability of the event occurring[15].

Figure 6.2 depicts some of the major short-term objectives which would either assist in the creation of AGI or mass-automation. Of these seventeen, eight have already been surpassed (all of which did so prior to the median of industry-expert estimations):

- DeepMind’s AlphaGo (March 2016) – Beat Go champion

- DeepMind’s WaveNet (September 2016) – Text to Speech

- Google’s Neural Machine Translation (November 2016) – Translation

- DeepStack (March 2017) – World Series of Poker

- DeepMind’s AlphaStar (January 2019) – StarCraft II

- OpenAI’s GPT-3 (June 2020) – Write high school essay

- DeepMind’s MuZero (December 2020) – All Atari games

- OpenAI’s Whisper (September 2022) – Transcribe Speech

One commonly overlooked factor as to why these breakthroughs in AI are seemingly being realised so frequently is the growing accessibility of data. The ease of uploading content to the internet, running simulations, and generating synthetic data has allowed for higher-quality datasets on which to train models and improve their predictions. Some groups opt to make their work available to the public, such as GitHub's Copilot (built on OpenAI's Codex), and have users report back on the quality of their interaction or experience with it, which may be used to perpetually better the model's outputs. This form of semi-supervised deep reinforcement learning is largely inspired by how we humans process and apply information, causing some researchers to believe that this may be a promising path to achieving AGI, though two significant factors stand out. The human brain is estimated to consist of 86 billion neurons and typically operates on 20 watts of power at any given time. Evidently, it is considerably more efficient than the most powerful publicly known general-purpose agents (e.g. Gato), leading many to question how we can maximise the performance of models whilst minimising their size. This is presently rumoured to be one of OpenAI's approaches to building GPT-4, with a competing rumour claiming that it will be a multi-trillion parameter model. Though these are important to consider when developing AI, I would argue the primary focus should instead be on recursive self-improvement, such that the system can optimise its own source code: a programme able to design its successor. AGI will happen along the way, rapidly.

In my opinion, the most important milestone for AI to achieve prior to AGI is the ability to not only carry out math research, but also explain its reasoning and provide proofs. DeepMind's AlphaTensor has demonstrated that this is possible by automating the discovery of a more efficient algorithm for matrix multiplication[1] (a core process in training neural networks); however, there is still some way to go before conducting math research can be considered a surpassed milestone. I previously mentioned how Google's Minerva and OpenAI's GPT-f are somewhat capable of explaining their reasoning and providing proofs, though applying this to novel discoveries at scale is the next hurdle, one I would consider vital not just to the development of AGI, but also to solving the alignment problem. This is largely due to the nature of creating models: researchers plan them with maths, and a self-improving AI that cannot explain its own reasoning may ultimately render its own source code a black box.
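For readers unfamiliar with what a 'more efficient algorithm for matrix multiplication' looks like, the sketch below uses the classic Strassen scheme, which multiplies two 2x2 matrices with seven scalar multiplications instead of the usual eight. This is a well-known hand-derived algorithm shown only as an illustration of the kind of saving involved; it is not one of the algorithms reported in the AlphaTensor paper.

```python
def naive_2x2(a, b):
    """Textbook 2x2 matrix product: eight scalar multiplications."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def strassen_2x2(a, b):
    """Strassen's 2x2 matrix product: seven scalar multiplications."""
    (a11, a12), (a21, a22) = a
    (b11, b12), (b21, b22) = b
    m1 = (a11 + a22) * (b11 + b22)
    m2 = (a21 + a22) * b11
    m3 = a11 * (b12 - b22)
    m4 = a22 * (b21 - b11)
    m5 = (a11 + a12) * b22
    m6 = (a21 - a11) * (b11 + b12)
    m7 = (a12 - a22) * (b21 + b22)
    return [[m1 + m4 - m5 + m7, m3 + m5],
            [m2 + m4, m1 - m2 + m3 + m6]]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(strassen_2x2(A, B))  # [[19, 22], [43, 50]]
```

Saving one multiplication looks minor, but when the same scheme is applied recursively to the blocks of large matrices, the saving compounds at every level of the recursion.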

The Benchmark

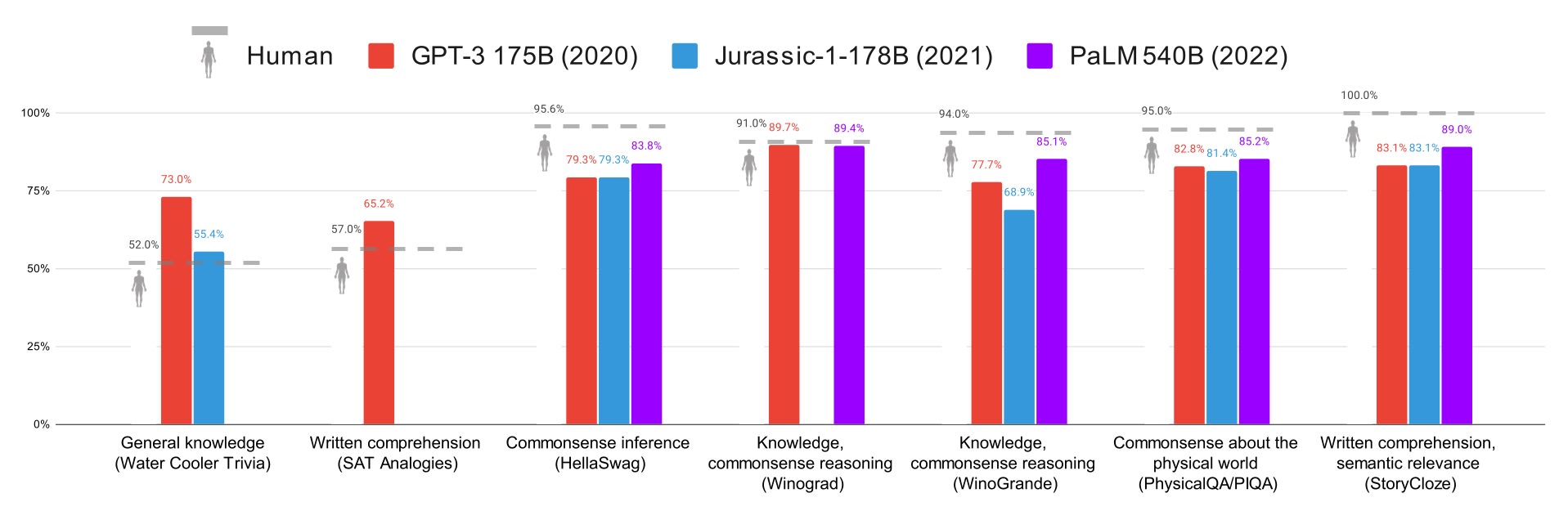

So, let's say we eventually reach a point within the near future in which an AI system's capabilities are broad enough (whether through recursive self-improvement or multi-modality etc.) to be considered a contender for the title of AGI. How would we test this? Previously, the Turing Test was considered a valid assessment of whether a machine was truly capable of exhibiting intelligent behaviour via text-based conversations, though as time progressed it became more of a thought experiment. On the Lex Fridman Podcast, Elon Musk proposed a video conference in which, if one could not distinguish whether the individual they are conversing with is real or not, AGI has been achieved. I disagree, however, as the abilities of the agent/model being tested are still very limited with regard to the overall human skillset. As of April 2022, Meta, NYU, DeepMind and Samsung have designed a benchmark to assess the IQ of language models against humans and one another.

Figure 7: Scores of recent LLMs against humans and other models on SAT and trivia questions. Source: Dr Alan D. Thompson (lifearchitect.ai/iq-testing-ai)

Whilst this is a much more comprehensive test (similar to assessing students' academic abilities to decide whether they should be accepted onto a university course), I would not consider it to test enough skillsets. So, what do I propose? A co-op game, inspired by DeepMind's approach to designing environments to test their agents' abilities, but I would like to take a brief moment to explain how this would vary.

Imagine a game where you and an agent are tasked with cooperating to solve puzzles across varying environments inspired by the real world (ideally including realistic physics simulations too); however, neither you nor the agent has any prior knowledge of the keybinds, events, or environment that will be presented. An example would be being tasked with finding several randomly placed items within a relatively small but procedurally generated office floor/building whilst communicating via text/voice chat to notify the other of your progress, location and other variables. The goal would be to successfully find all items within the allocated time. A game like this would assess several abilities including (but not limited to):

- Engaging in dialogue

- Navigating environments

- Decision-making

- Cooperation with humans

- Understanding objectives

Whilst this example is not a comprehensive test of all human skillsets, I'd like to imagine this is something other levels within the game could cover, such as reasoning (via riddles or mathematical questions). This benchmark obviously still requires a lot of fine-tuning and would require significant resources/funding to develop, but I do consider that the fundamental idea of a co-op game possesses the potential to assess many of the abilities one would expect of AGI. The opportunities are seemingly endless.
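As a very rough sketch of how the item-finding level described above might be set up and scored, the snippet below places items at random cells of a grid and checks the success condition. Everything here is a hypothetical simplification for illustration: the grid stands in for a procedurally generated office floor, and the function names, parameters, and the pass/fail rule are assumptions rather than a worked-out benchmark design.

```python
import random


def generate_floor(width, height, n_items, seed):
    """Place n_items at distinct random cells of a width x height grid."""
    rng = random.Random(seed)
    cells = [(x, y) for x in range(width) for y in range(height)]
    return set(rng.sample(cells, n_items))


def episode_succeeded(items, found_by_human, found_by_agent, seconds_used, time_limit):
    """Success means every item was found by either player within the time limit."""
    found = (found_by_human | found_by_agent) & items
    return found == items and seconds_used <= time_limit


items = generate_floor(width=8, height=6, n_items=5, seed=1)
ordered = sorted(items)
human_found, agent_found = set(ordered[:2]), set(ordered[2:])
print(episode_succeeded(items, human_found, agent_found, seconds_used=100, time_limit=120))
```

Using a seed per level keeps each layout reproducible for fair comparison between agents, while still ensuring neither player has seen the layout in advance.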

Closing Remarks

There appears to be a high likelihood (>80%) of human-level artificial general intelligence being developed within the next 15 years. As organisations compete to be the first to develop such a technology, processing power will increase, access to training data will surge, and new algorithms and approaches to machine learning will be discovered. Whilst the opportunities AGI can provide are exciting, it is also worrying if alignment plans are not enforced or its developers exploit its abilities for selfish purposes. This fear is commonly discussed within the sphere of AI and is what I believe drives the competition. I find this exciting yet extremely worrying, as it causes me to truly believe that human-level artificial intelligence is close. But are we ready? Are alignment plans in place? Are those leading the effort well-intentioned? Ultimately, building the first artificial general intelligence awards control of humanity's fate. I just hope whoever gets there first has our best interests at heart.

References

1. Alhussein Fawzi, Matej Balog, Aja Huang, Thomas Hubert, Bernardino Romera-Paredes, Mohammadamin Barekatain, Alexander Novikov, Francisco J. R. Ruiz, Julian Schrittwieser, Grzegorz Swirszcz, David Silver, Demis Hassabis, Pushmeet Kohli. "Discovering faster matrix multiplication with reinforcement learning". In Nature, 2022

2. Stanislas Polu, Jesse Michael Han, Kunhao Zheng, Mantas Baksys, Igor Babuschkin, Ilya Sutskever. "Formal Mathematics Statement Curriculum Learning". In arXiv:2202.01344v1 [cs.LG], 2022

3. Yujia Li, David Choi, Junyoung Chung, Nate Kushman, Julian Schrittwieser, Remi Leblond, Tom Eccles, James Keeling, Felix Gimeno, Agustin Dal Lago, Thomas Hubert, Peter Choy, Cyprien de Masson d'Autume, Igor Babuschkin, Xinyun Chen, Po-Sen Huang, Johannes Welbl, Sven Gowal, … , Oriol Vinyals. "Competition-Level Code Generation with AlphaCode". In arXiv:2203.07814 [cs.PL], 2022

4. Hal Hodson. "DeepMind and Google: the battle to control artificial intelligence". In The Economist, 1843 magazine, 2020

5. Mark Blagrove, Perrine Ruby, Jean-Baptiste Eichenlaub. "Dreams are made of memories, but maybe not for memory". In Cambridge University Press, 2013

6. American Academy of Sleep Medicine. "Dreams reflect multiple memories, anticipate future events". In ScienceDaily, 2021

7. Blake Lemoine. "Is LaMDA Sentient? – an Interview". In Medium, 2022

8. Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, Illia Polosukhin. "Attention Is All You Need". In arXiv:1706.03762v5 [cs.CL], 2017

9. Aitor Lewkowycz, Anders Andreassen, David Dohan, Ethan Dyer, Henryk Michalewski, Vinay Ramasesh, Ambrose Slone, Cem Anil, Imanol Schlag, Theo Gutman-Solo, Yuhuai Wu, Behnam Neyshabur, Guy Gur-Ari, Vedant Misra. "Solving Quantitative Reasoning Problems with Language Models". In arXiv:2206.14858v2 [cs.CL], 2022

10. Stanislas Polu, Ilya Sutskever. "Generative Language Modelling for Automated Theorem Proving". In arXiv:2009.03393 [cs.LG], 2020

11. Stanislas Polu, Jesse Michael Han, Kunhao Zheng, Mantas Baksys, Igor Babuschkin, Ilya Sutskever. "Formal Mathematics Statement Curriculum Learning". In arXiv:2202.01344v1 [cs.LG], 2022

12. Sam Bowman. "Survey of NLP Researchers: NLP is contributing to AGI progress; major catastrophe plausible". In Less Wrong and AI Alignment Forum, 2022

13. "Self-Programming Artificial Intelligence Using Code-Generating Language Models". In Open Review, 2022

14. Mario Krenn, Lorenzo Buffoni, Bruno Coutinho, Sagi Eppel, Jacob Gates Foster, et al. "Predicting the Future of AI with AI: High-Quality link prediction in an exponentially growing Knowledge network". In arXiv:2210.00881v1 [cs.AI], 2022

15. Katja Grace, John Salvatier, Allan Dafoe, Baobao Zhang, Owain Evans. "When Will AI Exceed Human Performance? Evidence from AI Experts". In arXiv:1705.08807v3, 2017