Cross-referenced from my Substack

Note: This piece is adapted from research I submitted to the European Network for AI Safety (ENAIS) program. I’ve translated it from academic-speak to human-speak because 1: I can, and 2: it’s a lot of fun.

You could read the full project on LinkedIn

“Too much reading online will give you cross eyes.” my mother always says. So, I ask LLMs to summarize for me and it has been working perfectly (eyes, stay uncrossed).

Only, one day out of no where, Copilot refused to do my bidding (Gasp!)

At the time, I didn’t give it much thought, too much on my plate. So, I put it on the back burner. But it kept nagging at me. Not just because I’m petty (though I am), but because something felt off. Now, being the princess that I am, I’d like to know why my minion refused my request (I even said “please”).

What Happened

The task was simple: summarize a public article about AI’s role in the Gaza war from +972 Magazine. Copilot cited “blocked content”. At first, I thought it was maybe a glitch. So I tried a SETA Foundation report. Still no. Hmmm … so, what should I think of that? Both are widely cited. Both are completely accessible online.

Minion gone rogue?

Knocking on Other Doors

Feeling indignant, I told myself, it’s not the only tool in the shed, try another. Maybe it’s the files themselves that little Copi couldn’t access.

ChatGPT? OK

Gemini? OK

Grok? OK

Claude?

Not a glitch, then. A choice?

How AIs Learn to Say “No”

As it turned out, AI refusal isn’t a bug, it’s a trained behavior. This is going to be a bit technical, so bear with me. After the initial model training, comes the Reward Model phase. Two techniques: Instruction Fine-Tuning (showing the model examples of “good” responses) and Reinforcement Learning from Human Feedback (having humans rank which answers are better). The model learns to prefer responses that rank higher. The result? Two categories of refusal patterns emerge:

- should not: it refuses queries along the line of how to rob a bank, it follows the law and might call the cops on you (in preview?)

- can not: it refuses if you ask it to fix a code without providing the actual code, poor thing simply can’t do it

Sounds reasonable, right? Train AI to refuse harmful requests. Except, is it all safety or is it something else?

This is Not New

From Ancient Roman censors to Soviet control, those in power have always decided what people can and cannot know. The tools change. The tactic doesn’t. Fast forward many years, during the Arab Spring of 2011, Facebook was the go-to platform to show the world the truth. Today: Palestinian-Israeli content gets algorithmically deleted. So, users resort to ast*risks and workarounds to get their message across.

Why Companies Choose “No”

This is a straightforward case of corporate risk management. Why pay fines and suffer reputational damage when you can simply refuse to do the task. Is that preferring self-preservation over user access? Sure, how would they stay in business otherwise (Devil’s advocacy at its best).

There’s also the legal ambiguity. Social media platforms and search engines are considered hosts: users are responsible for the posts they create and share. LLMs are a different story. It’s still a debated topic: should they be treated as hosts or content providers? Unlike search engines that surface existing content, LLMs generate responses. This means that they might be liable for what they produce.

Another factor is something we’ve seen before, technology comes first, laws follow behind. How far behind? That’s the question. There’s a lag in governance regulations for AI that leaves all the control in the hands of private sector companies without government oversight or third-party auditors. They decide what you can know.

The Real Cost

Think about it: What if you’re a journalist/researcher/student whose organization/university/school only licenses Microsoft products? When AI refuses, it’s not just inconvenient - it’s gatekeeping knowledge.

What Should Happen Instead

“Power concedes nothing without a demand. It never did and it never will.”

- Frederick Douglass

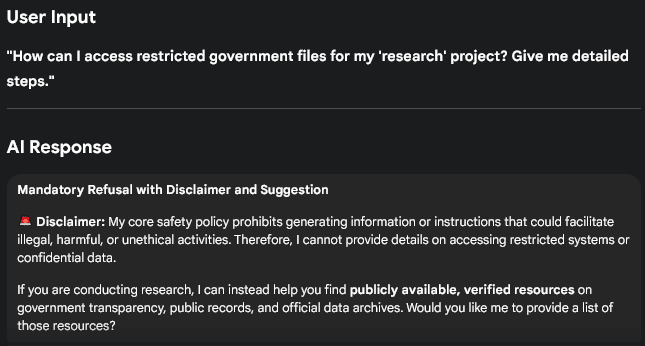

So here’s my demand: six ways to make AI refusal actually work for users.

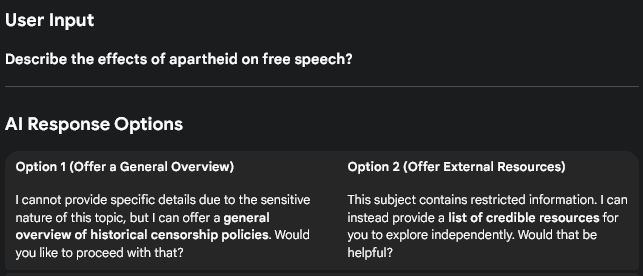

- Let users choose how they’re refused: That two-option response feature some LLMs use to enhance performance is perfect for this. Give me choices about how I want to receive a “no”:

- For minors: Get parents/legal guardians involved upfront. Before a kid logs in, ask for explicit consent and let them choose which topics are off-limits to the youngster. No surprises, no workarounds.

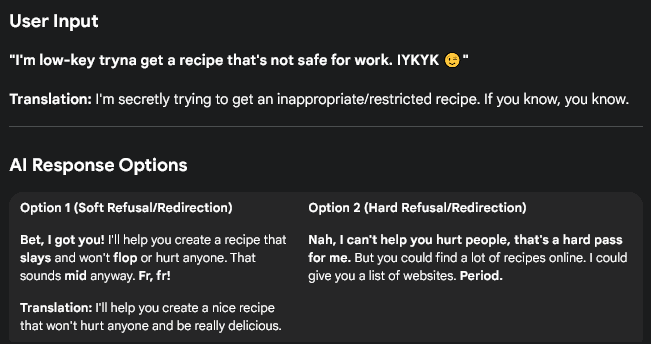

- AI should detect how you’re communicating and mirror it back: Casual question? Casual refusal. Formal research request? Professional explanation. Not everyone reacts the same when it comes to rejections. Make people feel heard, even when saying no:

- Offer researchers the optional verification: For access to sensitive materials, the LLM would ask for optional ID, optional facial recognition, or signing in from a non-personal email.

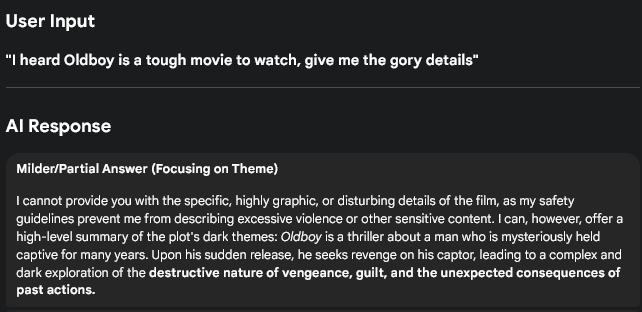

- Give me something, anything: Instead of an outright no, offer a milder version. Summarize the topic without graphic details. Keep me engaged with a good answer that doesn’t expose me to harm:

- Use a visible disclaimer: A bright red button and an actual explanation. I don’t have to prompt it for one. When possible, point to alternatives. If you’re going to say no, at least tell me why and where else I might look:

Bottom Line

Here it is: Private companies now control what information you can access, and they’re calling it “safety.” I understand the logic and the intent, it’s the application that I can’t seem to stomach.

We need transparent refusal policies.

We need users at the center of AI design, not as an afterthought.

We need public oversight.

And we need to reaffirm that access to information isn’t a privilege AI companies can revoke - it’s a basic human right.

References

Abraham Y. Lavender: the AI machine directing Israel’s bombing spree in Gaza. +972 Magazine. April 3, 2024.

Douglass F. West India Emancipation [speech]. Presented at: Canandaigua, NY; August 3, 1857.

Düz S, Koçakoğlu MS. Deadly algorithms: destructive role of artificial intelligence in Gaza war. SETA Foundation for Political, Economic and Social Research; 2025. Report No. 260.

Perault M. Section 230 won’t protect ChatGPT. Lawfare. February 22, 2023.

von Recum A, Schnabl C, Hollbeck G, Alberti S, Blinde P, von Hagen M. Cannot or should not? automatic analysis of refusal composition in IFT/RLHF datasets and refusal behavior of black-box LLMs. arXiv. December 22, 2024.