No concept man forms is valid unless he integrates it without contradiction into the sum total of his knowledge. To arrive at a contradiction is to confess an error in one's thinking; to maintain a contradiction is to abdicate one's mind and to evict oneself from the realm of reality.

- Ayn Rand, Atlas Shrugged.

The Road To Hell Is Paved With Good Intentions

- Ancient Proverb.

This essay is a comprehensive philosophical critique of several aspects of Effective Altruism thought and philosophy. For the sake of clarity, simpler models have been used in place of more complex alternatives. For the sake of simplicity, non-technical language has been employed.

John Stuart Mill was a prodigy. At the age of three, he was already literate in Ancient Greek. By eight, he had acquired a sophisticated education in philosophy, mathematics, physics, and astronomy. By twelve, the grasp of his intellectual pursuits had extended to logic; by thirteen, economics; and by fourteen, chemistry. None of them seemed to have done him much good.

Upon reaching twenty, Mill suffered a severe nervous breakdown and contemplated suicide several times. He reemerged from this personal crisis a different man. But the breadth of his interests remained. Throughout his life, Mill would make significant contributions to natural philosophy, logic, economics, politics, and ethics.

Perhaps his two most prominent achievements were his contributions to neoclassical economics, which is, for good or ill, the most popular version of economics today, and his contributions to utilitarianism, an ethical philosophy that seeks the greatest amount of good for the greatest number of people.

It's no surprise that Mill, given his facility with math, co-founded the two most mathematical approaches to economics and ethics respectively. What would have come as a surprise to him was how quickly each separated from the other after his demise.

Economics chiefly became the study of efficient means to achieve certain goals. Whether the goals in question were ethical or important was deemed to be beyond its purview. George Stigler, a Nobel laureate in economics, went as far as declaring that economics and ethics had no business with each other[1]. The estrangement was solidified after a number of studies revealed that economics professors were less likely to give to charity than professors in other fields, economics majors were more likely to approve of unethical behavior, and economics students were less willing to share money fairly.

But times do nothing but change. In the last decade, a new kind of ethical system has risen to prominence. I am writing, of course, about effective altruism, a recent philosophy aptly summarized by co-founder William MacAskill as the 'use of evidence and reason to figure out how to benefit others as much as possible, and take action on that basis'.

Effective Altruism is a rationalist, economic approach to ethical action. It is a second-order ethical system, concerned less with defining what is or isn't intrinsically good than with establishing an analytic framework for doing the most good that you can. What constitutes moral goodness is not as important as what constitutes efficient means. In MacAskill's words, effective altruism is "ethical engineering rather than ethical science".

This marriage of ethics and calculation is without doubt a powerful reason for its adoption among certain circles. Its ideas have found their greatest affinity with Ivory Tower philosophers and Silicon Valley billionaires. But effective altruism is by no means elitist. Its emphasis on individual action - 'the most good you can do' - makes it remarkably democratic. And in a world where many are dissatisfied with religion, EA can offer a vital alternative. It transforms formerly non-ethical considerations into ethical ones. In a way, effective altruism moralizes everything. After all, if the most good we can do is the criterion for moral goodness, every aspect and activity of our lives can be measured rather unhelpfully against some ideal morally efficient lifestyle.

Regardless, I agree with several elements of effective altruism. More importantly, I wholeheartedly agree with one unique element of effective altruism - its principle that transparency is important for making good moral decisions. To quote William MacAskill in his book Doing Good Better: Effective Altruism and How You Can Make a Difference:

"[I]magine if you went into a grocery store and none of the products had prices on them. Instead, the storekeeper asks: “How much would you like to spend at this grocery store today?” When you give the storekeeper some money, he hands over a selection of groceries chosen by him. This, of course, would be absurd. If this was how things worked, how could we figure out if one grocery store was better than another? One store could charge ten times the amount for the same produce, and, prior to actually paying for the products, we wouldn’t be able to tell. If it would be absurd to buy groceries this way, why is donating to charity any different? In the case of charity, you’re buying things for other people rather than yourself, but if you’re interested in using your money to help other people effectively, that shouldn’t make a difference."

This seems to me a perfectly reasonable point. Until the rise of effective altruism, however, the importance of transparent outcomes had largely been ignored. To some, insisting on transparency risks converting ethical action into a game of ranked priorities. But the importance of transparency far outweighs any such costs. Without it, we reward people for empty acts of virtue, a problem which continues to hinder foreign aid programs.

Transparency is important in other ways too. It is impossible to improve our ethical performance unless there is a clear idea of how well or how badly we are doing. The argument that ethical performance should not be measured is moot since we already implicitly measure ethical performance in many ways. Transparency of outcomes merely sharpens our abilities to do so.

The value of transparency even extends to the effective altruist movement as a whole. One of its greatest achievements might be its reward of virtue signaling with status. Although few are likely to admit this openly, virtue signaling is an important ingredient of moral behavior. The economist Thorstein Veblen is famous for his concept of conspicuous consumption: his insight that people purchase certain products and services only because of what those purchases communicate about them to society. And yet, almost no attention has been devoted to conspicuous virtue, even though throughout history, people have always sought to communicate their virtue through obvious and exclusive signals: special clothing, unique architecture, and specific symbols.

Fortunately, in comparison to conventional markers of virtue that have been degraded to mostly reward in-group membership, the effective altruism movement still seeks to reward efficient ethical action above all else. This isn't to imply, however, that effective altruism is without significant problems of its own. In fact, in many cases, I will attempt to show that effective altruism suffers from one or both of these features:

1. Ethical complications.

2. Practical impossibility.

The rest of this essay will consist of four different sections. I shall begin by showing how conventional criticisms of effective altruism philosophy not only fail to hit the mark but also neglect less obvious and far more important problems. I shall then continue my criticism by using Peter Singer's famous drowning child parable as an easy introduction to some of the ways effective altruism can go wrong. Third, I will consider the most prominent EA philosophy, longtermism, and argue that it should be discarded permanently. And finally, I shall propose some tentative solutions to some of the problems I will raise in earlier sections.

Why Many Criticisms Of Effective Altruism Fail To Hit The Mark

The usual launchpad for many criticisms of effective altruism is to begin with its utilitarian similarities and exploit some of the famous contradictions that result. A familiar starting point is the famous trolley problem. Although details vary slightly, the trolley problem asks us to imagine a scenario in which a runaway trolley is on course to collide with and kill a number of people (usually five) down a track, but a bystander can intervene only by diverting the vehicle to kill just one person on a different track.

It is obvious that strong utilitarianism recommends the option of diversion. As long as you are trying to maximize the number of lives saved, saving five lives is considerably superior to saving a single person. But the implications of that argument could be abominable. Consider the cosmetically different case of five dying people in need of organ donation. Is it acceptable to find one healthy innocent individual, murder him, and then transplant his organs to save the five dying people? Most people would recoil at such actions. Indeed, Nobel Laureate Kazuo Ishiguro's Never Let Me Go creates an unforgettable emotional impact partly because our moral sensibilities are so strongly aligned against such actions. And yet, from the perspective of strict utilitarianism, this is the right thing to do.

The critic then often argues from these unpalatable conclusions back towards effective altruism and suggests or simply states that since utilitarianism is full of these problems, effective altruism is full of these problems as well[2].

I don't consider this good criticism for four reasons. The first is that it is rather lazy. Utilitarianism is an old ethical system. Effective Altruism, on the other hand, is a quite recent one. To criticize the latter on grounds and issues that we have known about for decades does not teach us anything new. It is more useful to examine EA separately and criticize its own unique problems.

Second, although utilitarianism and effective altruism have a lot in common, they also have several features they do not share at all. Many in the EA community are firmly against the pure Machiavellianism of ends-justify-the-means reasoning. In a blog post, William MacAskill and Benjamin Todd clearly disapproved of the idea of taking a harmful job in order to be able to do more good as a result. In addition, because effective altruism, unlike utilitarianism, is concerned with how to do good rather than what is or isn't good, EA does not necessarily imply that what is good is always the maximization of wellbeing. In fact, effective altruism does not imply any strict definitions of what is good. This moral 'looseness' is a virtue that sets it apart from the rigorous strictures of utilitarianism.

My third reason flows from the fact that these issues do not plague utilitarianism and EA alone. Other 'rigid' ethical theories create morally repellent conclusions as well. Take duty-based ethics. Different duties might conflict with each other, and the same obligation could be reasonable in one situation and dangerous in the next. Readers who have a passing familiarity with Asimov's three laws of robotics will easily recall the numerous contradictions which can be derived from rule-based ethics. It seems that every moral injunction - be honest or don't murder or maximize good, et cetera - can lead to at least one morally repellent conclusion in a particular context. As a caveat, however, because utilitarianism is concerned only with the potential surface area of any moral action, it opens itself up to many more morally repellent conclusions. This caveat will be useful later when I consider longtermism.

My fourth reason is perhaps the boldest of all because it asserts there is actually nothing wrong sometimes with choosing to divert the trolley in the trolley problem. In their paper, Lexical Dominance in Reasons and Values, Iwao Hirose and Kent Hurtig propose that if certain kinds of reasons denoted as M reasons typically dominate other kinds of reasons denoted as P reasons in terms of normative force, then we ought to choose the option which has a greater number of M reasons. If we consider saving a life as an M reason given how strongly it dominates many other kinds of moral justification, then it follows that we should rather save five lives by diverting than save one.

By way of illustration, consider the Allies' decision to invade Nazi Germany. It was obvious then and is obvious now that collateral damage would result: at least one innocent person would be killed or injured as a result, and many innocent people were. Still, no reasonable person can claim that it was morally wrong to invade Nazi Germany. It was the morally right decision then and if such unfortunate circumstances were to ever recur, it would be the morally right decision now. The argument from collateral damage does not alter that fact.

These criticisms should generally be retired from applying to effective altruism not only for the reasons adduced above but because they divert our attention from the several important problems that infect Effective Altruism as a whole. It is to those problems that I shall now turn.

Effective Altruism And The Drowning Child Parable.

Peter Singer is in many ways one of the most important living philosophers. He is a recipient of the Berggruen prize, widely recognized as the 'Nobel Prize for philosophy', and is most famous for his drowning child parable, a strong contender for the most popular philosophical thought experiment in the world.

The drowning child parable is a cornerstone of EA philosophy. It has served as the moment of conversion for many in the EA community. Singer himself is also an effective altruist and released an enthusiastic defense of the philosophy in 2015. As a lucid example and a foundational element of effective altruism, the drowning child parable is the perfect introduction to my contentions with the philosophy.

The clarity and conciseness of the drowning child parable are remarkable. Although there are slightly varying versions, I shall adopt the version used by Singer in his book, The Life You Can Save:

'Imagine you’re walking to work. You see a child drowning in a lake. You’re about to jump in and save her when you realize you’re wearing your best suit, and the rescue will end up costing hundreds in dry cleaning bills. Should you still save the child?'

The natural and proper response is to say yes. No one with an ounce of morality would choose saving his or her suit over the life of a little girl. And then, Singer hits you with a sledgehammer of a conclusion: we all are guilty of the same crime we profess to be so fundamentally against. Every time we opt for some unnecessary consumption, say a new pair of shoes or a pointless new product, we have prioritized that over saving a child dying either close to us or in a faraway country. The parable subtly insinuates that our moral culpability is in many ways equal to that of a bystander who chooses to walk on to save his new expensive suit.

I agree - it's impossible not to - with Singer's parable as long as it is interpreted vaguely. Beyond that though, the drowning child parable is rather like a magic trick: by placing an attention-grabbing incident front and center, it distracts us from what is truly going on. It is necessary to list out all the implicit assumptions which are present in that short story:

1.) The cost of the moral action is small: dry cleaning costs for a suit.

2.) The cost of the moral action is clear: nothing more than temporary damage to the bystander's suit is necessary to save the child's life.

3.) The benefits of the moral action are huge: the saving of a little child's life.

4.) The benefits of the moral action are clear and can be estimated immediately: the bystander knows exactly what's at stake if he decides to dive into the lake. Feedback is also direct and immediate.

5.) The bystander has the capacity to solve the problem: it is assumed he can swim and is strong enough to carry her to safety.

6.) The action is a one-off: the bystander does not have to repeat this action over and over and over again.

7.) The bystander has no equally pressing problems at the moment.

8.) There are no relatives of the bystander, to whom he will owe a moral duty, who are also in dire need of help.

9.) The action results in absolute gain: the benefit of the moral action is not diluted by what anyone else does or doesn't do.

10.) There is no other person around with a specific moral, legal or political responsibility to help the child.

11.) The intent is strongly correlated with the possible result: By electing to help her, the bystander will not be unwittingly helping someone else who is in no need of any assistance.

12.) It is absolutely the best way to help her, and the bystander knows this: the problem is not complex with many possible solutions and there is no large degree of uncertainty around the best solution.

13.) There is nothing standing in the way of the bystander helping her.

14.) The bystander can solve the problem on his own: solving the problem doesn't require any complex, large-scale response from many people acting in coordination.

15.) Her input is unnecessary.

16.) His actions, if he chooses to save her, are very unlikely to make the situation worse.

The simple heartwarming story has made sixteen implicit and important assumptions, and possibly a few more. In the real world, it is often the case that many of these assumptions do not hold.

Take for instance assumption 1. The average dry cleaning cost of a suit is pegged at 12 to 15 dollars. On the other hand, GiveWell estimates that it takes about 4,500 dollars to save a life. There is a 300x difference between 15 and 4,500. Regardless of one's views on the matter, this substantially dilutes the force of the thought experiment. It simply costs a lot more to save a single life, even in the best of times.
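The gap can be checked with a few lines of arithmetic. This is only a sketch that reuses the two figures quoted above; the variable names are illustrative and not taken from any source.

```python
# Figures quoted in the paragraph above; the variable names are illustrative.
dry_cleaning_cost_usd = 15       # upper end of the quoted 12 to 15 dollar range
cost_to_save_life_usd = 4_500    # GiveWell estimate as quoted above

# How many dry cleaning bills add up to the cost of saving one life.
ratio = cost_to_save_life_usd / dry_cleaning_cost_usd
print(f"Saving a life costs roughly {ratio:.0f} times a dry cleaning bill.")
```

Using the lower end of the dry cleaning range would only widen the gap.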

Or take assumption 4, that the benefits are clear. This assumption hardly holds in the real world either. During the 1980s, the International Monetary Fund embarked on a project of massive loans to African countries in return for deregulating their local economies and adopting neoliberal free trade policies. These projects were often known as structural adjustment programs, and their overall impact has been paltry at best. Research by the revered economist Ha-Joon Chang revealed that since the inception of structural adjustment programs, the economy of Sub-Saharan Africa had grown by only 0.2 percent at the time of writing (between 1980 and 2009). In contrast, in the two decades prior to those policies, Sub-Saharan African economies grew at 1.6 percent in per capita terms[3].

There is no need to attribute any intentions of malevolence to these organizations, although charges of negligence and arrogance might be more legitimate. The simple truth is growing an economy is hard. It's not merely hard but also complex. It's often difficult to predict the long-term effects of macroeconomic actions until after substantial periods of time. In contrast, the benefit of the moral action in saving the little girl's life is clear and can be estimated immediately.

Assumption 16 is also without guarantee. In his fascinating book Climate Alarmism And What It Costs Us All, Bjorn Lomborg writes:

'In Fiji, the government teamed up with a Japanese technology company to deliver off-grid solar power to remote communities. They provided a centralized solar power unit to the village of Rukua. Prime Minister Frank Bainimarama proudly declared he had “no doubt that a number of development opportunities will be unlocked” by the provision of “a reliable source of energy.” Understandably, all of Rukua was thrilled to get access to energy and wanted to take full advantage. So more than thirty households purchased refrigerators. Unfortunately, the off-grid solar energy system was incapable of powering more than three fridges at a time, so every night the power would be completely drained. That led to six households buying diesel generators. According to researchers who studied this project: “Rukua is now using about three times the amount of fossil fuel for electricity that was used prior to installation of the renewable energy system.” In rather understated language, the researchers conclude that the project did not “meet the resilience building needs” of the community.'

Neither is assumption 11 guaranteed. A report released by the World Bank in 2020 estimated that about 7.5 percent of foreign aid is diverted into offshore accounts in tax havens like Luxembourg.

Assumption 9, the assumption of absolute gain, presents similar difficulties. By absolute gain, I mean that certain goods are useful intrinsically. Survival, for instance, is an absolute gain. People's desire to survive is generally not based on whether other people around them have survived or not. Almost everyone alive in the world today has lost someone close to them and still kept on living. Certain other desires like the need for basic shelter, clothing, and food can also be said to be absolute. Without absolute gains, people are very unlikely to be happy. Indeed, they are very unlikely to exist at all.

But not all gains are absolute. Some goods are only valued because other people don't have them. Whether they make people happier depends strongly on what other people around them already have or don't have. Beyond a basic cutoff, almost everything is of this nature. Although few like to admit it, status is an important component of happiness, and gains in status can only be relative. A study performed by researchers at the Universities of Warwick and Cardiff found that beyond a certain point, money only makes people happier if it means they are richer in relative terms. People will often reject the chance to be richer in absolute terms if it means they are also relatively less well-off. Multiple studies of the ultimatum game across the world have replicated this phenomenon.

Despite being thousands of times richer than the average man, billionaires are not thousands of times happier than the rest of us. The most recent analysis of the diminishing utility of money by Matthew Killingsworth suggests that the relationship between money and happiness plateaus at 200,000 dollars. It seems that after some cutoff, doing all the good you possibly can will have negative returns once you weigh the cost of your efforts against their diminishing utility. The returns in happiness from making everyone much better off are far smaller than they initially appear once you account for the human tendency to get used to things and the fact that a lot of happiness derives from relative improvement rather than absolute improvement in welfare.

Assumption 6 is also suspect. Poverty is not simply a function of having less money and thereby needing more donations. It is best understood as a collective problem. To date, no country in history has ever gotten wealthy through charity. As long as the root problems remain unfixed, the one-off is unlikely to make much of a difference. Such charity might even backfire if it creates perverse incentives of dependence or if it is suddenly taken away, returning its former recipients to a less satisfactory life than they had before.

These are merely a sample of the many, many problems that can result when thought experiments are interpreted too literally. The farther the conditions of reality are from the assumptions of the drowning child parable, the less valuable it is as a guide to our actions except in the loosest sense possible. Unfortunately, in the overwhelming majority of situations, many of these implicit assumptions hold very weakly or fail to hold at all.

The good news is effective altruism can be at its most effective when dealing with problems that resemble the drowning child parable as closely as possible. The remarkable success of EA in charity donations, organ donations, and vaccines is clear proof. These problems are all one-off, absolute gain problems where many of the other assumptions clearly hold.

Although it's a common mistake to identify Effective Altruism with donation work, donation work clearly remains one of its most significant achievements. In a heartwarming example from GiveWell, "over 110,000 individual donors have used GiveWell’s research to contribute more than $1 billion to its recommended charities, supporting organisations like the Against Malaria Foundation, which has distributed over 200 million insecticide-treated bednets. Collectively, these efforts are estimated to have saved 159,000 lives".

The problem is that the farther away effective altruism moves from solving problems which replicate these assumptions, the worse things tend to get. Perhaps the clearest proof of this is longtermism, a very recent effective altruist philosophy with some very unpalatable implications.

The Rise of Longtermism

Longtermism is, without doubt, the most novel and most influential contribution of effective altruism. It has received endorsement from billionaires such as Elon Musk, Vitalik Buterin and Dustin Moskovitz. William MacAskill's fascinating new book, What We Owe the Future, has attracted a great deal of attention and praise, with several reviews in the most influential print media around. Longtermism is so influential now that many within and outside the EA community simply conflate the two. At present, this is still significantly mistaken, but current trends suggest that such assumptions will be less out of place a couple of years from now.

The ideas behind longtermism are not new. Many ancient cultures enshrine long-term considerations for the future. The Maori people of New Zealand, for instance, often use the word kaitiakitanga, a deeply held, ancient belief which roughly translates to protecting the environment for the sake of future generations. However, longtermism goes beyond such vague concerns and makes a set of very precise claims. These precise claims, as I will show, lead to all manner of repugnant conclusions and illogical implications. Longtermism is not merely inconsistent but also morally repellent and highly irrelevant.

To preserve the clarity required to demonstrate my claims, I shall focus mainly on The Case For Strong Longtermism by Hilary Greaves and William MacAskill. Three factors have influenced my decision. First, it is fairly recent and so can be taken as a decent approximation of longtermism. Second, it is extremely rigorous, which makes it nearly impossible to misinterpret any claims in good faith. Third, it comprehensively covers all aspects of longtermism. However, from time to time, other texts will also make an appearance.

Conventional Criticisms Again Fail To Hit The Mark.

There are three specific claims that underlie longtermism:

1.) Many more people will exist in the future than already exist right now.

2.) Future lives matter just as much as the lives of people living today.

3.) We can positively affect future lives.

As long as you accept these three premises, it follows that our most important moral responsibility is towards future lives. More concretely, our strongest moral duty is to maximize the survival and flourishing of as many future lives as possible. And so, we have two key moral responsibilities:

a.) To increase the quality of future lives as much as we can.

b.) To maximize the survival chances of human civilization.

In The Case For Strong Longtermism, Will MacAskill and Hilary Greaves distinguish between deontic strong longtermism and axiological strong longtermism. But such distinctions cannot reasonably hold beyond a certain point: the essential premise that we must maximize the wellbeing of future lives makes longtermism indistinguishable from utilitarianism. The moral 'looseness' of Effective Altruism is impossible with longtermism. This makes longtermism equally vulnerable to all the criticisms of utilitarianism that were considered earlier.

Olle Häggström, professor of mathematical statistics and noted longtermist, asks us to imagine a situation where the head of the CIA informs the American president that somewhere in Germany is a lunatic working on a doomsday weapon to wipe out humanity and that this lunatic has about a one-in-a-million chance of success. To complicate matters, they have no further information on his identity and location.

In Häggström's own words:

'If the president has taken Bostrom’s argument to heart, and if he knows how to do the arithmetic, he may conclude that it is worthwhile conducting a full-scale nuclear assault on Germany to kill every single person within its borders.'

Ironically, longtermism and utilitarianism are very brittle once you scale them up.

Many criticisms of Longtermism have tried to weaken either premise 2 or premise 3, by claiming that future lives cannot be weighted equally or by casting doubt on our ability to affect future lives in positive ways. These criticisms are valid and legitimate. In particular, premise 3 is susceptible to the principle of epistemic cluelessness; that is, our inability to predict the effects of our actions over a considerable period of time. To repeat the countless examples from our history that prove this point would take up entire libraries.

But I don't like these criticisms. Take criticism of the second premise: future lives should not be weighted equally. Even if this point is conceded and we assume that future lives should matter, say, half as much as the lives of those living today, the simple possibility that there could be vastly more future lives shifts the argument in favour of longtermism again.

Consider a simple scenario where the life of each person living today is deemed to have 100 percent worth. Current estimates put the human population at approximately 8 billion people. If we assume that 100 percent of worth is equal to 1 unit of worth, this makes 8 billion units of worth overall. A longtermist can simply and quite reasonably claim that 16 billion or more future lives will exist, and we are back to where we started, since 16 billion x 0.5 units of worth = 8 billion units of worth. Many longtermists even assert that the number of future lives will be many orders of magnitude higher.
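The arithmetic of this scenario can be sketched in a few lines. The function name and the trillion-life future are hypothetical choices made purely for illustration; only the 8 billion, 16 billion and 0.5 figures come from the scenario above.

```python
def total_worth(number_of_lives, worth_per_life):
    """Total worth of a population when every life gets the same weight."""
    return number_of_lives * worth_per_life

present_lives = 8_000_000_000
present_worth = total_worth(present_lives, 1.0)   # 8 billion units

future_weight = 0.5  # the critic's discount: a future life counts half as much

# Number of future lives needed to cancel out the discount entirely.
break_even_future_lives = present_worth / future_weight

# Hypothetical, much larger future (an assumption, not a forecast).
larger_future_lives = 1_000_000_000_000
larger_future_worth = total_worth(larger_future_lives, future_weight)

print(f"Break-even future population: {break_even_future_lives:,.0f}")
print(f"Larger future vs present: {larger_future_worth / present_worth}x")
```

Any discount short of zero can be offset in the same way by assuming a sufficiently large future population, which is why this line of criticism settles so little.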

Criticisms of premise 3 are also of this sort. Even if we assign extremely low probabilities of our abilities to help future lives positively, longtermists can simply claim that the payoff is so massive anyway that the expected value of longtermism still requires that its claims should be taken seriously. These arguments and counter arguments are merely a sophisticated version of a Yes, it is/ No, it isn't game. They are extremely sensitive to the biases of the people in question.
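A small expected-value sketch shows how sensitive the verdict is to the inputs. Every number below is a hypothetical assumption chosen for illustration, and exact fractions are used only to avoid rounding noise.

```python
from fractions import Fraction

def expected_lives(probability, lives_affected):
    """Expected number of lives helped: probability of success times payoff."""
    return probability * lives_affected

# A certain, present-day intervention (hypothetical figure).
certain_lives = expected_lives(Fraction(1), 1_000)

# A long shot with a one-in-a-billion chance of helping the future (hypothetical).
tiny_probability = Fraction(1, 10**9)

# The same long shot under two different guesses about the size of the future.
optimistic = expected_lives(tiny_probability, 10**15)
pessimistic = expected_lives(tiny_probability, 10**11)

print(f"Certain intervention: {certain_lives} lives")
print(f"Long shot, large assumed future: {optimistic} expected lives")
print(f"Long shot, smaller assumed future: {pessimistic} expected lives")
```

The same long shot beats or loses to the certain intervention depending only on which unverifiable guess about the future is plugged in.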

In contrast, I am concerned less with the viability of longtermism's premises, which have been examined exquisitely elsewhere, than I am with several of its important implications. In my opinion, a philosophy becomes dangerous the moment it regards the future as more certain than the past.

The Argument From Repugnance.

Although the authors of The Case For Strong Longtermism claim that it is compatible with both deontological and utilitarian ethics, the premises of longtermism prioritize utilitarianism. By virtue of its premises, a longtermist is morally obligated to maximize the wellbeing of future lives. Longtermists' arguments are even stronger: they argue that it is everyone's moral obligation to maximize the wellbeing of future lives.

This makes longtermism intimately connected with population ethics - a recent branch of philosophy concerned with the ethical problems that arise when our actions affect who is born and how many people are born in the future. In order to maximize the wellbeing of future lives, a longtermist has to make consistent comparisons between different possible worlds of future lives[4].

In his new book, What We Owe The Future, William MacAskill carefully analyzes the different theories of population ethics. Averagism, for instance, is the theory that aims to maximize the average wellbeing of future lives. A population is superior to another when its average wellbeing is higher. But Averagism is susceptible to the sadistic conclusion: adding many lives of positive wellbeing can decrease average wellbeing more than adding a few lives of negative wellbeing. A life of positive wellbeing is a life of +1, +2, ... units of wellbeing. A life of negative wellbeing, on the other hand, is a life of -1, -2, -3, ... units of wellbeing.

Under Averagism, adding a few lives of -100 wellbeing can be superior to adding a great many lives of +5 wellbeing, since the average will decrease less in the first instance. Since such implications are morally repugnant, MacAskill rejects the principle. He also rejects other views with similarly repugnant conclusions before settling on Totalism, the view that one population is better than another if its total wellbeing is higher.
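A small numerical sketch makes the sadistic conclusion concrete. The starting population (1,000 lives at +50) and the sizes of the two added groups are assumptions chosen purely for illustration; only the -100 and +5 wellbeing levels come from the text.

```python
def average_wellbeing(groups):
    """Average wellbeing over a list of (number_of_lives, wellbeing_per_life) pairs."""
    total_lives = sum(n for n, _ in groups)
    total_wellbeing = sum(n * w for n, w in groups)
    return total_wellbeing / total_lives


# Assumed starting world: 1,000 lives at +50 each.
base = [(1_000, 50)]

# Option A: add a few miserable lives (10 lives at -100).
with_few_miserable = base + [(10, -100)]

# Option B: add a great many modestly good lives (10,000 lives at +5).
with_many_modest = base + [(10_000, 5)]

avg_few = average_wellbeing(with_few_miserable)   # 49000 / 1010
avg_many = average_wellbeing(with_many_modest)    # 100000 / 11000

# Averagism ranks Option A above Option B because its average falls less.
print(avg_few > avg_many)
```

Under these assumed numbers the average drops from 50 to roughly 48.5 in Option A but to roughly 9.1 in Option B, so Averagism prefers adding the miserable lives.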

But Totalism has problems of its own. Consider a possible world with a billion flourishing lives. According to Totalism, another world with a larger number of people living lives barely worth living is superior as long as its total positive wellbeing is higher. This implication was so deeply problematic for the philosopher Derek Parfit, upon whose seminal work population ethics is founded, that he rejected Totalism as well in favour of a possible Theory X that would not generate such conclusions. Such a Theory X is impossible. In 1989, the economist Yew-Kwang Ng proved that as long as you accept the perfectly reasonable principle that, between two worlds with equal numbers of people, the world with more wellbeing in total and on average is superior, you cannot go on to reject Totalism.
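The Totalist comparison above can be sketched as follows. The wellbeing levels (+80 for a flourishing life, +1 for a life barely worth living) are hypothetical values chosen for illustration, and the helper names are not drawn from any source.

```python
def total_wellbeing(lives: int, wellbeing_per_life: int) -> int:
    """Totalism scores a world by the sum of wellbeing across all lives."""
    return lives * wellbeing_per_life


def min_lives_to_outrank(flourishing_lives: int, flourishing_level: int, low_level: int) -> int:
    """Smallest number of lives at low_level whose total exceeds the flourishing world's total."""
    target = total_wellbeing(flourishing_lives, flourishing_level)
    return target // low_level + 1


# Assumed levels: +80 per flourishing life, +1 per life barely worth living.
flourishing_world = total_wellbeing(1_000_000_000, 80)
needed = min_lives_to_outrank(1_000_000_000, 80, 1)

# With enough lives barely worth living, the larger world wins on total wellbeing.
print(needed)
print(total_wellbeing(needed, 1) > flourishing_world)
```

Whatever positive levels are assumed, some finite population of barely-worth-living lives always outranks the flourishing world, which is the implication Parfit found unacceptable.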

In fact, as far as I know, there is no possible theory of population ethics which can satisfy these three criteria:

1.) Adding lives of positive wellbeing is always good.

2.) Lives of positive wellbeing are always better than lives of negative wellbeing.

3.) Lives of more positive wellbeing are always superior to lives of less positive wellbeing.

Totalism satisfies criteria 1 and 2 but not criterion 3. Critical level theories satisfy criterion 3 but fail to satisfy either 1 or 2. Other theories fail in similar ways.

To be fair, MacAskill is fully aware of the repugnant implications of Totalism. He simply argues that this repugnance is preferable to that of other theories. I'm not satisfied with this acceptance. First off, the repugnant and sadistic implications of all population ethics theories indicate that the real problem is the utilitarian approach they are all based on. As a result, some have argued, and I concur, that some version of Theory X will only be possible with a non-consequentialist ethical theory.

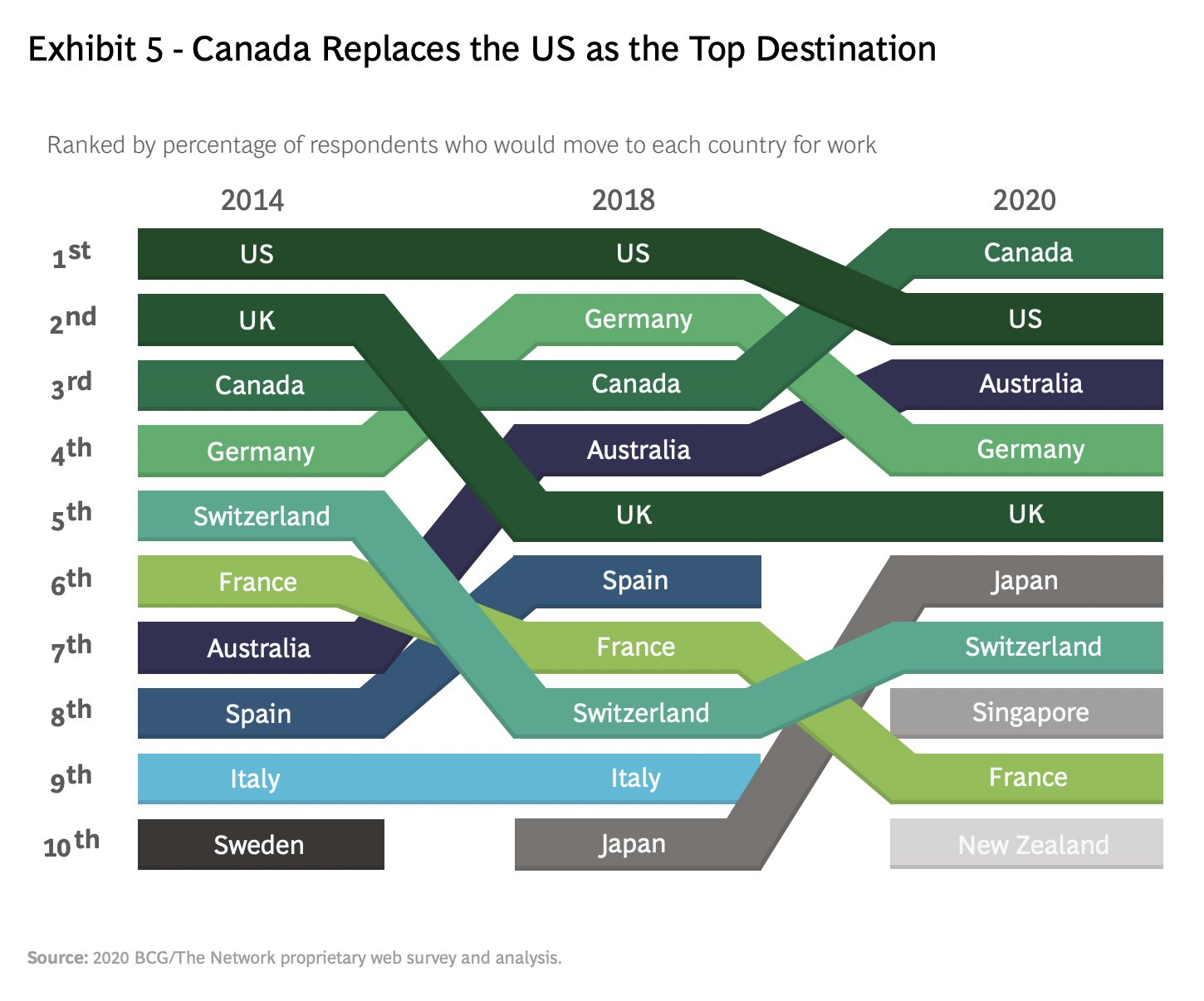

Second, all evidence suggests that Totalism is not how people in real life choose between different populations with different standards of life. If we roughly assume GDP to be a functional proxy for wellbeing, and there is a strong case that it is[5], then according to Totalism, countries like China and India, with higher GDPs overall, should be preferred by prospective immigrants over countries like Singapore and Switzerland. We should therefore expect immigrants to choose China and India rather than the latter two.

I rely on the choices of immigrants because they constitute one of the clearest indicators of how well a country is doing. People vote with their feet even in the face of daunting obstacles, and their choices are less tainted by bias and patriotism and are mediated instead by the simple incentive of which place is better. Immigrants have everything to gain by making the right decisions. The data confirms what we already know: prospective immigrants themselves do not see it the way Totalism does.

It is clear from the image that immigrants are more concerned with the probability that they will live a better life than they already do rather than with the total wellbeing of the receiving country. If they acted according to Totalism, it would be a very different list. A utilitarian model that began along these lines would at the very least conform to how people behave in the real world when forced or asked to choose between two different standards of life.

Why Do Utilitarian Models Have These Issues?

The reason all population ethics theories lead to such morally repellent conclusions is because utilitarianism makes a fundamental mistake about moral outcomes: morals are not the same as math.

In math, +8 and -4, +2 and +2, +100 and -96, ... are all perfectly equivalent. They all sum up to 4. There is no world in which +8 and -4 is not equal to +2 and +2 without violating some fundamental laws of arithmetic. Mathematical statements are input insensitive as long as the outputs are equal to one another. Moral outcomes don't work the same way. They are input sensitive. Imagine a person who cheats at his exams, scores full marks, and is extremely happy as a result. Assume also that he would be equally happy if he had scored full marks on merit. If moral outcomes were like arithmetical statements, there would be no relevant distinction between those two situations. As far as the outcome is concerned, he is equally happy in both cases.

But very few reasonable people would agree that, since the outputs in happiness are the same, the two inputs are equal to each other. In the same vein, it matters a great deal how we arrive at any moral outcome. A thousand people living lives barely worth living are not regarded as equal in any way to 100 people living flourishing lives, even if their outputs in positive wellbeing units add up to the same thing. Morality is input sensitive even when the outcomes are equal. And as long as nothing fundamentally changes about human morality, utilitarianism is going to keep running into these problems forever.

The Argument From Extinction Risk

Since the primary moral obligation under longtermism is to maximize the wellbeing of future lives, it follows that the survival of future lives is of paramount importance. After all, there is no maximizing of future wellbeing if there are no future lives at all. Hence, extinction is the ultimate catastrophe for any serious longtermist. The economist Yew-Kwang Ng, whose work predates many of the ideas of longtermism, said in an interview: 'If we all die, our future welfare become zero. This is a big loss'.

Similarly, in What We Owe the Future, MacAskill reproduces Derek Parfit's sentiments on extinction risk and comes to a similar conclusion: “what now matters most is that we avoid ending human history.” But such ideas are actually logically inconsistent with Totalism.

Totalism, as we already know, is the view that we should maximize the total positive wellbeing of future lives. The flip side of that is that we should also minimize the total negative wellbeing of future lives. Person-affecting views, which are committed to preventing lives with negative wellbeing from being born but not committed to creating lives with positive wellbeing, are rejected by Parfit and MacAskill for their moral asymmetry.

The rejection of person-affecting views stands on legitimate grounds. But it also means that, in the event that possible future people might have lives of horrific deprivation, it is our duty under Totalism to ensure they do not exist at all. Or to put it more clearly, preventing the misery of future generations, by preventing their existence if necessary, is a stronger moral obligation under Totalism than preventing existential risk.

Since this is in direct conflict with the creed of longtermism, Greaves and MacAskill try to resolve it by assuming that strong longtermism is conditional on a large and fixed future population anyway, and that we are simply affecting how good or bad their lives will be. I find this a case of burning the mansion to save the shed. If a large fixed population of future lives will exist regardless, then it is completely illogical to care about extinction risk - after all, no matter what we do now, a future population will exist.

Any concern about existential risk is rendered meaningless given that assumption. By trying to protect their view of extinction as the worst thing that can happen to human civilization, they have relegated the prevention of existential risk from an inferior moral obligation to a moral obligation that cannot exist at all. The only way to evade this dilemma is either to discard Totalism or to assume that any version of existence, regardless of how painful or wretched or miserable, is still positive wellbeing. But such an assumption is not only morally offensive, it contradicts many longtermists' own views about euthanasia and the quality of existence (euthanasia is an immoral act under these conditions). It also renders the very notion of negative wellbeing strictly impossible.

The Argument From Unknown Unknowns

As I wrote earlier, epistemic cluelessness is not a very robust criticism because it is susceptible to the bias of the people in question. Cluelessness is really an argument from known unknowns - we cannot predict the long-term effects of our actions or of the state of humanity. We simply know that we do not know these things.

There is, however, a substantially stronger variant of the argument, which I will present here: the argument from unknown unknowns. Consider for a moment the most important problems facing Europe and the world today: weakening democracies, climate change, AI risks and technological displacement, among others. If we go back merely five hundred years, a blink compared to the amount of time sentient life has existed, we find a completely different situation.

At the time, Europe was nearing the end of a mini ice age, exactly the opposite of the global warming crisis we face today. There were virtually no democracies on the continent, and the earliest precursor of the computer would not arrive until well over two hundred years later. The ideas that the common man was entitled to political representation, that computers could do as good a job as humans, or that burning fossil fuels warms the climate weren't just risks that Europeans at the time couldn't anticipate. Underlying those risks were concepts they weren't even familiar with in any reasonable way. They weren't just things they knew they didn't know. They were things they didn't even know they didn't know. They were unknown unknowns.

And yet, these unknown unknowns constitute some of the most important problems Europeans and the rest of us face today. That's because problems aren't problems in isolation. A problem grows out of the current state of that particular society. It is a relationship between what we have solved and what we are yet to, between what we know and what we know we don't. At any given time, a society's problems can only be known unknowns. They cannot be unknown unknowns.

When I look at the considerable efforts of longtermists today, the same pattern is evident. The problems longtermists prioritize are all problems that we either have right now or can reasonably anticipate having in the future: emerging biotechnology risks, AI alignment, climate change, etc. They are all known unknowns we've not yet solved, either in terms of pure technological capability or in terms of summoning an adequate societal response. They lie just outside or fairly outside the edge of our capabilities as a society.

We cannot even begin to anticipate, let alone deal with unknown unknowns because we have not solved the problems we already have. To get to AI risk, we had to solve computation, a suite of hardware problems, invent new algorithms, and harness deep learning models among others. It was only by solving the problems of manufacturing and agriculture and transportation that we could even begin to have substantial impact on the climate of the earth.

So, we arrive at a reasonable conclusion: the only way to help future lives is by solving problems we already have or anticipate. We help them by helping ourselves. We have no possible way of rendering more assistance because the problems they will face will only grow out of the problems we have removed from their path. At any given moment, at least three to five different generations are alive in the world. A philosophy that simply prioritized solving all the problems people alive already face or will grow up to face is therefore not a philosophy that can be improved upon by longtermism. And yet, this philosophy, which I will call, for lack of a better term, existing lives-ism, makes no additional assumptions about the number and nature of future lives in the distant future, about their worth, or about whether they will be digital, physical, or some hybrid of both.

In science and much else, when two theories produce the same results and generate the same predictions, we should opt for the theory with fewer assumptions. This well-known principle is known as Occam's Razor. In the words of Albert Einstein,

“the supreme goal of all theory is to make the irreducible basic elements as simple and as few as possible without having to surrender the adequate representation of a single datum of experience.”

I see no reason not to apply Occam's Razor here. Since existing lives-ism pretty much leads us to the same place as longtermism and since it also makes far fewer assumptions, existing lives-ism is the superior moral theory. To the extent that longtermism is useful, it is highly irrelevant.

The Argument From Dependence

In The Case For Strong Longtermism, both authors acknowledge that one of the strongest criticisms of longtermism is its reliance on extremely low probabilities of extremely large payoffs. As long as the expected value is higher, a longtermist will prefer to save or help the lives of trillions of future people with a very low probability of success rather than help a large number of people, often numbering in the millions, with a far higher probability of success. Longtermists are remarkably unconcerned with risk. They demonstrate a stronger preference for 'recklessness' than 'timidity' as long as the expected value of the decision is higher. This inclination could be regarded as fanaticism.

In their excellent paper on decision theory, Nick Beckstead and Teruji Thomas demonstrate that both timidity and recklessness lead to uncomfortable conclusions[6]. Timidity and recklessness in these contexts have very specific denotations that I will not bother to reproduce. Suffice it to say that a reckless decision maker will ignore the odds, no matter how small, as long as the expected payoff is large enough, and a timid decision maker will only act if the odds are high enough, even when the payoff is considerably lower than that of the other option. Since longtermists are clearly in favour of recklessness, they often argue that timidity leads to non-transitive preferences or is susceptible to random events and uncertainties, a criticism Hayden Wilkinson memorably labeled Indology and Egyptology.

They generally omit that recklessness itself also violates certain principles of decision theory and can lead to absurdities. A reckless decision maker will prefer the tiniest probabilities of success, odds like 0.00000000000000000000000000000000000000000000000000000000000000001 and even smaller, as long as the reward is infinite. This all but guarantees that the decision maker can rerun the same decision hundreds of thousands of times and still never win.
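To see why rerunning such a gamble barely helps, here is a minimal sketch. The probability 1e-65 is an illustrative stand-in for "odds this small" rather than a transcription of the figure above, and 500,000 reruns is an assumed count. The chance of winning at least once in n independent tries is 1 - (1 - p)^n, computed here with `math.log1p` and `math.expm1` because ordinary floating-point subtraction would round 1 - p to exactly 1.

```python
import math


def prob_at_least_one_win(p: float, trials: int) -> float:
    """Probability of at least one success in `trials` independent attempts.

    Equivalent to 1 - (1 - p) ** trials, but numerically stable for tiny p.
    """
    return -math.expm1(trials * math.log1p(-p))


p = 1e-65          # assumed stand-in for a vanishingly small chance of success
reruns = 500_000   # assumed number of times the gamble is repeated

# Expected value with an infinite reward is infinite, whatever the odds.
expected_value = p * math.inf

# Yet the chance of ever collecting the reward stays negligible.
chance_of_any_win = prob_at_least_one_win(p, reruns)

print(expected_value)
print(chance_of_any_win)
```

The expected value is infinite while the chance of ever winning remains indistinguishable from zero, which is the absurdity the reckless decision maker has to accept.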

But, on the whole, I have no problem with fanaticism. To reject it could lead to equally strange conclusions. It's all perfectly fine to choose the option with the higher expected value, regardless of the odds. But this is only if the options are independent. Quite remarkably, no longtermist seems to have considered that fact. As long as the two choices are independent, then fanaticism is a fine course of action and expected value is a fine guide. When they are not, it is a completely different matter.

Future lives don't just pop out of the blue. If the world had ended in 1700, there would be no humans today. Just as tritely, if humanity had gone extinct at any time before the moment you are reading this, there would no longer be any future lives. Future lives depend on present lives to exist.

Simply put, the choices between helping future lives and helping present lives aren't independent choices. Consider a hypothetical case of helping Mary with 95 percent probability of success or her fifty hypothetical descendants with a 5 percent chance of success. If all you are trying to do is maximize lives saved, then the option is clear: expected value dictates that you choose these fifty hypothetical descendants. But that would frankly be both illogical and impossible. The only way to help Mary's hypothetical descendants would be to save Mary now. If Mary dies, they all cease to exist.
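The Mary example can be put in numbers. The sketch below is an illustration under one explicit assumption: Mary does not survive unless she is the one helped, so her descendants exist only if she is saved. The function names are hypothetical.

```python
def naive_expected_lives(p_success: float, lives: int) -> float:
    """Expected lives helped, treating the option as independent of everything else."""
    return p_success * lives


def dependent_expected_lives(p_success: float, lives: int, p_ancestor_survives: float) -> float:
    """Expected lives helped when the beneficiaries exist only if their ancestor survives."""
    return p_ancestor_survives * p_success * lives


# Naive comparison: the descendants win on expected value.
help_mary = naive_expected_lives(0.95, 1)            # 0.95 expected lives
help_descendants = naive_expected_lives(0.05, 50)    # 2.5 expected lives

# Dependent comparison (assumption: choosing the descendants means Mary is not
# saved, so the probability that she survives to have descendants is 0).
help_descendants_given_dependence = dependent_expected_lives(0.05, 50, 0.0)

print(help_descendants > help_mary)
print(help_descendants_given_dependence)
```

Once the dependence is modelled, the option that looked better on paper is worth nothing, because the lives it was meant to help never come into existence.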

This recurs on a wider scale. Should you save ten million people now or the billions of future lives that will exist later? These options are not independent. The existence of many of those future lives depends on those ten million people, whom longtermists quickly ignore in making their simple calculations. Should you save 99 percent of the current world's population or all the future lives that will ever exist at far lower odds of success? Expected value recommends the second option. Common sense realizes that the second option isn't an option at all.

It's a bit like choosing to fail at the quarter final because the final will offer a much greater payoff. Life isn't independent. It is sequential. Options can only exist later if we choose the option of prioritizing now. Nothing more is logical. Nothing less is necessary.

A Few Recommendations And A Conclusion.

I have the deepest respect for many in the EA community. I consider it an important alternative or supplement to organized religion for many and applaud the charitable work of many effective altruists. I don't think there is a lot wrong with EA in practice at the moment. But there is certainly a lot wrong with it in theory. And the boundaries between theory and practice are often less rigid than they appear.

As J.M. Keynes memorably expressed,

'Practical men, who believe themselves to be quite exempt from any intellectual influences, are usually the slaves of some defunct economist. Madmen in authority, who hear voices in the air, are distilling their frenzy from some academic scribbler of a few years back'.

I shall offer four main suggestions:

1.) Effective Altruism is best at dealing with one-off, absolute gain problems which share many of the other assumptions of Peter Singer's drowning child analogy. Examples of this include charitable donations, organ donations, vaccine rollouts, and simple aid. None of it should be underestimated. However, it is really bad at solving problems that diverge considerably from those assumptions. The farther away the problems are from the drowning child parable, the less certain I am of effective altruism's effectiveness.

2.) In cases like these, effective altruists have to make much more effort than they currently do to reach out to other kinds of experts and incorporate different kinds of approaches. Some of that will include making tradeoffs between effectiveness and altruism, since many of these problems will not be amenable to strict metrics and measurement. It is interesting that J.S. Mill grasped this paradox, upon which he dissolved the Utilitarian Society.

Mill intuitively realized that although happiness should always be the end goal, it was best tackled indirectly without the metrics and rigour of utilitarianism, because happiness was an ill-defined problem. The problems that so obsess longtermists, such as AI alignment and pandemic risks, are also ill-defined problems. Solving them will require making use of obliquity, indirectness, and bottom-up gradualism, and somewhat letting go of metrics and measurement.

3.) A possible version of Theory X will have to incorporate ideas from non-consequentialist moral philosophy to truly be meaningful. It will also have to start with how people in the real world already make decisions between different countries rather than with theoretical principles that do not translate to realistic behavior.

4.) Longtermism should be abandoned. This recommendation is bold but certainly worth thinking about in light of the issues raised above. The truth is life is a bit like a book. If we hold it too close, we can't read it at all. If we hold it too far away, we recreate the same problem. A moral theory that starts with trying to create a better world for ourselves, our children, our grandchildren, and our great-grandchildren only will lead us to the same kind of concern and prudence about the future that I believe longtermists actually care about, will make fewer arbitrary assumptions, and will conform perfectly with other moral theories and attitudes.

We certainly need more good in the world and effective altruism will definitely play its part in contributing to that. We may never be able to maximize all the good we can do. That's all right as long as we keep doing all the good we already are. Our journey to paradise might never end. In some fundamental sense, that might be the whole point.

[1] What's Wrong With Economics (Skidelsky, 2020).

[2] For an extremely well written example of this kind of criticism, see Erik Hoel, Why I am Not An Effective Altruist. https://erikhoel.substack.com/p/why-i-am-not-an-effective-altruist

[3] 23 Things They Don't Tell You About Capitalism (Chang, 2010).

[4] For an excellent introduction to population ethics and its many troubling views, see What We Owe The Future, Chapter 8 (MacAskill, 2022).

[5] See Stubborn Attachments (Cowen, 2018).

[6] For a comprehensive analysis of the impracticalities of both approaches, see A Paradox For Tiny Probabilities and Enormous Values (Beckstead and Thomas, 2021).

Very thoughtful and readable piece I am still chewing on. For now, just this....

This sentiment seems based on the very widely held assumption that life is better than death. It seems helpful to keep in mind that there is actually no proof to validate this assumption. There are many theories which can be explored and respected, but imho, the bottom line is that nobody knows.

This insight matters to me because if nobody knows what death is, and we have as yet no evidence that anyone can know, then we are liberated from the constraint of facts (which we don't have) and each of us is free to design our own relationship with this very important unknown.

Reason suggests that we should, each by our own methods, attempt to form as positive a relationship with death as we can. To the degree we succeed in that, such a success could have profound effect upon how we live. You write...

Yes, and to the degree this secular "religion" of EA can positively affect our relationship with the fundamental reality of our human situation, to the degree it can successfully provide some of the same existential reassurance as traditional religions attempt to do, it may achieve its maximum effectiveness in making the world a better place.

A great many of the problems which EA seeks to solve arise out of the very fundamental human fear of death. So long as we assume that this life is all we have, based on no proof at all, we may find ourselves trapped within a very limited perspective which can lead to various forms of desperation which do not make the world a better place.

First off, I appreciate the reply. Hmmm. This is true though - simply no one knows what death is or feels like. It's even more grating because no one can tell us what it is or feels like.

The philosopher Raymond Smullyan liked to think of life as something of an umbrella of realities, akin to a video game with several levels. He thought hallucination was one level and dreams were another and perhaps death was yet another as well. It's certainly an intriguing way to look at it.

I do think though that one of the few reasons people still cling to religion is because it transforms or attempts to transform death from something to fear into something to await. Whether EA can or should try to do the same is an interesting question that I cannot answer.

I do know that in the absence of that, we've seen all sorts of really weird movements crop up, and a naked, visceral fear of death has risen to prominence in the form of cryonics. Perhaps we have more than one life. But at the very least, spending most of it in fervent attempts to extend it seems rather ironic.

I haven't yet read all of this (short on time) but I wanted to flag that what I have read seems deeply thoughtful, I'm disappointed that this post hasn't had more engagement, and I for one am grateful you took the time and effort to write it.

Thank you very much. I appreciate it.