Metacommentary:

- Full-text below, but the reading experience may be better on the linked Notion page

- This is an entry for the AI Safety Public Materials Bounty

- This is one of my final projects for the Columbia EA Summer 2022 Project Based AI Safety Reading Group (special thanks to facilitators Rohan Subramini and Gabe Mukobi)

- The intended audience for this summary is the general public (completely non-technical, no prior knowledge at all)

- Goals:

- Per the bounty: reduce "the number of people rolling their eyes at AI safety"

- Note: Goal is NOT to produce strong positive reactions to AI Safety (e.g. getting involved, donating, etc.), rather to eliminate strong negative reactions to these things (e.g. eye-rolling, politicization, etc.)

- Convey the potentially serious risks of advanced AI and the importance of AI Safety without coming off as alarmist, overly scary, or too weird / sci-fi

- Produce future reactions to AI Safety roughly like the statement: “Oh yeah, I’ve heard of AI Safety. Very reasonable thing to work on. Glad someone is thinking about that.”

- Be as short as possible so that people might actually read it

- Be jargon-free

- Very open to feedback / making updates

- This is my first-ever post here, so apologies if I did anything wrong!

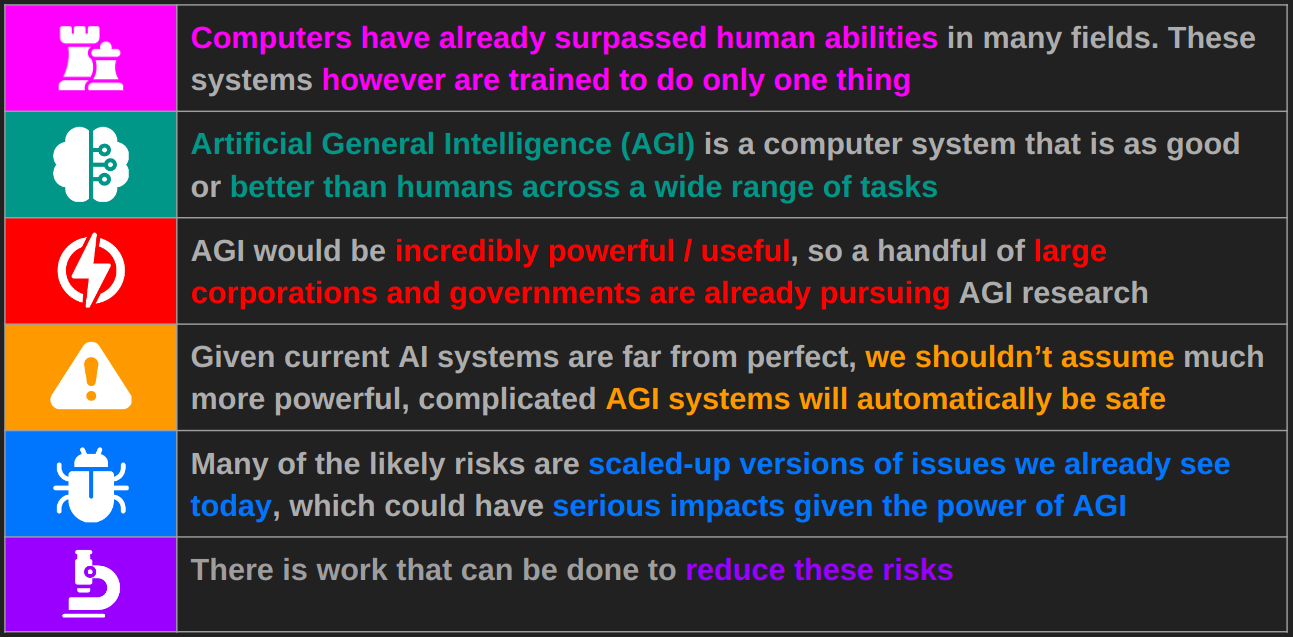

Executive Summary

- Computers have already surpassed human abilities in many fields. These systems, however, are trained to do only one thing

- Artificial General Intelligence (AGI) is a computer system that is as good as or better than humans across a wide range of tasks

- AGI would be incredibly powerful / useful, so a handful of large corporations and governments are already pursuing AGI research

- Given current AI systems are far from perfect, we shouldn’t assume much more powerful, complicated AGI systems will automatically be safe

- Many of the likely risks are scaled-up versions of issues we already see today, which could have serious impacts given the power of AGI

- There is work that can be done to reduce these risks

Full Summary

Computers have already surpassed human abilities in many fields. These systems, however, are trained to do only one thing.

Computers have bested world champions in board games like chess and Go, and video games like StarCraft and Dota 2. They’ve achieved superhuman results in scientific tasks such as protein structure prediction. They can generate images and art of nearly anything given just a text description. A chatbot was recently even able to convince a senior engineer at Google that it was sentient. (Note: Experts agree it wasn’t.)

While impressive, each of these systems was trained to do just that one thing. The chess system, for example, is utterly incapable of producing anything resembling a coherent sentence. It just plays chess.

These types of systems are often referred to as “narrow” Artificial Intelligence (AI) because they can only do a narrow set of things.

Artificial General Intelligence (AGI) is a computer system that is as good as or better than humans across a wide range of tasks.

By contrast, an Artificial General Intelligence (AGI) is a computer system that is as good as or better than humans across a wide range of tasks.

This is the type of AI you’re probably used to seeing in movies, TV shows, books, video games, etc. It would be capable of all the mental tasks a human can do. It could:

- Have a conversation on any topic

- Tell jokes

- Speak any language

- Trade the stock market

- Do your taxes

- Make art and music

- Write poems or novels

- Make well-reasoned plans and decisions

- Run a company

- Do research in any field of science

- Write code

- And more

It would be able to do all of this at least as well as (if not better than) humans.

AGI would be incredibly powerful / useful, so a handful of large corporations and governments are already pursuing AGI research

An AGI system would be incredibly powerful and widely useful. It could help cure cancer and other diseases, find new clean sources of energy, advance space exploration, and more. It could also be used to just make tons of money. More negatively, it could be used by a government, military, or individual to wage war, dominate world politics, or otherwise exert control over others.

Given this potential, a handful of major companies and governments are already working to build AGI. In particular, large, well-funded industrial AI labs such as Google Brain, Microsoft AI Research, OpenAI, and DeepMind are actively working on this. The world's major powers (USA, EU, China, Russia, etc.) are investing heavily as well. Moreover, as the possibility of AGI becomes increasingly apparent, more and more companies and governments will begin to pursue this research.

Given current AI systems are far from perfect, we shouldn’t assume much more powerful, complicated AGI systems will automatically be safe

Like other powerful technologies, AGI is not without risk. Even our current, relatively weak and narrow systems have problems. Some systems have been shown to exhibit racial, gender, or other biases based on the data they were trained on. Others have learned to “cheat” on their programmed goal in sometimes clever, sometimes hilarious ways. Despite their relative weakness, current AI systems can already have profound negative societal impacts. For example, many people blame social media recommendation systems for increasing political polarization and distrust.

Such failures are common enough that we can’t just assume much more powerful, complicated systems will automatically be safe. In fact, there are reasons to think advanced AI might be unsafe by default.

Many of the likely risks are scaled-up versions of issues we already see today, which could have serious impacts given the power of AGI

When it comes to risks from advanced AI, the most common portrayal in the media is Terminator-style killer robots. While this is a risk (see the controversial research into lethal autonomous weapons), there exist many other more mundane risks which are effectively scaled-up versions of the same types of issues we already see in less powerful AI systems: subtle computer bugs, unintended consequences, and misuse. Given the power of AGI, even seemingly small issues could have major consequences.

There are also risks beyond those already present in current systems. For example, one possible failure mode might be AI with an obsessive, singular focus on its goal without regard to anything else, such as human ethics. Consider a corporate AI with the goal to “maximize profit” that realizes that instead of making the highest quality product, it should make the most addictive product and addict as many people as possible (à la Big Tobacco). Another failure mode is a world in which humans become so reliant on powerful AI that we are unable to survive without it (e.g. a world in which all food is grown / provided by AI). Analysts in DC are concerned about another risk: that AIs might be incentivized to deceive humans in order to achieve their goals. Humans are obviously capable of deception, so we should expect that a sufficiently advanced AI will be capable of the same. As a final example, an AGI in the wrong hands could enable or further strengthen totalitarian regimes / surveillance states. (Note: China already appears to be taking steps in this direction.)

There is work that can be done to reduce these risks

While there are a lot of risks advanced AI systems could introduce, these are not unsolvable problems. They just need serious effort and research to prevent. Broadly speaking, the path to safe AI consists of both 1) safety-focused technical research to, for example, detect and prevent dangerous AI misbehavior and 2) policy measures to prevent things like misuse and a nuclear-weapons-style arms race. Each of these is likely to be challenging for different reasons. Given we don’t really know when AGI will be possible, now is the time to start this work so that we’re ready when the time does come.

Thanks for this Sean! I think work like this is exceptionally useful as introductory information for busy people who are likely to pattern match "advanced AI" to "terminator" or "beyond time horizon".

One piece of feedback I'll offer: consider whether your last point, "there is work that can be done", could show how current efforts to address narrow AI ethics concerns connect to AGI alignment. This is especially relevant for governance. It could help people understand why it's important to address AGI issues now, rather than waiting until narrow AI ethics is "fixed" (a misperception I've seen a few times).

Weasel word. Perhaps change to "it almost certainly wasn't" and link to this tweet / experiment from Rob Miles?